be distributed within the system to provide additional protections against failures, as well as significant opportunities for scaling performance.

Messages and Batches

The unit of data within Kafka is called a message. If you are approaching Kafka from a database background, you can think of this as similar to a row or a record. As far as Kafka is concerned, a message is simply an array of bytes, so the data it contains has no specific format or meaning to Kafka. A message can have an optional piece of metadata, referred to as a key. The key is also a byte array and, as with the message, has no specific meaning to Kafka. Keys are used when messages are to be written to partitions in a more controlled manner. The simplest such scheme is to generate a consistent hash of the key and select the partition by taking that hash modulo the total number of partitions in the topic. This ensures that messages with the same key are always written to the same partition, as long as the number of partitions does not change. Usage of keys is discussed more thoroughly in Chapter 3.
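
To make the key-to-partition mapping concrete, here is a minimal sketch in Python built around a hypothetical `choose_partition` helper. It hashes the key bytes and takes the result modulo the partition count, so equal keys always land on the same partition. It uses `zlib.crc32` purely as a stand-in hash: Kafka's Java client hashes keys with murmur2, so the partition numbers printed here will not match what a real cluster assigns.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition: hash the key, then take it modulo
    the partition count.

    Assumption: zlib.crc32 is a stand-in hash for illustration only; Kafka's
    Java client uses murmur2, so real partition numbers will differ.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # In Python 3, crc32 returns an unsigned 32-bit int, so the result is non-negative.
    return zlib.crc32(key) % num_partitions


# Keys and values are plain bytes; Kafka attaches no meaning to either.
messages = [(b"user-42", b"login"), (b"user-7", b"click"), (b"user-42", b"logout")]
for key, value in messages:
    print(key, value, "-> partition", choose_partition(key, num_partitions=6))
```

Because the mapping depends on the partition count, adding partitions to a topic changes which partition a given key maps to.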

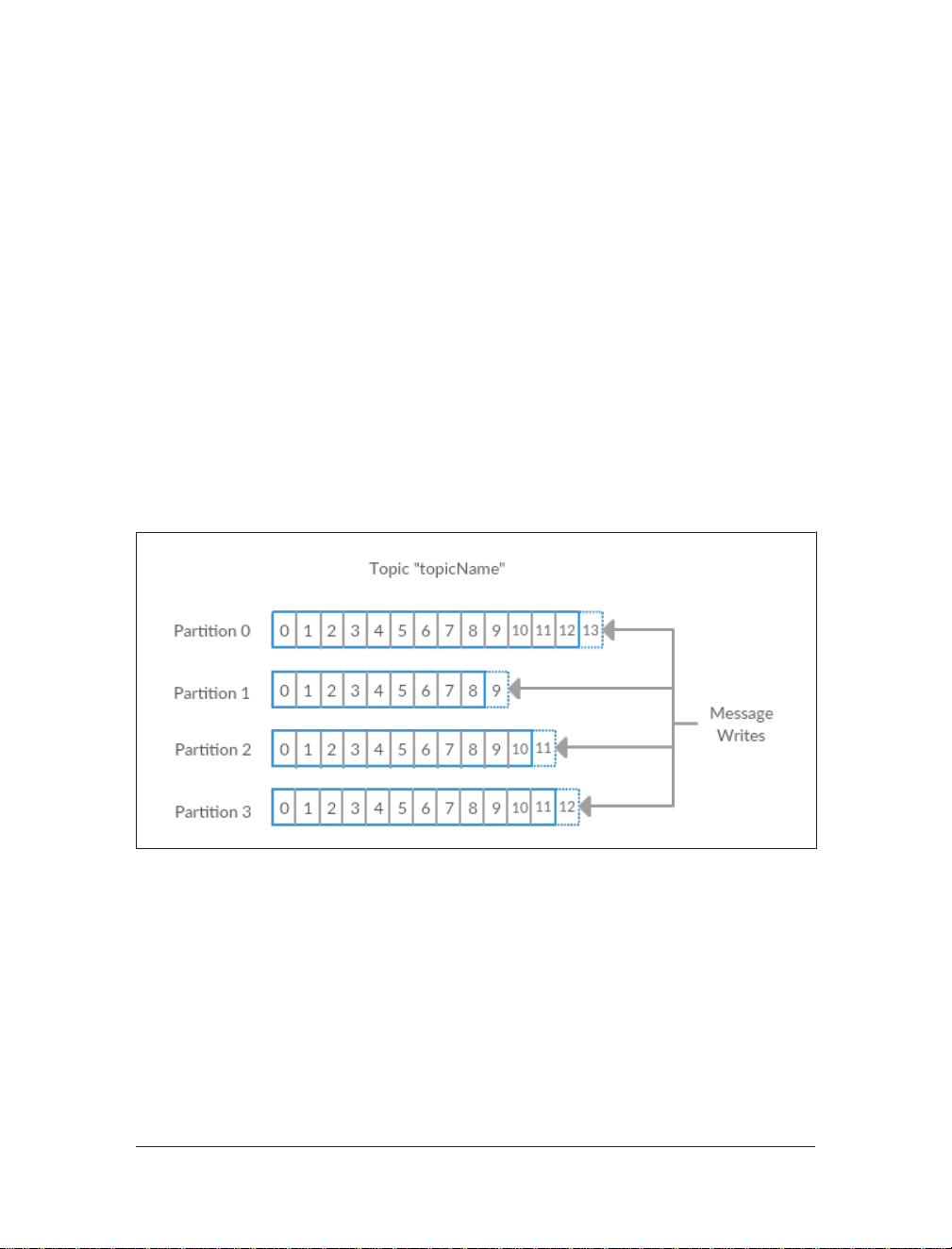

For efficiency, messages are written into Kafka in batches. A batch is just a collection of messages, all of which are being produced to the same topic and partition. A separate round trip across the network for each message would result in excessive overhead, and collecting messages together into a batch reduces this. This, of course, presents a tradeoff between latency and throughput: the larger the batches, the more messages that can be handled per unit of time, but the longer it takes an individual message to propagate. Batches are also typically compressed, which makes data transfer and storage more efficient at the cost of some processing power.
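
The latency and throughput tradeoff can be illustrated with a toy batcher. This is a sketch under stated assumptions, not how the Kafka producer is built: `ToyBatcher` and `compress_batch` are hypothetical names, a batch is flushed once it reaches `max_batch_bytes` or has waited `linger_seconds`, and zlib stands in for Kafka's compression codecs. The clock is injectable so the example runs deterministically.

```python
import time
import zlib
from typing import Callable, Dict, List, Tuple

Batch = Tuple[str, int, List[bytes]]  # (topic, partition, messages)


class ToyBatcher:
    """Hypothetical producer-side buffer illustrating the batch size vs.
    linger time tradeoff. Not Kafka's actual implementation."""

    def __init__(self, max_batch_bytes: int, linger_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_batch_bytes = max_batch_bytes
        self.linger_seconds = linger_seconds
        self.clock = clock
        # One open batch per (topic, partition): a batch never mixes partitions.
        self.buffers: Dict[Tuple[str, int], List[bytes]] = {}
        self.opened_at: Dict[Tuple[str, int], float] = {}

    def add(self, topic: str, partition: int, message: bytes) -> List[Batch]:
        """Buffer one message; return any batches that are now ready to send."""
        tp = (topic, partition)
        if tp not in self.buffers:
            self.buffers[tp] = []
            self.opened_at[tp] = self.clock()
        self.buffers[tp].append(message)
        return self.ready()

    def ready(self) -> List[Batch]:
        """Flush batches that are full or have waited at least linger_seconds."""
        now = self.clock()
        flushed: List[Batch] = []
        for tp in list(self.buffers):
            size = sum(len(m) for m in self.buffers[tp])
            if size >= self.max_batch_bytes or now - self.opened_at[tp] >= self.linger_seconds:
                flushed.append((tp[0], tp[1], self.buffers.pop(tp)))
                del self.opened_at[tp]
        return flushed


def compress_batch(messages: List[bytes]) -> bytes:
    """Compress a whole batch in one pass (zlib stands in for Kafka's codecs)."""
    # Length-prefix each message so the batch could be split apart after decompression.
    payload = b"".join(len(m).to_bytes(4, "big") + m for m in messages)
    return zlib.compress(payload)


# Usage with a fake clock so the timing is deterministic.
fake_now = [0.0]
batcher = ToyBatcher(max_batch_bytes=64, linger_seconds=0.005, clock=lambda: fake_now[0])
for i in range(3):
    batcher.add("events", 0, b"event-%d" % i)  # 21 bytes total: too small to flush yet
fake_now[0] = 0.01                               # the linger time has now elapsed
topic, partition, batch = batcher.ready()[0]

sample = [b'{"user": "user-42", "action": "click"}'] * 100
print(len(b"".join(sample)), "bytes raw ->", len(compress_batch(sample)), "bytes compressed")
```

Raising either threshold lets more messages share one request (and one compression pass), at the price of each message waiting longer before it is sent. The Java producer exposes comparable settings as `batch.size`, `linger.ms`, and `compression.type`.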

Schemas

While messages are opaque byte arrays to Kafka itself, it is recommended that additional structure be imposed on the message content so that it can be easily understood. There are many options available for message schema, depending on your application’s individual needs. Simplistic systems, such as JavaScript Object Notation (JSON) and Extensible Markup Language (XML), are easy to use and human readable. However, they lack features such as robust type handling and compatibility between schema versions. Many Kafka developers favor the use of Apache Avro, a serialization framework originally developed for Hadoop. Avro provides a compact serialization format; schemas that are separate from the message payloads and that do not require generated code when they change; and strong data typing and schema evolution, with both backward and forward compatibility.
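
Avro's schema evolution rests on resolving the schema the data was written with against the schema the reader expects. The sketch below imitates two of Avro's resolution rules on plain Python dicts, without the Avro library: fields the reader does not know about are ignored, and fields the writer did not supply take the reader's default. The list-of-fields `Schema` format and the `resolve` function are hypothetical simplifications; real Avro schemas are JSON documents, and real Avro decodes binary data using the writer's schema.

```python
from typing import Any, Dict, List

# Hypothetical, simplified schema: a list of fields, each with a name, a Python
# type, and an optional default. Real Avro schemas have much richer types.
Schema = List[Dict[str, Any]]

_MISSING = object()


def resolve(record: Dict[str, Any], reader_schema: Schema) -> Dict[str, Any]:
    """Read a record written with an older or newer schema using reader_schema."""
    result: Dict[str, Any] = {}
    for field in reader_schema:
        name = field["name"]
        # Writer-supplied value wins; otherwise fall back to the reader's default.
        value = record.get(name, field.get("default", _MISSING))
        if value is _MISSING:
            raise ValueError(f"field {name!r} is missing and has no default")
        if not isinstance(value, field["type"]):
            raise TypeError(f"field {name!r} expected {field['type'].__name__}")
        result[name] = value
    # Fields present in the record but absent from reader_schema are dropped.
    return result


v1: Schema = [{"name": "user", "type": str}, {"name": "action", "type": str}]
v2: Schema = v1 + [{"name": "region", "type": str, "default": "unknown"}]

old_record = {"user": "user-42", "action": "click"}                 # written with v1
new_record = {"user": "user-7", "action": "login", "region": "eu"}  # written with v2

print(resolve(old_record, v2))  # {'user': 'user-42', 'action': 'click', 'region': 'unknown'}
print(resolve(new_record, v1))  # {'user': 'user-7', 'action': 'login'}
```

The first call shows backward compatibility (a reader on the new schema handles old data), and the second shows forward compatibility (a reader still on the old schema handles new data).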

A consistent data format is important in Kafka, as it allows writing and reading messages to be decoupled. When these tasks are tightly coupled, applications that subscribe to messages must be updated to handle the new data format, in parallel with the old format. Only then can the applications that publish the messages be updated to use the new format. New applications that wish to use data must be coupled
