2.2 Object Modeling and Localization

Many existing methods for object detection represent objects

by appearance features [5], [6], [30], [31], such as HOG [5].

Lai et al. [6] extended the HOG features to RGB-D images.

Such features are often weakened by low resolution, heavy

occlusion, and large appearance variation. To solve such

problems, contextual information was introduced into object

modeling. The methods in [32] and [33] incorporated rela-

tions with other objects to improve detection or recognition.

Zhao and Zhu [9] defined objects by integrating function,

geometry, and appearance information. Gupta et al. [10]

extended object localization and recognition to human-cen-

tric scene understanding by inferring human and 3D scene

interactions. These methods aim at object detection or recog-

nition in still images.

Some studies aim at recognizing and localizing objects in

videos [15], [34]. Gupta et al. [34] labeled objects according

to human actions in videos. The method in [15] tracked

objects in each video frame. In comparison, our method

localizes objects in both 3D point clouds and 2D images,

and does not need accurate initialization of object locations.

2.3 Human-Object Interaction and Affordance

The concept of affordance was originally studied by Gibson

[7] and further developed by many studies to describe the

relations between organisms (humans) and environments

(objects) [35], [36], [37], [38], [39]. Many researchers have

recently applied human-object relations to event, object, and

scene modeling [10], [15], [34], [40], [41], [42], [43], [44], [45],

[46], [47], [48], [49], [50], [51]. Gupta et al. [34] combined spa-

tial and functional constraints between humans and objects

to recognize actions and objects. Prest et al. [44] inferred spa-

tial information of objects by modeling 2D geometric rela-

tions between human bodies and objects. Yao and Fei-

Fei [46] detected objects by modeling relations between

actions, objects, and poses in still images. These methods

define the human-object interactions in 2D images. Such con-

textual cues are often weakened by viewpoint change and

occlusion. Koppula et al. [15] modeled relations between

human activities and object affordance, and their changes

over time. This method requires videos to be pre-segmented,

and the object detection is independent of human actions.

Our model incorporates event recognition, segmentation,

and object localization into a unified framework, under

which these tasks mutually facilitate each other.

Human-object interactions are also used in robotics [52],

[53], [54]. Aksoy et al. [52] recognized manipulations by

learning object-action semantics. Wörgötter et al. [53] modeled

manipulation actions for robot task execution. This

stream of research demonstrates the significance of human-

object interactions from the perspective of robot learning

and task execution.

2.4 Action Structure Learning

Many existing approaches mine action structures by

explicitly modeling the latent structures [2], [4], [25],

[27], [55]. HMM [2] learned the hidden states and transition

probabilities with maximum likelihood estimation.

Hidden conditional random field (HCRF) [55] learned

the hidden structures of actions in a discriminative way.

These methods define the temporal structures on video

frames or fixed-size video segments, which cannot effectively

characterize or utilize the duration information of

hidden structures.

Yao and Fei-Fei [46] defined atomic poses in still images

and learned them through clustering human poses.

Zhou et al. [4] segmented an action sequence into motion

primitives with hierarchical cluster analysis. These cluster-

ing methods are under the framework similar to the expec-

tation-maximization (EM) clustering [12]. Conventional EM

does not consider the temporal order of sequence frames,

which may produce undesirable clustering results. For

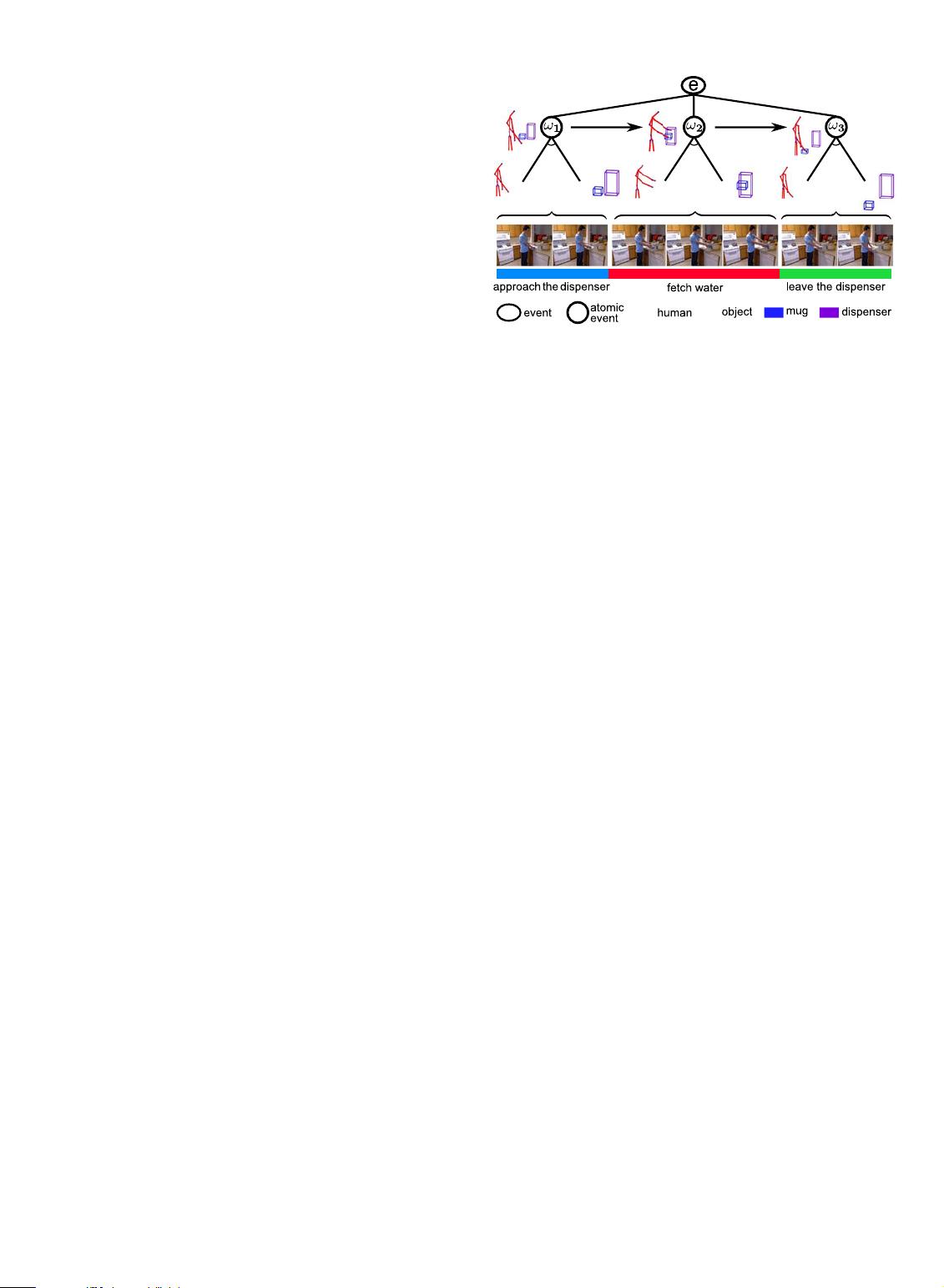

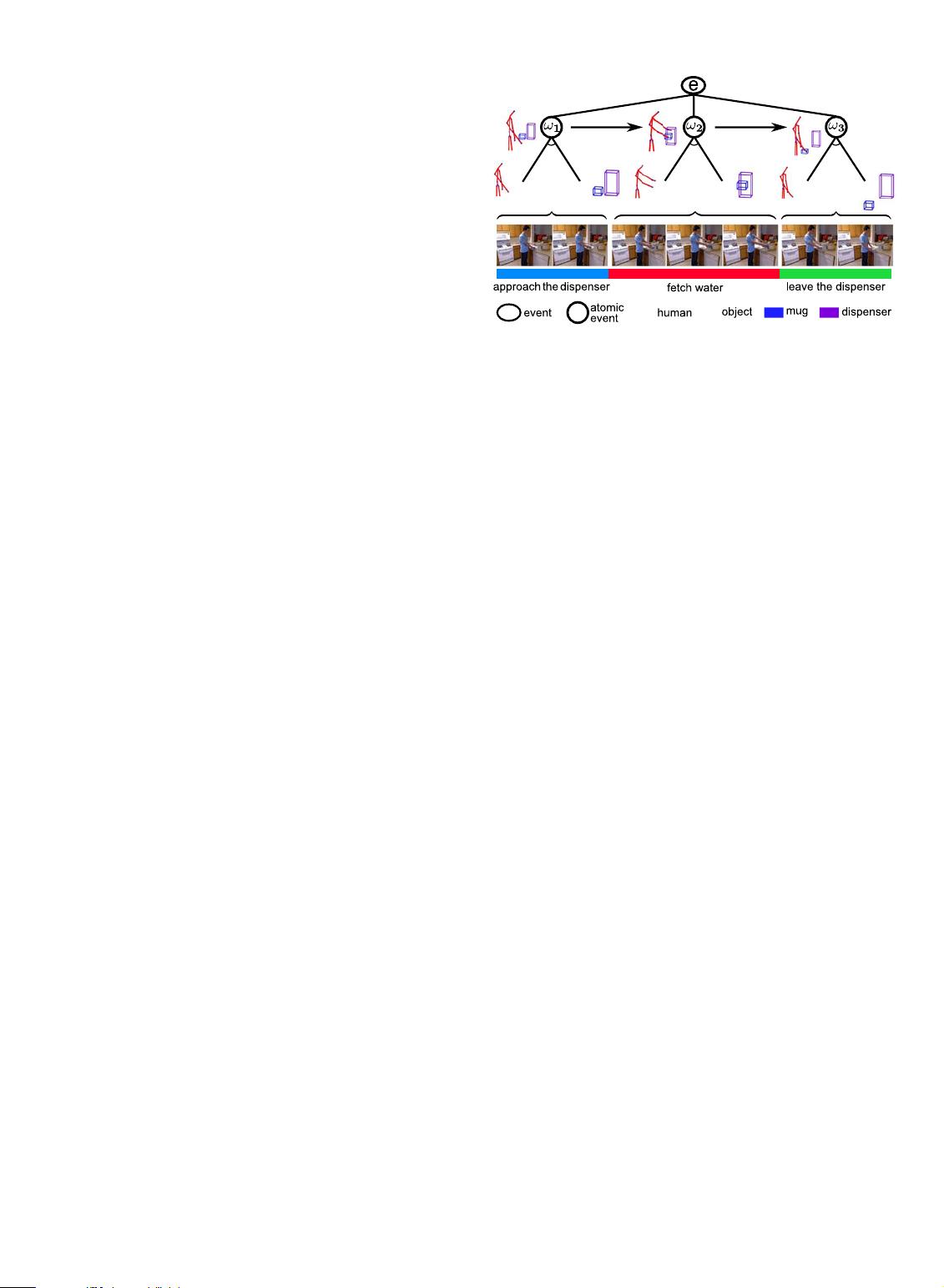

example, as is shown in Fig. 3, the poses of approach the dis-

penser and leave the dispenser are very similar. Without con-

sidering the temporal order, these two poses may be

clustered into the same cluster. Though the method in [4]

introduced the temporal order into clustering, it did not

consider the mutual constraints among the sequences of the

same category, but rather carried out the frame clustering

for each independent sequence.
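The effect of ignoring temporal order can be illustrated with a small toy sketch. It is not the method of this paper, nor that of [4]. It uses plain k-means (a hard-assignment relative of EM clustering) on a hypothetical one-dimensional pose feature, and then repeats the clustering with a scaled frame index appended as a second feature. The pose values, the time weight of 2.0, and the initial centroid indices are all assumptions chosen for illustration.

```python
def kmeans(points, init_idx, iters=20):
    """Plain Lloyd's k-means on tuples of floats.

    Labels depend only on feature values, so two frames with
    identical features always receive the same label.
    """
    centroids = [points[i] for i in init_idx]
    labels = []
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [
            min(range(len(centroids)),
                key=lambda k: sum((a - b) ** 2
                                  for a, b in zip(p, centroids[k])))
            for p in points
        ]
        # Update step: mean of the members of each cluster.
        for k in range(len(centroids)):
            members = [p for p, l in zip(points, labels) if l == k]
            if members:  # keep the old centroid if a cluster goes empty
                centroids[k] = tuple(sum(c) / len(members)
                                     for c in zip(*members))
    return labels


# Hypothetical scalar pose feature per frame (illustrative values only).
pose = [1.0, 1.1, 0.9, 1.0,   # approach the dispenser
        3.0, 3.1, 2.9, 3.0,   # fetch water
        1.0, 0.9, 1.1, 1.0]   # leave the dispenser
n = len(pose)
init = [2, 6, 10]             # assumed initial centroids: one frame per third

# Order-agnostic clustering: pose feature only.
labels_pose = kmeans([(p,) for p in pose], init)

# Order-aware variant: append the frame index, scaled by an assumed weight.
time_weight = 2.0
labels_time = kmeans(
    [(p, time_weight * t / (n - 1)) for t, p in enumerate(pose)], init)

print("pose only  :", labels_pose)
print("pose + time:", labels_time)
```

With the pose feature alone, frame 0 (approach) and frame 8 (leave) have the same feature value and therefore end up in the same cluster. Once the scaled frame index is included, the two groups of frames separate. Appending time as a feature is only one simple way to inject temporal order, and it still treats each sequence independently.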

2.5 Our Contributions

In comparison with previous work, this paper makes

four contributions.

1. It presents a 4D human-object interaction model as a

stochastic hierarchical spatial-temporal graph, which

represents the 3D human-object relations and the

temporal relations between atomic events in RGB-D

videos.

2. It develops a unified framework for joint inference of

event recognition, sequence segmentation, and object

localization.

3. It proposes an unsupervised algorithm to learn the

latent temporal structures of events and the model

parameters from sequence samples.

4. It tests the model on three challenging datasets, and

the performance demonstrates the strength of the

model.

3 4D HUMAN-OBJECT INTERACTION MODEL

As Fig. 3 illustrates, the 4DHOI model is a hierarchical

graph for an event. On the time axis, an event is decomposed

into several ordered atomic events. For example,

the event fetch water from dispenser is decomposed into

Fig. 3. A hierarchical graph of the 4D human-object interactions for an

example event fetch water from dispenser.

WEI ET AL.: MODELING 4D HUMAN-OBJECT INTERACTIONS FOR JOINT EVENT SEGMENTATION, RECOGNITION, AND OBJECT... 1167