Micron Technology, Inc., reserves the right to change products or specifications without notice.

MT9D111__2_REV5.fm - Rev. B 2/06 EN

©2004 Micron Technology, Inc. All rights reserved.

MT9D111 - 1/3.2-Inch 2-Megapixel SOC Digital Image Sensor

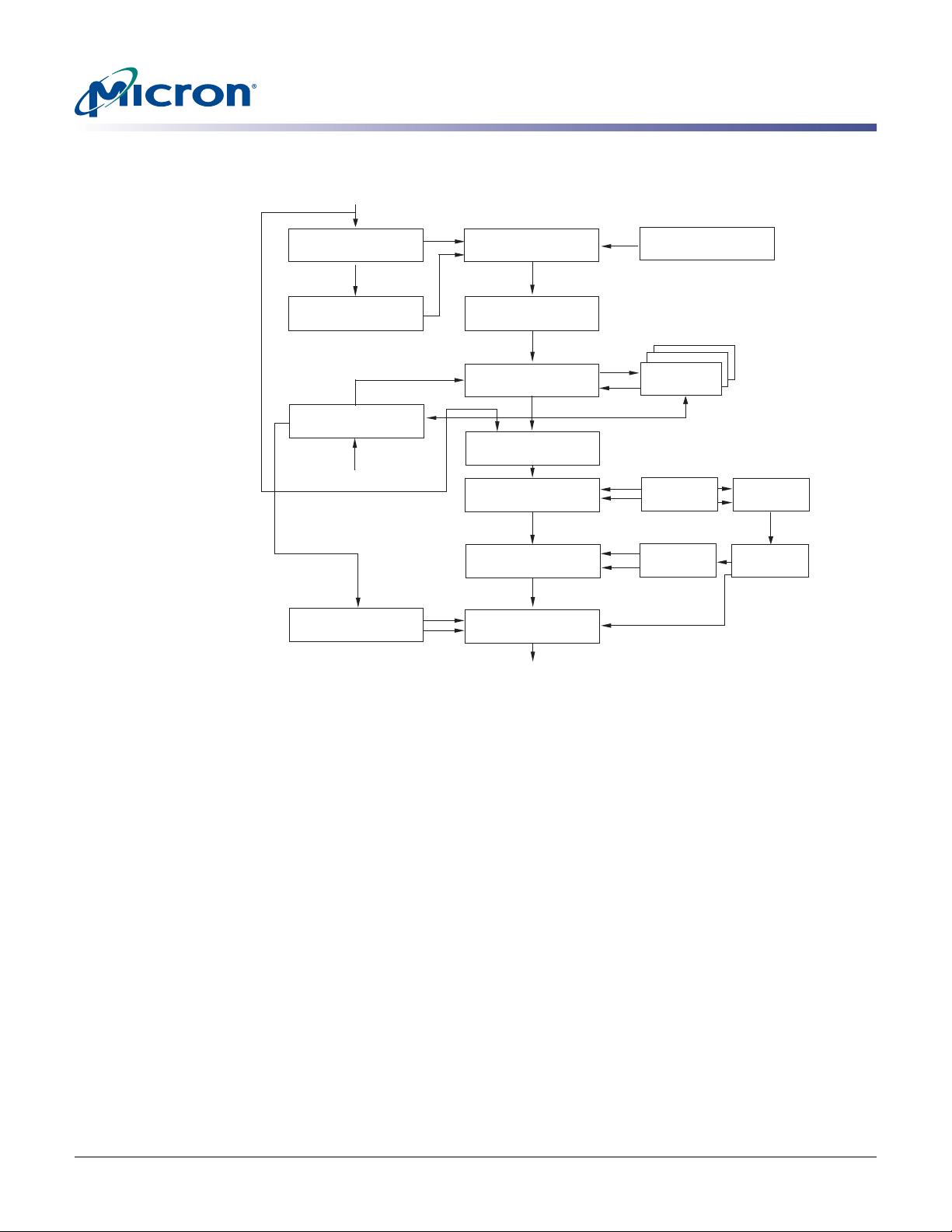

Architecture Overview

Micron Confidential and Proprietary

Color Correction and Aperture Correction

To achieve good color fidelity of IFP output, interpolated RGB values of all pixels are subjected to color correction. The IFP multiplies each vector of three pixel colors by a 3 x 3 color correction matrix. The three components of the resulting color vector are all sums of three 10-bit numbers. Since such sums can have up to 12 significant bits, the bit width of the image data stream is widened to 12 bits per color (36 bits per pixel). The color correction matrix can be either programmed by the user or automatically selected by the auto white balance (AWB) algorithm implemented in the IFP. Color correction should ideally produce output colors that are independent of the spectral sensitivity and color cross-talk characteristics of the image sensor. The optimal values of color correction matrix elements depend on those sensor characteristics and on the spectrum of light incident on the sensor.
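
The matrix step can be illustrated with a minimal Python sketch. The function name `apply_ccm`, the floating-point coefficients, the rounding, and the clamping to 0..4095 are all assumptions made for illustration; the text above does not specify the coefficient format or overflow handling used by the IFP.

```python
def apply_ccm(rgb10, ccm):
    """Multiply one 10-bit RGB triple by a 3 x 3 matrix and return 12-bit values."""
    out = []
    for row in ccm:
        # Each output component is a sum of three weighted 10-bit inputs.
        acc = sum(coef * comp for coef, comp in zip(row, rgb10))
        # Assumption: round to the nearest integer and clamp to 12 bits.
        out.append(max(0, min(4095, int(round(acc)))))
    return tuple(out)


# Hypothetical matrix: every row sums to 1.0, so gray input stays gray.
EXAMPLE_CCM = [
    [1.50, -0.25, -0.25],
    [-0.25, 1.50, -0.25],
    [-0.25, -0.25, 1.50],
]

# Widest plain sum of three 10-bit values: 3 * 1023 = 3069, which needs 12 bits.
ALL_ONES = [[1, 1, 1]] * 3
```

With all coefficients equal to 1, a full-scale input of (1023, 1023, 1023) gives 3069 per channel, which exceeds the 11-bit maximum of 2047 and fits in 12 bits, consistent with the widening described above.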

To increase image sharpness, a programmable aperture correction is applied to color-corrected image data, equally to each of the 12-bit R, G, and B color channels.

Gamma Correction

Like the aperture correction, gamma correction is applied equally to each of the 12-bit R, G, and B color channels. The gamma correction curve is implemented as a piecewise linear function with 19 knee points, taking 12-bit arguments and mapping them to 8-bit output. The abscissas of the knee points are fixed at 0, 64, 128, 256, 512, 768, 1024, 1280, 1536, 1792, 2048, 2304, 2560, 2816, 3072, 3328, 3584, 3840, and 4095. The 8-bit ordinates are programmable via IFP registers or public variables of the mode driver (ID = 7). The driver variables include two arrays of knee point ordinates defining two separate gamma curves for sensor operation contexts A and B.
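
The piecewise linear mapping can be sketched in Python as follows. The knee abscissas are the ones listed above; the function names, the integer rounding rule, and the power-law ordinates produced by `example_ordinates` are hypothetical and stand in for whatever values are actually programmed into the registers or driver variables.

```python
# Fixed knee-point abscissas from the text (19 values, 12-bit domain).
KNEE_X = [0, 64, 128, 256, 512, 768, 1024, 1280, 1536, 1792,
          2048, 2304, 2560, 2816, 3072, 3328, 3584, 3840, 4095]


def example_ordinates(gamma=0.45):
    """Hypothetical 8-bit ordinates sampled from a power-law curve."""
    return [int(round(255 * (x / 4095) ** gamma)) for x in KNEE_X]


def gamma_correct(value12, knee_y):
    """Map a 12-bit value to 8 bits by linear interpolation between knees."""
    if len(knee_y) != len(KNEE_X):
        raise ValueError("expected one ordinate per knee point")
    v = max(0, min(4095, value12))
    for i in range(1, len(KNEE_X)):
        if v <= KNEE_X[i]:
            x0, x1 = KNEE_X[i - 1], KNEE_X[i]
            y0, y1 = knee_y[i - 1], knee_y[i]
            span = x1 - x0
            # Assumption: integer interpolation rounded to the nearest code.
            return y0 + ((y1 - y0) * (v - x0) + span // 2) // span
    return knee_y[-1]
```

An input that falls exactly on a knee abscissa returns the corresponding ordinate unchanged, so a curve for context A or B is fully described by its 19 ordinates.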

YUV Processing

After the gamma correction, the image data stream undergoes RGB to YUV conversion and optionally further corrective processing. The first step in this processing is removal of highlight coloration, also referred to as “color kill.” It affects only pixels whose brightness exceeds a certain pre-programmed threshold. The U and V values of those pixels are attenuated in proportion to the difference between their brightness and the threshold. The second optional processing step is noise suppression by one-dimensional low-pass filtering of Y and/or UV signals. A 3- or 5-tap filter can be selected for each signal.
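
Both optional steps can be sketched in Python under explicit assumptions. The text does not give the attenuation law, the filter coefficients, or the edge handling, so the linear gain ramp in `color_kill`, the binomial kernels in `TAPS`, and the edge replication in `lowpass_row` are illustrative choices only. U and V are treated here as signed offsets from neutral.

```python
def color_kill(y, u, v, threshold=200, y_max=255):
    """Attenuate chroma of a pixel whose brightness exceeds the threshold.

    Assumes threshold < y_max and a gain that falls linearly from 1 at the
    threshold to 0 at y_max (a hypothetical attenuation law).
    """
    if y <= threshold:
        return u, v
    gain = max(0.0, 1.0 - (y - threshold) / (y_max - threshold))
    return u * gain, v * gain


# Hypothetical binomial kernels for the 3-tap and 5-tap options.
TAPS = {3: (1, 2, 1), 5: (1, 4, 6, 4, 1)}


def lowpass_row(samples, taps=3):
    """Filter one row of Y, U, or V samples with a 3- or 5-tap kernel."""
    kernel = TAPS[taps]
    half = taps // 2
    norm = sum(kernel)
    n = len(samples)
    out = []
    for i in range(n):
        acc = 0
        for k, weight in enumerate(kernel):
            # Assumption: replicate the first and last sample at the edges.
            j = min(max(i + k - half, 0), n - 1)
            acc += weight * samples[j]
        out.append(acc / norm)
    return out
```

Because the filter is one-dimensional, it is applied along a row only; a pixel at or below the threshold passes through `color_kill` unchanged.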

Image Cropping and Decimation

To ensure that the size of images output by the MT9D111 can be tailored to the needs of all users, the IFP includes a decimator module. When enabled, this module performs “decimation” of incoming images, i.e., shrinks them to arbitrarily selected width and height without reducing the field of view and without discarding any pixel values. The latter point merits underscoring, because the terms “decimator” and “image decimation” suggest image size reduction by deleting columns and/or rows at regular intervals. Despite the terminology, no such deletions take place in the decimator module. Instead, it performs “pixel binning”, i.e., divides each input image into rectangular bins corresponding to individual pixels of the desired output image, averages pixel values in these bins and assembles the output image from the bin averages. Pixels lying on bin boundaries contribute to more than one bin average: their values are added to bin-wide sums of pixel values with fractional weights. The entire procedure preserves all image information that can be included in the downsized output image and filters out high-frequency features that could cause aliasing.
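
The binning with fractional weights can be illustrated by a short Python sketch. It is an area-averaging resize written for clarity, not a description of the hardware: the function names `bin_1d` and `bin_2d`, the floating-point arithmetic, and the separable row-then-column order are assumptions.

```python
def bin_1d(samples, out_len):
    """Average a row into out_len equal-width bins with fractional weights."""
    n = len(samples)
    if not 0 < out_len <= n:
        raise ValueError("out_len must be between 1 and len(samples)")
    width = n / out_len  # bin width in input pixels, may be fractional
    out = []
    for b in range(out_len):
        start, end = b * width, (b + 1) * width
        total = 0.0
        i = int(start)
        while i < n and i < end:
            # Weight is the fraction of pixel i that lies inside this bin.
            overlap = min(end, i + 1) - max(start, i)
            total += overlap * samples[i]
            i += 1
        out.append(total / width)
    return out


def bin_2d(image, out_w, out_h):
    """Shrink a 2-D image (list of rows) by binning rows, then columns."""
    rows = [bin_1d(row, out_w) for row in image]
    cols = [bin_1d(list(col), out_h) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]
```

For example, shrinking the row 1, 2, 3, 4 to three pixels uses bins 4/3 pixels wide: the second input pixel contributes one third of its value to the first bin and two thirds to the second, so no input value is discarded.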

The image decimation in the IFP can be preceded by image cropping and/or image decimation in the sensor core. Image cropping takes place when the sensor core is programmed to output pixel values from a rectangular portion of its pixel array - a window -