Physical memory

0

max

User

0

2

47

Kernel

−2

47

−1

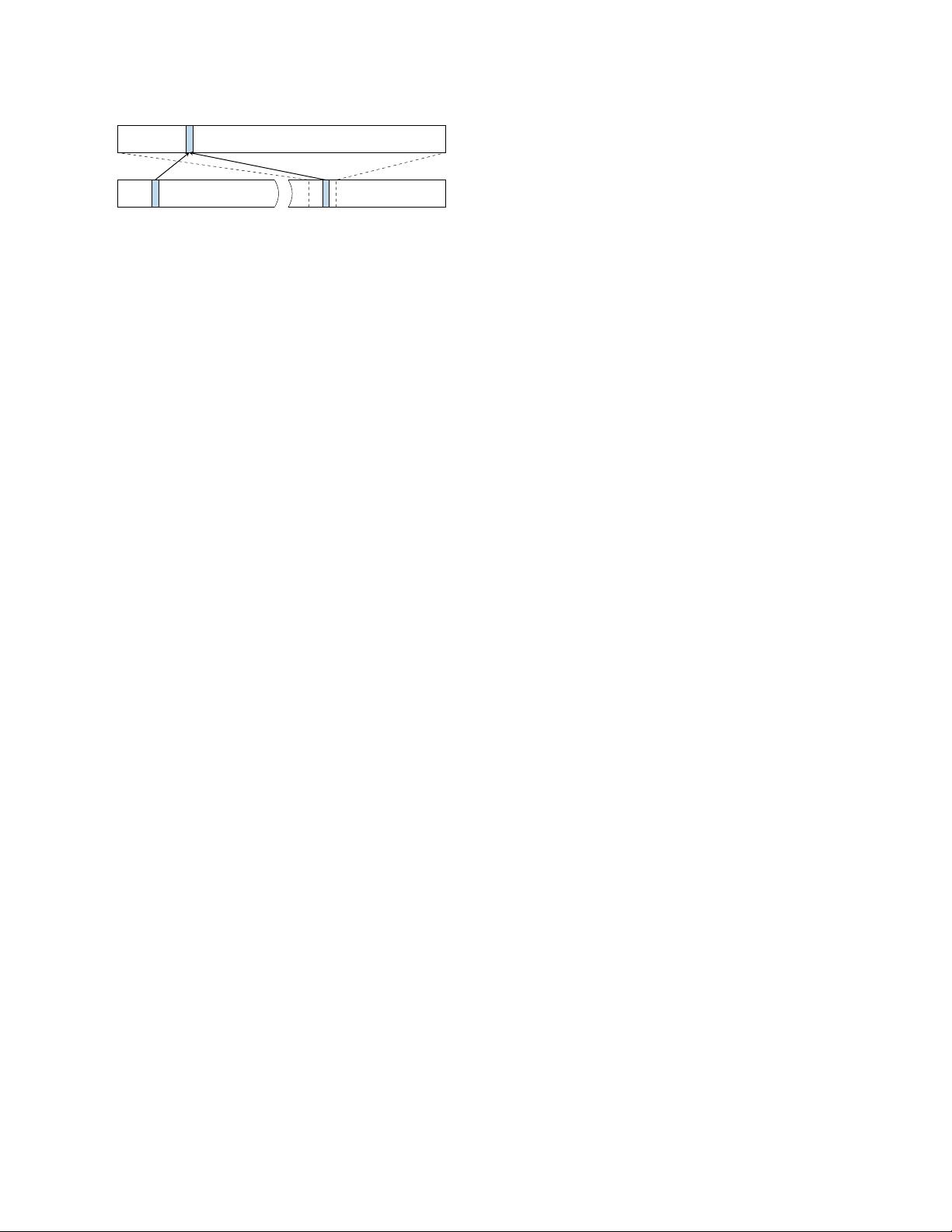

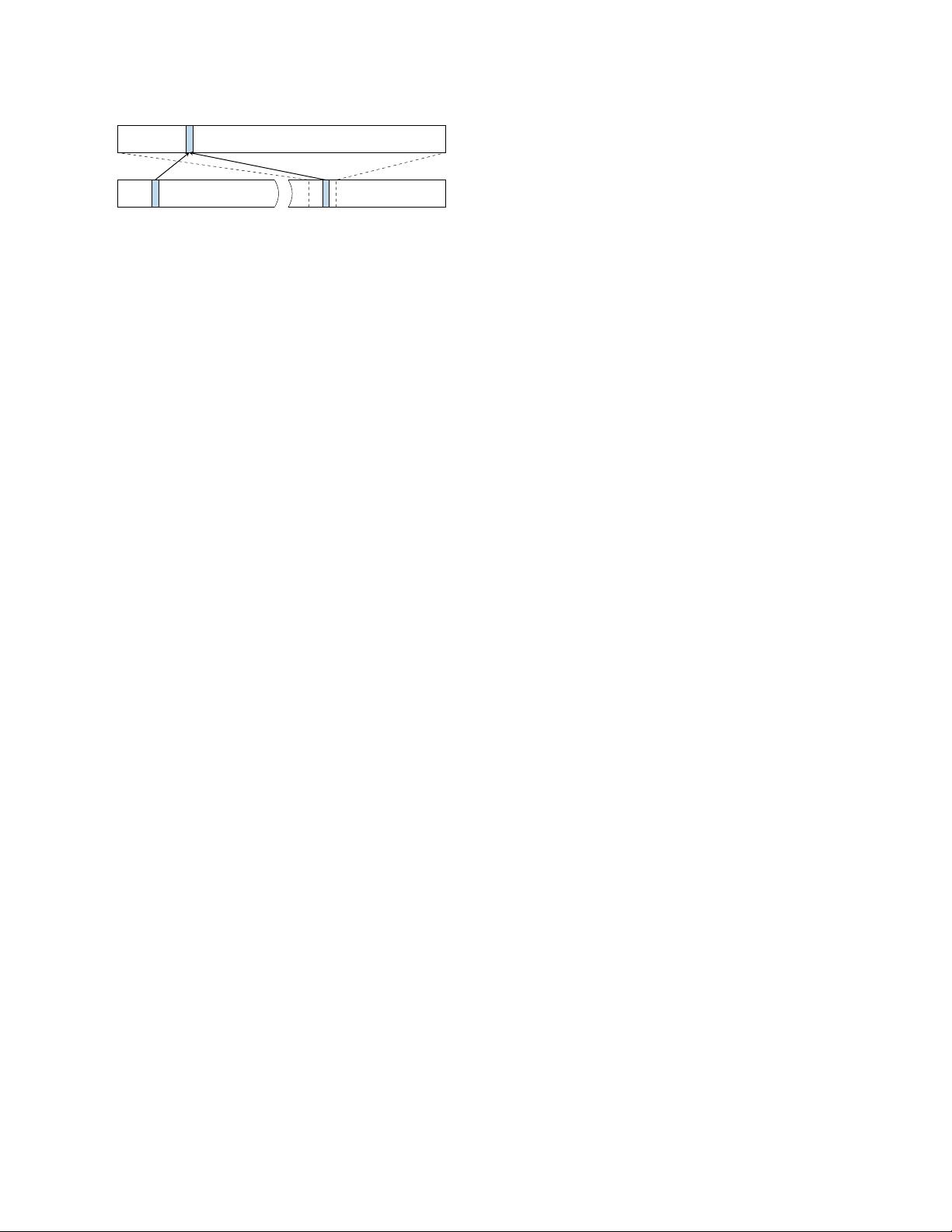

Figure 2: The physical memory is directly mapped in the

kernel at a certain offset. A physical address (blue) which

is mapped accessible to the user space is also mapped in

the kernel space through the direct mapping.

are used to enforce privilege checks, such as readable, writable, executable, and user-accessible. The currently used translation table is held in a special CPU register. On each context switch, the operating system updates this register with the address of the next process’ translation table in order to implement per-process virtual address spaces. Because of that, each process can only reference data that belongs to its own virtual address space. Each virtual address space is itself split into a user part and a kernel part. While the user address space can be accessed by the running application, the kernel address space can only be accessed if the CPU is running in privileged mode. The operating system enforces this by disabling the user-accessible property of the corresponding translation tables. The kernel address space not only has memory mapped for the kernel’s own use; the kernel also needs to perform operations on user pages, e.g., filling them with data. Consequently, the entire physical memory is typically mapped in the kernel. On Linux and OS X, this is done via a direct-physical map, i.e., the entire physical memory is directly mapped to a predefined virtual address (cf. Figure 2).

Instead of a direct-physical map, Windows maintains multiple so-called paged pools, non-paged pools, and the system cache. These pools are virtual memory regions in the kernel address space that map physical pages to virtual addresses; the pages are either required to remain in memory (non-paged pool) or can be removed from memory because a copy is already stored on disk (paged pool). The system cache further contains mappings of all file-backed pages. Combined, these memory pools typically map a large fraction of the physical memory into the kernel address space of every process.

The exploitation of memory corruption bugs often requires knowledge of the addresses of specific data. To impede such attacks, address space layout randomization (ASLR) has been introduced, as well as non-executable stacks and stack canaries. To protect the kernel, kernel ASLR (KASLR) randomizes the offsets where drivers are located on every boot, making attacks harder because they now require guessing the location of kernel data structures. However, side-channel attacks allow an attacker to detect the exact location of kernel data structures [21, 29, 37] or to derandomize ASLR in JavaScript [16]. A combination of a software bug and the knowledge of these addresses can lead to privileged code execution.

2.3 Cache Attacks

In order to speed up memory accesses and address translation, the CPU contains small memory buffers, called caches, that store frequently used data. CPU caches hide slow memory access latencies by buffering frequently used data in smaller and faster internal memory. Modern CPUs have multiple levels of caches that are either private to each core or shared among cores. Address space translation tables are also stored in memory and are, thus, also cached in the regular caches.

Cache side-channel attacks exploit timing differences that are introduced by the caches. Different cache attack techniques have been proposed and demonstrated in the past, including Evict+Time [55], Prime+Probe [55, 56], and Flush+Reload [63]. Flush+Reload attacks work at the granularity of a single cache line. These attacks exploit the shared, inclusive last-level cache. An attacker frequently flushes a targeted memory location using the clflush instruction. By measuring the time it takes to reload the data, the attacker determines whether data was loaded into the cache by another process in the meantime. The Flush+Reload attack has been used for attacks on various computations, e.g., cryptographic algorithms [63, 36, 4], web server function calls [65], user input [23, 47, 58], and kernel addressing information [21].

A special use case of a side-channel attack is a covert channel. Here the attacker controls both the part that induces the side effect and the part that measures the side effect. This can be used to leak information from one security domain to another while bypassing any boundaries existing on the architectural level or above. Both Prime+Probe and Flush+Reload have been used in high-performance covert channels [48, 52, 22].

3 A Toy Example

In this section, we start with a toy example, i.e., a simple code snippet, to illustrate that out-of-order execution can change the microarchitectural state in a way that leaks information. Despite its simplicity, it serves as a basis for Section 4 and Section 5, where we show how this change in state can be exploited for an attack.

Listing 1 shows a simple code snippet first raising an (unhandled) exception and then accessing an array. The property of an exception is that the control flow does not continue with the code after the exception, but jumps to an exception handler in the operating system. Regardless