Detector

Text Encoder

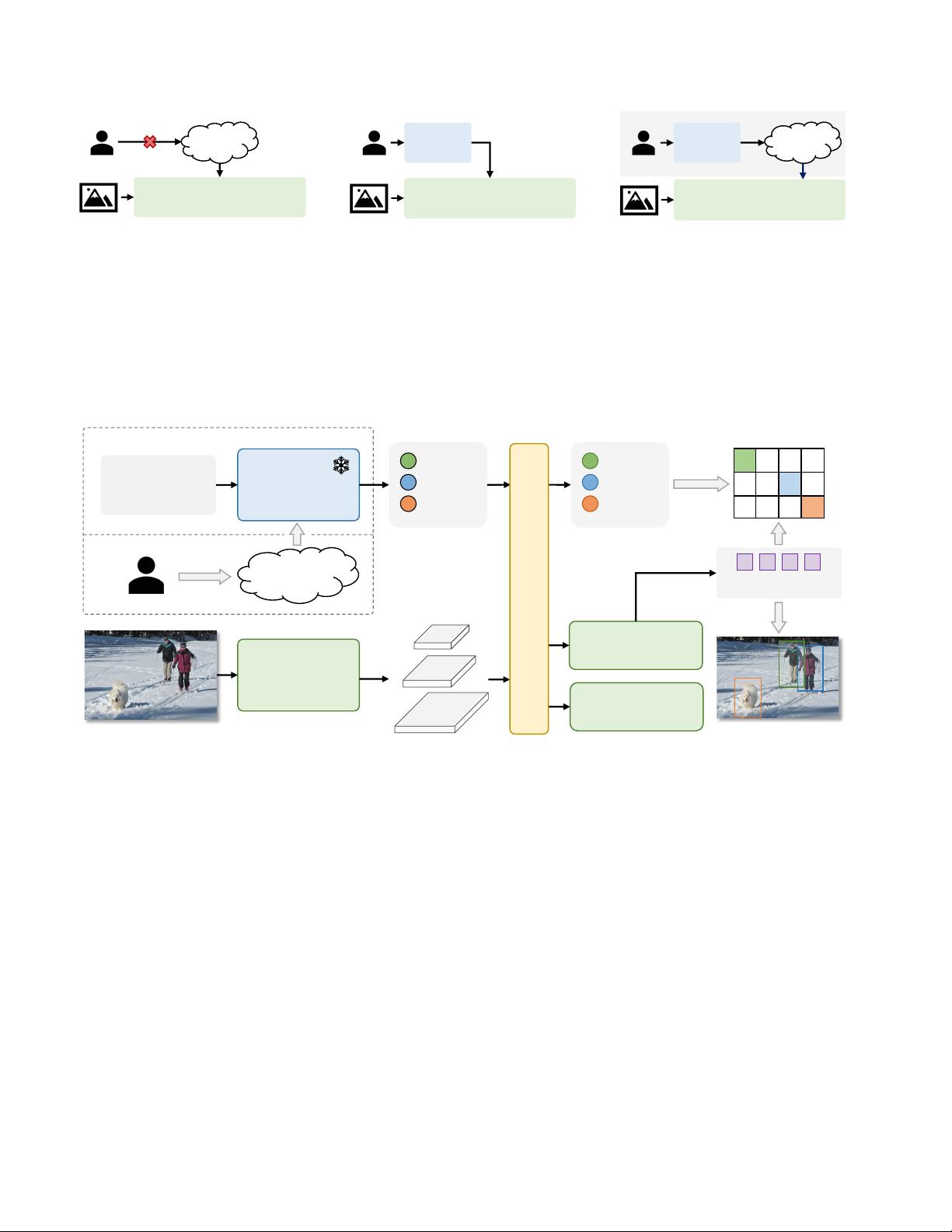

(a) Traditional Object Detector (b) Preivous Open-Vocabulary Detector (c) YOLO-World

Object Detector

Fixed

Vocabulary

Text

Encoder

Large Detector

Text

Encoder

Lightweight Detector

Offline

Vocabulary

User User

Online

Vocabulary

Re-parameterize

User

Train-only

Figure 2. Comparison with Detection Paradigms. (a) Traditional Object Detector: These object detectors can only detect objects within the fixed vocabulary pre-defined by the training datasets, e.g., the 80 categories of the COCO dataset [26]. The fixed vocabulary limits their extension to open scenes. (b) Previous Open-Vocabulary Detectors: Previous methods tend to develop large and heavy detectors for open-vocabulary detection, which intuitively have strong capacity. In addition, these detectors encode images and texts simultaneously as input for prediction, which is time-consuming for practical applications. (c) YOLO-World: We demonstrate the strong open-vocabulary performance of lightweight detectors, e.g., YOLO detectors [20, 42], which is of great significance for real-world applications. Rather than using an online vocabulary, we present a prompt-then-detect paradigm for efficient inference, in which the user generates a series of prompts according to their needs and the prompts are encoded into an offline vocabulary. The offline vocabulary can then be re-parameterized as model weights for deployment and further acceleration.
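The prompt-then-detect flow of panel (c) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not YOLO-World's implementation: `toy_text_encoder` is a hypothetical hash-based stand-in for a real text encoder, `build_offline_vocabulary` and `classify` are illustrative names, and re-parameterization is modeled in its simplest assumed form, with the cached prompt embeddings serving as the frozen weights of a linear classification layer.

```python
import hashlib
import math
from typing import List, Sequence

EMBED_DIM = 8  # toy embedding size; real text encoders use far larger dimensions


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (leave an all-zero vector unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def toy_text_encoder(prompt: str) -> List[float]:
    """Hypothetical stand-in for a real text encoder (assumption).

    Builds a deterministic pseudo-embedding from a SHA-256 digest so the sketch
    runs without model weights. The vectors carry no semantic meaning.
    """
    digest = hashlib.sha256(prompt.lower().encode("utf-8")).digest()
    return l2_normalize([b / 255.0 - 0.5 for b in digest[:EMBED_DIM]])


def build_offline_vocabulary(prompts: Sequence[str]) -> List[List[float]]:
    """Encode the user's prompts once, ahead of deployment.

    The resulting K x D matrix is frozen and used as the weight of a plain
    linear classification layer (assumed form of re-parameterization), so no
    text encoder runs at inference time.
    """
    return [toy_text_encoder(p) for p in prompts]


def classify(object_embedding: Sequence[float],
             vocab_weights: Sequence[Sequence[float]]) -> List[float]:
    """Score one object embedding against every vocabulary row (matrix-vector product)."""
    e = l2_normalize(object_embedding)
    return [sum(w * x for w, x in zip(row, e)) for row in vocab_weights]


# Prompt-then-detect: prompts are encoded once, then reused for every image.
prompts = ["man", "woman", "dog"]
weights = build_offline_vocabulary(prompts)

# Pretend the detector produced an object embedding identical to the "dog" prompt.
scores = classify(toy_text_encoder("dog"), weights)
best = prompts[max(range(len(scores)), key=scores.__getitem__)]
print(best)  # dog
```

Because the vocabulary matrix is computed once, changing the user's categories only means re-running `build_offline_vocabulary`; per-image inference in this sketch never calls the text encoder.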

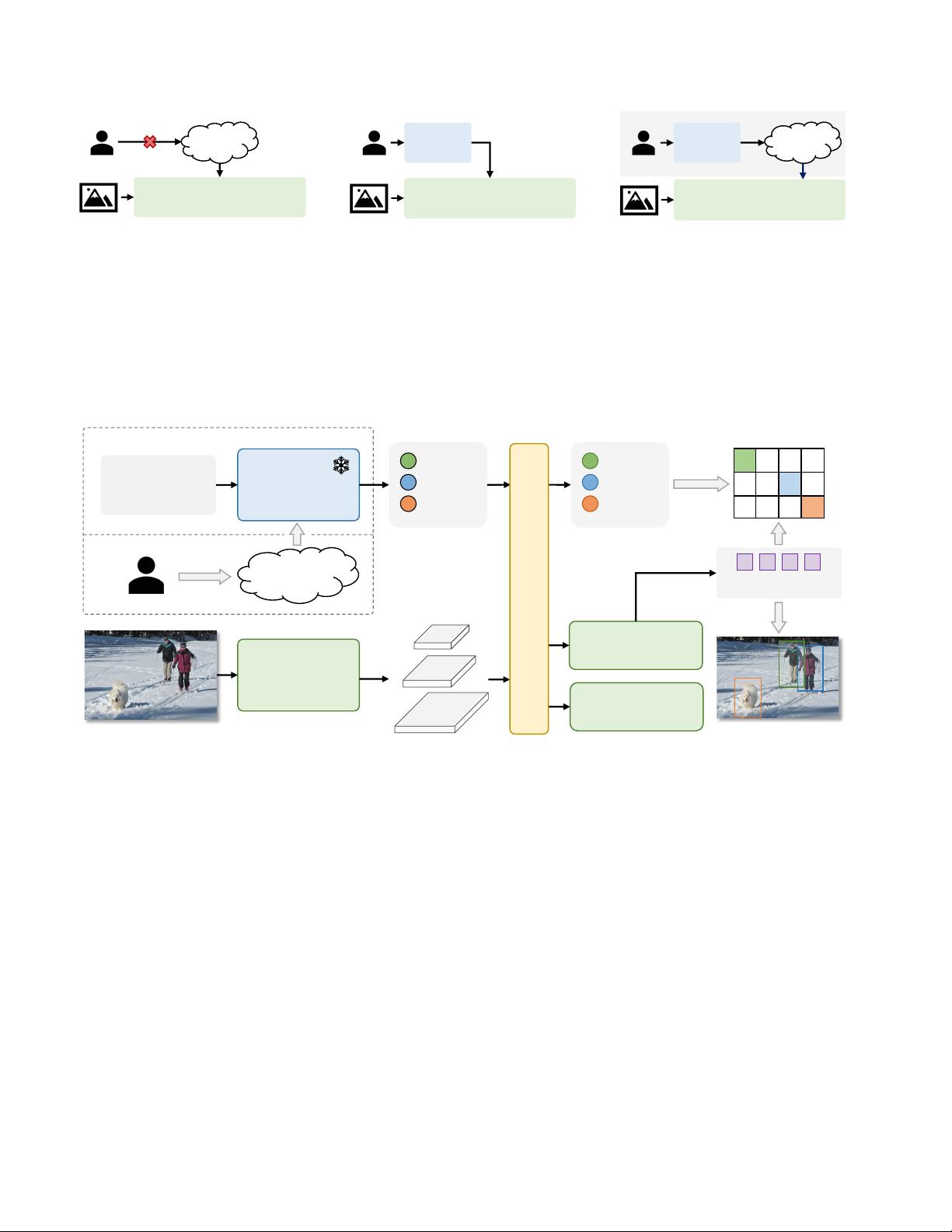

YOLO

Backbone

Text

Encoder

A man and a

woman are skiing

with a dog

caption, noun phrases, category…

User

Text Embeddings

Multi-scale Image Features

Text

Contrastive Head

Box Head

man

woman

dog

Vocabulary Embeddings

man

woman

dog

User’s

Vocabulary

Dog

Image-aware Embeddings

Multi-scale

Image Features

Training: Online Vocabulary

Deployment: Offline Vocabulary

Vision-Language PAN

Object Embeddings

Region-Text Matching

Input Image

Extract Nouns

Figure 3. Overall Architecture of YOLO-World. Compared to traditional YOLO detectors, YOLO-World, as an open-vocabulary detector, adopts text as input. The Text Encoder first encodes the input text into text embeddings. Then the Image Encoder encodes the input image into multi-scale image features, and the proposed RepVL-PAN exploits multi-level cross-modality fusion for both image and text features. Finally, YOLO-World predicts the regressed bounding boxes and the object embeddings for matching the categories or nouns that appear in the input text.
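The region-text matching step can be illustrated with a small sketch that scores every predicted object embedding against every text embedding by cosine similarity and keeps the best match per object. Assumptions: the scale `alpha`, shift `beta`, and `threshold` are placeholder constants standing in for learned or tuned values, the embeddings are hand-made 3-D toy vectors rather than encoder outputs, and `similarity_matrix` and `match_regions` are hypothetical helpers, not part of any released API.

```python
import math
from typing import List, Optional, Sequence, Tuple


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (leave an all-zero vector unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def similarity_matrix(object_embs: Sequence[Sequence[float]],
                      text_embs: Sequence[Sequence[float]],
                      alpha: float = 1.0,
                      beta: float = 0.0) -> List[List[float]]:
    """Return s[k][j] = alpha * cos(e_k, w_j) + beta for every object k and text j.

    alpha and beta are placeholder constants here (assumption), standing in
    for learned scale and shift parameters.
    """
    objs = [l2_normalize(e) for e in object_embs]
    texts = [l2_normalize(w) for w in text_embs]
    return [[alpha * sum(a * b for a, b in zip(e, w)) + beta for w in texts]
            for e in objs]


def match_regions(object_embs: Sequence[Sequence[float]],
                  text_embs: Sequence[Sequence[float]],
                  labels: Sequence[str],
                  alpha: float = 1.0,
                  beta: float = 0.0,
                  threshold: float = 0.5) -> List[Optional[Tuple[str, float]]]:
    """Assign each object its best-matching text, or None if no score clears the threshold."""
    matches: List[Optional[Tuple[str, float]]] = []
    for row in similarity_matrix(object_embs, text_embs, alpha, beta):
        j = max(range(len(row)), key=row.__getitem__)
        matches.append((labels[j], row[j]) if row[j] >= threshold else None)
    return matches


# Nouns taken from "A man and a woman are skiing with a dog", with toy embeddings.
labels = ["man", "woman", "dog"]
text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
object_embs = [[0.9, 0.1, 0.0],   # close to "man"
               [0.0, 0.2, 0.95],  # close to "dog"
               [0.5, 0.5, 0.5]]   # ambiguous: equally similar to all three

matches = match_regions(object_embs, text_embs, labels, threshold=0.8)
print([m[0] if m else None for m in matches])  # ['man', 'dog', None]
```

Normalizing both sides before the dot product makes the score depend only on direction, so in this sketch the same matching code applies whether the texts are nouns extracted from a training caption (online vocabulary) or the user's own category list (offline vocabulary).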

by vision-language pre-training [19, 39], recent works [8, 22, 53, 62, 63] formulate open-vocabulary object detection as image-text matching and exploit large-scale image-text data to increase the training vocabulary at scale. OWL-ViTs [35, 36] fine-tune simple vision transformers [7] with detection and grounding datasets and build simple open-vocabulary detectors with promising performance. GLIP [24] presents a pre-training framework for open-vocabulary detection based on phrase grounding and evaluates it in a zero-shot setting. Grounding DINO [30] incorporates grounded pre-training [24] into detection transformers [60] with cross-modality fusion. Several methods [25, 56, 57, 59] unify detection datasets and image-text datasets through region-text matching and pre-train detectors with large-scale image-text pairs, achieving promising performance and generalization. However, these methods often use heavy detectors such as ATSS [61] or DINO [60] with Swin-L [32] as the backbone, leading to high computational demands and deployment challenges. In contrast, we present YOLO-World, aiming for efficient open-vocabulary object detection with real-time inference and easier downstream application deployment. Differing from ZSD-YOLO [54], which also explores open-vocabulary detection [58] with YOLO through language model alignment, YOLO-World introduces a novel YOLO framework with an effective pre-training strategy, enhancing open-
