A More Effective Method For Image Representation: Topic Model Based on Latent Dirichlet Allocation

Zongmin Li∗, Weiwei Tian∗, Yante Li∗, Zhenzhong Kuang† and Yujie Liu∗

∗College of Computer and Communication Engineering

China University of Petroleum, Qingdao, China

Email: tianwei210@gmail.com

†School of Geosciences

China University of Petroleum, Qingdao, China

Abstract—The Bag-of-Words (BoW) representation is widely used in recent state-of-the-art image retrieval work. However, with the rapid growth in the number of images, the dimension of the dictionary increases substantially, which leads to high storage and CPU costs. In addition, local features do not convey any semantic information, which is very important in image retrieval. In this paper, we propose to use “topics” instead of “visual words” as the image representation, obtained with a topic model, to reduce the feature dimension and mine more high-level semantic information. We call this representation Bag-of-Topics (BoT); the underlying topic model is a type of statistical model for discovering abstract “topics” from words. We extract the topics by Latent Dirichlet Allocation (LDA) and calculate the similarity between images using the BoT model instead of BoW directly. The results show that the dimension of the image representation is reduced significantly, while the retrieval performance is improved.

Keywords-image retrieval; Bag-of-Words; Bag-of-Topics; topics; Latent Dirichlet Allocation

I. INTRODUCTION

The Bag-of-Words model was first used in document retrieval, where the distribution of words describes a document and similarity is computed on the basis of that description. Recently, the Bag-of-Words model has also been used for image retrieval [1], and it has proved to be one of the best methods to date [2], [3], [4], [10], [17]. Many state-of-the-art methods build on it. In the BoW model, local features such as SIFT descriptors [16] are extracted from the image and quantized to visual words using a pre-trained codebook. Matching images by their visual words avoids the computational cost of matching the original feature points one by one. The BoW model is therefore suitable for large-scale settings.
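The quantization step described above can be illustrated with a minimal sketch. It assumes the local descriptors and the pre-trained codebook are already available as NumPy arrays; the function names (`quantize_to_visual_words`, `bow_histogram`, `cosine_similarity`), the toy codebook of 8 random centroids, and the use of cosine similarity are illustrative assumptions and are not taken from the paper.

```python
import numpy as np


def quantize_to_visual_words(descriptors, codebook):
    """Map each local descriptor to the index of its nearest codebook centroid."""
    # Squared Euclidean distance between every descriptor and every centroid,
    # expanded as |x|^2 - 2 x.c + |c|^2; result has shape (n_descriptors, n_words).
    d2 = (
        (descriptors ** 2).sum(axis=1)[:, None]
        - 2.0 * descriptors @ codebook.T
        + (codebook ** 2).sum(axis=1)[None, :]
    )
    return d2.argmin(axis=1)


def bow_histogram(descriptors, codebook):
    """L1-normalised visual-word frequency vector for one image."""
    words = quantize_to_visual_words(descriptors, codebook)
    hist = np.bincount(words, minlength=codebook.shape[0]).astype(float)
    total = hist.sum()
    return hist / total if total > 0 else hist


def cosine_similarity(a, b):
    """Cosine similarity between two frequency vectors (an assumed choice of measure)."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


# Toy data (hypothetical): 8 "visual words" in a 128-dimensional, SIFT-like space.
rng = np.random.default_rng(0)
codebook = rng.normal(size=(8, 128))
# Two "images", each with three descriptors lying close to known centroids.
image_a = codebook[[0, 0, 3]] + 0.01 * rng.normal(size=(3, 128))
image_b = codebook[[0, 3, 3]] + 0.01 * rng.normal(size=(3, 128))

hist_a = bow_histogram(image_a, codebook)
hist_b = bow_histogram(image_b, codebook)
similarity = cosine_similarity(hist_a, hist_b)
print(similarity)
```

Each image is compared through one fixed-length vector whose length equals the codebook size, rather than through pairwise descriptor matching. That length is the dimension that grows with the dictionary.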

However, with the data expanding sharply, the low-

dimension dictionary cannot have a good performance.

Flickr60k [4] trained dictionaries with different dimensions

for the same experiment, such as 10k,20k,30k and so on. The

results show that the retrieval performance becomes better

with the number of the dictionary dimension increasing.

However, at the same time, the speed of the retrieval has

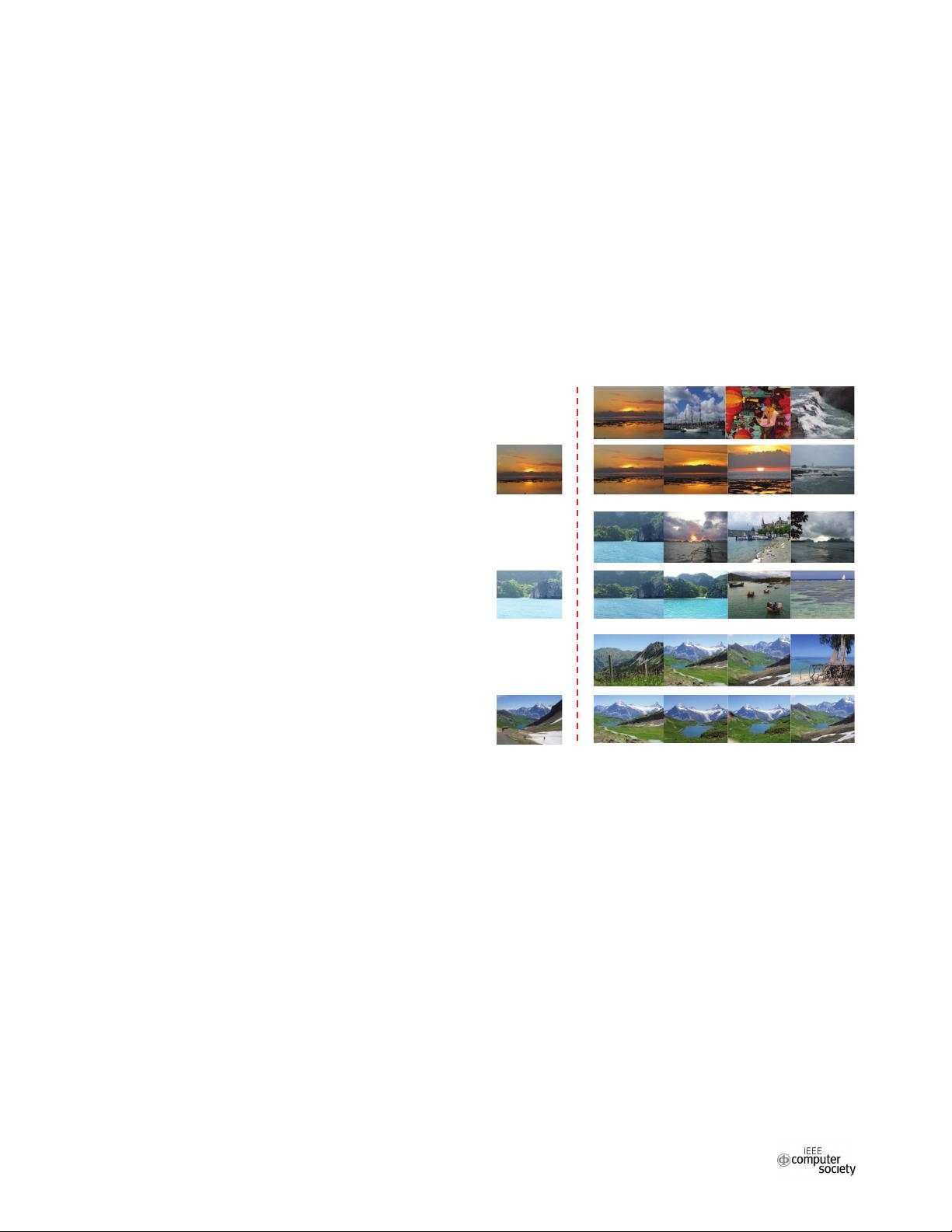

Figure 1: The examples of image retrieval from Holidays

database. For each query(left), the first row lists the results

obtained by the baseline(BoW) while the second row lists

the results obtained by our method. The dimension of the

dictionary in baseline is 20K, while there are only 128 topics

are learnt in our method.

a decrease because every image is represented by high

dimensional frequency vector, even though the inverted

index was employed.

A topic model is a type of statistical model, first proposed in the field of natural language processing, for discovering the abstract “topic” distribution by analyzing the correlation between the documents and words in a corpus. In this paper, we propose to address this problem in image retrieval by borrowing the notion of a topic and use Latent Dirichlet Allocation (LDA) [8] to extract the image topics

2015 14th International Conference on Computer-Aided Design and Computer Graphics

978-1-4673-8020-1/15 $31.00 © 2015 IEEE

DOI 10.1109/CADGRAPHICS.2015.19
