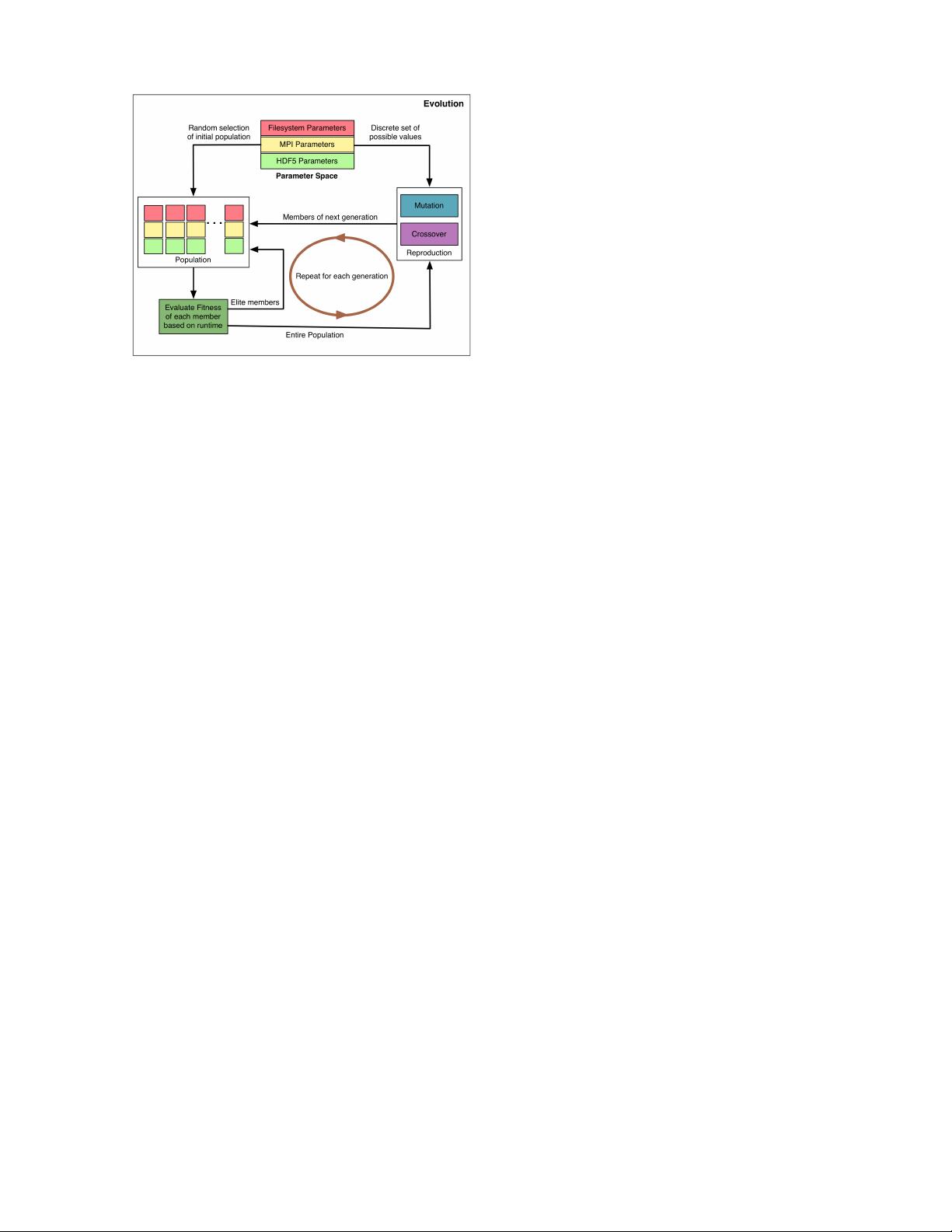

Figure 3: A pictorial depiction of the genetic algorithm used in the auto-tuning framework.

performing configuration for a specific I/O benchmark.

3.1 H5Evolve: Sampling the Search Space

As mentioned previously, due to the large size of the parameter

space and the possibly long execution time of a trial run,

finding optimal parameter sets for writing data of a given

size is a nontrivial task. Depending on the granularity with

which the parameter values are set, the size of the parameter

space can grow exponentially and become unmanageably large for a

brute-force, enumerative optimization approach.
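
To make this growth concrete, the following minimal Python sketch counts the configurations in a small parameter space and estimates the cost of running all of them once. The parameter names, the candidate values, and the two-minute trial time are illustrative assumptions, not the parameter space or timings used in the experiments described here.

```python
from math import prod

# Hypothetical candidate values for five tunable parameters (assumed for
# illustration; not the parameter space used in the paper).
SPACE = {
    "stripe_count": [4, 8, 16, 32, 64, 128],
    "stripe_size_mb": [1, 2, 4, 8, 16, 32, 64, 128],
    "cb_nodes": [1, 2, 4, 8, 16, 32],
    "cb_buffer_size_mb": [1, 2, 4, 8, 16, 32, 64, 128],
    "alignment_kb": [1, 64, 1024],
}


def space_size(space):
    """Number of distinct configurations: product of the per-parameter counts."""
    return prod(len(values) for values in space.values())


def exhaustive_hours(space, minutes_per_trial):
    """Wall-clock hours needed to run every configuration once, back to back."""
    return space_size(space) * minutes_per_trial / 60.0


print(space_size(SPACE))             # 6 * 8 * 6 * 8 * 3 configurations
print(exhaustive_hours(SPACE, 2.0))  # hours at an assumed 2 minutes per trial
```

Adding one more parameter with eight candidate values multiplies the count by eight, which is why enumeration quickly becomes impractical.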

Exact optimization techniques are not appropriate for sam-

pling the search space given the nondeterministic nature of

the objective function, which is the runtime of a particular

configuration. Instead of relying on the simplest approach,

manual tweaking, adaptive heuristic search approaches such

as genetic evolution algorithms, simulated annealing, etc.,

can traverse the search space in a reasonable amount of time.

In H5Evolve, we explore genetic algorithms for sampling the

search space.

A genetic algorithm (GA) is a meta-heuristic for approach-

ing an optimization problem, particularly one that is ill-

suited for traditional exact or approximation methods. A

GA is meant to emulate the natural process of evolution,

working with a “population” of potential solutions through

successive “generations” (iterations) as they “reproduce” (in-

termingle portions between two members of the population)

and are subject to “mutations” (random changes to portions

of the solution). A GA is expected, although it cannot nec-

essarily be shown, to converge to an optimal or near-optimal

solution, as strong solutions beget stronger children, while

the random mutations offer a sampling of the remainder of

the space.
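
The two operators described above can be sketched in a few lines of Python. This is a generic illustration that assumes a solution is encoded as a list of values, one per parameter; single-point crossover and per-gene mutation are common textbook choices and are not necessarily the operators used by H5Evolve or Pyevolve.

```python
import random


def crossover(parent_a, parent_b, rng):
    """Single-point crossover: the child takes a prefix from one parent
    and the remaining suffix from the other."""
    point = rng.randrange(1, len(parent_a))  # both parents contribute at least one gene
    return parent_a[:point] + parent_b[point:]


def mutate(solution, choices, rate, rng):
    """With probability `rate`, replace each gene by a random allowed value.

    `choices[i]` lists the allowed values for position i.
    """
    return [
        rng.choice(choices[i]) if rng.random() < rate else gene
        for i, gene in enumerate(solution)
    ]


rng = random.Random(0)
choices = [[1, 2, 4, 8], [16, 32, 64], [0, 1]]  # hypothetical allowed values
child = crossover([1, 16, 0], [8, 64, 1], rng)
print(mutate(child, choices, 0.15, rng))
```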

Our implementation, dubbed H5Evolve, is shown in Fig-

ure 3. It was built in Python using the Pyevolve [20] mod-

ule, which provides an intuitive framework for performing

genetic algorithm experiments in Python.

The workflow of H5Evolve is as follows. For a given bench-

mark at a specific concurrency and problem size, H5Evolve

runs the genetic algorithm (GA). H5Evolve takes a prede-

fined parameter space which contains possible values for the

I/O tuning parameters at each layer of the I/O stack. The

evolution process starts with a randomly selected initial population.

H5Evolve generates an XML file containing the selected

I/O parameters (an I/O configuration) that H5Tuner

injects into the benchmark. In all of our experiments, the

H5Evolve GA uses a population size of 15; this size is a con-

figurable option. Starting with an initial group of configu-

ration sets, the genetic algorithm passes through successive

generations. H5Evolve uses the runtime as the fitness eval-

uation for a given I/O configuration. After each generation

has completed, H5Evolve evaluates the fitness of the popu-

lation and considers the fastest I/O configurations (i.e., the

“elite members”) for inclusion in the next generation. Ad-

ditionally, the entire current population undergoes a series

of mutations and crossovers to populate the other member

sets in the population of the next generation. This process

repeats for each generation. In our experiments, we set the

number of generations to 40, meaning that H5Evolve runs a

maximum of 600 executions (15 configurations in each of 40 generations) of a given benchmark. We used

a mutation rate of 15%, meaning that 15% of the population

undergoes mutation at each generation. After H5Evolve fin-

ishes sampling the search space, the best performing I/O

configuration is stored as the tuned parameter set.
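
The workflow above can be sketched as a plain Python loop. The population size, generation count, and mutation rate come from the text; everything else is an assumption made for illustration: the three-parameter search space, the number of elite members carried over (the text does not state it), the crossover and mutation operators, and `simulated_runtime`, which stands in for launching and timing the real benchmark. The sketch does not use Pyevolve.

```python
import random

POPULATION_SIZE = 15   # from the text
GENERATIONS = 40       # from the text
MUTATION_RATE = 0.15   # from the text
ELITE_COUNT = 2        # assumption: the number of elites is not stated

# Hypothetical search space: allowed values for three tunable parameters.
SPACE = [
    [4, 8, 16, 32, 64],    # e.g. a stripe count
    [1, 2, 4, 8, 16, 32],  # e.g. a stripe size in MB
    [1, 2, 4, 8, 16],      # e.g. a number of aggregator nodes
]


def simulated_runtime(config):
    """Stand-in for running the benchmark and timing it (lower is better)."""
    target = (32, 8, 4)  # arbitrary "fast" configuration for the simulation
    return 1.0 + sum(abs(c - t) for c, t in zip(config, target))


def random_config(rng):
    return tuple(rng.choice(values) for values in SPACE)


def make_child(population, rng):
    """Single-point crossover between two distinct members of the population."""
    a, b = rng.sample(population, 2)
    point = rng.randrange(1, len(SPACE))
    return a[:point] + b[point:]


def mutate(config, rng):
    """Replace one randomly chosen parameter with a random allowed value."""
    i = rng.randrange(len(SPACE))
    return config[:i] + (rng.choice(SPACE[i]),) + config[i + 1:]


def tune(fitness, seed=0):
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(POPULATION_SIZE)]
    best, best_time, runs = None, float("inf"), 0
    for _ in range(GENERATIONS):
        timed = sorted((fitness(c), c) for c in population)  # one run per member
        runs += len(timed)
        if timed[0][0] < best_time:
            best_time, best = timed[0]
        elites = [c for _, c in timed[:ELITE_COUNT]]          # fastest survive as-is
        children = [make_child(population, rng)
                    for _ in range(POPULATION_SIZE - ELITE_COUNT)]
        children = [mutate(c, rng) if rng.random() < MUTATION_RATE else c
                    for c in children]
        population = elites + children
    return best, best_time, runs


best, best_time, runs = tune(simulated_runtime)
print(best, best_time, runs)
```

Because every member of every generation is timed once, the loop performs 15 × 40 = 600 evaluations, matching the execution budget stated above.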

3.2 H5Tuner: Setting I/O Parameters at Runtime

The goal of the H5Tuner component is to develop an au-

tonomous parallel I/O parameter injector for scientific ap-

plications with minimal user involvement, allowing param-

eters to be altered without requiring a recompilation of the

application. The H5Tuner dynamic library is able to set

the parameters of different levels of the I/O stack, namely

the HDF5, MPI-IO, and parallel file system levels in our

implementation. Assuming all the I/O optimization parameters

for different levels of the stack are in a configuration

file, H5Tuner first reads the values of the I/O configuration.

When the HDF5 calls appear in the code during the exe-

cution of a benchmark or application, the H5Tuner library

intercepts the HDF5 function calls via dynamic linking. The

library reroutes the intercepted HDF5 calls to a new imple-

mentation, where the parameters from the configuration are

set and then the original HDF5 function is called using the

dynamic library package functions. This approach has the

added benefit of being completely transparent to the user;

the function calls remain exactly the same and all alterations

are made without change to the source code. We show an

example in Figure 4, where H5Tuner intercepts the H5Fcreate()

function call that creates an HDF5 file, applies various I/O

parameters, and then calls the original H5Fcreate() function.
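
The control flow of this interception (apply the configured parameters, then forward to the original function) can be mirrored in a short Python sketch. This is only an analogy: H5Tuner operates on the HDF5 library through dynamic linking, whereas the sketch replaces a method on a Python object. `FakeIOLibrary`, `create_file`, `install_tuner`, and the hint name are hypothetical and are not part of HDF5 or H5Tuner.

```python
class FakeIOLibrary:
    """Hypothetical stand-in for an I/O library; this is not the HDF5 API."""

    def __init__(self):
        self.hints = {}

    def create_file(self, name):
        return f"{name} created with hints {sorted(self.hints.items())}"


def install_tuner(library, config):
    """Wrap `create_file` so tuning parameters are set before the real call."""
    original = library.create_file  # keep a handle to the original function

    def tuned_create_file(name):
        library.hints.update(config)  # apply parameters from the configuration
        return original(name)         # then forward to the original function

    library.create_file = tuned_create_file


lib = FakeIOLibrary()
install_tuner(lib, {"stripe_count": 8})
# The caller's code is unchanged: it still calls create_file() as before.
print(lib.create_file("out.h5"))
```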

H5Tuner uses MiniXML [24], a small XML library, to read

the XML configuration files. In our implementation, we

read the configuration file from the user’s home directory.

A user has full flexibility to change the configuration file.

Figure 5 shows a sample configuration file with HDF5, MPI-

IO, and Lustre parallel file system tunable parameters.
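
As a rough illustration of how a configuration might be exchanged between the two components, the following Python sketch serializes a set of parameters to XML and parses it back using the standard library. The element and attribute names (`Parameters`, `Layer`, `Param`, `name`) and the parameter values are assumptions for illustration; they are not the schema shown in Figure 5, and H5Tuner itself reads its files with MiniXML rather than Python.

```python
import xml.etree.ElementTree as ET


def to_xml(settings):
    """Serialize {layer: {parameter: value}} into an XML string."""
    root = ET.Element("Parameters")
    for layer, params in settings.items():
        layer_el = ET.SubElement(root, "Layer", name=layer)
        for key, value in params.items():
            ET.SubElement(layer_el, "Param", name=key).text = str(value)
    return ET.tostring(root, encoding="unicode")


def from_xml(text):
    """Parse the XML string back into {layer: {parameter: value-as-string}}."""
    root = ET.fromstring(text)
    return {
        layer.get("name"): {p.get("name"): p.text for p in layer.findall("Param")}
        for layer in root.findall("Layer")
    }


# Hypothetical values for the three layers named in the text.
config = {
    "HDF5": {"alignment": 1048576},
    "MPI-IO": {"cb_nodes": 8, "cb_buffer_size": 16777216},
    "Lustre": {"stripe_count": 16, "stripe_size": 4194304},
}
xml_text = to_xml(config)
print(xml_text)
print(from_xml(xml_text))
```

Values come back as strings after parsing, so a consumer would need to convert them to the types each layer expects.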

4. EXPERIMENTAL SETUP

We have evaluated the effectiveness of our auto-tuning

framework on three HPC platforms using three I/O benchmarks

at three different scales. The HPC platforms include

Hopper, a Cray XE6 system at the National Energy Research

Scientific Computing Center (NERSC); Intrepid, an

IBM BlueGene/P (BG/P) system at Argonne Leadership