for rare or unknown words. Indeed, manually engineered heuristics cannot fully characterize all the intricacies of spoken language. For this reason, feature extraction can also be done with trained models. The line between the feature extractor and the acoustic model can then become blurry, especially for deep models. In fact, a tendency common to all areas where deep models have overtaken traditional machine learning techniques is for feature extraction to rely on fewer heuristics, as highly nonlinear models become able to operate at higher levels of abstraction.

A common feature extraction technique is to build frames that integrate the surrounding context in a hierarchical fashion. For example, a frame at the syllable level could include the word that contains it, its position in that word, the neighbouring syllables, the phonemes that make up the syllable, and so on. The lexical stress and accent of individual syllables can be predicted by a statistical model such as a decision tree. To encode prosody, a set of annotation conventions such as ToBI (Beckman and Elam, 1997) can be used. Ultimately, some feature engineering is still required to present a frame to the model as a numerical object; for example, categorical features are typically encoded using a one-hot representation.
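
To make the hierarchical frame and its one-hot encoding concrete, here is a minimal Python sketch. It is a simplified, hypothetical illustration rather than the feature set of any particular system: the stress inventory, the position categories and the helpers `one_hot`, `position_in_word` and `syllable_frame` are all assumptions, and the stress labels are taken as given rather than predicted by a decision tree.

```python
from typing import List

# Hypothetical categorical inventories, for illustration only.
STRESS = ["unstressed", "primary", "secondary"]
NEIGHBOUR_STRESS = STRESS + ["<none>"]              # "<none>" marks a word boundary
POSITION = ["mono", "initial", "medial", "final"]   # position of the syllable in its word


def one_hot(value: str, vocabulary: List[str]) -> List[float]:
    """Encode a categorical value as a one-hot vector over a fixed vocabulary."""
    vec = [0.0] * len(vocabulary)
    vec[vocabulary.index(value)] = 1.0
    return vec


def position_in_word(index: int, n_syllables: int) -> str:
    """Categorize where a syllable sits in its word."""
    if n_syllables == 1:
        return "mono"
    if index == 0:
        return "initial"
    if index == n_syllables - 1:
        return "final"
    return "medial"


def syllable_frame(syllables: List[List[str]], stresses: List[str], i: int) -> List[float]:
    """Build a numerical frame for syllable i, mixing syllable-, word- and phoneme-level context."""
    n = len(syllables)
    prev_stress = stresses[i - 1] if i > 0 else "<none>"
    next_stress = stresses[i + 1] if i < n - 1 else "<none>"
    return (
        one_hot(position_in_word(i, n), POSITION)  # word level: position in the word
        + one_hot(stresses[i], STRESS)             # syllable level: own stress
        + one_hot(prev_stress, NEIGHBOUR_STRESS)   # context: previous syllable
        + one_hot(next_stress, NEIGHBOUR_STRESS)   # context: next syllable
        + [float(len(syllables[i])), float(n)]     # phoneme count, word length in syllables
    )


# "banana" split into syllables of phonemes, with stress labels assumed given.
syllables = [["b", "ə"], ["n", "æ"], ["n", "ə"]]
stresses = ["unstressed", "primary", "unstressed"]
frame = syllable_frame(syllables, stresses, 1)
print(frame)
```

For the middle syllable of "banana", the sketch returns a 17-dimensional vector: four position slots, three stress slots, four slots for each neighbour's stress (including a `<none>` value at word boundaries) and two numeric features.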

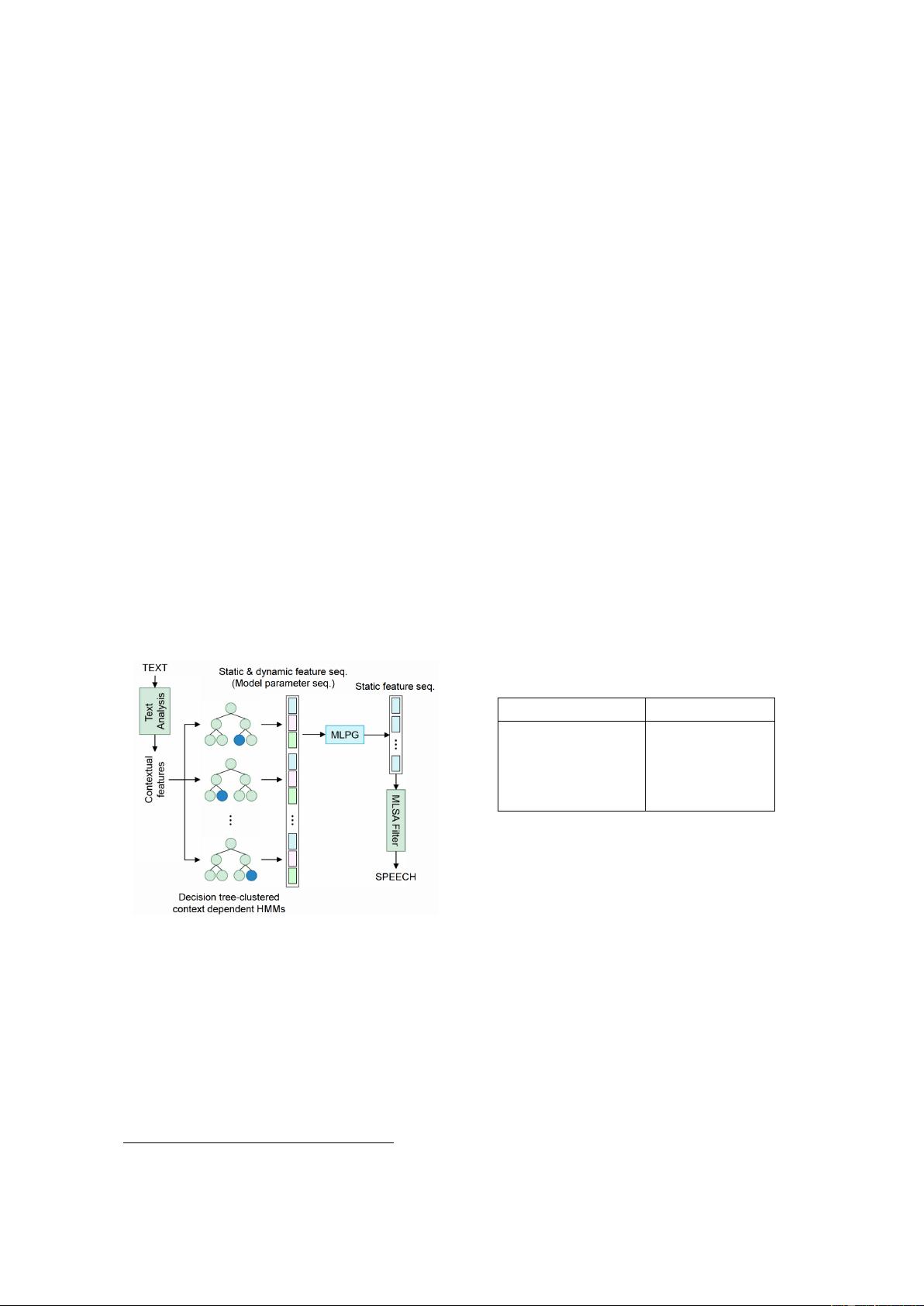

The reason the acoustic model does not directly predict an audio waveform is that audio is difficult to model: it is a particularly dense domain, typically sampled at tens of thousands of samples per second, and audio signals are typically highly nonlinear. A representation that brings out features in a more tractable manner is the time-frequency domain. Spectrograms are smoother and much less dense than their waveform counterparts. They also have the benefit of being two-dimensional, which allows models to better leverage spatial connectivity. Unfortunately, a (magnitude) spectrogram is a lossy representation of the waveform that discards the phase. There is therefore no unique inverse transformation, and deriving one that produces natural-sounding results is not trivial. In the context of speech, this generative function is called a vocoder. The choice of vocoder is an important factor in determining the quality of the generated audio.
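
The loss of phase can be illustrated with a small NumPy sketch, assuming a Hann window, a 512-point FFT and a hop of 128 samples (arbitrary choices, not values taken from this work). The hypothetical helper `magnitude_spectrogram` computes a short-time Fourier transform and keeps only its magnitude. A waveform and its polarity-inverted copy are different signals, yet they produce exactly the same magnitude spectrogram, so no inverse function can tell them apart from the spectrogram alone.

```python
import numpy as np


def magnitude_spectrogram(x: np.ndarray, n_fft: int = 512, hop: int = 128) -> np.ndarray:
    """Short-time Fourier transform magnitude; the phase is computed and then discarded."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    stft = np.fft.rfft(frames, axis=1)  # complex, shape (n_frames, n_fft // 2 + 1)
    return np.abs(stft)                 # keep the magnitude only


sr = 16000                              # assumed sampling rate (1 s of audio below)
t = np.arange(sr) / sr
x = 0.6 * np.sin(2 * np.pi * 220 * t) + 0.3 * np.sin(2 * np.pi * 660 * t)
y = -x                                  # polarity-inverted copy: a different waveform

S_x = magnitude_spectrogram(x)
S_y = magnitude_spectrogram(y)

print(S_x.shape)                        # (122, 257)
print(np.allclose(x, y))                # False: the waveforms differ
print(np.allclose(S_x, S_y))            # True: the magnitude spectrograms are identical
```

The time axis also shrinks from 16000 samples to 122 frames, although each frame now carries 257 frequency bins.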

As is often the case with tasks that involve generating perceptual data such as images or audio, a formal and objective evaluation of model performance is difficult. In our case, we are concerned with evaluating the speech naturalness and the voice similarity of the generated audio. Older TTS methods often relied on statistics computed on the waveform or on the acoustic features to compare different models. Not only are those metrics often only weakly correlated with human perception of sound, but they were also typically the very quantity the model was trained to minimize.

“When a measure becomes a target, it ceases to be a good measure” (Strathern, 1997).
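
A small NumPy sketch shows how a waveform-level statistic can disagree with perception. Waveform mean squared error is used here only as the simplest stand-in for such statistics, not as the metric of any specific prior work. Delaying a 440 Hz tone by 1 ms (16 samples at an assumed 16 kHz sampling rate) is not something a listener would notice on its own, whereas adding white noise at roughly 17 dB SNR is usually clearly audible; the MSE nevertheless penalizes the delay far more.

```python
import numpy as np


def waveform_mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean squared error between two aligned waveforms."""
    return float(np.mean((a - b) ** 2))


sr = 16000                               # assumed sampling rate
t = np.arange(sr) / sr
x = np.sin(2 * np.pi * 440 * t)          # 1 s, 440 Hz tone (power 0.5)

# Perceptually negligible change: delay the whole signal by 1 ms.
d = 16                                   # 16 samples = 1 ms at 16 kHz
mse_delay = waveform_mse(x[d:], x[:-d])  # compare x with its delayed copy on the overlap

# Audible change: additive white noise with variance 0.01 (about 17 dB SNR).
rng = np.random.default_rng(0)
noisy = x + rng.normal(scale=0.1, size=x.shape)
mse_noise = waveform_mse(x, noisy)

print(f"1 ms delay:     {mse_delay:.3f}")
print(f"additive noise: {mse_noise:.3f}")
```

With these settings, the delay yields an MSE of roughly 1.9, while the noise yields roughly 0.01.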

A recent trend in TTS is to perform a subjective evaluation with human subjects and to report their Mean Opinion Score (MOS). The subjects are presented with a series of audio segments and are asked to rate their naturalness (or their similarity, when comparing two segments) on a Likert scale from 1 to 5. Because subjects do not necessarily give actual human speech a rating of 5, TTS systems may be able to surpass humans on this metric in the future. Shirali-Shahreza and Penn (2018) argue that MOS is not well suited to evaluating TTS systems, and they advocate A/B testing instead, where subjects are asked to say which audio segment they prefer
