NVLink Bandwidth (GB/s) (uni-direction)

Deep Learning (Tensor OPS)

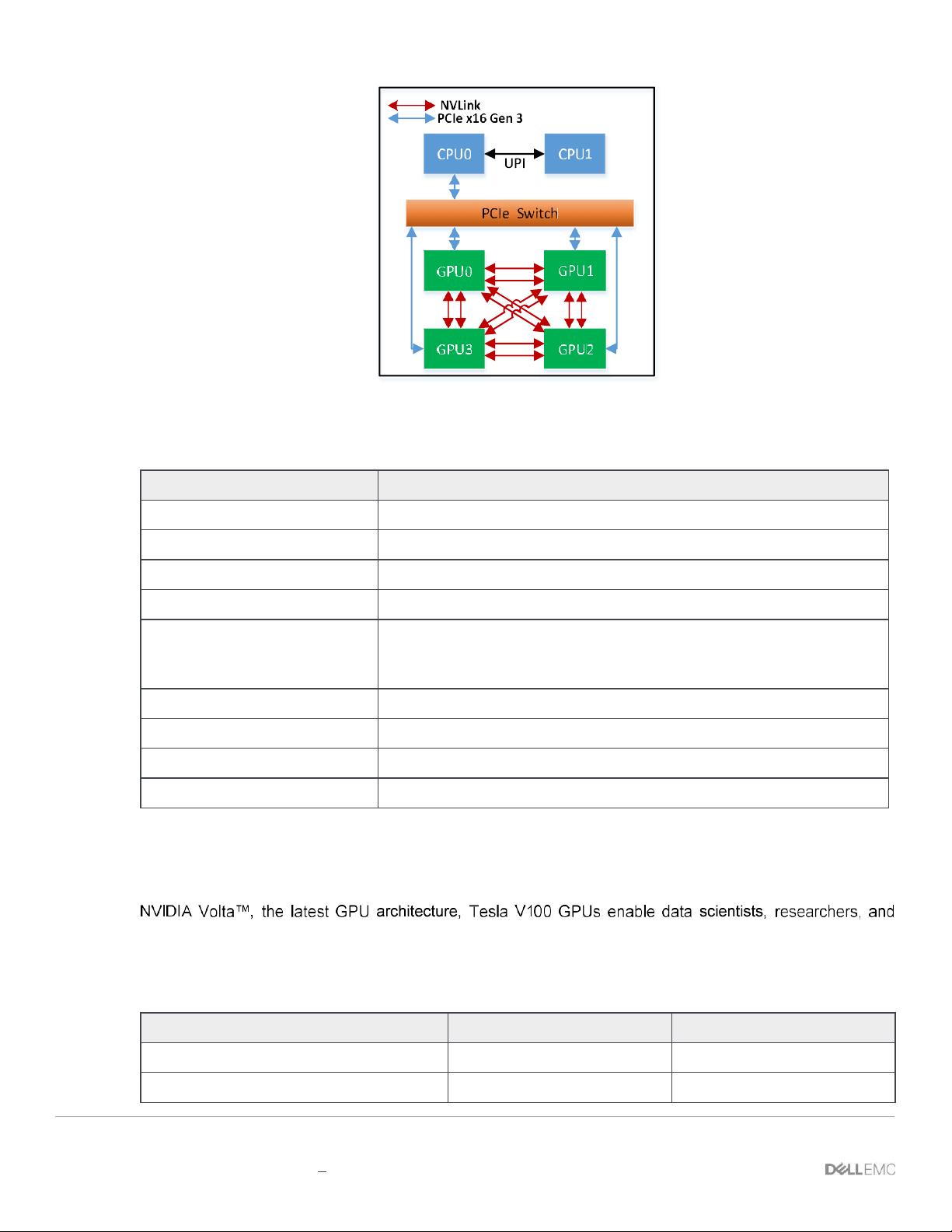

The Tesla V100 product line includes two variants, V100-PCIe and V100-SXM2, which are compared in Table 3. In the V100-PCIe, all GPUs communicate with each other over PCIe buses. With the V100-SXM2 model, all GPUs are connected by NVIDIA NVLink. In use cases where multiple GPUs are required, the V100-SXM2 models provide faster GPU-to-GPU communication over the NVLink interconnect than PCIe does. V100-SXM2 GPUs provide six NVLinks per GPU for bi-directional communication. The bandwidth of each NVLink is 25GB/s in one direction, and all four GPUs within a node can communicate at the same time, so the theoretical peak bandwidth is 6*25*4=600GB/s in bi-direction. However, the theoretical peak bandwidth using PCIe is only 16*2=32GB/s, because the GPUs can only communicate one after another, which means the communication cannot be done in parallel. So in theory, data communication with NVLink could be up to 600/32=18.75x (roughly 18x) faster than with PCIe. The evaluation of this performance advantage in real models will be discussed in Section 3.1.3. Because of this advantage, the PowerEdge C4140 compute node in the Deep Learning solution uses V100-SXM2 instead of V100-PCIe GPUs.
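The bandwidth arithmetic in the paragraph above can be reproduced with a short Python sketch. The constant and function names are illustrative only and do not come from any NVIDIA or Dell EMC tool; the inputs are the theoretical figures quoted in the text, not measured values.

```python
# Theoretical figures quoted in the text (GB/s); names are illustrative.
NVLINK_GBPS_PER_LINK = 25    # one NVLink, one direction
NVLINKS_PER_GPU = 6          # V100-SXM2
GPUS_PER_NODE = 4            # all four GPUs can communicate at the same time
PCIE_GBPS_PER_DIRECTION = 16 # PCIe figure used in the text


def nvlink_peak_gbps(per_link=NVLINK_GBPS_PER_LINK,
                     links=NVLINKS_PER_GPU,
                     gpus=GPUS_PER_NODE):
    """Aggregate theoretical NVLink peak as computed in the text: 6*25*4."""
    return links * per_link * gpus


def pcie_peak_gbps(per_direction=PCIE_GBPS_PER_DIRECTION):
    """Theoretical PCIe peak as computed in the text: 16*2.

    The text assumes GPUs on PCIe communicate one after another,
    so there is no multiplication by the GPU count.
    """
    return per_direction * 2


nvlink = nvlink_peak_gbps()
pcie = pcie_peak_gbps()
ratio = nvlink / pcie

print(f"NVLink theoretical peak: {nvlink} GB/s")
print(f"PCIe theoretical peak:   {pcie} GB/s")
print(f"Theoretical speedup:     {ratio:.2f}x")
```

Changing `GPUS_PER_NODE` or the per-link figure shows how sensitive the theoretical ratio is to those assumptions; it says nothing about the speedup observed in real training workloads.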

2.3 Processor recommendation for Head Node and Compute Nodes

The processor chosen for the head node and compute nodes is Intel® Xeon® Gold 6148 CPU. This is the latest Intel® Xeon® Scalable processor with 20 physical cores which support 40 threads. Previous studies, as described in Section 3.1, have concluded that 16 threads are sufficient to feed the I/O pipeline for the state-of-the-art convolutional neural network, so the Gold 6148 CPU is a reasonable choice. Additionally this CPU model is recommended for the compute nodes as well, making this a consistent choice across the cluster.

2.4 Memory recommendation for Head Node and Compute Nodes

The recommended memory configuration for the head node is 24x 16GB 2666MT/s DIMMs, for a total of 384GB. This configuration was chosen for the following reasons:

Capacity: An ideal configuration must support system memory capacity that is larger than the total size of GPU memory. Each compute node has 4 GPUs and each GPU has 16GB of memory, so the system memory must be at least 16GBx4=64GB. The head node memory also affects I/O performance. For the NFS service, more memory reduces disk read operations, since the NFS service sends data out from memory. 16GB DIMMs offer the best performance per dollar.

DIMM configuration: Choices like 24x 16GB or 12x 32GB provide the same 384GB of system memory, but according to our studies, as shown in Figure 4, the combination of 24x 16GB DIMMs provides 11% better performance than 12x 32GB. The results shown here were obtained on the Intel Xeon Platinum 8180 processor, but the same trends will apply across other models in the Intel Xeon Scalable processor family, including the Gold 6148, although the actual percentage differences across configurations may vary. More details can be found in our Skylake memory study.

Serviceability: The head node and compute node memory configurations are designed to be similar to reduce parts complexity while satisfying performance and capacity needs. Fewer parts need to be stocked for replacement, and in urgent cases if a memory module in the head node needs to be
