S. Zhao, B. Zhang and C.L. Philip Chen / Information Sciences 489 (2019) 167–181 169

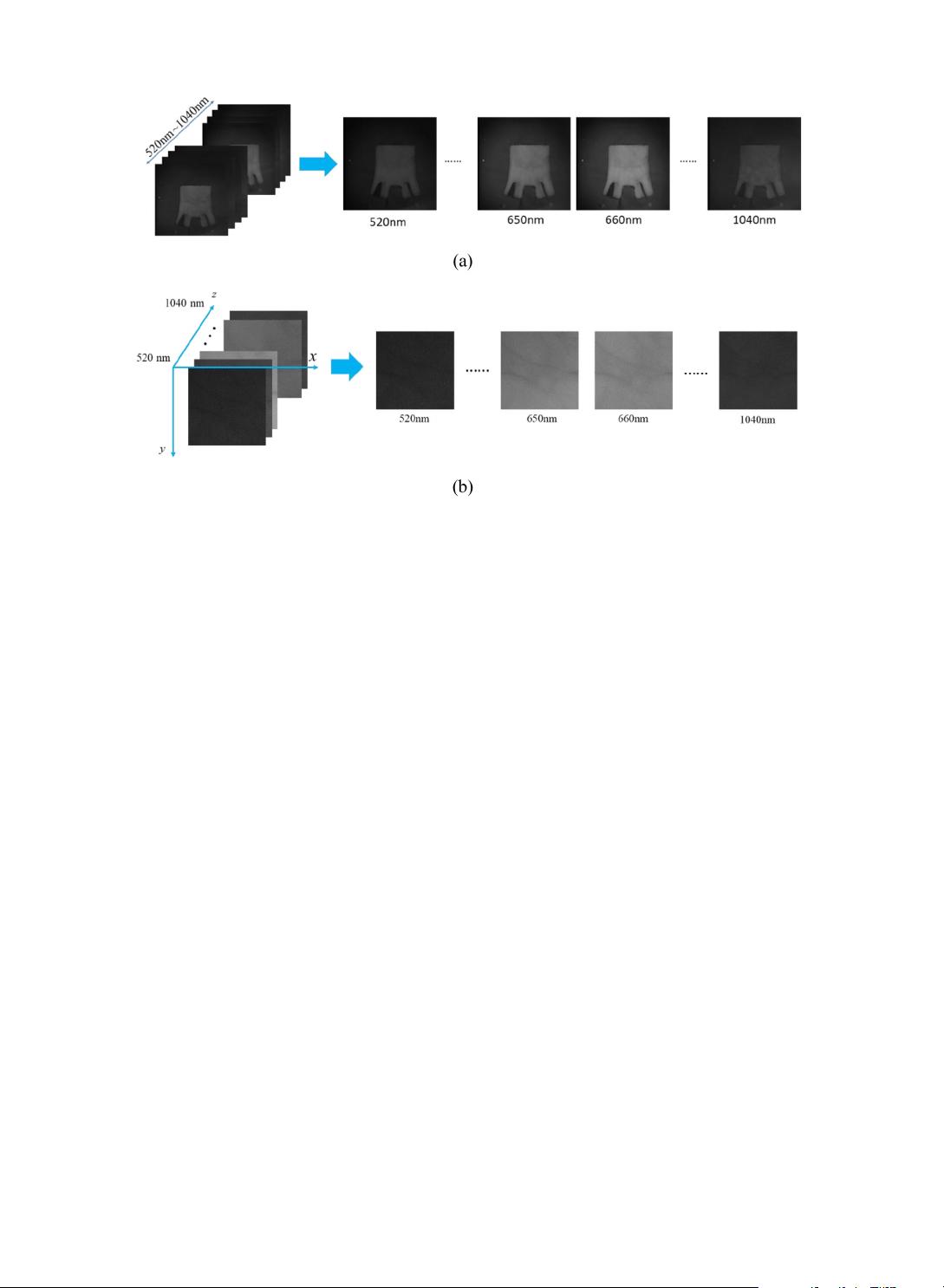

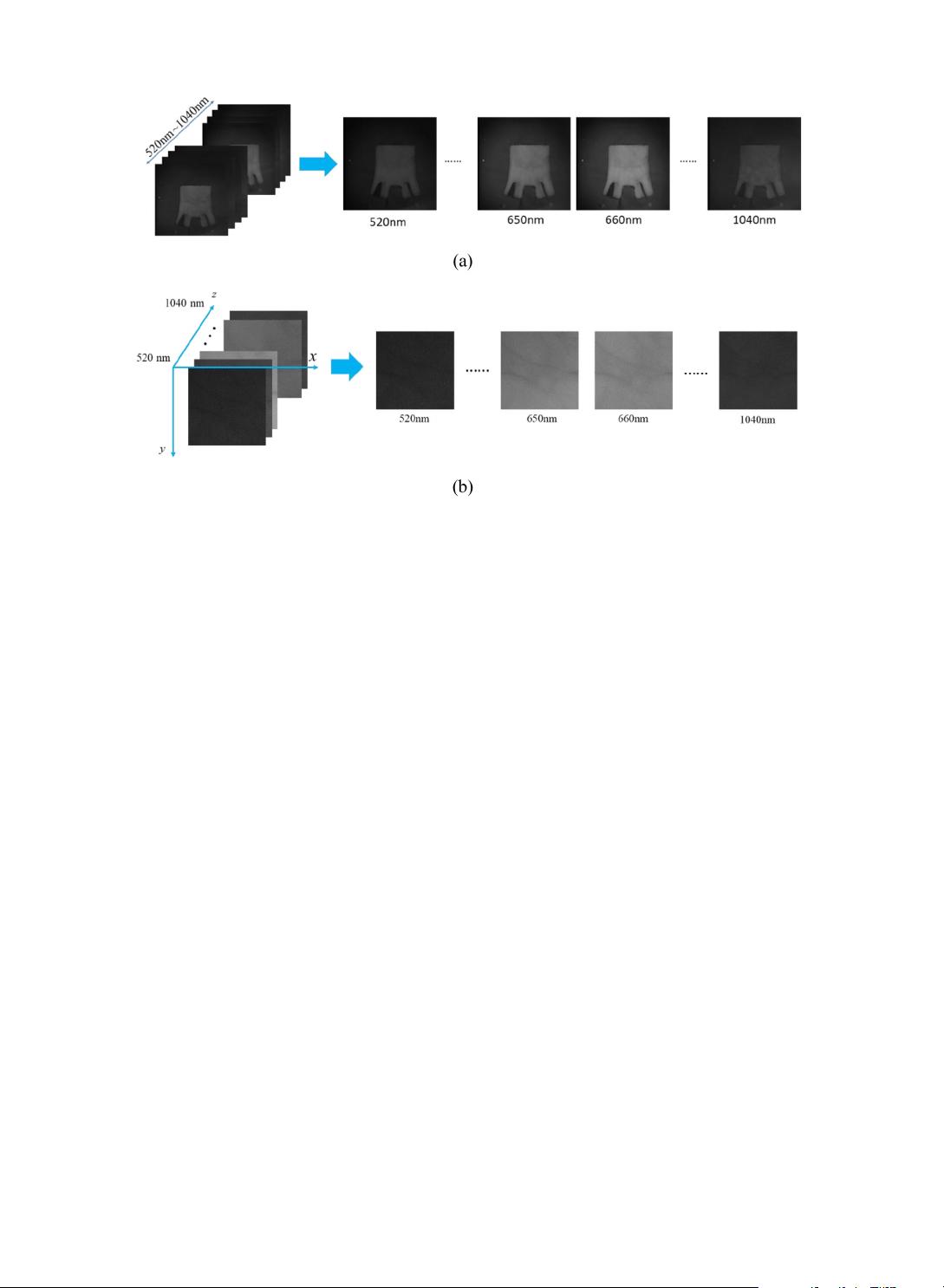

Fig. 1. An example of a hyperspectral palmprint cube containing dozens of palmprint images captured under different spectra. (a) The original hyperspectral palmprint image. (b) The region of interest (ROI) extracted from the original image [49].

joint deep convolutional features must be represented in another way. To resolve this issue, the collaborative representation based classifier (CRC) [36] is adopted. During classification, the coding coefficient vector generated by the collaborative representation is sparse, so the features can be represented by a sparse coefficient vector with minimal redundant information.
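The classification step can be illustrated with a minimal sketch. It assumes the commonly used regularized least-squares form of collaborative representation: the query is coded over all training samples with an l2 penalty, then assigned to the class with the smallest regularized reconstruction residual. The exact formulation used here is the one in [36] and may differ. The names `crc_classify`, `D`, `labels`, `y` and `lam` are illustrative and do not come from the paper.

```python
import numpy as np


def crc_classify(D, labels, y, lam=1e-3):
    """Classify a query feature with a collaborative-representation sketch.

    D      : (d, n) array, one training feature vector per column (assumed layout)
    labels : length-n sequence of class labels, one per column of D
    y      : (d,) query feature vector
    lam    : l2 regularization weight (assumed value, not from the paper)
    """
    D = np.asarray(D, dtype=float)
    y = np.asarray(y, dtype=float)
    labels = np.asarray(labels)
    n = D.shape[1]

    # Code the query over ALL training samples (the "collaborative" part):
    # alpha = (D^T D + lam * I)^{-1} D^T y
    alpha = np.linalg.solve(D.T @ D + lam * np.eye(n), D.T @ y)

    best_label, best_score = None, np.inf
    for c in np.unique(labels):
        mask = labels == c
        # Reconstruct the query using only the coefficients of class c.
        residual = np.linalg.norm(y - D[:, mask] @ alpha[mask])
        # Regularized residual: small residual and large coefficients win.
        score = residual / (np.linalg.norm(alpha[mask]) + 1e-12)
        if score < best_score:
            best_label, best_score = c, score
    return best_label
```

In this sketch the coding step has a closed-form solution, which is why a single linear solve suffices; a sparsity-promoting penalty would instead require an iterative solver.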

JDCFR can convey and combine discriminative features from the palmprint images on all bands. Furthermore, the influence of redundant characteristics is reduced to a minimum. The main contributions of this work are summarized as follows:

i. A new convolutional neural network, trained locally, is proposed for extracting features from hyperspectral palmprint images. The network consists of 16 layers and extracts the palmprint features of a specific band, outputting a feature vector of suitable dimension for recognition.

ii. We develop a CNN stack architecture, where each stack element is an instance of the new CNN dedicated to a specific hyperspectral palmprint band. This ensures that features are extracted from the entire set of hyperspectral palmprint bands. Afterwards, the features extracted from each spectral band are combined to form a joint convolutional feature. To reduce the redundant characteristics of the joint features, the sparse representation based CRC method is used to represent the features while simultaneously carrying out classification.
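The band-wise stack in contribution ii can be sketched as below. The text states only that the per-band features are combined; concatenation is an assumption made here for illustration, and `joint_feature`, `band_images` and `extractors` are hypothetical names. Each extractor stands in for the CNN trained on one band.

```python
import numpy as np


def joint_feature(band_images, extractors):
    """Build a joint feature from per-band features (concatenation assumed).

    band_images : sequence of images, one per spectral band
    extractors  : sequence of callables, one per band; each maps an image
                  to a 1-D feature vector (stand-ins for the per-band CNNs)
    """
    if len(band_images) != len(extractors):
        raise ValueError("need exactly one extractor per band")
    feats = [
        np.asarray(extract(img), dtype=float).ravel()
        for extract, img in zip(extractors, band_images)
    ]
    # Stack the per-band vectors end to end into one joint vector.
    return np.concatenate(feats)
```

With B bands and a k-dimensional feature per band, this yields a joint vector of length B·k, which is why the per-band output dimension has to stay small.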

2. Joint deep convolutional feature representation

2.1. Hyperspectral palmprint convolutional neural network

2.1.1. Architecture

The main purpose of our deep CNN is to extract features from hyperspectral palmprint images rather than to classify them directly. Therefore, the architecture of the CNN cannot be too deep, as a deeper network would consume much more time. To obtain better classification performance with CRC after the features have been extracted, the number of samples should be equal to or greater than the palmprint feature dimension. Otherwise, CRC cannot obtain an accurate solution. To avoid this problem, the dimension of the output layer cannot be too large, so that the number of samples remains balanced with the dimension of the feature passed to CRC.

Inspired by the successful implementation of LeNet [37] and AlexNet [22], a new deep convolutional neural network is proposed to satisfy the application requirements of this paper. The architecture of our deep CNN, shown in Fig. 2, is used to extract deep convolutional hyperspectral palmprint features. Table 1 lists the configuration of our CNN. In total, there are 16 layers: 3 convolutional layers, 3 pooling layers, 5 ReLU layers, 2 batch normalization layers, 2 fully connected ($\mathrm{FC}_1$ and $\mathrm{FC}_2$) layers and a Softmax layer. The convolutional and FC layers are also called learnable layers, as they contain the filters and weights learned from the input. The Softmax layer is used only for training the network. For this network, the input is one palmprint image and the output is a feature vector. The second fully connected ($\mathrm{FC}_2$) layer is the output layer that produces the feature vector.
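The layer inventory above can be checked with a short sketch. Only the per-type counts and the role of the last FC layer come from the text; the ordering in `LAYERS` is a hypothetical arrangement chosen for illustration, and `feature_trunk` is an illustrative helper.

```python
from collections import Counter

# Hypothetical ordering: only the per-type counts are taken from the text.
LAYERS = [
    "conv", "batch_norm", "relu", "pool",
    "conv", "batch_norm", "relu", "pool",
    "conv", "relu", "pool",
    "fc", "relu",   # FC_1
    "fc", "relu",   # FC_2 (its output is used as the feature vector)
    "softmax",      # used only while training
]


def feature_trunk(layers):
    """Return the layers up to and including the last FC layer.

    At feature-extraction time everything after the last FC layer
    (here the trailing ReLU and the Softmax) is dropped.
    """
    last_fc = len(layers) - 1 - layers[::-1].index("fc")
    return layers[: last_fc + 1]


counts = Counter(LAYERS)
total_layers = sum(counts.values())
```

Here `total_layers` is 3 + 3 + 5 + 2 + 2 + 1 = 16, matching the stated depth, and `feature_trunk(LAYERS)` ends at the second FC layer.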