Condensation—Conditional Density Propagation for Visual Tracking 9

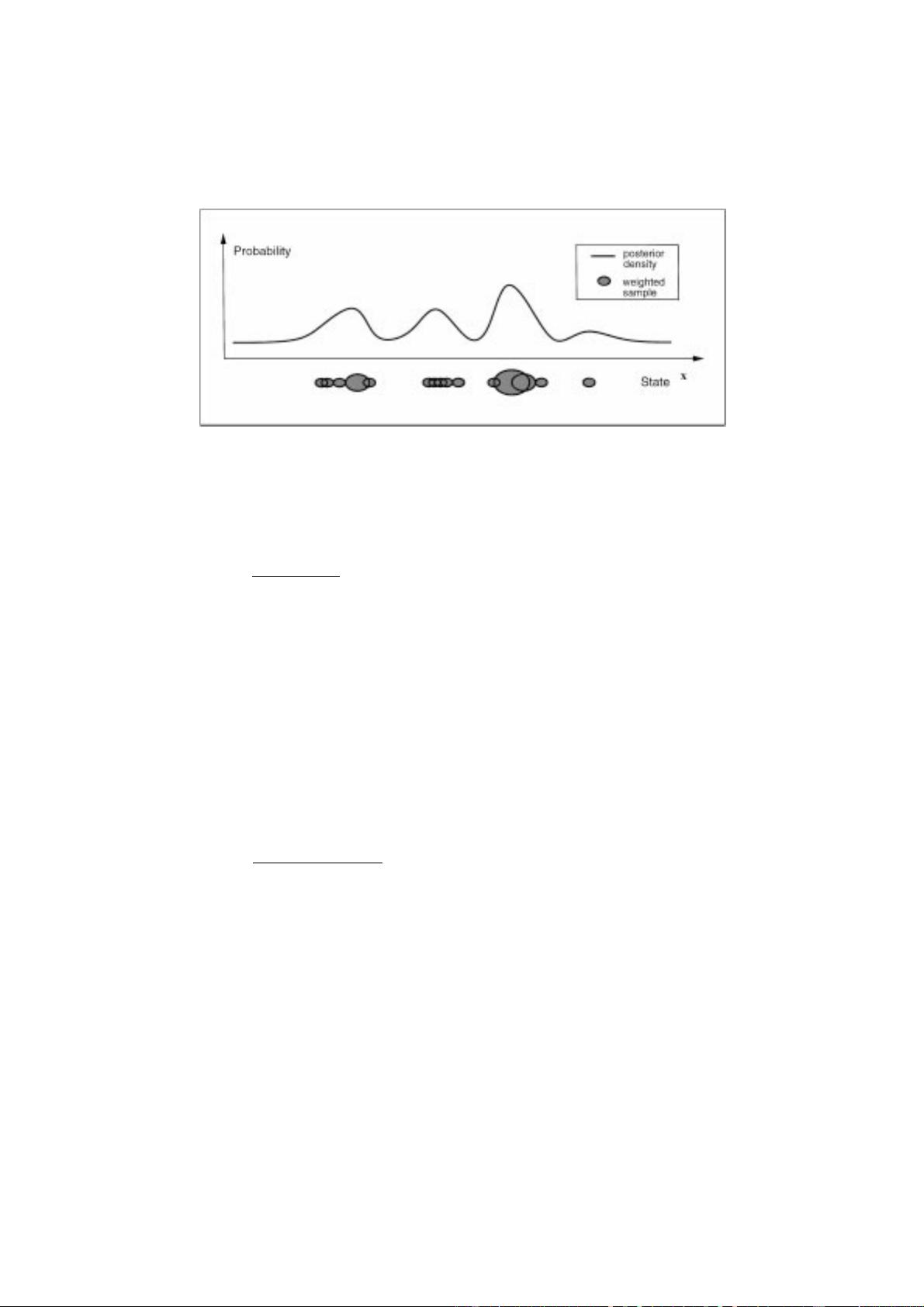

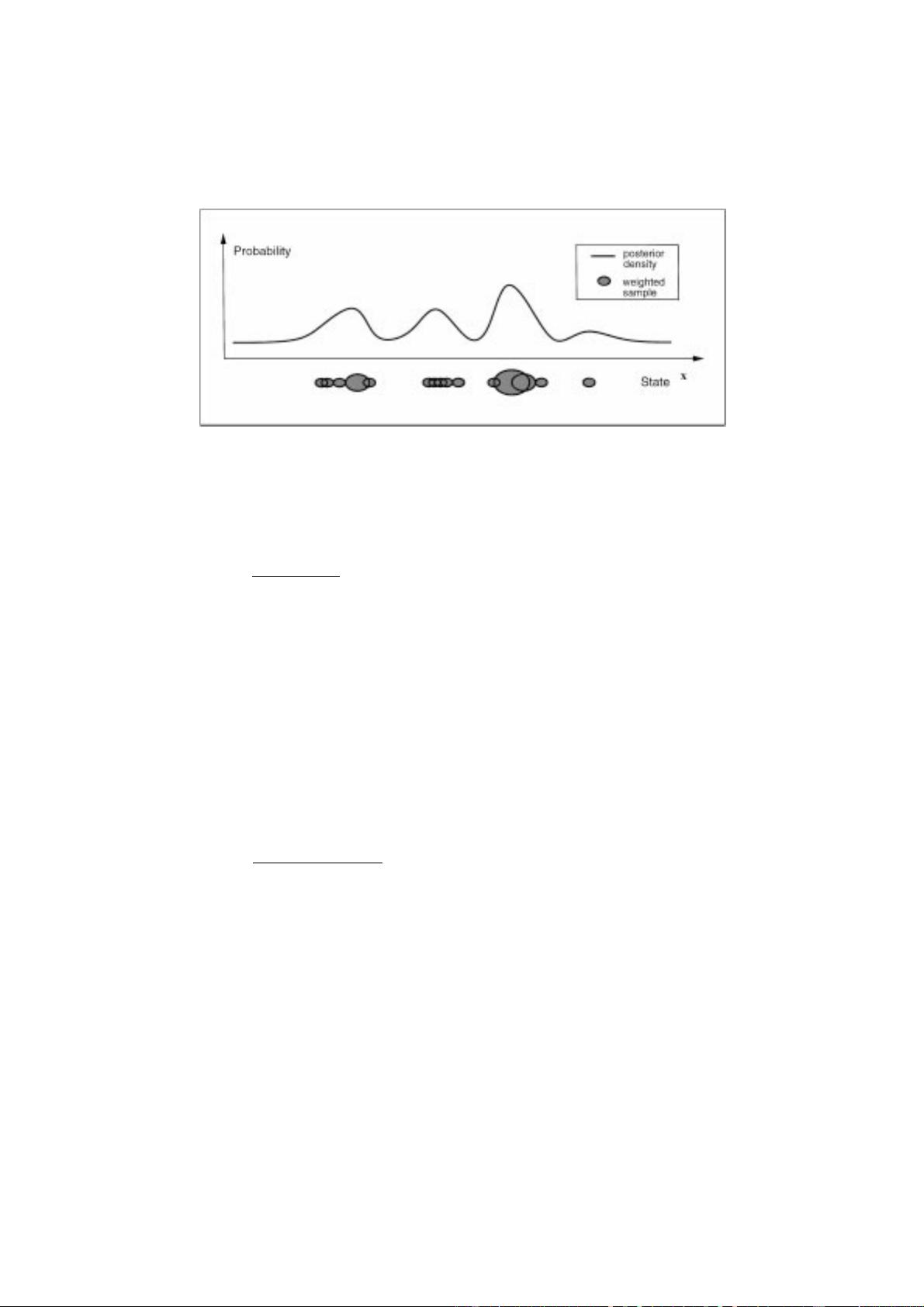

Figure 3. Factored sampling: a set of points s

(n)

, the centres of the blobs in the figure, is sampled randomly from a prior density p(x). Each

sample is assigned a weight π

i

(depicted by blob area) in proportion to the value of the observation density p(z |x = s

(n)

). The weighted

point-set then serves as a representation of the posterior density p(x |z), suitable for sampling. The one-dimensional case illustrated here extends

naturally to the practical case that the density is defined over several position and shape variables.

n ∈{1,...,N}is chosen with probability π

n

, where

π

n

=

p

z

¡

s

(n)

¢

P

N

j=1

p

z

¡

s

( j)

¢

and

p

z

(x) = p(z |x),

the conditional observation density. The value x

0

= x

n

chosen in this fashion has a distribution which approx-

imates the posterior p(x |z) increasingly accurately as

N increases (Fig. 3).

Note that posterior mean properties E [g(x) |z] can

begenerateddirectlyfromthesamples{s

(n)

}byweight-

ing with p

z

(x) to give:

E[g(x) |z] ≈

P

N

n=1

g

¡

s

(n)

¢

p

z

¡

s

(n)

¢

P

N

n=1

p

z

¡

s

(n)

¢

. (7)

Forexample, themeancanbeestimatedusing g(x) = x

(illustrated in Fig. 4) and the variance using g(x) =

xx

T

. In the case that p(x) is a spatial Gauss-Markov

process, Gibbs sampling from p(x) has been used

to generate the random variates {s

(1)

,...,s

(N)

}. Oth-

erwise, for low-dimensional parameterisations as in

this paper, standard, direct methods can be used for

Gaussians

2

(Press et al., 1988). Note that, in the case

that the density p(z |x) is normal, the mean obtained

by factored sampling is consistent with an estimate ob-

tained more conventionally, and efficiently, from linear

least squaresestimation. For multi-modal distributions

which cannot be approximated as normal, so that linear

estimators are unusable, estimates of mean x by fac-

tored sampling continue to apply.

4. The C

ONDENSATION Algorithm

The Condensation algorithm is based on factored

samplingbutextendedtoapplyiteratively to successive

images in a sequence. The same sampling strategy

has been developed elsewhere (Gordon, et al., 1993;

Kitagawa,1996), presentedasdevelopments ofMonte-

Carlo methods. Jump-diffusion tracking (Miller et al.,

1995) may also be related to the approach described

here.

Given that the process at each time-step is a self-

contained iteration of factored sampling, the out-

put of an iteration will be a weighted, time-stamped

sample-set, denoted {s

(n)

t

, n = 1,...,N}with weights

π

(n)

t

, representing approximately the conditional state-

density p(x

t

|Z

t

) at time t. How is this sample-set

obtained? Clearly, the process must begin with a prior

density and the effective prior for time-step t should

be p(x

t

|Z

t−1

). This prior is of course multi-modal in

general and no functional representation of it is avail-

able. It is derived from the sample set representation

{(s

(n)

t−1

,π

(n)

t−1

), n = 1,...,N} of p(x

t−1

|Z

t−1

), the

output from the previous time-step, to which predic-

tion (5) must then be applied.

The iterative process as applied to sample-sets, de-

picted in Fig. 5, mirrors the continuous diffusion pro-

cess in Fig. 2. At the top of the diagram, the out-

put from time-step t − 1 is the weighted sample-set

{(s

(n)

t−1

,π

(n)

t−1

), n = 1,...,N}. The aim is to maintain,

at successive time-steps, sample sets of fixed size N,