The Bad

R has some drawbacks as well. Many algorithms in its ecosystem are provided by the community or other third parties, so there can be some inconsistency between them and other tools. Each package in R is like its own mini-ecosystem that requires a little bit of understanding before going all out with it. Some of these packages were developed a long time ago, and it’s not obvious what the current “killer app” is for a particular machine learning model. You might want to do a simple neural network model, for example, but you also want to visualize it. Sometimes, you might need to select a package you’re less familiar with for its specific functionality and leave your favorite one behind.

Documentation for more obscure packages can be inconsistent as well. As referenced earlier, you can pull up the help file or manual page for a given function in R by doing something like ?lm() or ?rf() . In a lot of cases, these pages include helpful examples at the bottom showing how to run the function. However, some of those examples are needlessly complex and could be simplified to a great extent. One goal of this book is to present examples in their simplest form to build an understanding of the model, and then expand on the complexity of its workings from there.
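As a minimal sketch of that workflow, the snippet below looks up a help page and runs its bundled examples using only base R's help() and example(). Choosing lm as the function to look up is just for illustration.

```r
# `?lm` is shorthand for help("lm"); both locate the same manual page.
lm_help <- help("lm")
class(lm_help)

# In an interactive session, printing the object opens the page itself.
if (interactive()) print(lm_help)

# example() runs the code from the "Examples" section at the bottom of
# the help page. It is guarded here because those examples draw plots.
if (interactive()) example("lm", ask = FALSE)
```

Running the bundled examples line by line is often the quickest way to see which parts of a complicated help page are essential and which can be stripped away.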

Finally, the way R operates from a programmatic standpoint can drive some professional developers up a wall, particularly in how it handles things like type casting of data structures. People accustomed to working in a very strict object-oriented language in which you allocate specific amounts of memory for things will find R to be rather lax in its treatment of boundaries like those. It’s easy to pick up some bad habits as a result of such pitfalls, but this book aims to steer clear of those in favor of simplicity to explain the machine learning landscape.
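A few lines of base R illustrate the kind of laxness described here. This is only a sketch; the variable names (mixed, total, bad, v) are made up for the example.

```r
# Mixing types in one vector silently coerces every element
# to the most general type, here character.
mixed <- c(1, "a", TRUE)
class(mixed)          # "character"

# Logical values behave as 0/1 in arithmetic, with no cast required.
total <- TRUE + TRUE  # 2

# A failed conversion produces NA (plus a warning), not an error.
bad <- suppressWarnings(as.numeric("abc"))
is.na(bad)            # TRUE

# Vectors grow on assignment; nothing is allocated up front,
# and the skipped positions are filled with NA.
v <- numeric(0)
v[5] <- 10
length(v)             # 5
```

None of these lines raises an error, which is convenient for exploration but makes it easy for a type mistake to travel unnoticed into a model.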

Summary

In this chapter we’ve scoped out the vision for our exploration of machine learning using the R programming language.

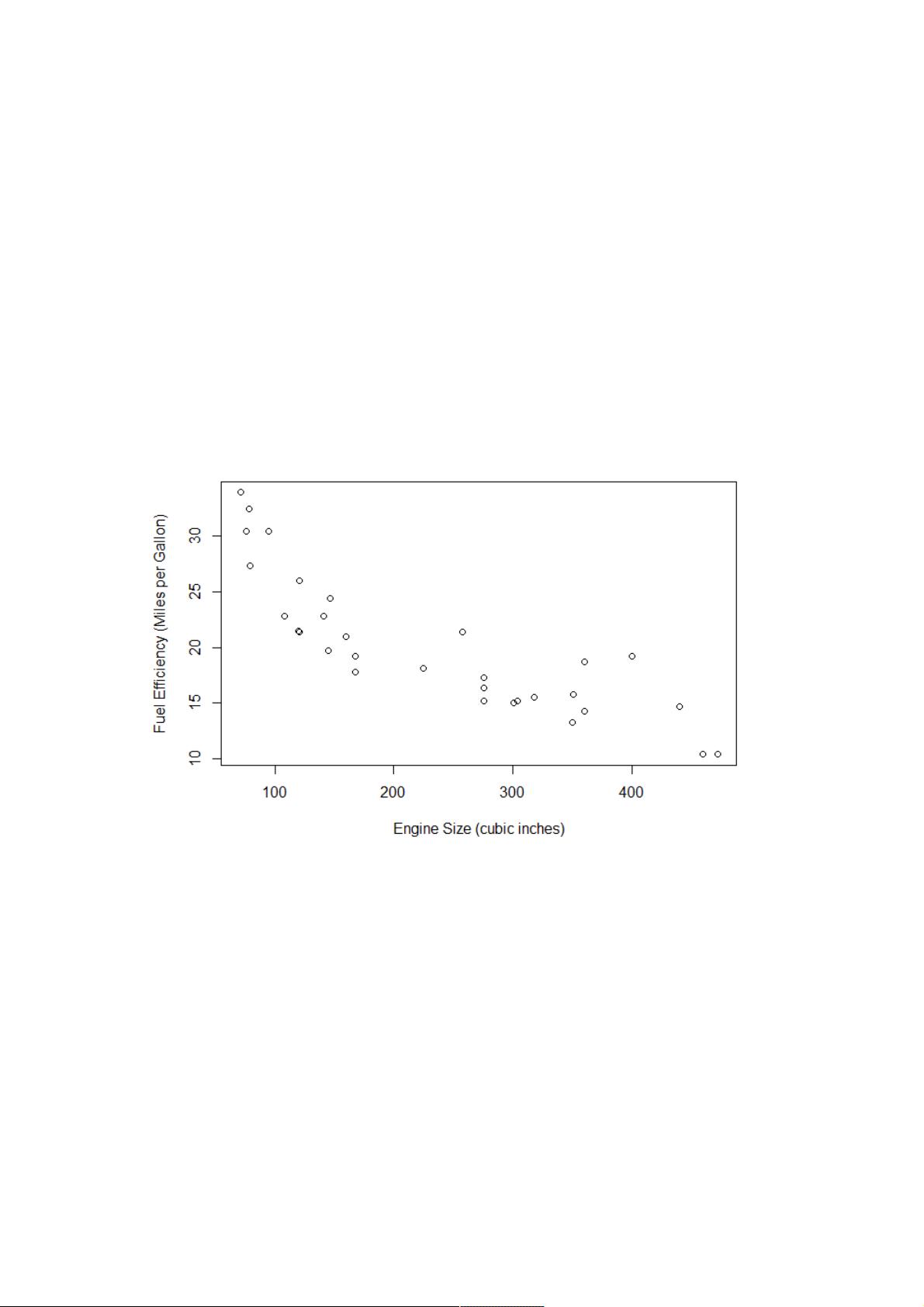

First we explored what makes up a model and how that differs from a report. You saw that a static report doesn’t tell us much in terms of predictive power. You can turn a report into something more like a model by first introducing another feature and examining whether there is some kind of relationship in the data. You then fit a simple linear regression model using the lm() function and got an equation as your final result. One feature of R that is quite powerful for developing models is the formula operator ~ . You can use this operator to great effect for symbolically representing the formulas that you are trying to model.
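To make the role of ~ concrete, here is a small sketch using the built-in mtcars data. The particular columns (mpg, disp, wt) are chosen for illustration and are an assumption, not necessarily the ones used earlier in the chapter.

```r
# A formula is a symbolic description: response ~ predictors.
# Creating it evaluates nothing; mpg and disp are just names here.
f <- mpg ~ disp
class(f)          # "formula"
all.vars(f)       # "mpg" "disp"

# More predictors are added with +. The formula only meets real data
# when it is handed to a modeling function together with a data frame.
f2 <- mpg ~ disp + wt
fit <- lm(f2, data = mtcars)
names(coef(fit))  # "(Intercept)" "disp" "wt"
```

Because the formula is an object in its own right, the same description can be stored once and passed to different modeling functions that accept a formula.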

We then explored the semantics of what defines a model. A machine learning model like linear regression can utilize algorithms like gradient descent to do its background optimization procedures. You call linear regression in R by using the lm() function and then extract the coefficients from the model, using those to build your equation.
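The sketch below shows both ideas side by side: a hand-written gradient descent loop for a one-feature linear regression, and the same fit obtained from lm() with its coefficients extracted by coef(). Note that lm() itself does not run gradient descent; it solves the least-squares problem directly through a QR decomposition. The column choice (mpg against disp), the standardization of disp, the learning rate, and the iteration count are all assumptions made so that this toy loop converges quickly.

```r
# Standardize the predictor so one fixed learning rate works well.
x <- as.numeric(scale(mtcars$disp))
y <- mtcars$mpg
X <- cbind(1, x)            # design matrix: intercept column plus feature

theta <- c(0, 0)            # starting guess for (intercept, slope)
learning_rate <- 0.1        # assumed value, chosen for illustration
for (i in seq_len(1000)) {
  residuals <- as.numeric(X %*% theta) - y
  # Gradient of the cost (1 / 2n) * sum(residuals^2) with respect to theta
  gradient <- as.numeric(t(X) %*% residuals) / length(y)
  theta <- theta - learning_rate * gradient
}

# The same model through lm(), then the coefficients as an equation.
fit <- lm(y ~ x)
b <- unname(coef(fit))
equation <- sprintf("mpg = %.3f + (%.3f) * disp_scaled", b[1], b[2])
print(equation)
```

After enough iterations the values in theta agree with coef(fit) to many decimal places, which is a useful sanity check when experimenting with your own optimization code.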

An important step with machine learning and modeling in general is to understand the limits of the models. Having a robust model of a complex set of data does not prevent the model itself from being limited in scope from a time perspective, as we saw with our mtcars data. Further, all models have some kind of error tied to them. We explore error assessment on a model-by-model basis, given that we can’t directly compare some types of models to others.
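For a regression model, one common way to put a number on that error is through the residuals. The following sketch assumes a simple mpg ~ disp model on mtcars; the metrics shown (RMSE, MAE, R-squared) are standard choices for regression and do not carry over directly to other model types, such as classifiers.

```r
fit <- lm(mpg ~ disp, data = mtcars)
res <- residuals(fit)             # observed minus fitted values

rmse <- sqrt(mean(res^2))         # root mean squared error, in mpg units
mae  <- mean(abs(res))            # mean absolute error, in mpg units
r2   <- summary(fit)$r.squared    # share of variance explained

# One kind of limit: far outside the observed range of disp, the fitted
# line predicts a negative fuel efficiency, which is physically meaningless.
far_out <- predict(fit, newdata = data.frame(disp = 1000))
```

The out-of-range prediction is only one example of a model's limits; a model trained on decades-old cars is just as limited when applied to modern ones, even for inputs inside the observed range.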

Lots of machine learning models rely on complicated statistical algorithms to compute what we want. In this book, we cover the basics of these algorithms, but focus more on implementation and interpretation of the code. When statistical concepts become more of a focus than the underlying code for a given chapter, we give special attention to those concepts in the appendixes where appropriate. The statistical techniques that go into how we shape the data for training and testing purposes, however, are discussed in detail. Oftentimes, it is very important to know how to specifically tune the machine learning model of choice, which requires good knowledge of how to handle training sets before passing test data through the fully optimized model.
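A minimal sketch of shaping data for training and testing might look like the following. The 80/20 split, the seed value, and the mpg ~ disp model are assumptions chosen for illustration, not recommendations.

```r
set.seed(42)   # arbitrary seed, used only to make the split repeatable

n <- nrow(mtcars)
train_idx <- sample(seq_len(n), size = floor(0.8 * n))

train <- mtcars[train_idx, ]    # rows used to fit the model
test  <- mtcars[-train_idx, ]   # held-out rows, never seen during fitting

fit <- lm(mpg ~ disp, data = train)

# Evaluate on the held-out rows only.
pred <- predict(fit, newdata = test)
test_rmse <- sqrt(mean((test$mpg - pred)^2))
```

Keeping the test rows out of the fitting step is what makes test_rmse an honest estimate of how the model behaves on data it has not seen.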

To cap off this chapter, we made the case for why R is a suitable tool for machine learning. R has its pedigree and history in the field of statistics, which makes it a good platform on which to build modeling frameworks that utilize those statistics. Although some operations in R can be a little different from those in other programming languages, on the whole R is a relatively simple-to-use interface for a lot of complicated machine learning concepts and functions.

Being an open source programming language, R offers a lot of cutting-edge machine learning models and statistical algorithms. This can be a double-edged sword in terms of help files or manual pages, but this book aims to help simplify some of the more impenetrable examples encountered when looking for help.

In Chapter 2, we explore some of the most popular machine learning models and how we use them in R. Each model is presented in an introductory fashion with some worked examples. We further expand on each subject in a more in-depth dedicated chapter for each topic.
