[Figure 2 diagram labels. Panels: Self-supervised Task (reconstruction), Supervised Task (Transformer with Query $Q^{sup}$, Key $F_t$, Value $F_t$). Operations: Concatenate, Product. Inputs: source and target images.]

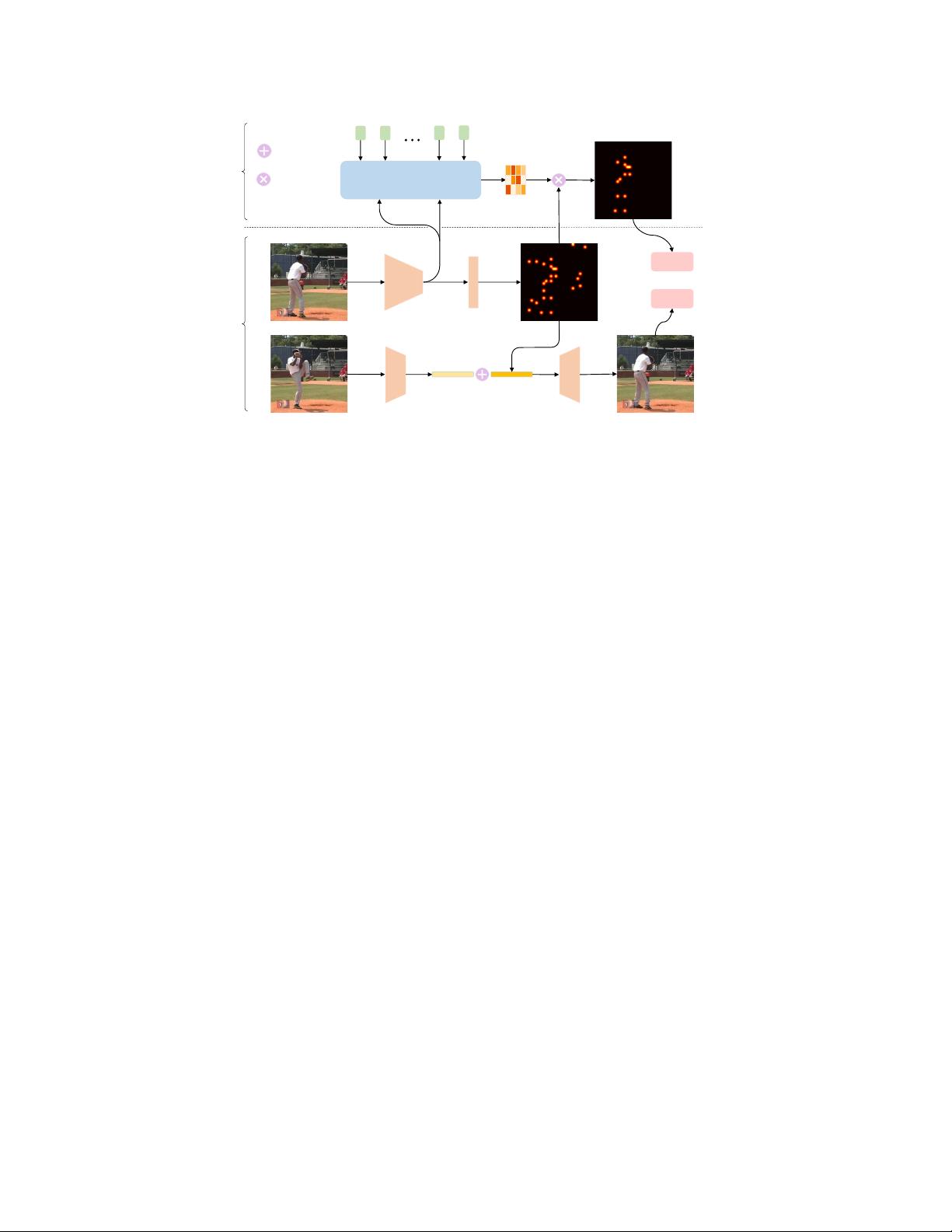

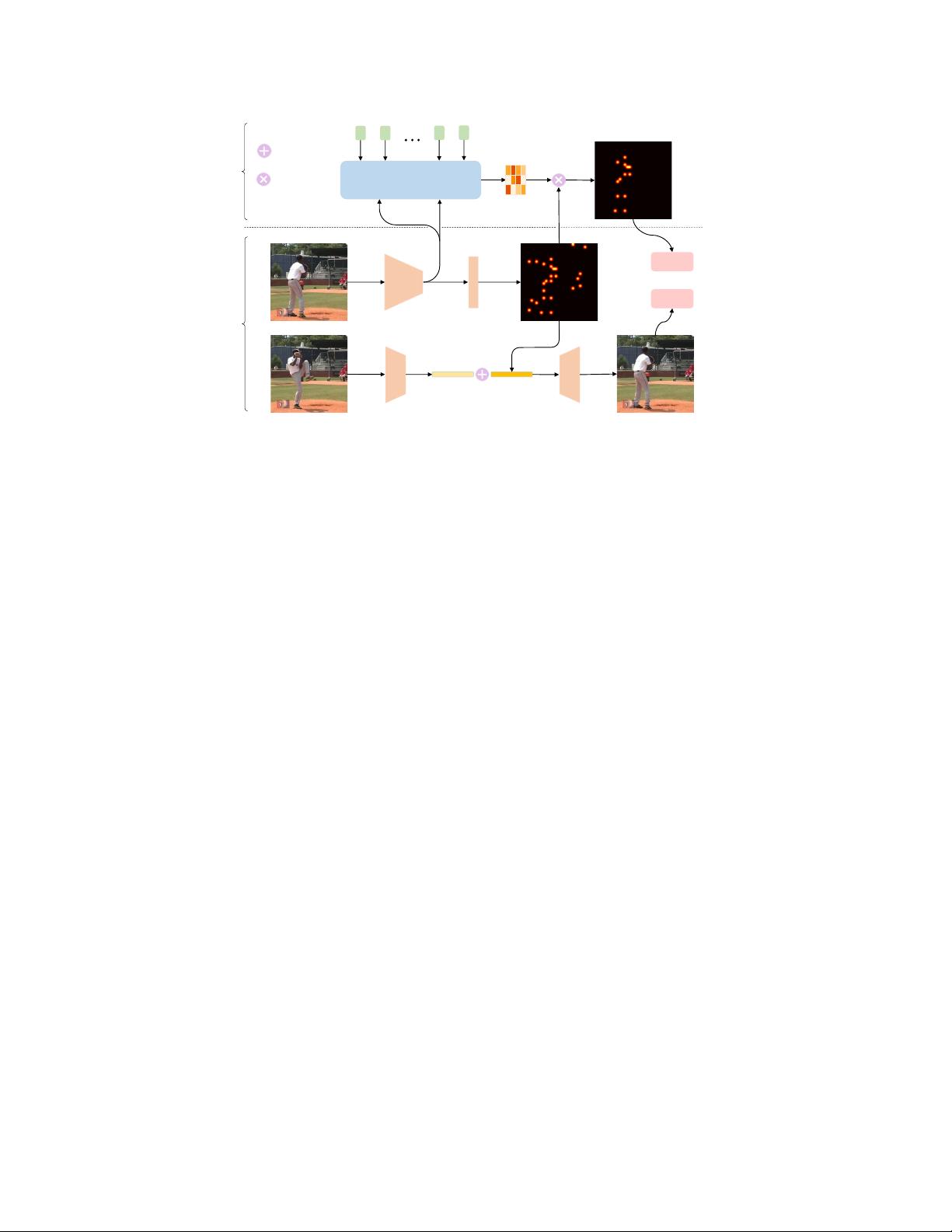

Figure 2: The proposed pipeline. 1) Self-supervised task for personalization. In the middle stream, the encoder $\phi$ encodes the target image into feature $F_t$. Then $F_t$ is fed into the self-supervised head $\psi^{self}$, obtaining self-supervised keypoint heatmaps $H^{self}$. Passing $H^{self}$ into a keypoint encoder (skipped in the figure) leads to keypoint feature $F^{kp}_t$. In the bottom stream, a source image is forwarded to an appearance extractor $\phi^{app}$, which leads to appearance feature $F^{app}_s$. Together, a decoder reconstructs the target image using the concatenated $F^{app}_s$ and $F^{kp}_t$. 2) Supervised task with Transformer. On the top stream, a Transformer predicts an affinity matrix $W$ given learnable keypoint queries $Q^{sup}$ and $F_t$. The final supervised heatmaps $H^{sup}$ are given as a weighted sum of $H^{self}$ using $W$.
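The weighted sum in the caption can be illustrated with a minimal sketch in plain Python. It assumes the affinity matrix is already given as a list of rows, one row per supervised keypoint and one weight per self-supervised heatmap; the function name `weighted_sum_heatmaps` and these shapes are illustrative assumptions, and how the Transformer produces or normalizes the weights is not modeled here.

```python
def weighted_sum_heatmaps(affinity, self_heatmaps):
    """Combine self-supervised heatmaps into supervised ones.

    affinity:      K_sup rows, each holding K_self weights (assumed layout).
    self_heatmaps: K_self heatmaps, each a height x width nested list.
    Returns K_sup heatmaps of the same height x width.
    """
    height = len(self_heatmaps[0])
    width = len(self_heatmaps[0][0])
    sup_heatmaps = []
    for weights in affinity:
        # Each output pixel is the weighted sum of that pixel across all
        # self-supervised heatmaps.
        combined = [
            [sum(w * h[r][c] for w, h in zip(weights, self_heatmaps))
             for c in range(width)]
            for r in range(height)
        ]
        sup_heatmaps.append(combined)
    return sup_heatmaps
```

With two one-row heatmaps `[[1.0, 0.0]]` and `[[0.0, 1.0]]`, an affinity row of `[0.5, 0.5]` blends them equally, while `[1.0, 0.0]` simply copies the first.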

simple extractor $\phi^{app}$ (see the bottom stream in Figure 2). The extraction of keypoint information from the target image follows three steps (see also the middle stream in Figure 2). First, the target image $I_t$ is forwarded to the encoder $\phi$ to obtain the shared feature $F_t$. The self-supervised head $\psi^{self}$ further encodes the shared feature $F_t$ into heatmaps $H^{self}_t$. Note that the number of channels in $H^{self}_t$ equals the number of self-supervised keypoints. Second, $H^{self}_t$ is normalized with a Softmax function and thus becomes condensed keypoints. Third, the heatmaps are replaced with fixed Gaussian distributions centered at the condensed points, which serve as the keypoint information $F^{kp}_t$. These three steps create a bottleneck on keypoint information: there is not enough capacity to encode appearance features, which avoids trivial solutions.
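The last two steps of this bottleneck can be sketched in plain Python. The sketch makes two assumptions that the text does not state: the "condensed" keypoint is taken to be the probability-weighted mean location of the Softmax-normalized heatmap, and the Gaussian is isotropic with a hypothetical width `sigma`. All function names are illustrative.

```python
import math

def spatial_softmax(heatmap):
    """Normalize one height x width heatmap so its entries sum to 1."""
    peak = max(max(row) for row in heatmap)  # subtract max for stability
    exps = [[math.exp(v - peak) for v in row] for row in heatmap]
    total = sum(sum(row) for row in exps)
    return [[v / total for v in row] for row in exps]

def condense(prob):
    """Assumption: the keypoint is the expected (row, col) under prob."""
    y = sum(r * v for r, row in enumerate(prob) for v in row)
    x = sum(c * v for row in prob for c, v in enumerate(row))
    return y, x

def gaussian_map(center, height, width, sigma=1.0):
    """Render a fixed-width isotropic Gaussian centered at the keypoint."""
    cy, cx = center
    return [
        [math.exp(-((r - cy) ** 2 + (c - cx) ** 2) / (2 * sigma ** 2))
         for c in range(width)]
        for r in range(height)
    ]

def keypoint_bottleneck(heatmaps, sigma=1.0):
    """Map K raw heatmaps to K Gaussian maps that keep only locations."""
    out = []
    for h in heatmaps:
        center = condense(spatial_softmax(h))
        out.append(gaussian_map(center, len(h), len(h[0]), sigma))
    return out
```

Because every output channel is a Gaussian of the same fixed shape, the only information that survives per channel is a pair of coordinates, which is what prevents appearance from leaking through this stream.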

The objective of the self-supervised task is to reconstruct the target image with a decoder using both appearance and keypoint features: $\hat{I}_t = \phi^{render}(F^{app}_s, F^{kp}_t)$. Since the bottleneck structure of the target stream limits the information to be passed in the form of keypoints, the image reconstruction enforces the disentanglement, and the network has to borrow appearance information from the source stream. The perceptual loss [29] and L2 distance are used as the reconstruction objective,

$$\mathcal{L}^{self} = \mathrm{PerceptualLoss}\left(I_t, \hat{I}_t\right) + \left\lVert I_t - \hat{I}_t \right\rVert^2 \tag{1}$$
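The structure of this objective can be sketched as follows, in plain Python on flattened images. The perceptual term is approximated here as a squared distance between features from a fixed feature function; `feature_fn` is a hypothetical stand-in for a pretrained network, and the exact layers and weighting used in [29] are not reproduced.

```python
def squared_l2(a, b):
    """Sum of squared differences between two equally sized sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def reconstruction_loss(target, recon, feature_fn):
    """Perceptual term (feature-space distance) plus pixel-space squared L2.

    feature_fn is a hypothetical stand-in for a fixed pretrained network
    that maps an image to a feature sequence.
    """
    perceptual = squared_l2(feature_fn(target), feature_fn(recon))
    pixel = squared_l2(target, recon)
    return perceptual + pixel

def toy_features(image):
    """Toy feature function: differences between neighboring pixels."""
    return [b - a for a, b in zip(image, image[1:])]
```

For example, with `target = [0, 1, 2]` and `recon = [0, 1, 4]`, the pixel term is 4 and the toy feature term is also 4, giving a total of 8; a perfect reconstruction gives 0.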

Instead of self-supervised tasks like image rotation prediction [18] or colorization [70], choosing an explicitly related self-supervised keypoint task in joint training naturally preserves or even improves performance, and it is more beneficial to test-time personalization. Note that our method requires only the label of a single image together with unlabeled samples belonging to the same person. Compared to multiple labeled samples of the same person, or even more costly consecutively labeled video, such data is much easier and more efficient to acquire.

3.1.2 Supervised Keypoint Estimation with a Transformer

A natural and basic choice for supervised keypoint estimation is to use an unshared supervised head $\psi^{sup}$ to predict supervised keypoints from $F_t$. However, despite the effectiveness of multi-task learning on the two pose estimation tasks, their relation remains superficial. As similar tasks do not necessarily help each other even when sharing features, we propose to use a Transformer decoder to further strengthen their coupling. The Transformer decoder models the relation between
