Machine Learning for Electronic Design Automation: A Survey x:9

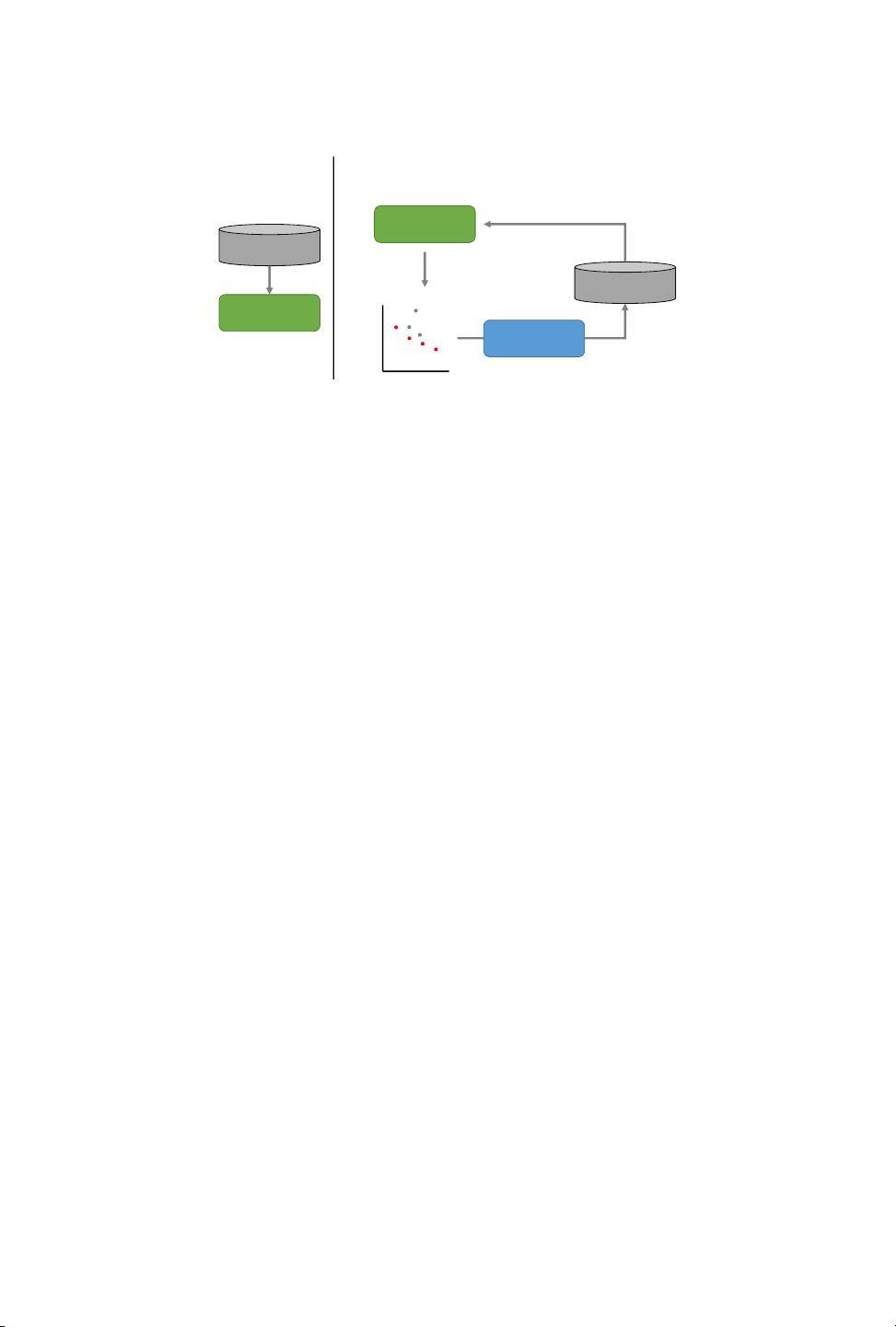

SA/GA-Based

Design Space

Explorer

Local Search

ML Predictor

Meta Search

New search trajectory to

improve the predictor

Good starting states to find

better designs

Fig. 5. Overview of the STAGE algorithm (reproduced from [74]).

where

𝑇 [·]

is the matrix trace operator and

𝜇 > 0

. The authors interprete their solution as sampling

from a set

˜

K that span a linear space, to retain most of the information of K [93].

PAL [

177

] is proposed for general active learning scenarios, and is demonstrated by a sorting

network synthesis DSE problem in the paper. It uses Gaussian Process (GP) to predict Pareto-

optimal points in design space . The models predict the objective functions to identify points that

are Pareto-optimal with high probabilities. A point

𝑥

that has not been sampled is predicted as

ˆ

𝑓 (𝑥) = 𝜇 (𝑥)

and

𝜎 (𝑥)

is interpreted as the uncertainty of the prediction which can be captured by

the hyperrectangle

𝑄

𝜇,𝜎,𝛽

(𝑥) =

n

𝑦 : 𝜇 (𝑥) − 𝛽

1/2

𝜎 (𝑥) ⪯ 𝑦 ⪯ 𝜇 (𝑥) + 𝛽

1/2

𝜎 (𝑥)

o

,

where

𝛽

is a scaling parameter to be chosen. PAL focuses on accurately predicting points near the

Pareto frontier, instead of the whole design space. In every iteration, the algorithm classies samples

into three groups: Pareto-optimal, Non-Pareto-optimal, and uncertain ones. The next design point

to evaluate is the one with the largest uncertainty, which intuitively has more information to

improve the model. The training process is terminated when there are no uncertain points. The

points classied as Pareto-optimal are then returned.

ATNE [

109

] utilizes Random Forest (RF) to aid the DSE process. This work uses a Pareto iden-

tication threshold that adapts to the estimated inaccuracy of the RF regressor and eliminates

the non-Pareto-optimal designs incrementally. Instead of focusing on improving the accuracy of

the learner, ATNE focuses on estimating and minimizing the risk of losing “good” designs due to

learning inaccuracy.

3.2.2 Machine Learning for Improving Other Optimization Algorithms. In this part, we summarize

three studies that use ML techniques to improve classical optimization algorithms.

STAGE [

74

] is proposed for DSE of many-core systems. The motivating observation of STAGE is

that the performance of simulated annealing is highly sensitive to the starting point of the search

process. The authors build an ML model to learn which parts of the design space should be focused

on, eliminating the times of futile exploration [

13

]. The proposed strategy is divided into two stages.

The rst stage (local search) performs a normal local search, guided by a cost function based on the

designer’s goals. The second stage (meta search) tries to use the search trajectories from previous

local search runs to learn to predict the outcome of local search given a certain starting point [

74

].

Fast Simulated Annealing (FSA) [

104

] utilizes the decision tree to improve the performance of

SA. Decision tree learning is a widely used method for inductive inference. The HLS pragmas are

taken as input features. FSA rst performs standard SA to generate enough training sets to build

ACM Trans. Des. Autom. Electron. Syst., Vol. x, No. x, Article x. Publication date: January 0000.