
Using neural nets to recognize handwritten digits

where the sum is over all the weights, $w_j$, and $\partial\,\mathrm{output}/\partial w_j$ and $\partial\,\mathrm{output}/\partial b$ denote partial derivatives of the output with respect to $w_j$ and $b$, respectively. Don’t panic if you’re not comfortable with partial derivatives! While the expression above looks complicated, with all the partial derivatives, it’s actually saying something very simple (and that is very good news): $\Delta\mathrm{output}$ is a linear function of the changes $\Delta w_j$ and $\Delta b$ in the weights and bias.

This linearity makes it easy to choose small changes in the weights and biases to achieve

any desired small change in the output. So while sigmoid neurons have much of the same

qualitative behavior as perceptrons, they make it much easier to figure out how changing

the weights and biases will change the output.
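The linearity claim can be checked numerically for a single sigmoid neuron. This is a minimal sketch with made-up weights, inputs, and perturbations. The helper names `neuron_output` and `linear_change` are illustrative and do not come from the text. For a sigmoid neuron with $z = w \cdot x + b$, the partial derivatives are $\partial\,\mathrm{output}/\partial w_j = \sigma'(z)\,x_j$ and $\partial\,\mathrm{output}/\partial b = \sigma'(z)$, where $\sigma'(z) = \sigma(z)(1 - \sigma(z))$. The sketch compares the true change in output with the linear estimate built from these derivatives.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(w, x, b):
    """Output of one sigmoid neuron, sigma(w . x + b)."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return sigmoid(z)

def linear_change(w, x, b, dw, db):
    # First-order estimate: sum_j (d output/d w_j) * dw_j + (d output/d b) * db.
    # For a sigmoid neuron d output/d w_j = sigma'(z) * x_j and d output/d b = sigma'(z),
    # with sigma'(z) = sigma(z) * (1 - sigma(z)). The result is linear in dw and db.
    s = neuron_output(w, x, b)
    slope = s * (1.0 - s)
    return sum(slope * xj * dwj for xj, dwj in zip(x, dw)) + slope * db

# Illustrative values (not from the text).
w, x, b = [0.5, -1.2, 0.8], [1.0, 0.3, 0.7], 0.1
dw, db = [0.01, -0.02, 0.005], 0.01

new_w = [wj + d for wj, d in zip(w, dw)]
exact = neuron_output(new_w, x, b + db) - neuron_output(w, x, b)
approx = linear_change(w, x, b, dw, db)
print(f"exact change:  {exact:.6f}")
print(f"linear approx: {approx:.6f}")
```

The two numbers agree to within a fraction of a percent for these small changes. Halving every change should roughly quarter the gap between them, as expected of a first-order approximation.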

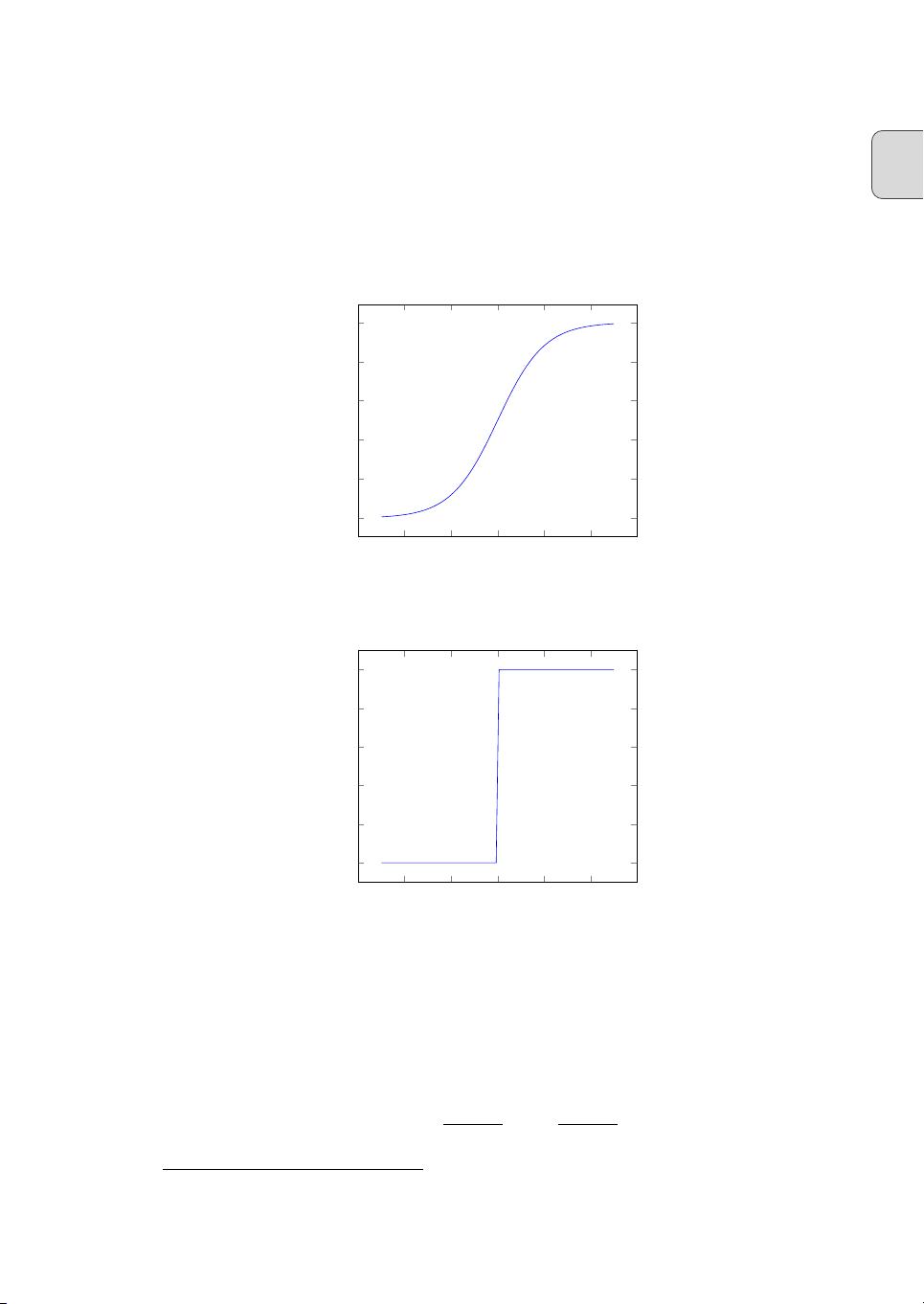

If it’s the shape of $\sigma$ which really matters, and not its exact form, then why use the particular form used for $\sigma$ in Equation 1.3? In fact, later in the book we will occasionally consider neurons where the output is $f(w \cdot x + b)$ for some other activation function $f(\cdot)$. The main thing that changes when we use a different activation function is that the particular values for the partial derivatives in Equation 1.5 change. It turns out that when we compute those partial derivatives later, using $\sigma$ will simplify the algebra, simply because exponentials have lovely properties when differentiated. In any case, $\sigma$ is commonly used in work on neural nets, and is the activation function we’ll use most often in this book.
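The remark about exponentials can be made concrete. This is a minimal sketch using plain Python floats. `neuron` computes $f(w \cdot x + b)$ for any activation `f`. For such a neuron, $\partial\,\mathrm{output}/\partial b = f'(z)$, so swapping $f$ changes exactly these derivative values. The sketch checks the closed form $\sigma'(z) = \sigma(z)(1 - \sigma(z))$ against a central finite difference. For comparison, it also checks $\tanh'(z) = 1 - \tanh^2(z)$. The helper names and the choice of tanh as the alternative activation are illustrative assumptions, not taken from the text.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    # Differentiating 1 / (1 + e^(-z)) gives e^(-z) / (1 + e^(-z))^2,
    # which simplifies to sigma(z) * (1 - sigma(z)).
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_prime(z):
    # An alternative activation for comparison: d/dz tanh(z) = 1 - tanh(z)^2.
    return 1.0 - math.tanh(z) ** 2

def neuron(w, x, b, f):
    """Generic neuron: returns f(w . x + b) for an activation function f."""
    return f(sum(wj * xj for wj, xj in zip(w, x)) + b)

def numerical_derivative(f, z, h=1e-6):
    # Central finite difference, used only to check the closed forms above.
    return (f(z + h) - f(z - h)) / (2.0 * h)

w, x, b = [0.5, -1.2], [1.0, 0.3], 0.1
for name, f in (("sigmoid", sigmoid), ("tanh", math.tanh)):
    print(name, "output:", round(neuron(w, x, b, f), 4))

for z in (-2.0, 0.0, 1.5):
    print(f"z = {z:4.1f}  closed form = {sigmoid_prime(z):.6f}  "
          f"finite difference = {numerical_derivative(sigmoid, z):.6f}")
```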

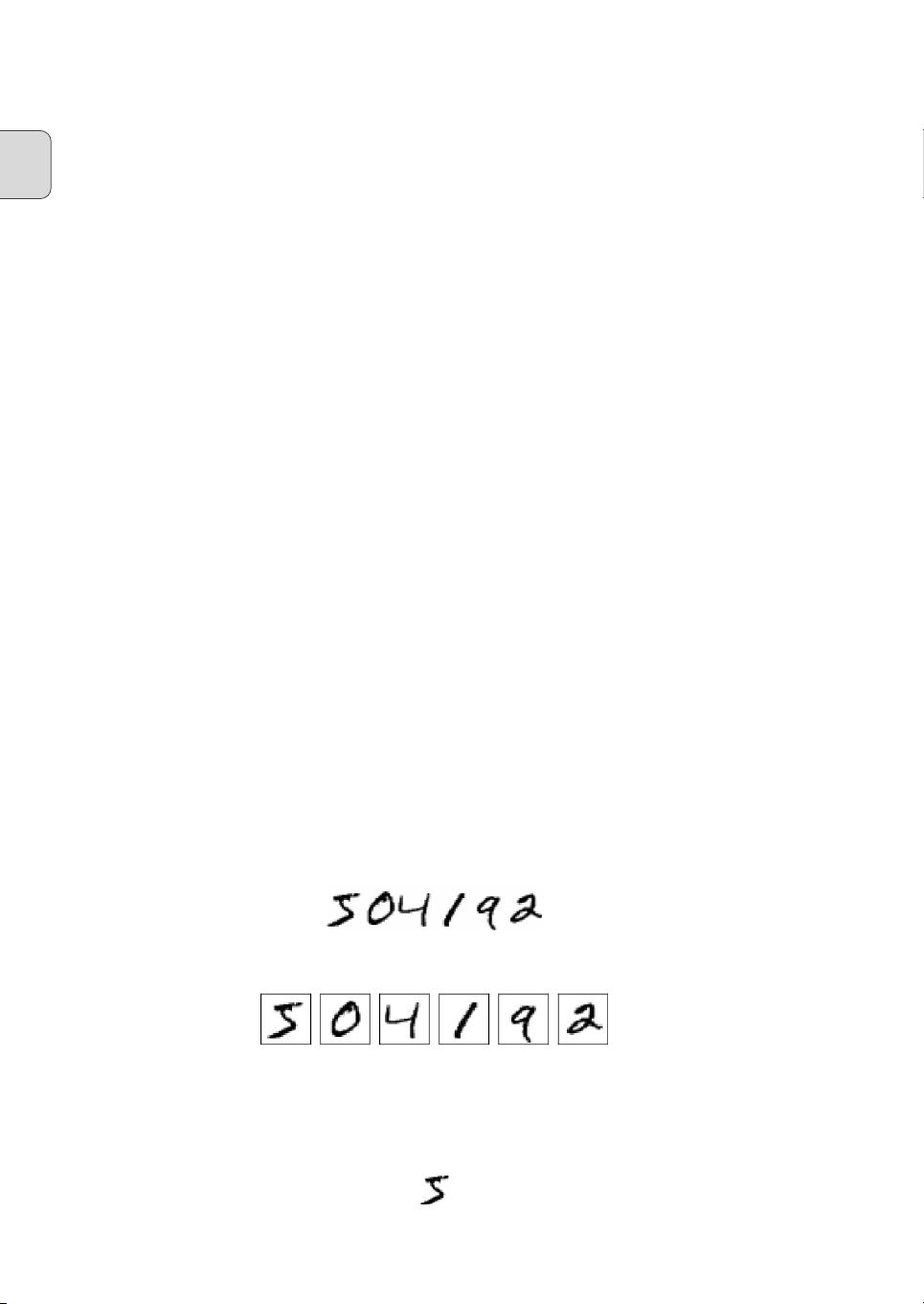

How should we interpret the output from a sigmoid neuron? Obviously, one big difference

between perceptrons and sigmoid neurons is that sigmoid neurons don’t just output 0 or 1. They can have as output any real number between 0 and 1, so values such as 0.173… and 0.689… are legitimate outputs. This can be useful, for example, if we want to use the

output value to represent the average intensity of the pixels in an image input to a neural

network. But sometimes it can be a nuisance. Suppose we want the output from the network

to indicate either “the input image is a 9” or “the input image is not a 9”. Obviously, it’d be

easiest to do this if the output was a 0 or a 1, as in a perceptron. But in practice we can

set up a convention to deal with this, for example, by deciding to interpret any output of at

least 0.5 as indicating a “9”, and any output less than 0.5 as indicating “not a 9”. I’ll always

explicitly state when we’re using such a convention, so it shouldn’t cause any confusion.
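The 0.5 convention is straightforward to write down. This is a minimal sketch that assumes the raw sigmoid output is available as a float. `is_nine` and its `threshold` parameter are hypothetical names. In exact arithmetic, $\sigma(0) = 1/2$ and $\sigma$ is increasing. So an output of at least 0.5 occurs precisely when $w \cdot x + b \ge 0$, and the convention amounts to thresholding the weighted input at zero, much as a perceptron does.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def is_nine(output, threshold=0.5):
    # Hypothetical helper implementing the convention in the text:
    # an output of at least 0.5 means "9", anything below means "not a 9".
    return output >= threshold

for z in (-3.0, -0.2, 0.0, 0.4, 2.5):
    out = sigmoid(z)
    label = "9" if is_nine(out) else "not a 9"
    print(f"w.x + b = {z:5.1f}  output = {out:.3f}  ->  {label}")
```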

Exercises

• Sigmoid neurons simulating perceptrons, part I

Suppose we take all the weights and biases in a network of perceptrons, and multiply them by a positive constant, $c > 0$. Show that the behavior of the network doesn’t change.

• Sigmoid neurons simulating perceptrons, part II

Suppose we have the same setup as the last problem: a network of perceptrons. Suppose also that the overall input to the network of perceptrons has been chosen. We won’t need the actual input value; we just need the input to have been fixed. Suppose the weights and biases are such that $w \cdot x + b \neq 0$ for the input $x$ to any particular perceptron in the network. Now replace all the perceptrons in the network by sigmoid neurons, and multiply the weights and biases by a positive constant $c > 0$. Show that in the limit as $c \to \infty$ the behavior of this network of sigmoid neurons is exactly the same as that of the network of perceptrons. How can this fail when $w \cdot x + b = 0$ for one of the perceptrons?
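The two exercises can be illustrated numerically for a single neuron. This is an illustration, not a proof. The sketch assumes the weighted input $z = w \cdot x + b$ is fixed, so scaling the weights and bias by $c$ scales $z$ by $c$. `perceptron` follows the step rule (output 1 if $w \cdot x + b > 0$, else 0), and the helper names are illustrative. For $z \neq 0$, $\sigma(cz)$ moves toward the perceptron’s output as $c$ grows. The row with $z = 0$ is the case the last question asks about.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, split into two branches so math.exp never overflows."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def perceptron(z):
    # Step rule: output 1 if w . x + b > 0, otherwise 0.
    return 1 if z > 0 else 0

scales = (1, 10, 100, 1000)

# Part I: a positive constant c never changes the sign of z = w . x + b,
# so every perceptron output is unchanged.
print("Part I holds on samples:",
      all(perceptron(c * z) == perceptron(z) for c in scales for z in (-0.3, 0.2, 0.0)))

# Part II: z stands for w . x + b at one neuron; scaling w and b by c scales z by c.
for z in (-0.3, 0.2, 0.0):
    outputs = ", ".join(f"{sigmoid(c * z):.4f}" for c in scales)
    print(f"z = {z:4.1f}  perceptron = {perceptron(z)}  sigmoid(c*z), c = {scales}: {outputs}")
```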

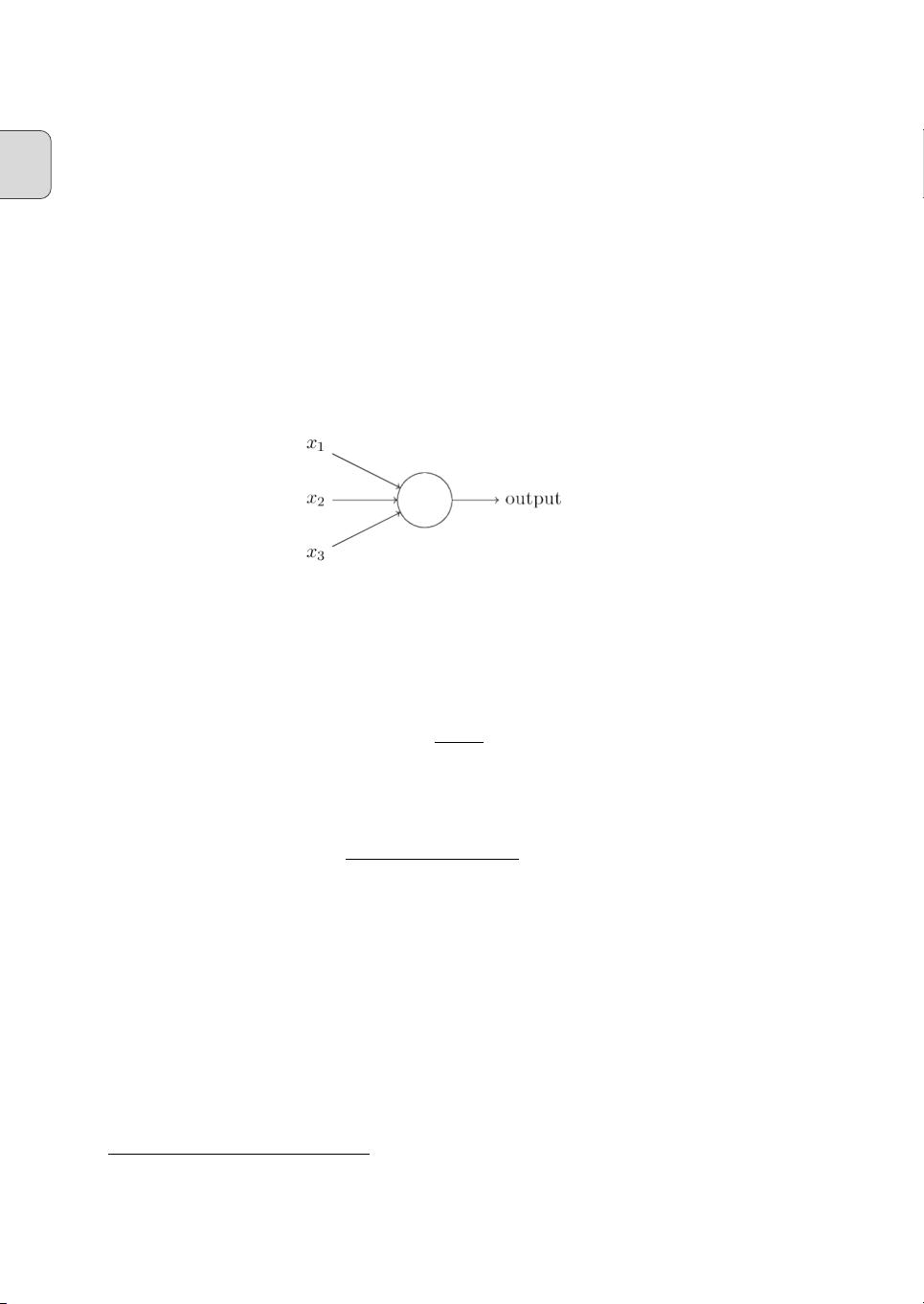

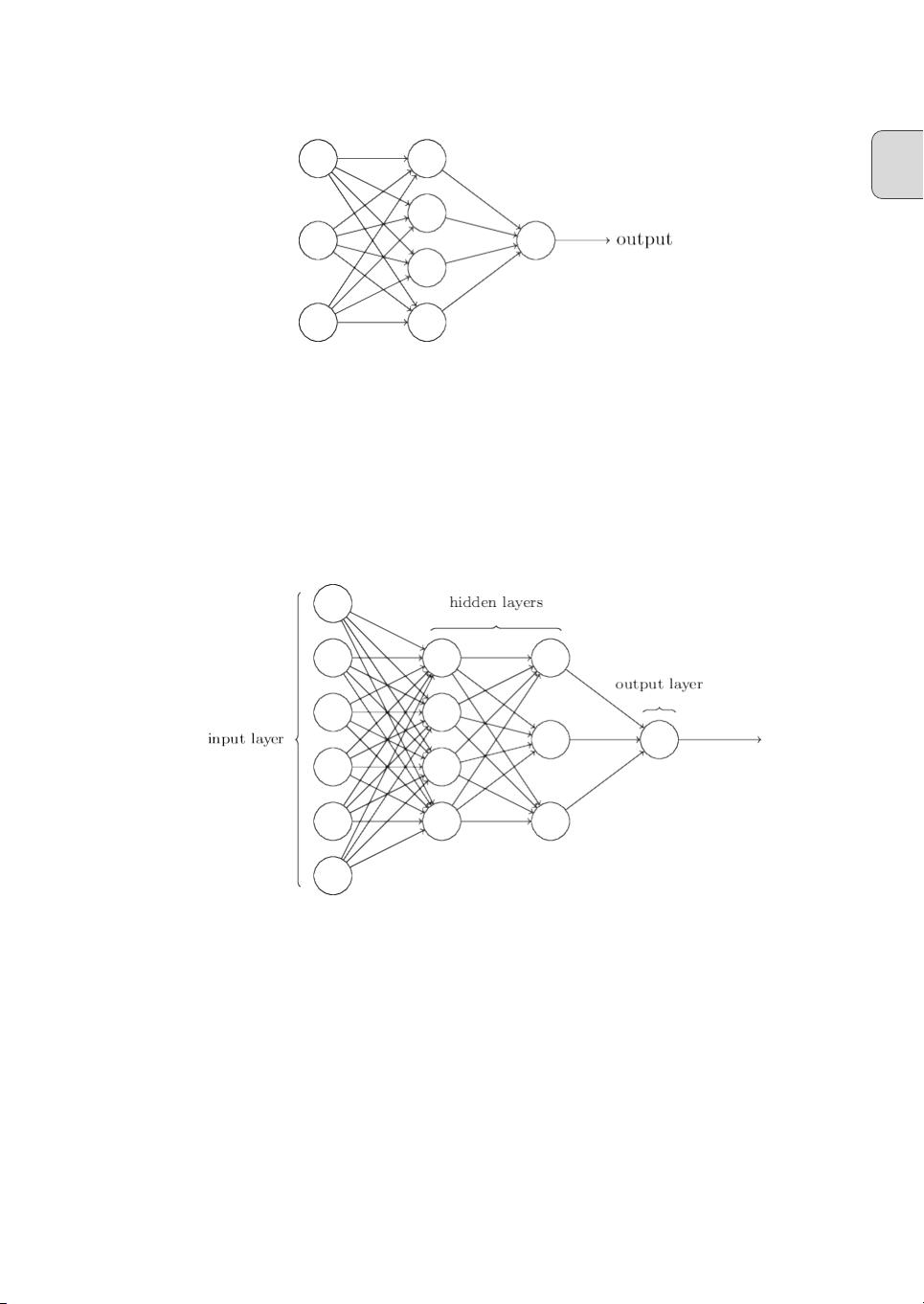

1.3 The architecture of neural networks

In the next section I’ll introduce a neural network that can do a pretty good job classifying

handwritten digits. In preparation for that, it helps to explain some terminology that lets us

name different parts of a network. Suppose we have the network:
