To answer a query q that requires a set R of blocks, P

decides in an online manner whether to use the current ver-

sions of data blocks in D, or to acquire the most recent

versions of blocks in R from S (and update D) by paying a

cost c

d

(R), which is called the data cost. If P decides not to

pay the data cost, and the version in D is outdated, there is

a query result penalty cost c

q

(R, q) for its inaccuracy. Let a

policy be an oracle that tells what next action for P to take,

given P’s current action and the distribution of states P is

believed to be in.

Definition 1. (Sequential Data Acquisition Problem)

An infinite sequence of queries are revealed to a query pro-

cessor P in an online manner. Upon receiving each query q,

P decides, also in an online fashion, whether or not to ac-

quire the data blocks R required by q from the data source

S. The sequential data acquisition problem for P is to find

an optimal policy π

∗

so as to minimize the long term costs,

which include both data costs and query result penalty costs,

for answering the sequence of queries.

The notion of “long term costs” will be made more precise

in Section 2.2 below (i.e., expected discounted sum of costs).

Analogous to DBMS blocks/pages, in this work, we assume

simple equal-size partitioning of blocks. For instance, in Ex-

ample 1, the sequence of data from each road can be treated

as a block; data tuples from each sensor in a sensor network

are a block. More sophisticated block partitioning is beyond

the scope of this paper. In addition, in these applications,

typically there are one or a few highly dynamic attributes

(which are to be kept track of), while other attributes (e.g.,

locations) are relatively stable/static. An example is the

multidimensional array model which is the most common

data model in scientific databases, where the dimension at-

tributes are usually static and in the clear. Most, if not all,

queries have predicates over ranges of the dimensions. The

block partitions should be based on stable/static attributes.

R can be easily determined if there is a predicate over

block-partitioning attributes. In the (arguably rare) event

that either (E1) there are predicates on static attributes,

but none of these attributes is aligned with block partitions,

or (E2) there are no predicates on static attributes, then we

use a simple two-step process. In the first step, we execute

the query using the existing data (without acquisition), and

get a version of qualified/required set of blocks R. In the

second step, we use R to determine the action of whether to

acquire the data in R (and re-run the query) or to just stop

there (returning the result from the first step). Note that in

the event of E2 above, R may be approximate (a precise R

would include all blocks of the table, as there is no predicate

on stable attributes).

2.2 Preliminaries

POMDP. Markov Decision Processes (MDPs) provide a

mathematical framework for modeling decision making in

situations where outcomes are partly random and partly

under the control of a decision maker [22]. MDPs are an

extension of Markov chains; the difference is the addition of

actions (allowing choice) and rewards (giving motivation).

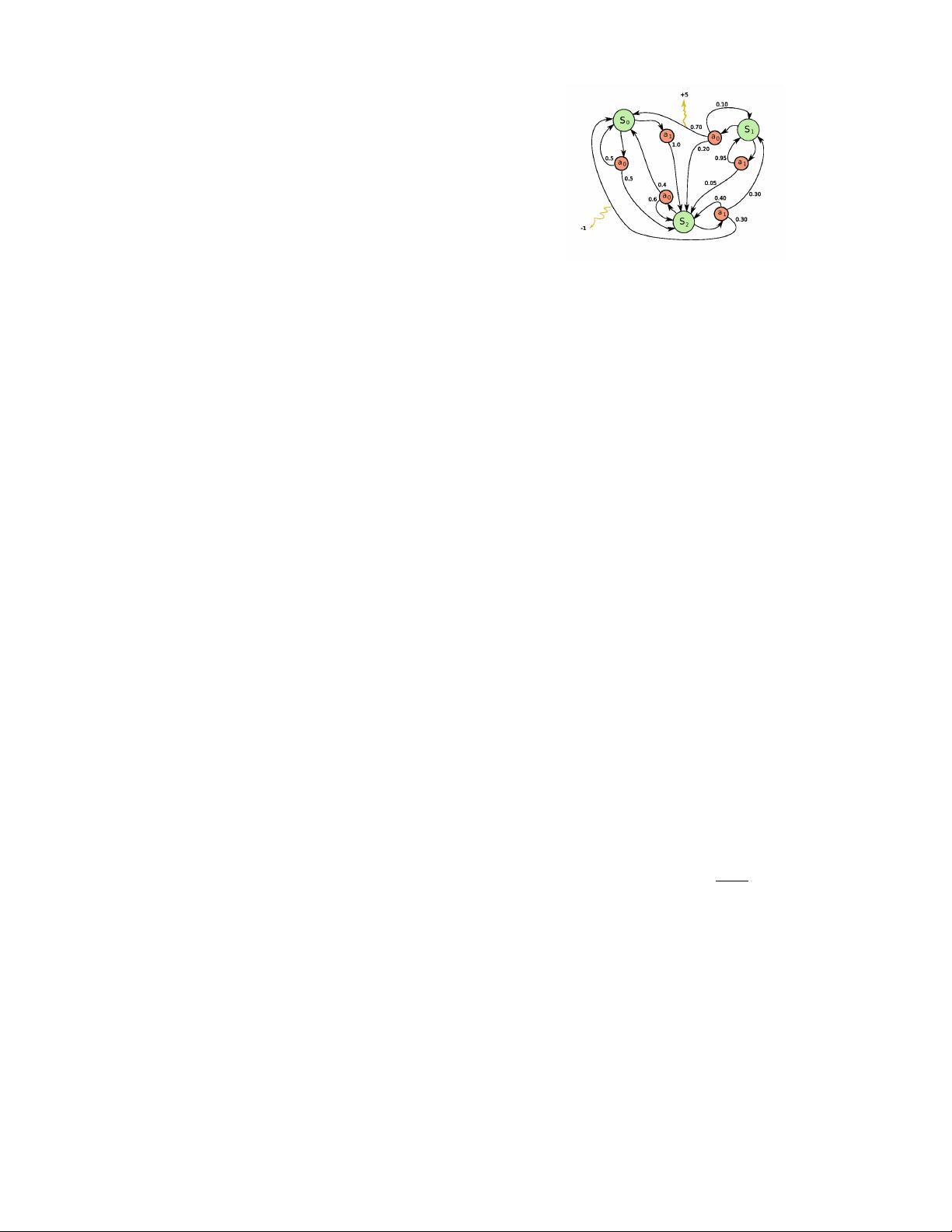

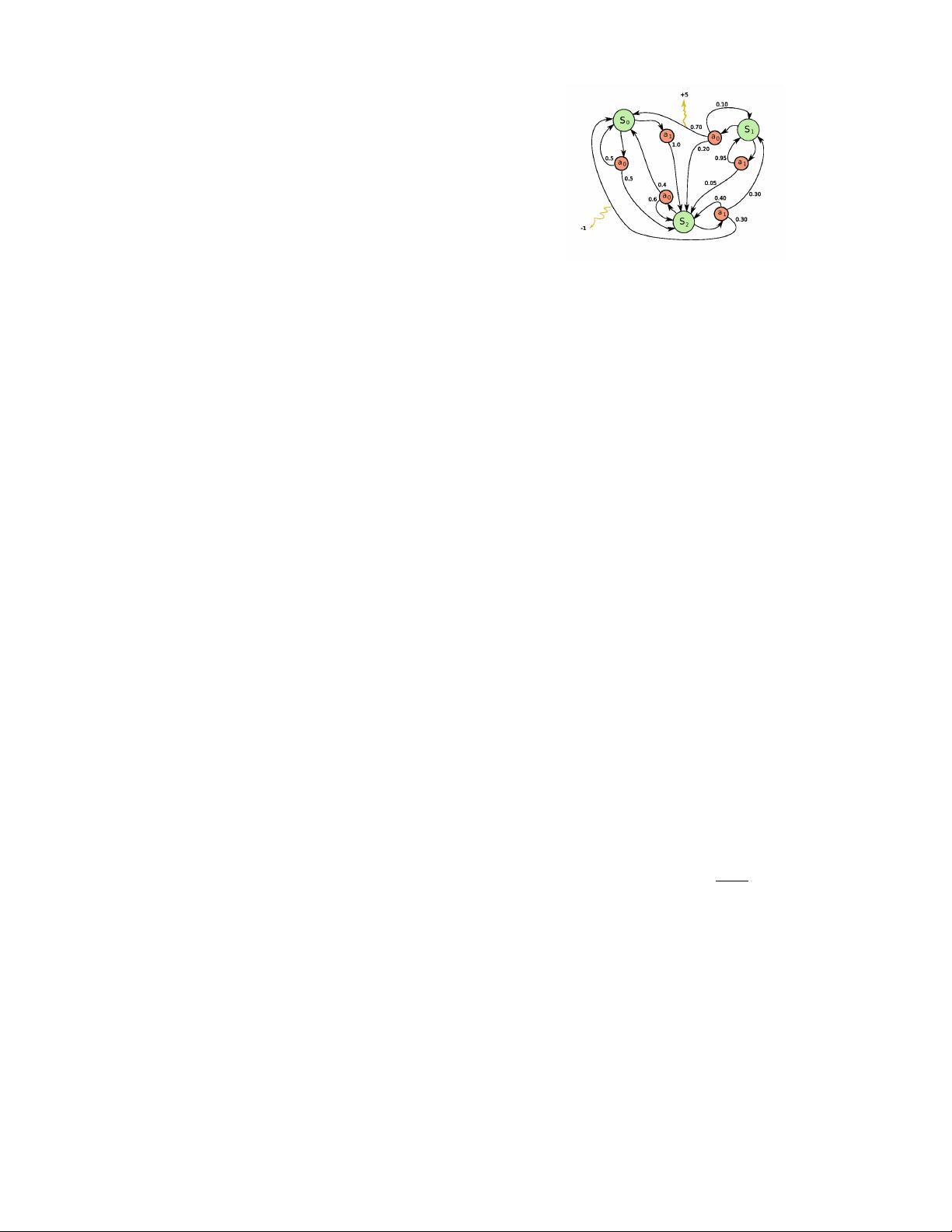

Figure 2 shows an example of a simple MDP with three

states s

0

, s

1

, s

2

and two actions a

0

, a

1

. A yellow squiggle

arrow shows the reward associated with the corresponding

transition black edge. Formally, an MDP is defined as a

tuple (S, A, T, R), where S is a set of states, A is a set of

actions, T is the transition function T (s, a, s

0

) = P r[s

t+1

=

Figure 2: A simple MDP with 3 states and 2 actions.

s

0

| s

t

= s, a

t

= a] (where s

t

and a

t

denote the state and

action at time t, resp.), and R(s, a) is the reward for execut-

ing action a in state s. The core problem of MDPs is to find

a policy for the decision maker: a function π that specifies

the action π(s) that the decision maker will choose when in

state s. The goal is to choose a policy π that will maximize

some cumulative function of the random rewards, typically

the expected discounted sum over a potentially infinite hori-

zon:

P

∞

t=0

γ

t

R(s

t

, π(s

t

)), where γ is called a discount factor

and satisfies 0 ≤ γ < 1. The larger the discount factor

(closer to 1), the more effect future rewards have on current

decision making. This is equivalent to minimizing expected

discounted sum of costs as in Definition 1.

In MDP, we assume that the decision maker knows the

environment state exactly; in other words, the environment

state must be fully observable. However, this assumption of-

ten does not hold in reality. A Partially Observable Markov

Decision Process (POMDP) is for this problem. In addition

to S, A, T , R, a POMDP has two extra elements: a set

of observations Ω and an observation function O(a, s

0

, o) =

P r[o

t+1

= o | a

t

= a, s

t+1

= s

0

]. There are a number of tools

for solving a POMDP model. We need to first define and

learn the S, A, T , R, Ω, and O elements, and provide them

to a POMDP solver, which in turn computes the optimal

policy. At runtime, when each query comes in, we interact

with a program and feed our observation to it. Accordingly,

the program consults the computed policy and notifies us

the next action. This step at runtime is instantaneous as

the policy is pre-computed only once. We refer the reader

to [22, 16] for the details of POMDP.

Locality-sensitive hashing. Locality-sensitive hashing

(LSH) is a method of performing probabilistic dimension re-

duction of high-dimensional data [19]. The idea is to hash

the input items so that similar items are mapped to the

same buckets with high probability. A key element is defin-

ing “similarity”. One metric we use is set similarity. In par-

ticular, the Jaccard similarity coefficient between two sets

A and B is defined as J(A, B) =

|A∩B|

|A∪B|

, which is a value

in [0,1] and measures the similarity of two sets (e.g., when

A = B, J(A, B) = 1). The Jaccard distance is 1 − J(A, B).

MinHash is a LSH mechanism based on this metric [10]. Our

goal is to hash a set of elements E. A simple version of Min-

Hash is to start with k independent conventional (random)

hash functions h

1

, ..., h

k

. Then the MinHash value for the

whole set E is the concatenation of k minimum hash values:

min

e∈E

h

1

(e), ..., min

e∈E

h

k

(e).

Q-learning. As surveyed by Kaelbling et al. [15], there

are mainly three types of model-free approaches in the liter-

ature: (1) Adaptive Heuristic Critic (AHC), (2) Q-learning,

and (3) Model-free learning with average reward. AHC ar-

chitectures are more difficult to work with than Q-learning

on a practical level [15]. It can be hard to get the relative

279