Mask-Guided Portrait Editing with Conditional GANs

Shuyang Gu¹, Jianmin Bao¹, Hao Yang², Dong Chen², Fang Wen², Lu Yuan²

¹University of Science and Technology of China, ²Microsoft Research

{gsy777,jmbao}@mail.ustc.edu.cn {haya,doch,fangwen,luyuan}@microsoft.com

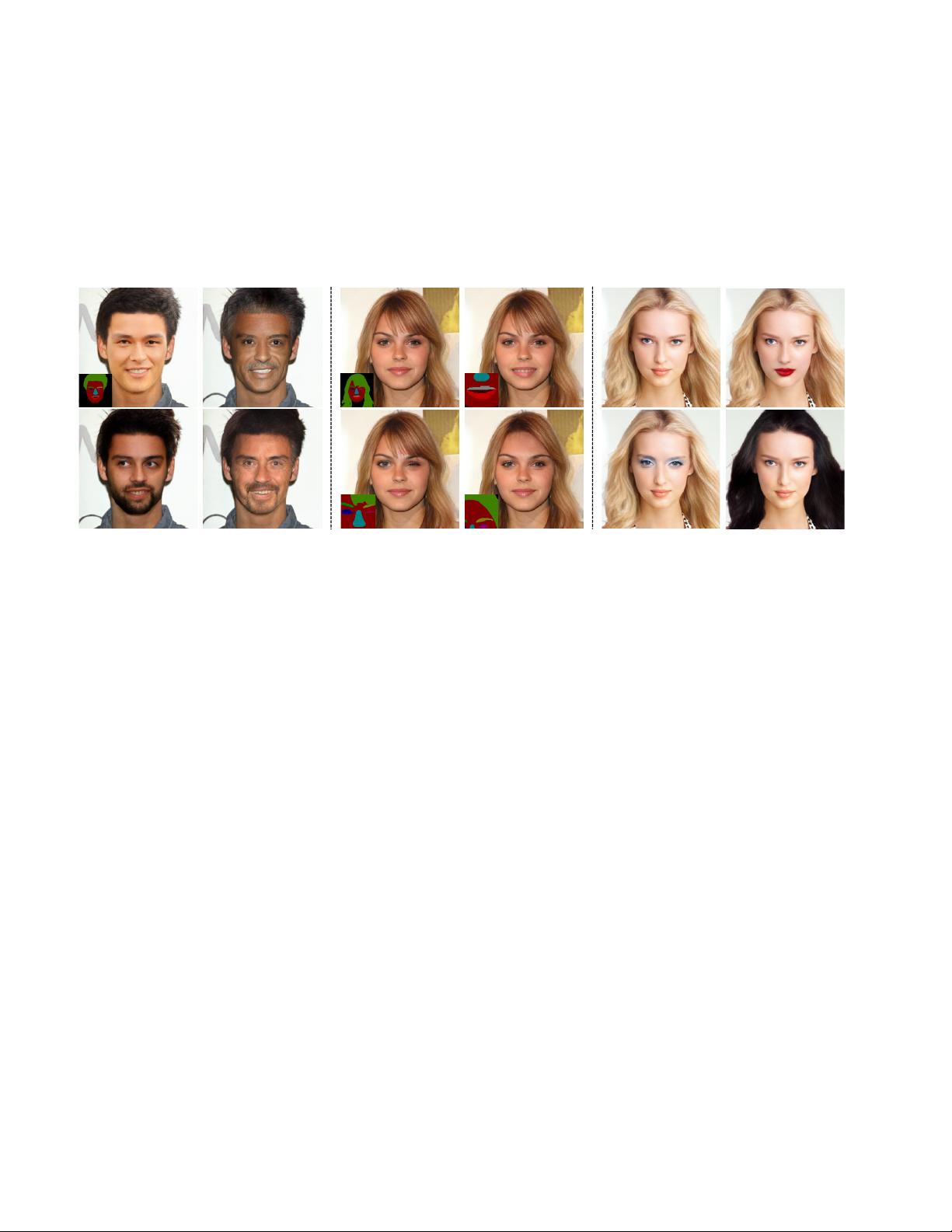

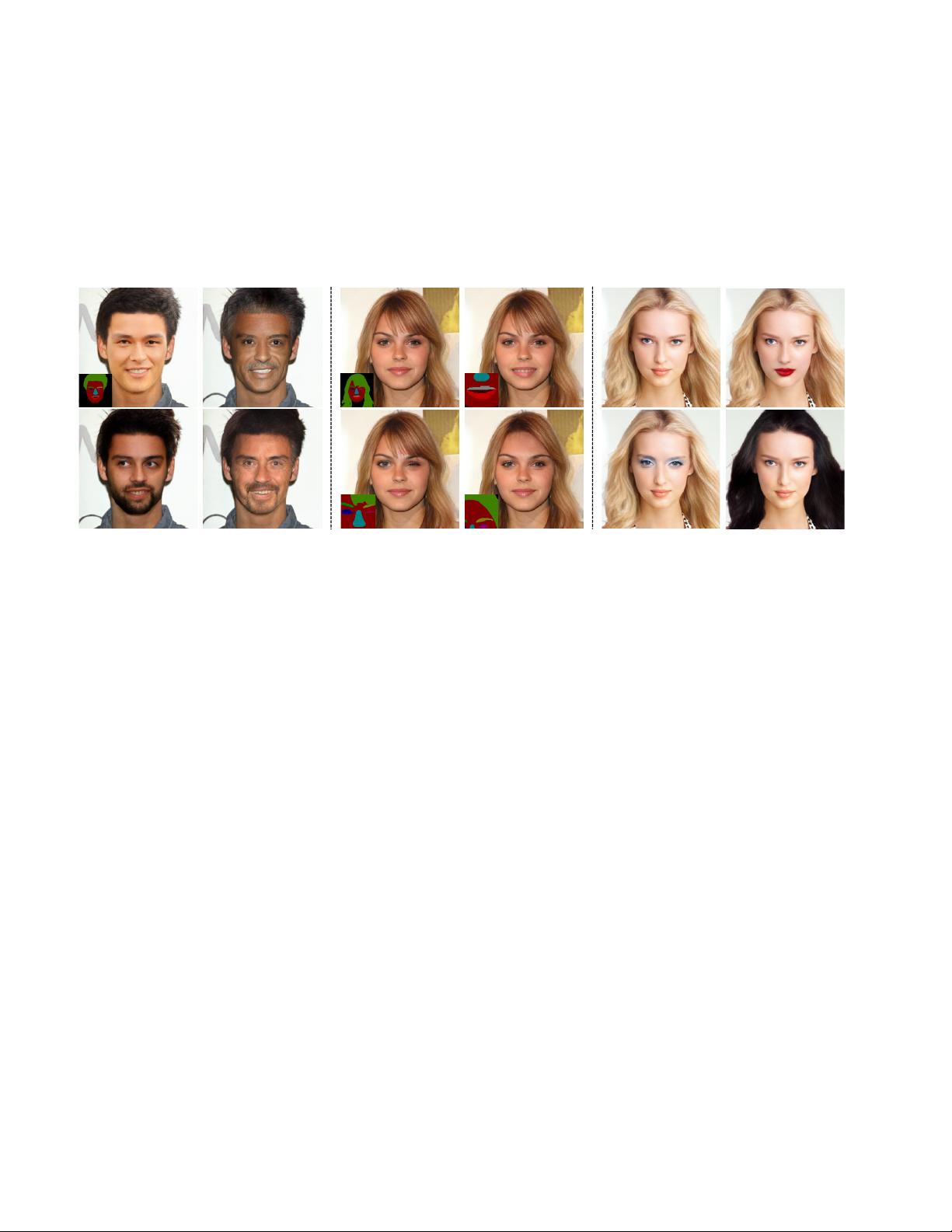

(a) Mask2image (b) Component editing (c) Component transfer

Figure 1: We propose a framework based on conditional GANs for mask-guided portrait editing. (a) Our framework can generate diverse and realistic faces using one input target mask (lower left corner in the first image). (b) Our framework allows us to edit the mask to change the shape of face components, such as the mouth, eyes, and hair. (c) Our framework also allows us to transfer the appearance of each component of a portrait, including hair color.

Abstract

Portrait editing is a popular subject in photo manipulation. The Generative Adversarial Network (GAN) has advanced the generation of realistic faces and enabled richer face editing. In this paper, we discuss three issues in existing techniques for portrait synthesis and editing: diversity, quality, and controllability. To address these issues, we propose a novel end-to-end learning framework that leverages conditional GANs guided by provided face masks to generate faces. The framework learns a separate feature embedding for every face component (e.g., mouth, hair, eye), which contributes to better correspondences for image translation and local face editing. With the mask, our network is applicable to many tasks, such as mask-driven face synthesis, face Swap+ (which includes hair in the swap), and local manipulation. As a form of data augmentation, it can also slightly boost the performance of face parsing.

1. Introduction

Portrait editing is of great interest in the vision and graphics community due to its potential applications in movies, gaming, photo manipulation and sharing, etc. People enjoy the magic that makes faces look more interesting, funny, and beautiful, which appears in a number of popular apps, such as Snapchat and Facetune.

Recently, advances in Generative Adversarial Networks (GANs) [16] have led to tremendous progress in synthesizing realistic faces [1, 29, 25, 12], including face aging [46], pose changing [44, 21], and attribute modification [4]. However, these existing approaches still suffer from quality issues, such as a lack of fine details in skin, difficulty in dealing with hair, and background blurring. Such artifacts make the generated faces look unrealistic.

To address these issues, one possible solution is to use a facial mask to guide generation. On the one hand, a face mask provides a good geometric constraint, which helps synthesize realistic faces. On the other hand, an accurate contour for each facial component (e.g., eye, mouth, hair) is necessary for local editing. Based on the face mask, some works [40, 14] achieve very promising results in portrait stylization. However, these methods focus on transferring the visual style (e.g., B&W, color, painting) from the reference face to the target face. They do not appear to be applicable to synthesizing different faces or changing face components.

Some GAN models have begun to integrate the face mask/skeleton for better image-to-image translation, for

arXiv:1905.10346v1 [cs.CV] 24 May 2019