
the path diversity by taking potential path correlation into account, which avoids underlying shared bottlenecks. In [19], our team presented a rate allocation model for best-path transfer and showed that it achieves the global optimum. However, none of the above works considers dynamic path selection that adapts to likely variations in current network conditions.

Yilmaz et al. [20] introduced non-renegable selective acknowledgements (NR-SACKs) to avoid retaining, in the sender buffer, gap-acknowledged data chunks that are no longer outstanding. NR-SACK allows the sender to free buffer space earlier and reuse it for new data chunks. Dreibholz et al. [21] presented a blocking fraction factor and proposed a preventive retransmission policy based on this factor for effective transmission. Adhari et al. [22] proposed an optimized strategy that improves send and receive buffer handling by preventing any single path from dominating buffer occupancy. These solutions achieve performance improvements. However, they do not provide a proper data distribution mechanism that ensures data packets arrive at the receiver in order as far as possible.
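The buffering difference behind NR-SACK can be made concrete with a minimal Python sketch of a sender buffer that receives either a conventional SACK or an NR-SACK. The class `SendBuffer` and its methods are hypothetical illustrations, not the mechanism of [20] or any SCTP stack's API, and TSN wraparound (serial number arithmetic) is ignored.

```python
from dataclasses import dataclass, field


@dataclass
class SendBuffer:
    """Hypothetical sender buffer keyed by TSN (illustration only)."""
    chunks: dict[int, bytes] = field(default_factory=dict)
    gap_acked: set[int] = field(default_factory=set)

    def on_sack(self, cum_tsn: int, gap_tsns: set[int]) -> None:
        # Chunks covered by the cumulative TSN ack can always be freed.
        for tsn in [t for t in self.chunks if t <= cum_tsn]:
            del self.chunks[tsn]
        # Gap-acked chunks are renegable: the receiver may still discard
        # them, so they are only remembered here, never freed.
        self.gap_acked = {t for t in self.gap_acked | gap_tsns if t > cum_tsn}

    def on_nr_sack(self, cum_tsn: int, nr_gap_tsns: set[int]) -> None:
        self.on_sack(cum_tsn, set())
        # Non-renegable gap acks: the receiver promises to keep these
        # chunks, so the sender can release their buffer space at once.
        for tsn in nr_gap_tsns:
            self.chunks.pop(tsn, None)

    def occupancy(self) -> int:
        """Bytes still held in the sender buffer."""
        return sum(len(c) for c in self.chunks.values())


if __name__ == "__main__":
    sack = SendBuffer({t: b"x" * 100 for t in range(1, 6)})
    sack.on_sack(cum_tsn=1, gap_tsns={3, 4})
    print(sack.occupancy())  # 400

    nr = SendBuffer({t: b"x" * 100 for t in range(1, 6)})
    nr.on_nr_sack(cum_tsn=1, nr_gap_tsns={3, 4})
    print(nr.occupancy())  # 200
```

With the same acknowledgement information, the NR-SACK sender holds only the chunks that are genuinely outstanding (TSNs 2 and 5), which is the space saving the text refers to.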

Cui et al. [23] introduced a fast selective ACK scheme for SCTP to enhance transmission throughput in multihoming scenarios. In networks with asymmetric forward and reverse path delays, a multihomed receiver sends SACK chunks to the sender over the fastest reverse path, which helps the sender inflate its congestion window and retransmit lost data packets as quickly as possible. Yet the solution only considers the transmission of control chunks and fails to enhance overall transmission efficiency.
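The benefit of sending SACKs over the fastest reverse path can be shown with a small Python calculation of the feedback delay, that is, the time from sending a DATA chunk until its SACK reaches the sender. The function name and the delay values are illustrative assumptions; the baseline models the usual SCTP practice of replying to the address the DATA came from, treating that as the same path in reverse.

```python
def sack_feedback_delay(fwd_ms: dict[str, float], rev_ms: dict[str, float],
                        data_path: str, fast_sack: bool) -> float:
    """Time from sending a DATA chunk on data_path until its SACK arrives."""
    if fast_sack:
        # Fast-SACK idea: reply over the reverse path with the lowest delay.
        reverse = min(rev_ms, key=rev_ms.get)
    else:
        # Baseline: the SACK returns over the path the DATA arrived on.
        reverse = data_path
    return fwd_ms[data_path] + rev_ms[reverse]


fwd = {"A": 20.0, "B": 80.0}   # forward one-way delays in ms (illustrative)
rev = {"A": 100.0, "B": 10.0}  # reverse delays in ms: strongly asymmetric
print(sack_feedback_delay(fwd, rev, "A", fast_sack=False))  # 120.0
print(sack_feedback_delay(fwd, rev, "A", fast_sack=True))   # 30.0
```

Only the return leg of the acknowledgement changes; the data itself still travels over path A, which matches the criticism that the scheme improves control-chunk delivery rather than data distribution.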

3 CMT-QA SYSTEM DESIGN OVERVIEW

During multihomed communications in heterogeneous wireless networks, the delay, bandwidth and loss rate of alternative paths can differ significantly. If a round-robin data delivery approach is used, slower paths are easily overloaded while faster paths remain underutilized. To avoid unbalanced transmissions, reduce reordering of received data and alleviate the receiver buffer blocking caused by using dissimilar paths with CMT, CMT-QA makes important contributions in the following three stages:

• Accurately senses each path’s current transmission status and estimates each path’s data handling capacity in real time.

• Includes a newly designed data distribution algorithm that optimally delivers application-layer data over multiple paths and ensures that the received data arrive in order.

• Introduces an appropriate retransmission mechanism that handles different kinds of packet loss and alleviates the packet reordering problem.
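The round-robin problem described above can be illustrated with a minimal Python sketch under an idealized model: a backlog queued at t = 0, a constant per-packet transmission time and one-way delay for each path, and no loss. It compares round-robin with a greedy earliest-arrival heuristic that sends each packet on the path with the smallest predicted arrival time. The function names, the path model and the heuristic are illustrative assumptions; this is not the CMT-QA Data Distribution Scheduler.

```python
def schedule(n_packets: int, paths: list[tuple[float, float]],
             policy: str) -> list[float]:
    """Return arrival times (ms) of packets 0..n-1 sent as a backlog at t=0.

    paths: (tx_time, one_way_delay) per path, in ms.
    policy: "round_robin" or "earliest_arrival".
    """
    free_at = [0.0] * len(paths)  # when each path finishes its queued sends
    arrivals = []
    for i in range(n_packets):
        if policy == "round_robin":
            p = i % len(paths)
        else:
            # Greedy: pick the path whose predicted arrival time is smallest.
            p = min(range(len(paths)),
                    key=lambda k: free_at[k] + paths[k][0] + paths[k][1])
        tx, delay = paths[p]
        free_at[p] += tx
        arrivals.append(free_at[p] + delay)
    return arrivals


def out_of_order(arrivals: list[float]) -> int:
    """Count packets that arrive ahead of some lower-numbered packet."""
    count, latest = 0, float("-inf")
    for a in arrivals:
        if a < latest:
            count += 1
        latest = max(latest, a)
    return count


paths = [(1.0, 10.0), (4.0, 50.0)]  # a fast path and a slow path
print(out_of_order(schedule(10, paths, "round_robin")))       # 4
print(out_of_order(schedule(10, paths, "earliest_arrival")))  # 0
```

Because the greedy rule always takes the minimum predicted arrival time, and sending on a path only moves that path's next prediction later, successive arrival times never decrease in this model, so no packet overtakes an earlier one. With varying delays and losses on real paths this guarantee no longer holds.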

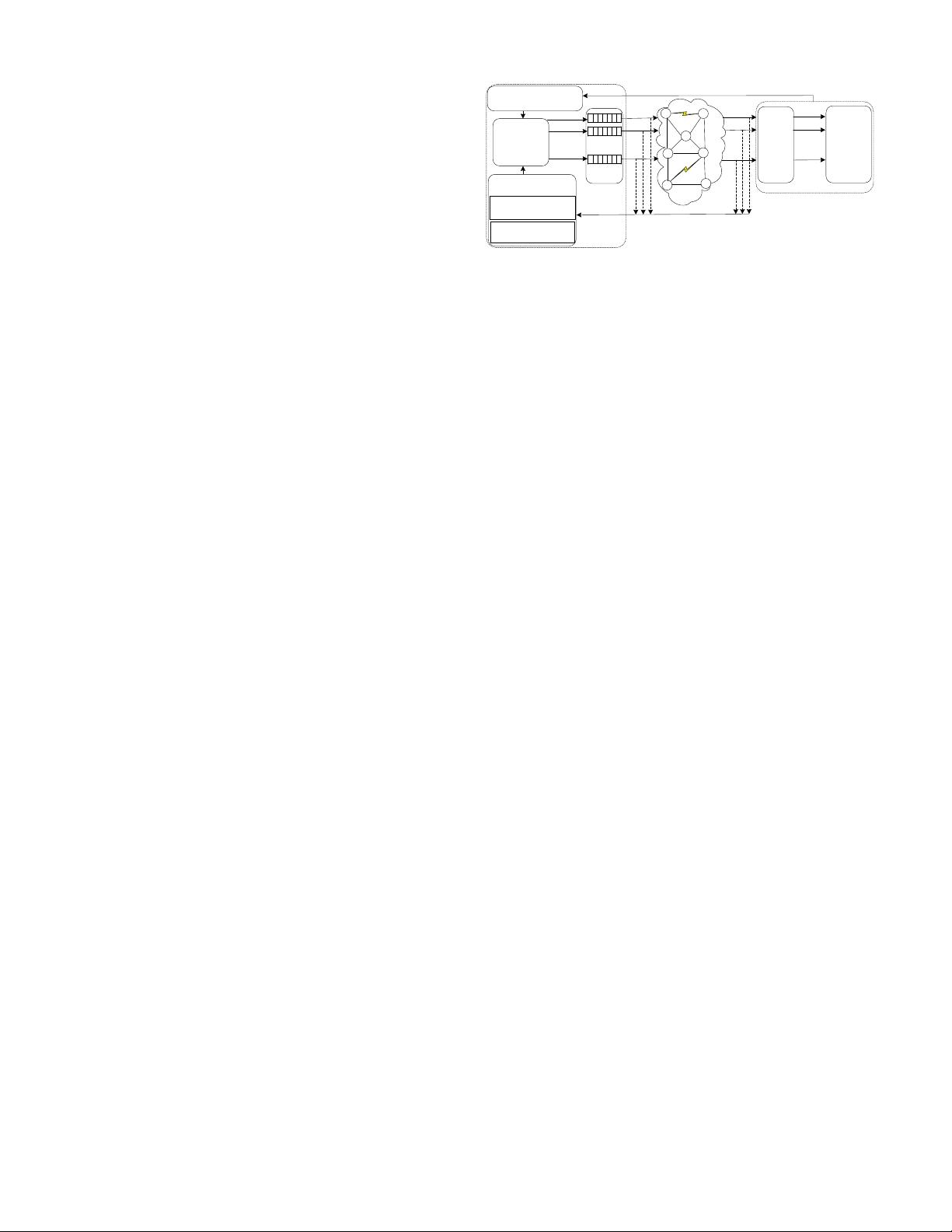

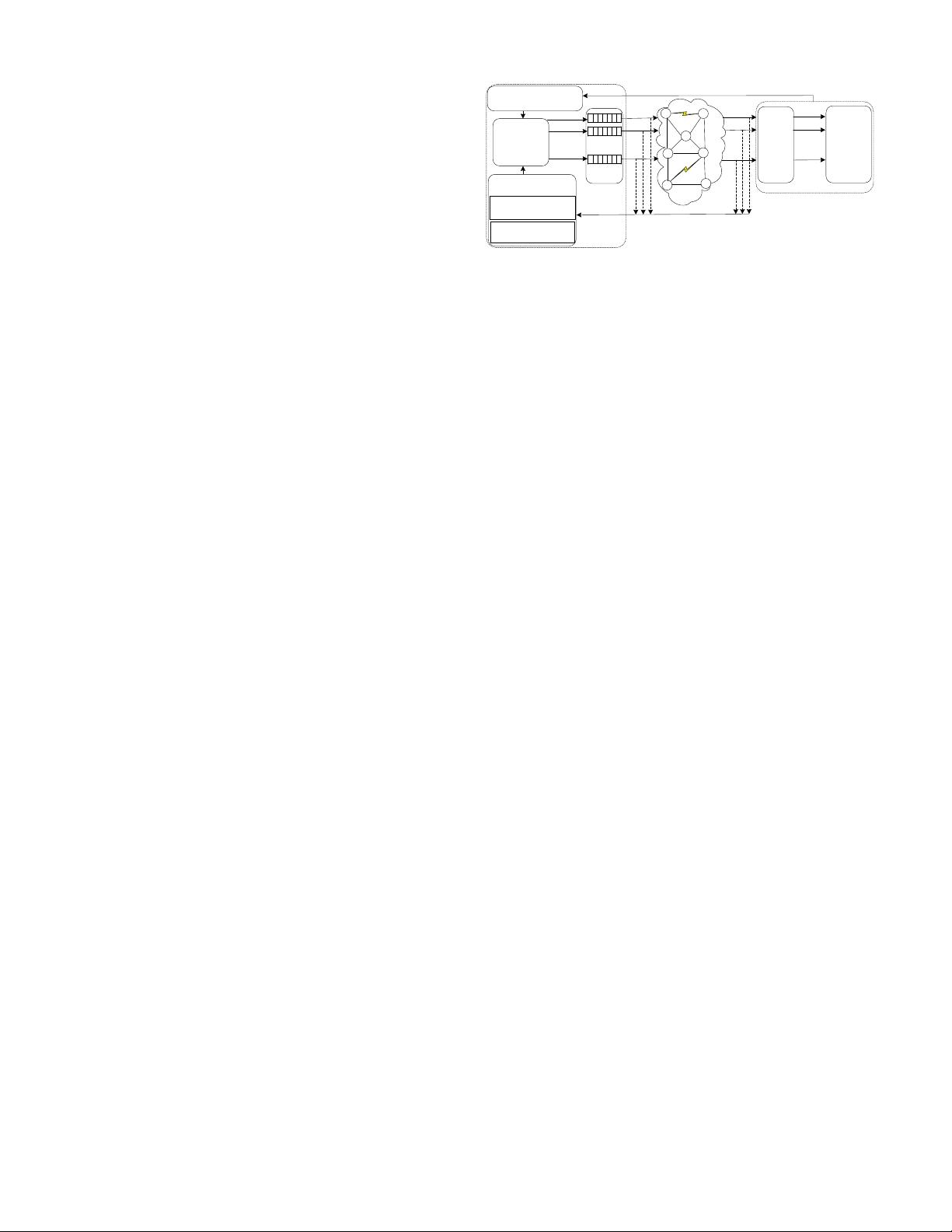

Fig. 2. CMT-QA architecture. [Figure: Sender (sender buffer with Streams 1 to N; Path Quality Estimation Model with path handling capability estimator and estimation interval calculation; Data Distribution Scheduler; Optimal Retransmission Policy), Paths 1 to n through the network, Receiver (unbundles and collects data chunks, reassembles them in the receiver buffer into Streams 1 to N), and path status feedback to the Sender.]

Fig. 2 illustrates the design of the CMT-QA architecture, which includes a Sender, a Receiver and n communication paths through the heterogeneous wireless network environment. When the Sender bundles multiple data and control chunks into a single SCTP packet for transmission, the Receiver unbundles the packet and recovers the original data chunks. When a user message is fragmented into multiple chunks, the Receiver reassembles the fragmented message in the receiver buffer before delivering it to the user. The Sender collects feedback information on path status from the network and uses it to estimate path quality. The Sender contains three major CMT-QA blocks: the Path Quality Estimation Model (PQEM), the Data Distribution Scheduler (DDS) and the Optimal Retransmission Policy (ORP). CMT-QA aims to intelligently adjust the data distribution for each path and to support in-order arrival of data packets at the destination.
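The fragmentation case can be illustrated with a minimal Python sketch of reassembly in a receiver buffer. It follows the SCTP convention that the fragments of one user message carry consecutive TSNs, the first with the B (beginning) bit set and the last with the E (ending) bit set; `DataChunk` and `reassemble` are hypothetical names, and stream sequence ordering and TSN wraparound are ignored.

```python
from dataclasses import dataclass


@dataclass
class DataChunk:
    tsn: int
    begin: bool    # B bit: first fragment of a user message
    end: bool      # E bit: last fragment of a user message
    payload: bytes


def reassemble(buffer: dict[int, DataChunk]) -> list[bytes]:
    """Remove and return every complete message held in the buffer."""
    messages = []
    for tsn in sorted(buffer):
        chunk = buffer.get(tsn)  # may already be consumed by a message
        if chunk is None or not chunk.begin:
            continue
        parts, t = [], tsn
        # Walk consecutive TSNs until the E bit; stop at the first gap.
        while t in buffer:
            parts.append(buffer[t].payload)
            if buffer[t].end:
                messages.append(b"".join(parts))
                for done in range(tsn, t + 1):
                    del buffer[done]
                break
            t += 1
    return messages


buf = {5: DataChunk(5, True, False, b"he"),
       6: DataChunk(6, False, True, b"llo"),
       8: DataChunk(8, True, False, b"wor")}
print(reassemble(buf))  # [b'hello']
print(sorted(buf))      # [8]  (still waiting for the fragment with TSN 9)
```

An incomplete message stays in the receiver buffer until its missing fragment arrives, which is how out-of-order arrival over dissimilar paths turns into receiver buffer occupancy.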

PQEM chooses a reasonable estimation interval over which to calculate, for each path, the rate at which data enter and leave the sender buffer; this data handling rate describes the path’s communication quality. Unfavorable conditions, including packet loss rate, link delay, router buffer size, channel capacity and the number of other data flows, degrade a path’s handling capability. PQEM uses a comprehensive evaluation method to reflect the impact of these factors on communication quality. PQEM’s sender-buffer data handling rate better describes end-to-end delivery conditions, because its short estimation time allows it to reflect the current path status in a timely manner. Additionally, samples of the time between distributed data entering and leaving the sender buffer are easy to obtain and can be used to predict trends in path quality.
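One way to compute the per-path sender-buffer measurements described above is sketched below in Python. It assumes each chunk records its path, its size and the times it entered and left the sender buffer, and computes, for one estimation interval, the rate at which each path drains the buffer and the mean time chunks spend in it. `BufferSample`, `handling_rates` and `mean_sojourn` are hypothetical names, and treating these two quantities as PQEM inputs is an assumption; the paper’s comprehensive evaluation method is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class BufferSample:
    path: int
    size: int        # bytes
    t_enter: float   # s, chunk entered the sender buffer
    t_leave: float   # s, chunk left the sender buffer (event is an assumption)


def handling_rates(samples: list[BufferSample], t_start: float,
                   t_end: float) -> dict[int, float]:
    """Per-path bytes/s leaving the sender buffer during [t_start, t_end)."""
    interval = t_end - t_start
    rates: dict[int, float] = {}
    for s in samples:
        if t_start <= s.t_leave < t_end:
            rates[s.path] = rates.get(s.path, 0.0) + s.size / interval
    return rates


def mean_sojourn(samples: list[BufferSample]) -> dict[int, float]:
    """Per-path mean time (s) between entering and leaving the buffer."""
    totals: dict[int, tuple[float, int]] = {}
    for s in samples:
        total, n = totals.get(s.path, (0.0, 0))
        totals[s.path] = (total + s.t_leave - s.t_enter, n + 1)
    return {p: total / n for p, (total, n) in totals.items()}


samples = [BufferSample(1, 1000, 0.0, 0.25), BufferSample(1, 1000, 0.25, 0.75),
           BufferSample(2, 500, 0.5, 0.875), BufferSample(2, 500, 0.875, 1.5)]
print(handling_rates(samples, 0.0, 1.0))  # {1: 2000.0, 2: 500.0}
print(mean_sojourn(samples))              # {1: 0.375, 2: 0.5}
```

A shorter interval makes the rate react faster to path changes at the cost of noisier estimates, which is the trade-off behind choosing a reasonable estimation interval.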

Based on the path quality estimates from PQEM, DDS chooses a subset of suitable paths for load sharing and dynamically assigns them appropriate data flows. Meanwhile, by forecasting the time at which data will arrive at the destination given each path’s quality, DDS can set the period of packet distribution and adjust the amount of data distributed on each path. In this way, application data chunks are intelligently dispatched over multiple paths in real time. Compared with the round-robin scheme, we believe that the most effective approach to mitigating reordering is to use a heuristic mechanism to decide the fraction of data scheduled for transmission on each path. The data distribution rate