44 Y. Zhao et al. / J Control Theory Appl 2013 11 (1) 42–53

ized weights. The programming details for one iteration step are shown as follows:

1) Choose a tracking object in the first frame of a vision sequence and obtain the CH $q^{u} = C \sum_{i=1}^{I} k\left(\frac{\|y - x_i\|}{a}\right) \delta[h(x_i) - u]$, where $I$ is the number of pixels in the region, $\delta$ is the Kronecker delta function, the parameter $a = \sqrt{w^2 + h^2}$ is used to adapt the size of the region, and the normalization factor is

$$C = \frac{1}{\sum_{i=1}^{I} k\left(\frac{\|y - x_i\|}{a}\right)}, \tag{3}$$

$$k(r) = \begin{cases} 1 - r^2, & \text{if } r < 1, \\ 0, & \text{otherwise.} \end{cases} \tag{4}$$

Here $r$ is the distance between the pixel location and the center of the region, scaled by $a$.

2) Copy the parameters of the selected object to the particles so that the sample set $S_{t-1}$ contains $N$ particles.

3) According to the second-order autoregressive dynamics model, equation (1), propagate each sample from the set $S_{t-1}$ and obtain $s_t^n = A s_{t-1}^n + \omega_{t-1}$.

4) Calculate the color histograms in the regions defined by each sample of the set $S_t$: $p^{u}_{s_t^n} = C \sum_{i=1}^{I} k\left(\frac{\|s_t^n - x_i\|}{a}\right) \delta[h(x_i) - u]$. Calculate the Bhattacharyya distance for each sample of the set $S_t$: $\rho = 1 - \sum_{u=1}^{m} \sqrt{p^{u}_{s_t^n}\, q^{u}}$, where $m$ is the number of bins that discretize the CH. Weight each sample of the set $S_t$ according to equation (2) and obtain the weight $w_t(n)$.

5) Estimate the mean state of the set $S_t$ and obtain the location of the object: $E(S_t) = \sum_{n=1}^{N} w_t(n)\, s_t^n$.

6) Rank the sample set $S_t$ according to the weight of each sample. Copy each particle $s_t^n$, in $N/w_t(n)$ copies, to the sample set $S_{t+1}$, for $n$ from 1 to $N$, until the sample set $S_{t+1}$ is full. If the sample set is still not full, $s_t^1$ is copied to the sample set $S_{t+1}$ until it is full.
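The histogram of step 1), its reuse for each particle in step 4), and the Bhattacharyya distance can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function names are hypothetical, the bin index $h(x_i)$ is assumed to be precomputed as an integer image, and the region is taken to be the whole array passed in.

```python
import numpy as np

def kernel(r):
    """Profile k(r) of Eq. (4): 1 - r^2 for r < 1, and 0 otherwise."""
    r = np.asarray(r, dtype=float)
    return np.where(r < 1.0, 1.0 - r**2, 0.0)

def color_histogram(bins, center, a, m):
    """Kernel-weighted color histogram of a region.

    bins   : 2-D integer array, bins[row, col] = h(x_i) in [0, m)
             (assumed to be computed beforehand)
    center : (row, col) of the region center y (or of a particle s_t^n)
    a      : scale parameter, sqrt(w^2 + h^2) in the text
    m      : number of histogram bins
    """
    rows, cols = np.indices(bins.shape)
    r = np.hypot(rows - center[0], cols - center[1]) / a
    k = kernel(r)
    # Each pixel adds its kernel weight to the bin selected by h(x_i).
    hist = np.bincount(bins.ravel(), weights=k.ravel(), minlength=m)
    total = hist.sum()  # equals sum_i k(.), i.e. 1 / C in Eq. (3)
    return hist / total if total > 0 else hist

def bhattacharyya_distance(p, q):
    """rho = 1 - sum_u sqrt(p^u * q^u), as written in step 4)."""
    return 1.0 - np.sum(np.sqrt(p * q))
```

Because every pixel falls into exactly one bin, dividing by the histogram total is the same as multiplying by $C$, so the returned histogram sums to one whenever at least one pixel lies inside the kernel support.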

As the above steps 2)–3) are used to initialize the color-

based particle filter, while steps 3)–6) are iteration steps of

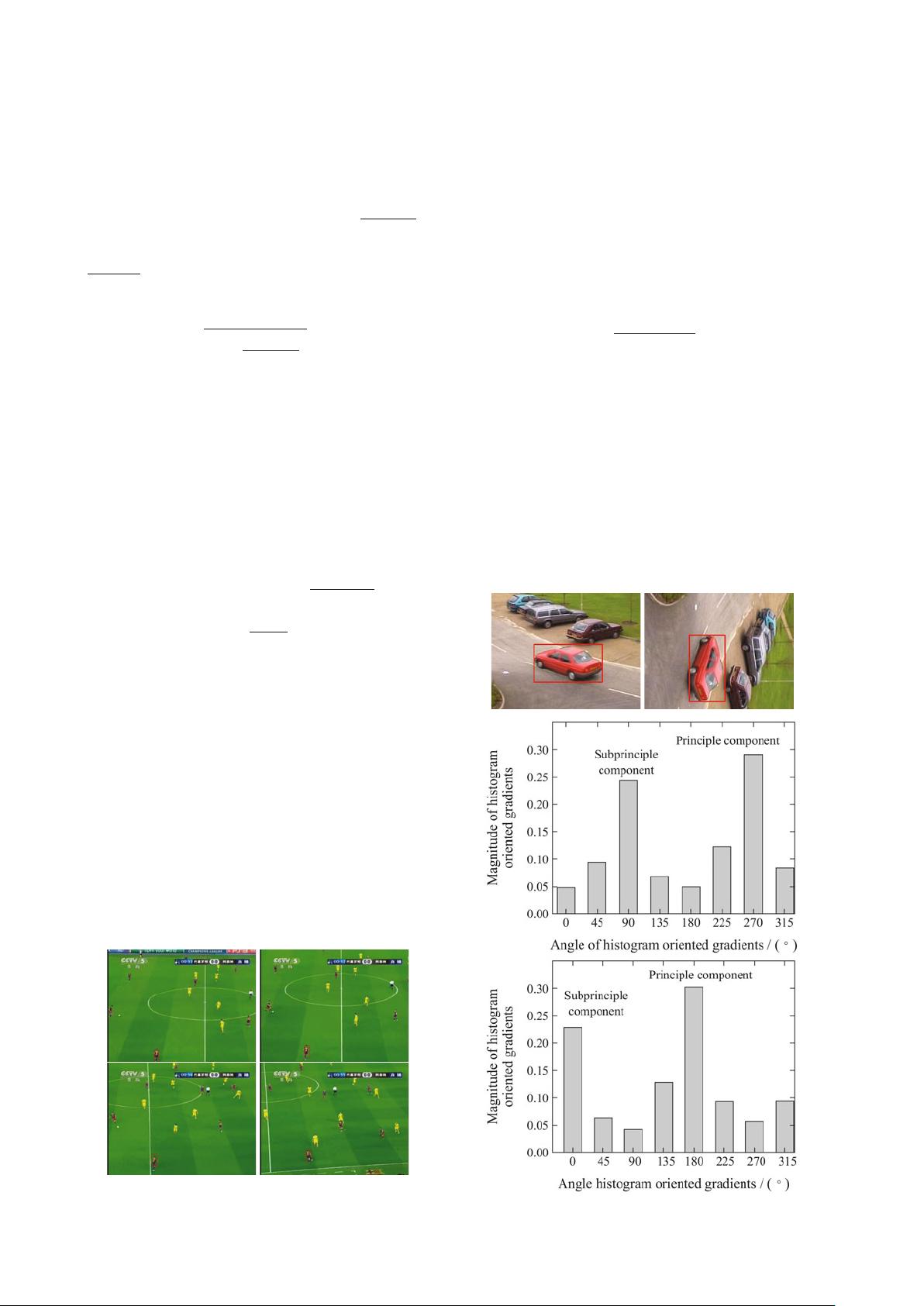

the color-based particle filter. Fig. 3 shows the tracking ef-

fect of the color-based particle filter. The tracking object is

selected in frame one.

Fig. 3 Tracking effect of the color-based particle filter. Frames 1, 20, 30, and 40 are shown (left to right, top to bottom).

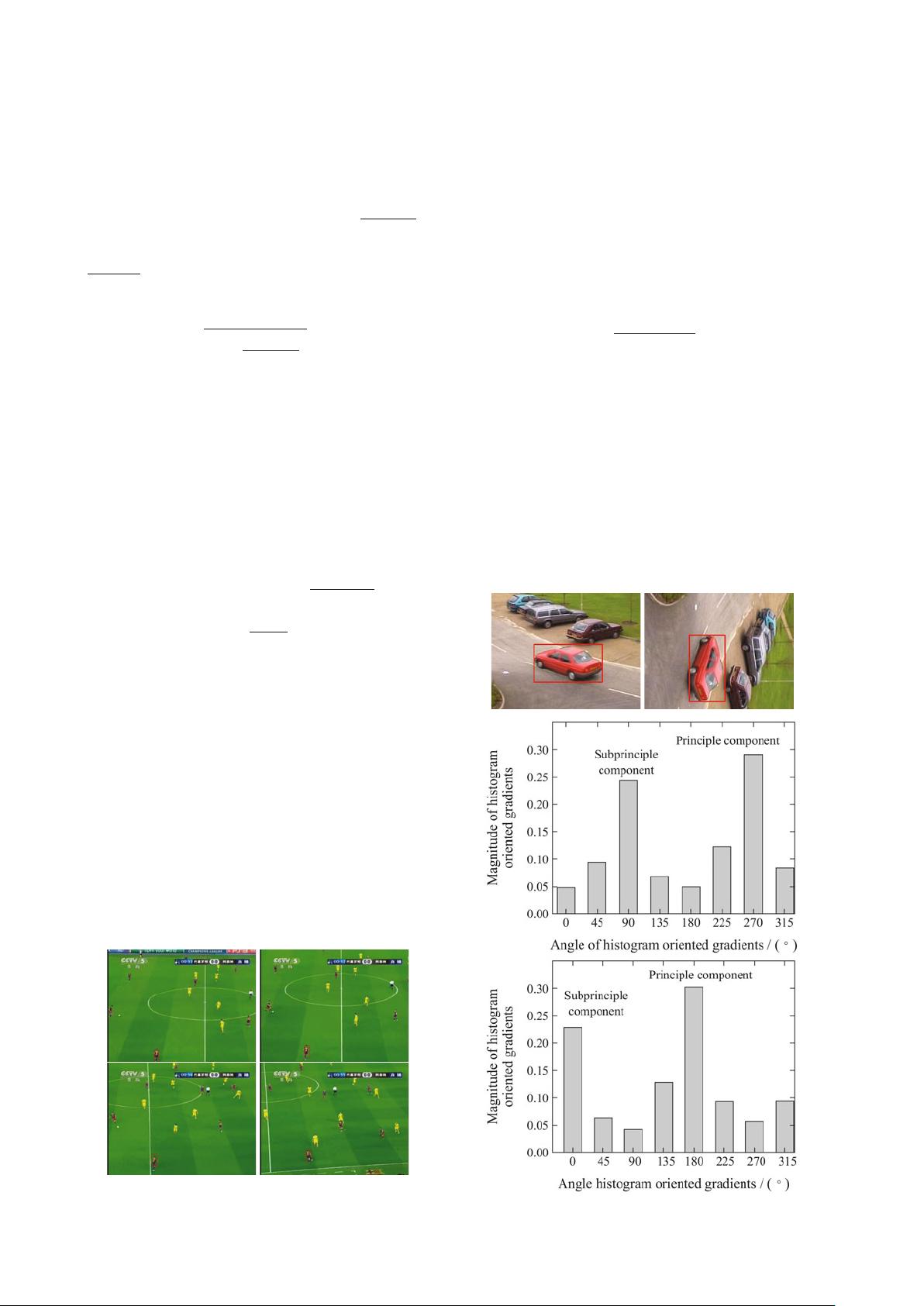
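The propagation, state-estimation, and resampling steps 3), 5), and 6) can be sketched as follows. This is a hedged illustration rather than the authors' code: the function names are hypothetical, the noise $\omega_{t-1}$ is assumed to be zero-mean Gaussian, the weights are assumed to be normalized, and the resampling copies each particle roughly $N\,w_t(n)$ times, which is one common reading of a weight-ranked copy rule and may differ from the copy count stated in step 6).

```python
import numpy as np

def propagate(samples, A, noise_std, rng):
    """Step 3): s_t^n = A s_{t-1}^n + omega_{t-1} for every particle.

    samples : (N, d) array, one state vector per row
    A       : (d, d) transition matrix of the dynamics model
    The Gaussian form of the noise is an assumption of this sketch.
    """
    noise = rng.normal(0.0, noise_std, size=samples.shape)
    return samples @ A.T + noise

def mean_state(samples, weights):
    """Step 5): E(S_t) = sum_n w_t(n) s_t^n (weights assumed to sum to 1)."""
    return weights @ samples

def resample(samples, weights):
    """Step 6), under the stated assumption on the copy count.

    Particles are ranked by weight, each is copied floor(N * w) times,
    and the best particle fills any remaining slots.
    """
    n = len(samples)
    order = np.argsort(weights)[::-1]              # highest weight first
    counts = np.floor(n * weights[order]).astype(int)
    idx = np.repeat(order, counts)[:n]
    pad = np.full(n - len(idx), order[0], dtype=idx.dtype)
    return samples[np.concatenate([idx, pad])]
```

A single iteration would call `propagate`, compute the weights from the histogram distances of step 4), then call `mean_state` and `resample` in that order.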

2.2 Object tracking algorithm with CHOG

HOG [11] describes the shape information of objects. It is calculated in gray-scale space, as described in [12]. We adopt the method used in the scale invariant feature transform (SIFT) [28] to obtain the HOG, and additionally introduce a weight function. To increase the reliability of the HOG when boundary pixels belong to the background, smaller weights are assigned to the pixels further away from the region center. The weighting function is

$$w = \exp\left(-\frac{i \times i + j \times j}{2 \times \delta^{2}}\right), \tag{5}$$

where $i$ and $j$ are the coordinates of the pixels relative to the origin, which is the center of the computational domain, and $\delta$ is the same parameter that is used to smooth the image to reduce the effect of noise. With this weight, the HOG of the region is obtained as follows:

$$B^{u}_{y} = \sum_{i=1}^{I} w(x_i)\, \delta(b(x_i) - u), \tag{6}$$

where $B^{u}_{y}$ represents the magnitude of direction $u$ in region $y$, $I$ is the number of pixels in region $y$, $\delta$ is the Kronecker delta function, and $b(x_i)$ is the function of the HOG computed at the pixel $x_i$. Fig. 4 shows the HOG of the object at different viewing angles.

Fig. 4 HOG of the object at different viewing angles.
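Equations (5) and (6) can be illustrated with a short NumPy sketch. It is a minimal example under stated assumptions, not the authors' implementation: the function name, the number of bins, the use of unsigned orientations in $[0, \pi)$, and the central-difference gradient are all choices made here for illustration, and `sigma` stands for the $\delta$ of equation (5). Following equation (6) literally, each pixel contributes only its spatial weight $w(x_i)$, not its gradient magnitude.

```python
import numpy as np

def weighted_hog(gray, n_bins=9, sigma=2.0):
    """Orientation histogram of a gray-scale region with Gaussian weights.

    gray   : 2-D array holding the region y (at least 2 x 2 pixels)
    n_bins : number of orientation bins u (assumed value)
    sigma  : the delta of Eq. (5) (assumed value)
    """
    gy, gx = np.gradient(gray.astype(float))
    # Unsigned gradient orientation in [0, pi), quantized to a bin index
    # b(x_i). Pixels with zero gradient fall into bin 0 in this sketch.
    angle = np.mod(np.arctan2(gy, gx), np.pi)
    b = np.minimum((angle / np.pi * n_bins).astype(int), n_bins - 1)
    # Eq. (5): coordinates (i, j) are measured from the region center.
    h, w = gray.shape
    i, j = np.indices(gray.shape)
    i = i - (h - 1) / 2.0
    j = j - (w - 1) / 2.0
    weight = np.exp(-(i * i + j * j) / (2.0 * sigma**2))
    # Eq. (6): B_y^u = sum_i w(x_i) * delta(b(x_i) - u).
    return np.bincount(b.ravel(), weights=weight.ravel(), minlength=n_bins)
```

For a region whose intensity increases only along the horizontal axis, all of the weight ends up in the first bin, which is a quick sanity check of the binning.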