Amazon Web Services

In this paper, we will discuss one application example in

detail - code-named as “GrepTheWeb”.

Cloud Architecture Example: GrepTheWeb

The Alexa Web Search web service allows developers to

build customized search engines against the massive

data that Alexa crawls every night. One of the features of

their web service allows users to query the Alexa search

index and get Million Search Results (MSR) back as

output. Developers can run queries that return up to 10

million results.

The resulting set, which represents a small subset of all

the documents on the web, can then be processed

further using a regular expression language. This allows

developers to filter their search results using criteria that

are not indexed by Alexa (Alexa indexes documents

based on fifty different document attributes) thereby

giving the developer power to do more sophisticated

searches. Developers can run regular expressions against

the actual documents, even when there are millions of

them, to search for patterns and retrieve the subset of

documents that matched that regular expression.

This application is currently in production at Amazon.com

and is code-named GrepTheWeb because it can “grep” (a

popular Unix command-line utility to search patterns) the

actual web documents. GrepTheWeb allows developers to

do some pretty specialized searches like selecting

documents that have a particular HTML tag or META tag

or finding documents with particular punctuations

(“Hey!”, he said. “Why Wait?”), or searching for

mathematical equations (“f(x) = ∑x + W”), source code,

e-mail addresses or other patterns such as

“(dis)integration of life”.

While the functionality is impressive, for us the way it

was built is even more so. In the next section, we will

zoom in to see different levels of the architecture of

GrepTheWeb.

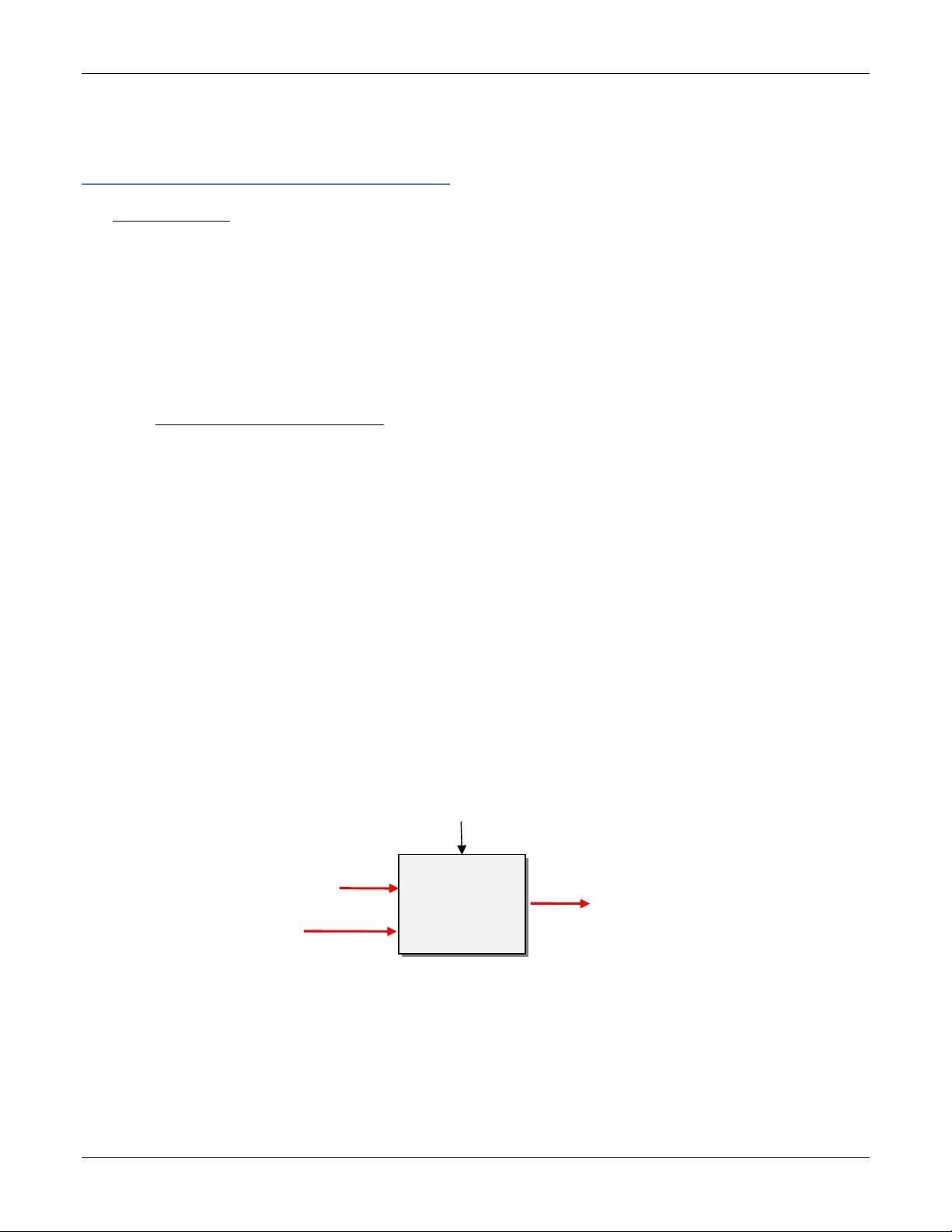

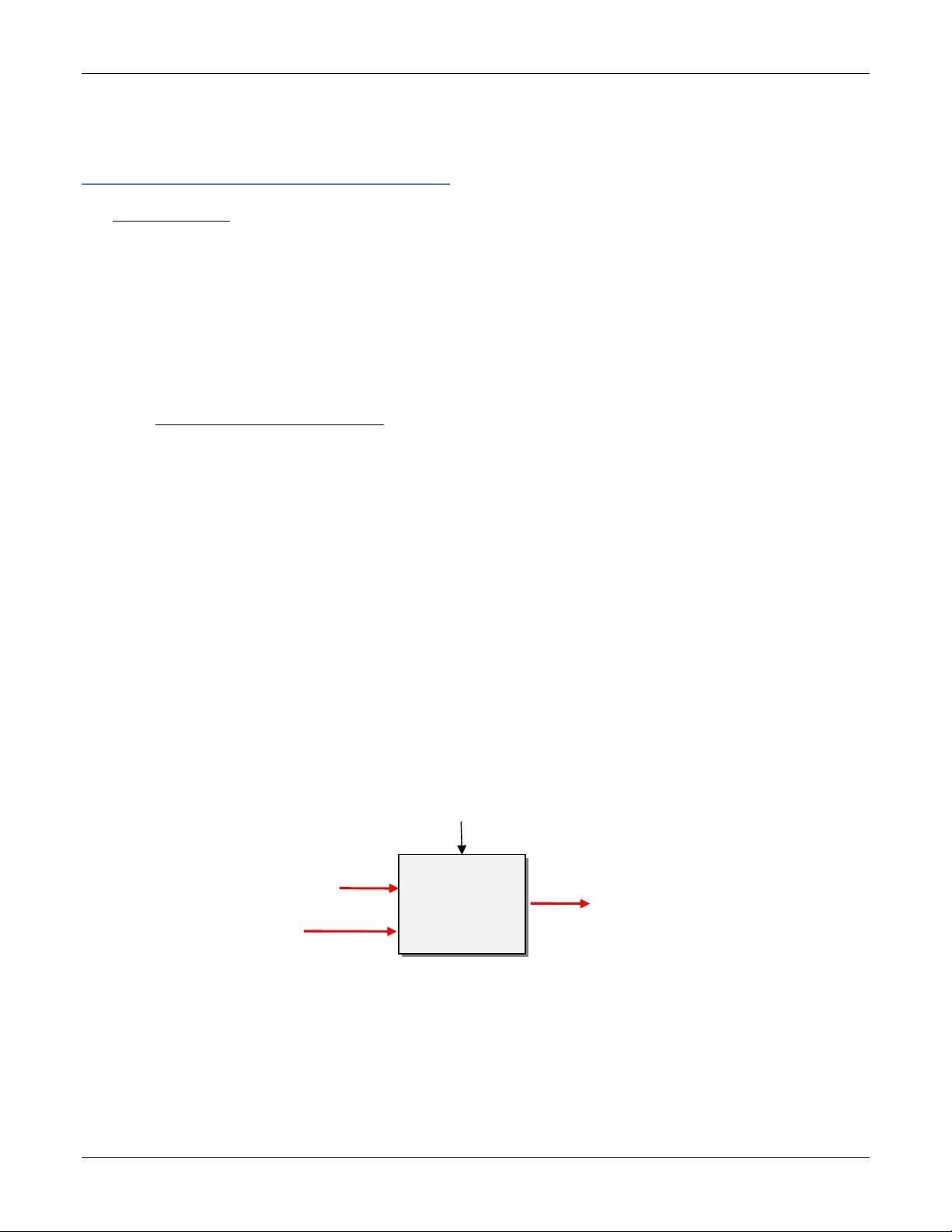

Figure 1 shows a high-level depiction of the architecture.

The output of the Million Search Results Service, which is

a sorted list of links and gzipped (compressed using the

Unix gzip utility) in a single file, is given to GrepTheWeb

as input. It takes a regular expression as a second input.

It then returns a filtered subset of document links sorted

and gzipped into a single file. Since the overall process is

asynchronous, developers can get the status of their jobs

by calling GetStatus() to see whether the execution is

completed.

Performing a regular expression against millions of

documents is not trivial. Different factors could combine

to cause the processing to take lot of time:

• Regular expressions could be complex

• Dataset could be large, even hundreds of

terabytes

• Unknown request patterns, e.g., any number of

people can access the application at any given

point in time

Hence, the design goals of GrepTheWeb included to scale

in all dimensions (more powerful pattern-matching

languages, more concurrent users of common datasets,

larger datasets, better result qualities) while keeping the

costs of processing down.

The approach was to build an application that not only

scales with demand, but also without a heavy upfront

investment and without the cost of maintaining idle

machines (“downbottom”). To get a response in a

reasonable amount of time, it was important to distribute

the job into multiple tasks and to perform a Distributed

Grep operation that runs those tasks on multiple nodes in

parallel.

GrepTheWeb

Application

RegEx

Subset of

document URLs

that matched

the RegEx

Document Urls)

: GrepTheWeb Architecture