super-resolution.

Temporal Frame Interpolation

Given a low frame rate video, a temporal frame interpolation algorithm generates a high frame rate video by synthesizing additional frames between two temporally neighboring frames. Specifically, let $I_1$ and $I_3$ be two consecutive frames in an input video; the task is to estimate the missing middle frame $I_2$. Temporal frame interpolation doubles the video frame rate, and can be applied recursively for even higher frame rates.
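
The recursive use of a frame interpolator can be sketched as below. This is a minimal illustration, not the method of this paper: `interpolate_recursively` and its `midpoint` argument are hypothetical names, and the placeholder interpolator simply averages scalar "frames" so that the bookkeeping is easy to follow. Each pass inserts one synthesized frame between every neighboring pair, so $n$ frames become $2n - 1$.

```python
from typing import Callable, List, TypeVar

Frame = TypeVar("Frame")


def interpolate_recursively(
    frames: List[Frame],
    midpoint: Callable[[Frame, Frame], Frame],
    levels: int,
) -> List[Frame]:
    """Insert a synthesized frame between each neighboring pair, `levels` times."""
    if len(frames) < 2:
        return list(frames)  # nothing to interpolate between
    for _ in range(levels):
        doubled: List[Frame] = []
        for prev, nxt in zip(frames, frames[1:]):
            doubled.append(prev)
            doubled.append(midpoint(prev, nxt))  # the estimated middle frame
        doubled.append(frames[-1])  # keep the final original frame
        frames = doubled
    return frames


# Placeholder interpolator (an assumption for illustration only):
# frames are scalars and the "middle frame" is their average.
timeline = interpolate_recursively(
    [0.0, 4.0, 8.0], lambda a, b: (a + b) / 2, levels=2
)
```

In a real system, `midpoint` would be a learned or flow-based interpolator operating on images; only the recursion pattern is meant to carry over.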

Video Denoising/Deblocking

Given a degraded video with artifacts from either the sensor or compression, video denoising/deblocking aims to remove the noise or compression artifacts to recover the original video. This is typically done by aggregating information from neighboring frames. Specifically, let $I_1, I_2, \ldots, I_N$ be $N$ consecutive frames in an input video; the task of video denoising is to estimate the middle frame $I^*_{\text{ref}}$ given the degraded frames $I_1, I_2, \ldots, I_N$ as input. For ease of description, in the rest of the paper we refer to both tasks simply as video denoising.
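
To see why aggregating neighboring frames helps, consider an idealized model that is an assumption made here for illustration, not a claim of this paper: after perfect registration to the reference, each frame $\tilde{I}_i$ equals the clean frame plus independent zero-mean noise $n_i$ of variance $\sigma^2$. Averaging the $N$ registered frames then reduces the noise variance by a factor of $N$:

```latex
\begin{aligned}
\tilde{I}_i &= I^*_{\text{ref}} + n_i,
  \qquad \mathbb{E}[n_i] = 0,\quad \operatorname{Var}[n_i] = \sigma^2, \\
\bar{I} &= \frac{1}{N}\sum_{i=1}^{N} \tilde{I}_i
  = I^*_{\text{ref}} + \frac{1}{N}\sum_{i=1}^{N} n_i, \\
\operatorname{Var}\bigl[\bar{I} - I^*_{\text{ref}}\bigr] &= \frac{\sigma^2}{N}.
\end{aligned}
```

Real videos violate these assumptions (registration is imperfect and compression artifacts are neither independent nor zero-mean), which is why the quality of the alignment matters so much in practice.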

Video Super-Resolution (SR)

Similar to video denoising, given $N$ consecutive low-resolution frames as input, the task of video super-resolution is to recover the high-resolution middle frame. In this work, we first upsample all the input frames to the output resolution using bicubic interpolation, so our algorithm only needs to recover the high-frequency component of the output image.
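
One way to read this setup is as a decomposition of the target frame. The symbols below are introduced here for illustration and are not notation from this paper: $\mathcal{U}$ denotes bicubic upsampling, $I^{\text{LR}}_{\text{ref}}$ the low-resolution middle frame, $I^{\text{HR}}$ the high-resolution target, and $H$ the high-frequency detail that upsampling alone cannot restore.

```latex
I^{\text{HR}} = \mathcal{U}\bigl(I^{\text{LR}}_{\text{ref}}\bigr) + H,
\qquad
H := I^{\text{HR}} - \mathcal{U}\bigl(I^{\text{LR}}_{\text{ref}}\bigr)
```

Under this reading, the upsampled frame already supplies the low-frequency content, and the remaining work is to estimate $H$ from the $N$ upsampled input frames.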

4. Task-Oriented Flow for Video Processing

Most motion-based video processing algorithms have two steps: motion estimation and image processing. For example, in temporal frame interpolation, most algorithms first estimate how pixels move between input frames (frames 1 and 3), and then move pixels to the estimated location in the output frame (frame 2) [2]. Similarly, in video denoising, algorithms first register different frames based on estimated motion fields between them, and then remove noise by aggregating information from the registered frames.
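
The two-step scheme described above can be sketched with NumPy as follows. This is a minimal sketch under stated assumptions, not the implementation used in this paper: the function names `backward_warp` and `register_and_average` are hypothetical, frames are single-channel arrays, the flow is assumed to be given (no estimation is performed), the flow convention is the one stated in the docstring, and plain averaging stands in for the image processing step.

```python
import numpy as np


def backward_warp(frame: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Warp `frame` (H, W) toward a reference using `flow` (H, W, 2).

    Assumed convention: flow[y, x] = (dx, dy) means that reference pixel
    (x, y) is found at (x + dx, y + dy) in `frame`. Samples are bilinearly
    interpolated, and coordinates are clamped to the image border.
    """
    h, w = frame.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_x = np.clip(xs + flow[..., 0], 0, w - 1)
    src_y = np.clip(ys + flow[..., 1], 0, h - 1)

    # Integer corners surrounding each sampling location.
    x0 = np.floor(src_x).astype(int)
    y0 = np.floor(src_y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = src_x - x0
    wy = src_y - y0

    top = (1 - wx) * frame[y0, x0] + wx * frame[y0, x1]
    bottom = (1 - wx) * frame[y1, x0] + wx * frame[y1, x1]
    return (1 - wy) * top + wy * bottom


def register_and_average(frames, flows):
    """Step 1: warp every frame to the reference. Step 2: average them."""
    registered = [backward_warp(f, fl) for f, fl in zip(frames, flows)]
    return np.mean(registered, axis=0)


# Toy data: a bright pixel at (x=2, y=2) in the reference frame appears
# one pixel to the right in the neighboring frame.
reference = np.zeros((5, 5))
reference[2, 2] = 1.0
neighbor = np.zeros((5, 5))
neighbor[2, 3] = 1.0

zero_flow = np.zeros((5, 5, 2))
shift_flow = np.zeros((5, 5, 2))
shift_flow[..., 0] = 1.0  # dx = +1 everywhere

aligned = backward_warp(neighbor, shift_flow)
fused = register_and_average([reference, neighbor], [zero_flow, shift_flow])
```

With the correct flow, the warped neighbor coincides with the reference, so the average preserves the bright pixel instead of smearing it across two locations.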

In this paper, we propose to use task-oriented flow (TOFlow) to integrate the two steps. To learn the task-oriented flow, we design an end-to-end trainable network with three parts (Figure 3): a flow estimation module that estimates the movement of pixels between input frames; an image transformation module that warps all the frames to a reference frame; and a task-specific image processing module that performs video interpolation, denoising, or super-resolution on the registered frames. Because the flow estimation module is trained jointly with the rest of the network, it learns to predict a flow field suited to the particular task.

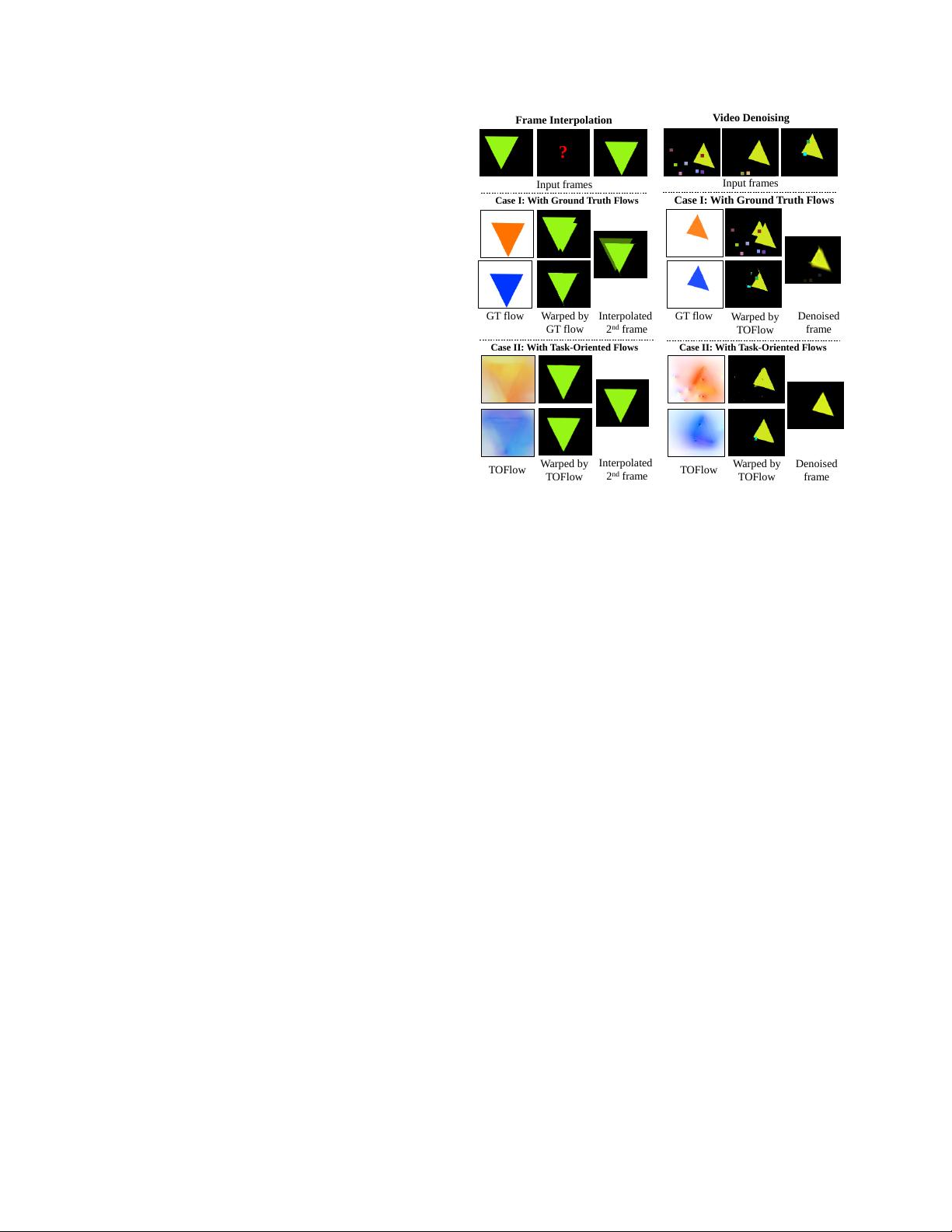

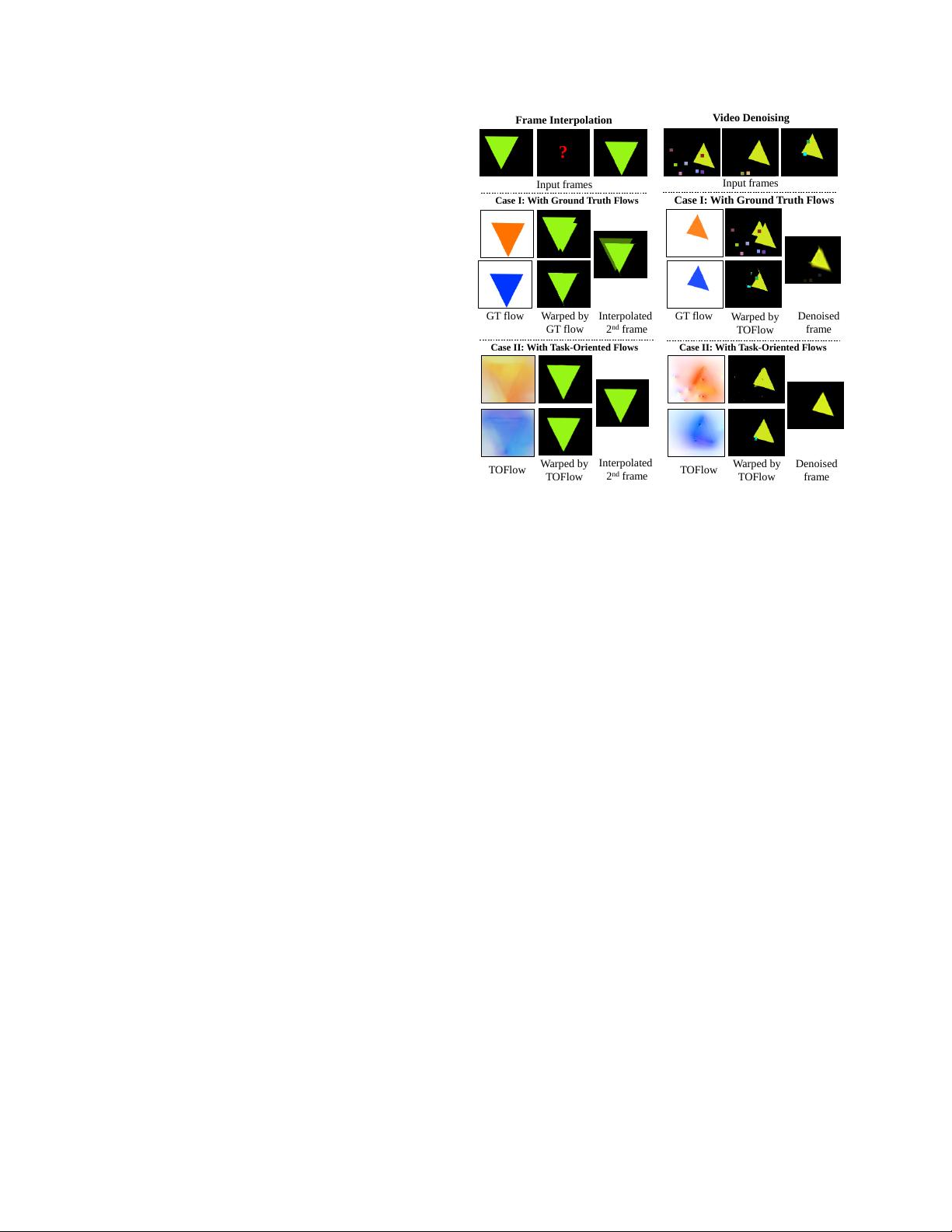

4.1. Toy Example

Before discussing the details of the network structure, we start with two synthetic sequences to demonstrate why TOFlow can outperform traditional optical flow.

[Figure 2 panel labels. Left half: Frame Interpolation; right half: Video Denoising. Rows: Case I: With Ground Truth Flows; Case II: With Task-Oriented Flows. Columns: input frames; flow (GT flow or TOFlow); frames warped by that flow; result (interpolated 2nd frame or denoised frame).]

Figure 2: A toy example that demonstrates the effectiveness of task-oriented flow over traditional optical flow. See Section 4.1 for details.

The left of Figure 2 shows an example of frame interpolation, where

a green triangle is moving toward the bottom in front of a black background. If we warp both the first and third frames to the second, even using the ground truth flow (Case I, left column), there is an obvious doubling artifact in the warped frames due to occlusion (Case I, middle column), which is a well-known problem in the optical flow literature [2]. The final interpolation result based on these two warped frames still contains this artifact (Case I, right column). In contrast, TOFlow does not stick to object motion: the background should be static, yet TOFlow assigns it non-zero motion (Case II, left column). With TOFlow, there is barely any artifact in the warped frames (Case II, middle column), and the interpolated frame looks clean (Case II, right column). The hallucinated background motion actually helps to reduce the doubling artifacts. This shows that TOFlow can reduce errors and synthesize frames better than the ground truth flow.

Similarly, on the right of Figure 2, we show an example of video denoising. The random small boxes in the input frames are synthetic noise. If we warp the first and third frames to the second using the ground truth flow, the noisy patterns (random squares) remain, and the denoised frame still contains some noise (Case I, right column; some shadows of boxes remain at the bottom). But if we warp these two frames using TOFlow (Case II, left column), the noisy patterns are reduced or eliminated (Case II, middle column), and the final denoised frame based on them contains almost no noise. This also shows that TOFlow learns to reduce the noise in input frames by inpainting them with neighboring pixels, which