
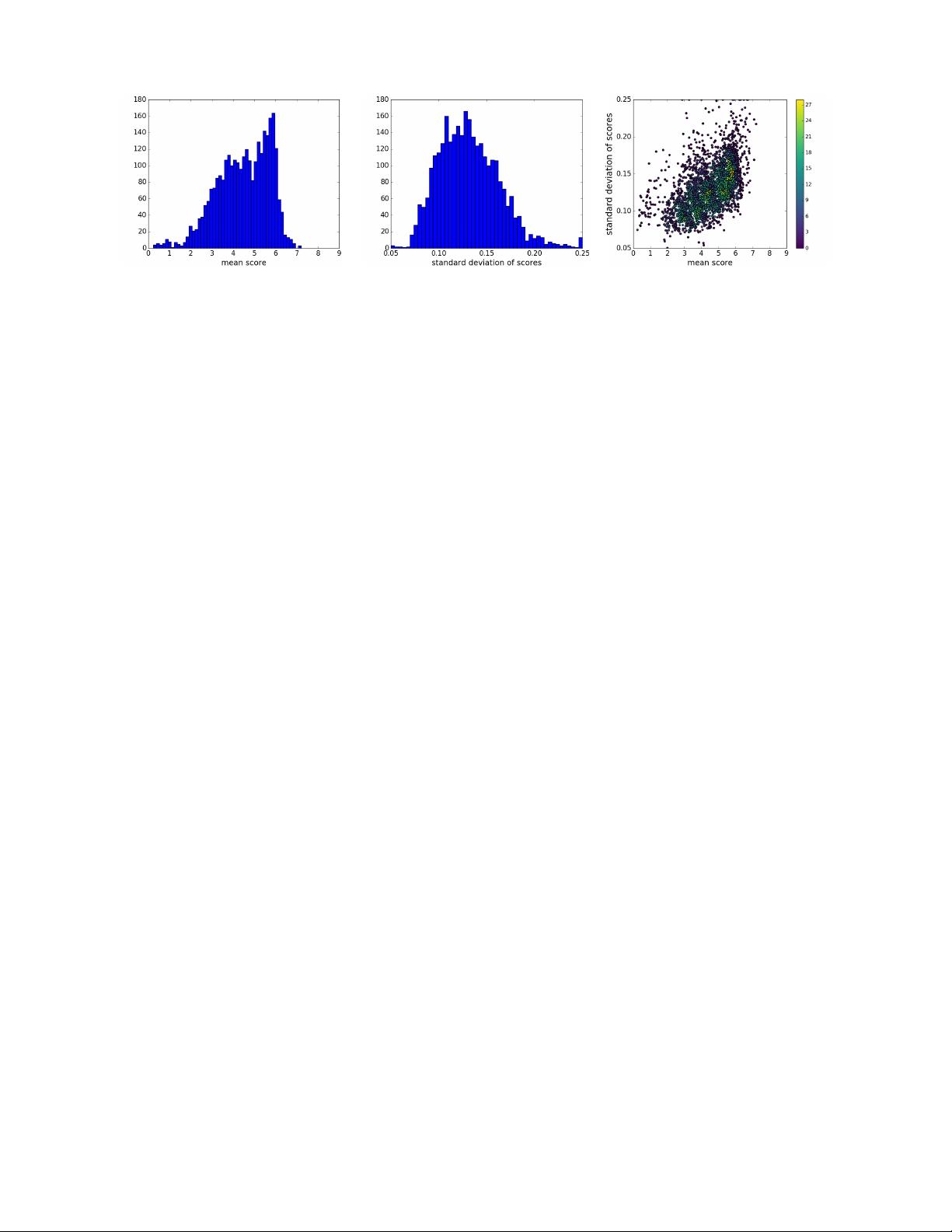

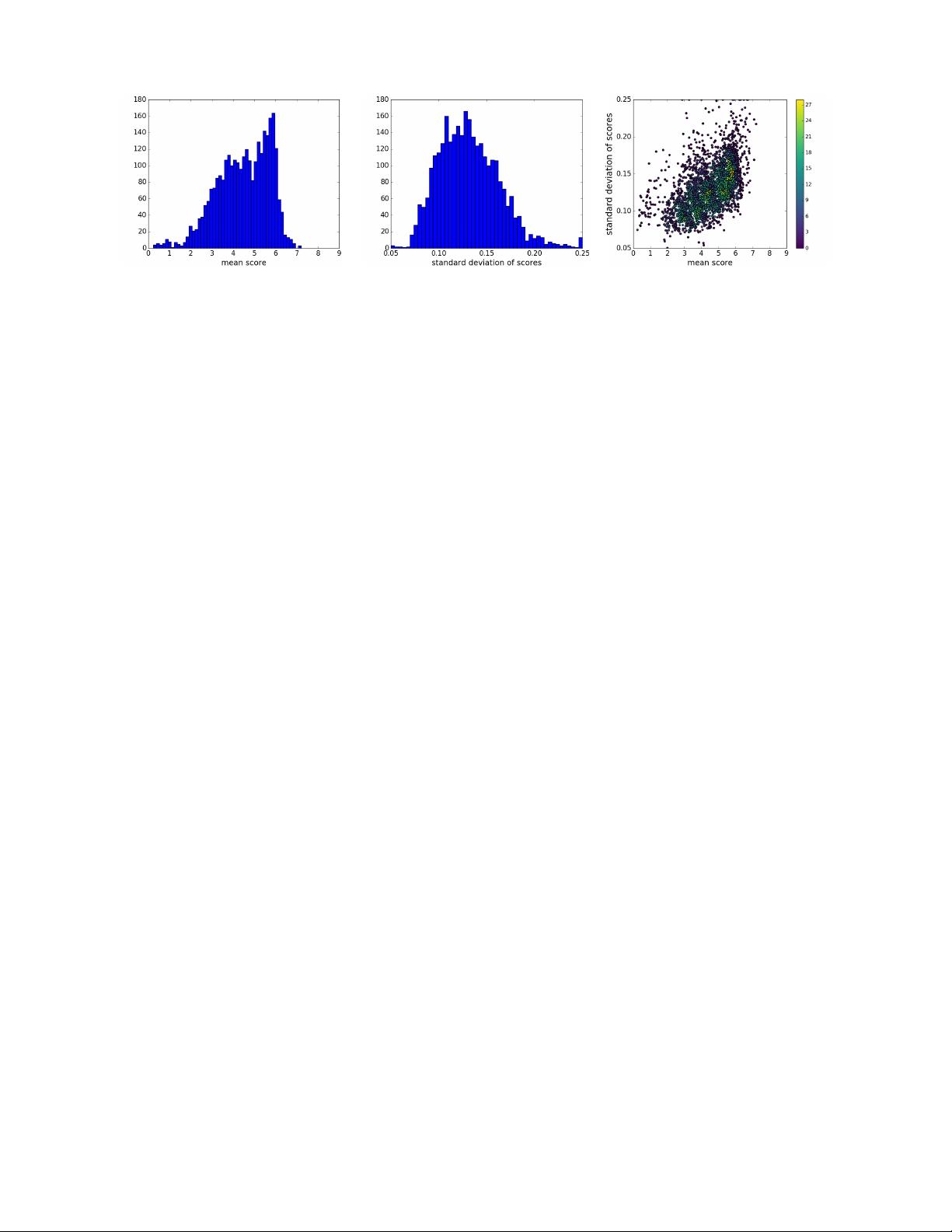

Fig. 3: Histograms of ratings from the TID2013 dataset [2]. Left: histogram of mean scores. Middle: histogram of standard deviations. Right: joint histogram of the mean and standard deviation.

D. Tampere Image Database 2013 (TID2013) [2]

TID2013 is curated for the evaluation of full-reference perceptual image quality. It contains 3000 images generated from 25 reference (clean) images (Kodak images [20]) with 24 types of distortions at 5 levels each. This yields 24 × 5 = 120 distorted images per reference image (25 × 120 = 3000 in total), covering distortion types such as compression artifacts, noise, blur, and color artifacts.

Human ratings of TID2013 images are collected through a forced-choice experiment, in which observers select the better of two distorted images. The experimental setup allows raters to view the reference image while making a decision. In each experiment, every distorted image is used in 9 random pairwise comparisons. The selected image gets one point, and the other image gets zero points. At the end of the experiment, the sum of the points is used as the quality score associated with an image, so scores range from 0 to 9. To obtain the overall mean scores, a total of 985 experiments are carried out.
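The scoring rule above can be sketched as follows. This is a minimal illustration, not the TID2013 tooling: the function names are hypothetical, how pairs are drawn is left to the caller, and using the population standard deviation across experiments is an assumption (the text does not say which estimator is used).

```python
from statistics import mean, pstdev


def tally_points(images, comparisons):
    """Score one forced-choice experiment.

    images:      identifiers of the distorted images in the experiment
    comparisons: (winner, loser) pairs; the selected image gets one point,
                 the other image gets zero points
    """
    points = {img: 0 for img in images}
    for winner, loser in comparisons:
        if winner not in points or loser not in points:
            raise KeyError(f"unknown image in pair ({winner!r}, {loser!r})")
        points[winner] += 1
    return points


def aggregate(experiments):
    """Per-image mean and (population) std of scores over several experiments."""
    summary = {}
    for img in experiments[0]:
        scores = [exp[img] for exp in experiments]
        summary[img] = (mean(scores), pstdev(scores))
    return summary


# With 9 comparisons per image, each per-experiment score lies in 0..9.
exp1 = tally_points(["a", "b", "c"], [("a", "b"), ("a", "c"), ("b", "c")])
print(exp1)  # {'a': 2, 'b': 1, 'c': 0}
```

Averaging the per-experiment scores over many experiments (985 in TID2013) gives each image's mean opinion score and its spread.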

The mean and standard deviation of TID2013 ratings are shown in Fig. 3. As can be seen in Fig. 3(c), the mean and standard deviation values are weakly correlated. A few images from TID2013 are illustrated in Fig. 4 and Fig. 5. All five levels of JPEG compression artifacts and the respective ratings are illustrated in Fig. 4. Evidently, a higher distortion level leads to a lower mean score². The effect of contrast compression/stretching distortion on the human ratings is demonstrated in Fig. 5. Interestingly, contrast stretching (Fig. 5(c) and Fig. 5(e)) leads to relatively higher perceptual quality.

Unlike AVA, which includes the distribution of ratings for each image, TID2013 only provides the mean and standard deviation of the opinion scores. Since our proposed method requires training on score probabilities, the score distributions are approximated through maximum entropy optimization [21].
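A minimal sketch of such a maximum entropy approximation follows, assuming the constraints are the reported mean and standard deviation on the TID2013 score buckets 0 to 9. Under those two constraints the maximum entropy pmf has the form $p_{s_i} \propto \exp(\lambda_1 s_i + \lambda_2 s_i^2)$, and the multipliers can be found with Newton's method on the convex dual. The function name and solver details are our own choices, not necessarily those of [21]; the sketch assumes $\sigma > 0$ and a $(\mu, \sigma)$ pair that is attainable on the support.

```python
import math


def max_entropy_pmf(mu, sigma, support=range(10), iters=100, tol=1e-9):
    """Max-entropy pmf on `support` whose mean is mu and std is sigma.

    The solution has the form p_i ∝ exp(l1 * s_i + l2 * s_i**2). The Lagrange
    multipliers (l1, l2) minimise the convex dual
        log Z(l1, l2) - l1 * mu - l2 * (sigma**2 + mu**2),
    which we do with Newton steps plus a backtracking line search.
    """
    s = list(support)
    m2 = sigma ** 2 + mu ** 2  # required second moment E[s^2]

    def pmf_and_log_z(a, b):
        logits = [a * x + b * x * x for x in s]
        top = max(logits)  # shift for numerical stability
        w = [math.exp(z - top) for z in logits]
        total = sum(w)
        return [v / total for v in w], top + math.log(total)

    def dual(a, b):
        return pmf_and_log_z(a, b)[1] - a * mu - b * m2

    def moment(p, k):
        return sum(pi * x ** k for pi, x in zip(p, s))

    l1 = l2 = 0.0  # start from the uniform distribution
    for _ in range(iters):
        p, _ = pmf_and_log_z(l1, l2)
        e1, e2, e3, e4 = (moment(p, k) for k in (1, 2, 3, 4))
        g1, g2 = e1 - mu, e2 - m2  # dual gradient = moment mismatch
        if abs(g1) < tol and abs(g2) < tol:
            break
        # Dual Hessian = covariance matrix of (s, s^2) under p.
        h11, h12, h22 = e2 - e1 * e1, e3 - e1 * e2, e4 - e2 * e2
        det = h11 * h22 - h12 * h12
        d1 = -(h22 * g1 - h12 * g2) / det  # Newton direction -H^{-1} g
        d2 = -(h11 * g2 - h12 * g1) / det
        step, f0, slope = 1.0, dual(l1, l2), g1 * d1 + g2 * d2
        # Halve the step until the Armijo sufficient-decrease test passes.
        while dual(l1 + step * d1, l2 + step * d2) > f0 + 1e-4 * step * slope and step > 1e-10:
            step *= 0.5
        l1, l2 = l1 + step * d1, l2 + step * d2
    return pmf_and_log_z(l1, l2)[0]


p = max_entropy_pmf(mu=5.0, sigma=2.0)
print([round(v, 3) for v in p])
```

The exponential-family form follows from the Lagrangian of the entropy objective; if no solution exists for the given moments, the loop simply stops after `iters` steps.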

² This is a fairly consistent trend for most of the other distortions too (namely noise, blur, and color distortions). However, in the case of contrast change (Fig. 5), this trend is not obvious. This is due to the order of contrast compression/stretching from level 1 to level 5.

II. PROPOSED METHOD

Our proposed quality and aesthetic predictor builds on image classifier architectures. More explicitly, we explore a few different classifier architectures, such as VGG16 [17], Inception-v2 [22], and MobileNet [23], for the image quality assessment task. VGG16 consists of 13 convolutional and 3 fully-connected layers, and uses small convolution filters of size 3 × 3 throughout its deep architecture [17]. Inception-v2 [22] is based on the Inception module [24], which allows for the parallel use of convolution and pooling operations. Also, in the Inception architecture, traditional fully-connected layers are replaced by average pooling, which leads to a significant reduction in the number of parameters. MobileNet [23] is an efficient deep CNN, mainly designed for mobile vision applications. In this architecture, dense convolutional filters are replaced by depthwise separable convolutions. This simplification results in smaller and faster CNN models.
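The parameter savings mentioned above can be made concrete with simple counting. The helper names below are hypothetical and biases are ignored. The layer shapes are illustrative: a 7 × 7 × 512 feature map is what VGG16 produces for a 224 × 224 input, while the 4096-unit and 256-channel sizes are just examples.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a dense k x k convolution mapping c_in to c_out channels."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """One k x k depthwise filter per input channel, then a 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


def flatten_dense_params(h, w, c, units):
    """Fully-connected layer applied to a flattened h x w x c feature map."""
    return h * w * c * units


def gap_dense_params(c, units):
    """Global average pooling (no weights) followed by a c -> units layer."""
    return c * units


print(standard_conv_params(3, 256, 256))        # 589824
print(depthwise_separable_params(3, 256, 256))  # 67840
print(flatten_dense_params(7, 7, 512, 4096))    # 102760448
print(gap_dense_params(512, 4096))              # 2097152
```

For these example sizes the separable layer needs roughly 8.7× fewer weights, and pooling before the dense layer removes the spatial factor h × w entirely.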

We replaced the last layer of the baseline CNN with a fully-connected layer with 10 neurons followed by soft-max activations (shown in Fig. 6). Baseline CNN weights are initialized by training on the ImageNet dataset [15], and then end-to-end training on quality assessment is performed. In this paper, we discuss the performance of the proposed model with various baseline CNNs.
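The replaced last layer can be sketched in plain Python as below. This assumes the baseline CNN has already produced a pooled feature vector; the feature size of 1024 and the random initialization are hypothetical, and training (backpropagation through the baseline CNN) is not shown.

```python
import math
import random

NUM_BUCKETS = 10  # one neuron per score bucket


def softmax(logits):
    """Numerically stable soft-max: subtract the max logit before exponentiating."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def quality_head(features, weights, bias):
    """Fully-connected layer with NUM_BUCKETS outputs followed by soft-max."""
    if len(weights) != NUM_BUCKETS or len(bias) != NUM_BUCKETS:
        raise ValueError("expected one weight row and one bias per bucket")
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Hypothetical example: a 1024-d feature vector and a randomly initialised head.
rng = random.Random(0)
dim = 1024
features = [rng.random() for _ in range(dim)]
weights = [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(NUM_BUCKETS)]
bias = [0.0] * NUM_BUCKETS
probs = quality_head(features, weights, bias)
print(len(probs), round(sum(probs), 6))  # 10 1.0
```

Because of the soft-max, the 10 outputs are non-negative and sum to one, so they can be read directly as a predicted distribution over score buckets.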

In training, input images are rescaled to 256 × 256, and then a crop of size 224 × 224 is randomly extracted. This lessens potential over-fitting issues, especially when training on relatively small datasets (e.g., TID2013). It is worth noting that we also tried training with random crops without rescaling; however, the results were not compelling. We attribute this to the inevitable change in image composition. Another random data augmentation in our training process is horizontal flipping of the image crops.
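The augmentation steps described above might look like the sketch below, with an image represented as a list of pixel rows. Nearest-neighbour interpolation and a flip probability of 0.5 are assumptions; the text only states that images are rescaled to 256 × 256, randomly cropped to 224 × 224, and horizontally flipped. The function names are hypothetical.

```python
import random


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of an H x W grid given as a list of rows."""
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def augment(image, rescale=256, crop=224, rng=random):
    """Rescale, take a random crop, then flip horizontally with probability 0.5."""
    resized = resize_nearest(image, rescale, rescale)
    top = rng.randint(0, rescale - crop)   # randint is inclusive on both ends
    left = rng.randint(0, rescale - crop)
    patch = [row[left:left + crop] for row in resized[top:top + crop]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]  # mirror each row
    return patch


img = [[(r, c) for c in range(400)] for r in range(300)]  # dummy 300 x 400 image
patch = augment(img, rng=random.Random(0))
print(len(patch), len(patch[0]))  # 224 224
```

Rescaling first keeps the whole scene inside the 256 × 256 frame, so each crop loses at most a thin border, which matches the motivation given for not cropping the original-resolution image directly.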

Our goal is to predict the distribution of ratings for a given image. The ground truth distribution of human ratings of a given image can be expressed as an empirical probability mass function $\mathbf{p} = [p_{s_1}, \ldots, p_{s_N}]$ with $s_1 \le s_i \le s_N$, where $s_i$ denotes the $i$th score bucket and $N$ denotes the total number of score buckets. In both the AVA and TID2013 datasets $N = 10$: in AVA, $s_1 = 1$ and $s_N = 10$, and in TID2013, $s_1 = 0$ and $s_N = 9$. Since $\sum_{i=1}^{N} p_{s_i} = 1$, $p_{s_i}$ represents the probability of a quality score falling in the $i$th bucket. Given the distribution of ratings $\mathbf{p}$, the mean quality score is defined as $\mu = \sum_{i=1}^{N} s_i \times p_{s_i}$, and the standard deviation of the score is computed as $\sigma = \left( \sum_{i=1}^{N} (s_i - \mu)^2 \times p_{s_i} \right)^{1/2}$. As discussed in the previous section, one can qualitatively compare images by the mean and standard deviation of their scores.
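The definitions of $\mu$ and $\sigma$ translate directly into code. This is a minimal sketch with hypothetical names; the example pmf is made up for illustration and uses the AVA buckets $s_i = 1, \ldots, 10$ (for TID2013 the buckets would be $0, \ldots, 9$).

```python
import math

AVA_SCORES = list(range(1, 11))      # s_1 = 1, ..., s_N = 10
TID2013_SCORES = list(range(0, 10))  # s_1 = 0, ..., s_N = 9


def mean_and_std(p, scores):
    """Return (mu, sigma) of a rating pmf p over the score buckets `scores`."""
    if len(p) != len(scores):
        raise ValueError("p and scores must have the same length N")
    if abs(sum(p) - 1.0) > 1e-6:
        raise ValueError("p must sum to 1")
    mu = sum(s * ps for s, ps in zip(scores, p))
    sigma = math.sqrt(sum((s - mu) ** 2 * ps for s, ps in zip(scores, p)))
    return mu, sigma


# Hypothetical example: half of the raters chose 5 and half chose 7 on the AVA scale.
p = [0, 0, 0, 0, 0.5, 0, 0.5, 0, 0, 0]
print(mean_and_std(p, AVA_SCORES))  # (6.0, 1.0)
```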

Each example in the dataset consists of an image and its ground truth (user) ratings $\mathbf{p}$. Our objective is to find the