R. Li, Z. Liu and J. Tan / Pattern Recognition 93 (2019) 251–272 255

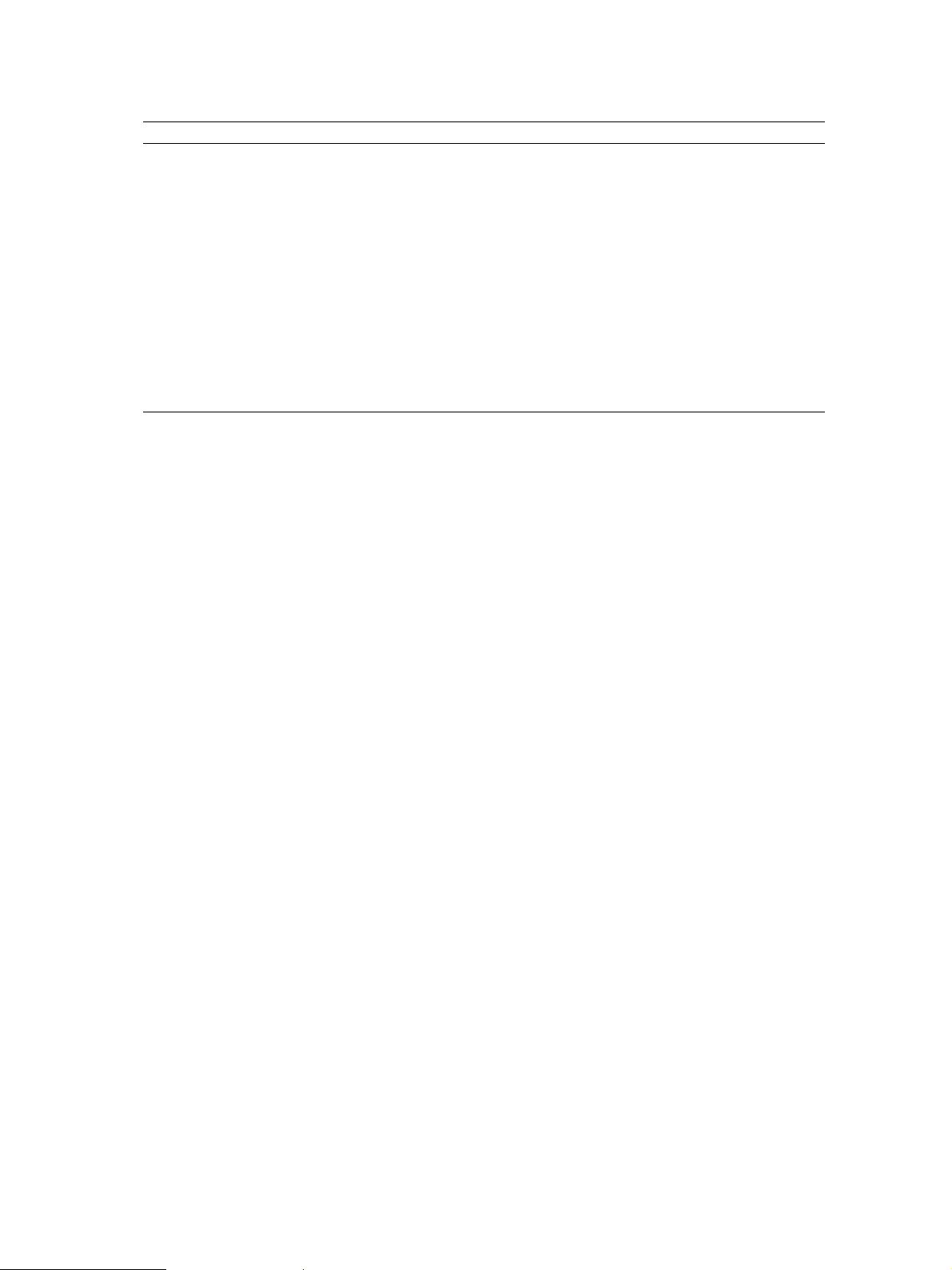

Table 1

Popular commercial depth cameras.

| Camera | Model | Release date | Discontinued | Depth technology | Range | Max depth fps |
|---|---|---|---|---|---|---|
| Microsoft Kinect | 1st generation | 2010 | Yes | Structured light | 0.5–4.5 m | 30 |
| | 2nd generation | 2014 | Yes | ToF | 0.5–4.5 m | 30 |
| ASUS Xtion | PRO LIVE | 2012 | Yes | Structured light | 0.8–3.5 m | 60 |
| | 2 | 2017 | Yes | Structured light | 0.8–3.5 m | 30 |
| Leap Motion (updated on December 20, 2018) | – | 2013 | No | Dual IR stereo vision | 0.03–0.6 m | 200 |
| Intel RealSense | F200 | 2014 | Yes | Structured light | 0.2–1.2 m | 60 |
| | R200 | 2015 | No | Structured light | 0.5–3.5 m | 60 |
| | LR200 | 2016 | Yes | Structured light | 0.5–3.5 m | 60 |
| | SR300 | 2016 | No | Structured light | 0.3–2 m | 30 |
| | ZR300 | 2017 | Yes | Structured light | 0.5–3.5 m | 60 |
| | D415 | 2018 | No | Structured light | 0.16–10 m | 90 |
| | D435 | 2018 | No | Structured light | 0.11–10 m | 90 |
| SoftKinetic | DS311 | 2011 | Yes | ToF | 0.15–4.5 m | 60 |
| | DS325 | 2012 | Yes | ToF | 0.15–1 m | 60 |
| | DS525 | 2013 | Yes | ToF | 0.15–1 m | 60 |
| | DS536A | 2015 | Yes | ToF | 0.1–5 m | 60 |
| | DS541A | 2016 | Yes | ToF | 0.1–5 m | 60 |
| Creative Interactive Gesture | – | 2012 | Yes | ToF | 0.15–1 m | 60 |
| Structure Sensor (updated on July 24, 2018) | – | 2013 | No | Structured light | 0.4–3.5 m | 60 |

map that encodes the difference in horizontal coordinates of the corresponding image points. The values in the disparity map are inversely proportional to the scene depth at the corresponding pixel location. Because of its sensitivity to illumination and texture, this type of depth camera is rarely used for hand pose estimation.
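The inverse relation between disparity and depth can be made concrete with the standard rectified pinhole stereo model, in which depth is Z = f · B / d for focal length f (in pixels), baseline B (in meters), and disparity d (in pixels). The sketch below assumes a rectified stereo pair and treats non-positive disparities as pixels without a valid match; the function name and the focal length and baseline values are hypothetical, not parameters of any camera in Table 1.

```python
import math

def disparity_to_depth(disparity, focal_px, baseline_m, min_disp=1e-6):
    """Convert a disparity map (pixels) to a depth map (meters) via Z = f * B / d.

    `disparity` is a list of rows. Pixels whose disparity is at or below
    `min_disp` have no valid stereo match and are marked as NaN.
    Assumes a rectified stereo pair (hypothetical setup for illustration).
    """
    return [
        [focal_px * baseline_m / d if d > min_disp else math.nan for d in row]
        for row in disparity
    ]

# Hypothetical rig: 600 px focal length, 5 cm baseline, so f * B = 30 px*m.
depth = disparity_to_depth([[30.0, 15.0], [0.0, 60.0]], focal_px=600.0, baseline_m=0.05)
# Halving the disparity doubles the depth: 30 px -> 1.0 m, 15 px -> 2.0 m,
# 60 px -> 0.5 m; the zero-disparity pixel becomes NaN.
print(depth)
```

The same relation explains why stereo depth resolution degrades with distance: at large Z, a one-pixel disparity error corresponds to a much larger depth error than at small Z.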

It is difficult to say which type of camera works best for hand pose estimation, because performance also depends on environmental factors and application scenarios. Sridhar et al. [56] validated the effectiveness of their method with the Creative Interactive Gesture, Intel RealSense, and PrimeSense Carmine. In [57], Sridhar et al. published a benchmark dataset captured with the Creative Interactive Gesture and Kinect v1. Melax et al. [58] and Supancic et al. [30] used the ASUS Xtion and the Creative Interactive Gesture.

There is no strict universal rule for selecting the most appropriate camera; the choice depends mainly on the nature of the problem. From Table 1, we can see that the Intel RealSense series is suitable for mid-range and long-range applications, the Leap Motion for short-range applications, and the Structure Sensor for mobile applications. Cameras such as the Microsoft Kinect, ASUS Xtion, SoftKinetic, and Creative Interactive Gesture have been discontinued. From the point of view of long-term maintenance and updates, these cameras are less attractive than the other depth cameras.
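The selection reasoning above can be expressed as a simple filter over Table 1. The sketch below is illustrative only: it transcribes the rated ranges, frame rates, and discontinuation status from Table 1 and keeps the cameras whose nominal range covers a required working interval. The `candidates` function and its criteria are hypothetical; rated ranges are vendor figures and say nothing about hand pose accuracy at those distances.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DepthCamera:
    name: str
    technology: str
    min_range_m: float
    max_range_m: float
    max_fps: int
    discontinued: bool

# Rows transcribed from Table 1 (nominal vendor specifications).
CAMERAS = [
    DepthCamera("Microsoft Kinect v1", "Structured light", 0.5, 4.5, 30, True),
    DepthCamera("Microsoft Kinect v2", "ToF", 0.5, 4.5, 30, True),
    DepthCamera("ASUS Xtion PRO LIVE", "Structured light", 0.8, 3.5, 60, True),
    DepthCamera("ASUS Xtion 2", "Structured light", 0.8, 3.5, 30, True),
    DepthCamera("Leap Motion", "Dual IR stereo vision", 0.03, 0.6, 200, False),
    DepthCamera("Intel RealSense F200", "Structured light", 0.2, 1.2, 60, True),
    DepthCamera("Intel RealSense R200", "Structured light", 0.5, 3.5, 60, False),
    DepthCamera("Intel RealSense LR200", "Structured light", 0.5, 3.5, 60, True),
    DepthCamera("Intel RealSense SR300", "Structured light", 0.3, 2.0, 30, False),
    DepthCamera("Intel RealSense ZR300", "Structured light", 0.5, 3.5, 60, True),
    DepthCamera("Intel RealSense D415", "Structured light", 0.16, 10.0, 90, False),
    DepthCamera("Intel RealSense D435", "Structured light", 0.11, 10.0, 90, False),
    DepthCamera("SoftKinetic DS311", "ToF", 0.15, 4.5, 60, True),
    DepthCamera("SoftKinetic DS325", "ToF", 0.15, 1.0, 60, True),
    DepthCamera("SoftKinetic DS525", "ToF", 0.15, 1.0, 60, True),
    DepthCamera("SoftKinetic DS536A", "ToF", 0.1, 5.0, 60, True),
    DepthCamera("SoftKinetic DS541A", "ToF", 0.1, 5.0, 60, True),
    DepthCamera("Creative Interactive Gesture", "ToF", 0.15, 1.0, 60, True),
    DepthCamera("Structure Sensor", "Structured light", 0.4, 3.5, 60, False),
]

def candidates(near_m, far_m, min_fps=0, include_discontinued=False):
    """Hypothetical helper: names of cameras whose rated range covers
    [near_m, far_m] and whose maximum depth frame rate is at least min_fps.
    Discontinued models are excluded unless include_discontinued is True."""
    return [
        c.name
        for c in CAMERAS
        if c.min_range_m <= near_m
        and c.max_range_m >= far_m
        and c.max_fps >= min_fps
        and (include_discontinued or not c.discontinued)
    ]

# Short-range interaction (5-50 cm): only the Leap Motion qualifies.
print(candidates(0.05, 0.5))
# Room-scale tracking (0.5-3 m) at >= 90 fps: the RealSense D415 and D435.
print(candidates(0.5, 3.0, min_fps=90))
```

A real selection would add criteria that Table 1 does not cover, such as depth noise, field of view, and form factor, which is why the text treats the table as a starting point rather than a rule.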

2.2. Existing evaluation approaches

There has been extensive work on evaluating the performance of depth cameras in medical fields. Harkel et al. [59] tested the accuracy of the RealSense in a cohort of patients with unilateral facial palsy. House et al. [60] evaluated the RealSense for image-guided interventions and for vertebral level localization. Yeung et al. [61] evaluated the Kinect v1 as a clinical assessment tool for measuring total-body center-of-mass sway. Noonan et al. [62] evaluated the Kinect v1 for tracking the motion of a head phantom during head CT. Ferche et al. [63] used the Leap Motion and RealSense to support the rehabilitation of patients with upper-limb disabilities by providing them with augmented feedback in a dedicated virtual environment.

Numerous evaluation approaches can be found in other fields. Cree et al. [64] analyzed the precision of the SoftKinetic for range imaging. Jakus et al. [65] assessed the consistency and accuracy of the Leap Motion. Fankhauser et al. [66] analyzed the depth data quality of the Kinect v2 for mobile robot navigation, including under overcast conditions and in direct sunlight. Carfagni et al. [67] performed a metrological and critical characterization of the RealSense used as a 3D scanner. Yang et al. [68] obtained the accuracy distribution of the Kinect v2 through a cone model. Lachat et al. [69] provided an assessment and calibration method for the Kinect v2 with a view to its potential use in close-range 3D modeling. Corti et al. [70] presented a metrological characterization of the Kinect v2 that takes measuring conditions and environmental parameters into account. Breuer et al. [71] analyzed the measurement noise, accuracy, and error sources of the Kinect v2.

Some researchers focus on comparing different depth cameras. Zennaro et al. [72] compared the performance of the Kinect v1 and Kinect v2 to explain the effect of switching the depth sensing technology from structured light to ToF. Gonzalez-Jorge et al. [73] tested the accuracy and precision of the Kinect v1 and Kinect v2 using a standard artifact based on five spheres and seven cubes. Wasenmüller et al. [74] investigated the accuracy and precision of the Kinect v1 and Kinect v2 in the context of 3D reconstruction, SLAM, and visual odometry. Boehm et al. [75] studied the repeatability and accuracy of structured light cameras. Langmann [76] presented an assessment of depth cameras including the Kinect v1, ZESS MultiCam, PMDTec 3k-S, SoftKinetic, and PMDTec CamCube 41k.

Comparisons between depth cameras and other devices can also be found. Lima et al. [77] used the RealSense as an eye gaze tracker to estimate a user's gaze location and compared it with a specialized device, the Tobii EyeX. Seixas et al. [78] designed an experiment to study the performance of the Leap Motion in 2D pointing tasks and compared it with a mouse and a touchpad. Their experimental results indicated that the Leap Motion performed poorly.

To determine the accuracy of a depth camera, one needs ground-truth measurements that serve as a reference. Various high-precision measuring devices have been used in existing approaches, e.g., the Vicon motion capture system [61], AGPtek Handheld Digital Laser Point Distance Meter [68], tape measure [66], Polaris optical tracker [62], FARO Focus terrestrial laser scanner [69], coordinate measurement machine [67,73], clinical 3dMD system [59], NextEngine scanner [72], and Qualisys motion capture system [65,79].
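Once such a reference is available, accuracy and precision are usually summarized separately: accuracy as the systematic deviation of the readings from the reference, and precision as the spread of repeated readings. The sketch below follows that common metrology convention for repeated readings of one static target at a distance obtained from a reference device; the function name and the sample readings are hypothetical, and the cited studies may define their metrics differently.

```python
import statistics

def accuracy_and_precision(measured_m, ground_truth_m):
    """Summarize repeated depth readings of one static target (hypothetical helper).

    Returns (bias, rmse, precision):
      bias      -- mean signed error w.r.t. the reference distance (accuracy)
      rmse      -- root-mean-square error w.r.t. the reference distance
      precision -- sample standard deviation of the readings (repeatability)
    """
    if len(measured_m) < 2:
        raise ValueError("need at least two readings to estimate precision")
    errors = [m - ground_truth_m for m in measured_m]
    bias = statistics.mean(errors)
    rmse = (sum(e * e for e in errors) / len(errors)) ** 0.5
    precision = statistics.stdev(measured_m)
    return bias, rmse, precision

# Hypothetical readings of a target whose reference distance is 1.00 m.
readings = [1.03, 1.01, 1.02, 1.02]
bias, rmse, precision = accuracy_and_precision(readings, 1.00)
# A consistent +2 cm offset (poor accuracy) with a small spread (good precision).
print(f"bias={bias:.4f} m, rmse={rmse:.4f} m, precision={precision:.4f} m")
```

Separating the two quantities matters because a constant bias can often be removed by calibration, whereas a large spread cannot.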

Existing approaches mainly evaluate how well a camera measures fixed spatial positions. Such evaluations essentially characterize the camera's static properties. In contrast,