to computer vision, was only 74.3%. Then in 2012, a team led by Alex Krizhevsky and advised by Geoffrey Hinton was able to achieve a top-5 accuracy of 83.6%, a significant breakthrough. The competition has been dominated by deep convolutional neural networks every year since. By 2015, the winning accuracy had reached 96.4%, and the classification task on ImageNet was considered to be a completely solved problem.

Since 2012, deep convolutional neural networks ("convnets") have become the go-to algorithm for all computer vision tasks, and more generally for all perceptual tasks. At major computer vision conferences in 2015 and 2016, it was nearly impossible to find presentations that did not involve convnets in some form. At the same time, deep learning has also found applications in many other types of problems, such as natural language processing. It has completely replaced SVMs and decision trees in a wide range of applications. For instance, for several years, the European Organization for Nuclear Research, CERN, used decision tree-based methods to analyze particle data from the ATLAS detector at the Large Hadron Collider (LHC), but eventually switched to Keras-based deep neural networks due to their higher performance and ease of training on large datasets.

The primary reason deep learning took off so quickly is that it offered better performance on many problems. But that’s not the only reason. Deep learning also makes problem-solving much easier, because it completely automates what used to be the most crucial step in a machine learning workflow: "feature engineering".

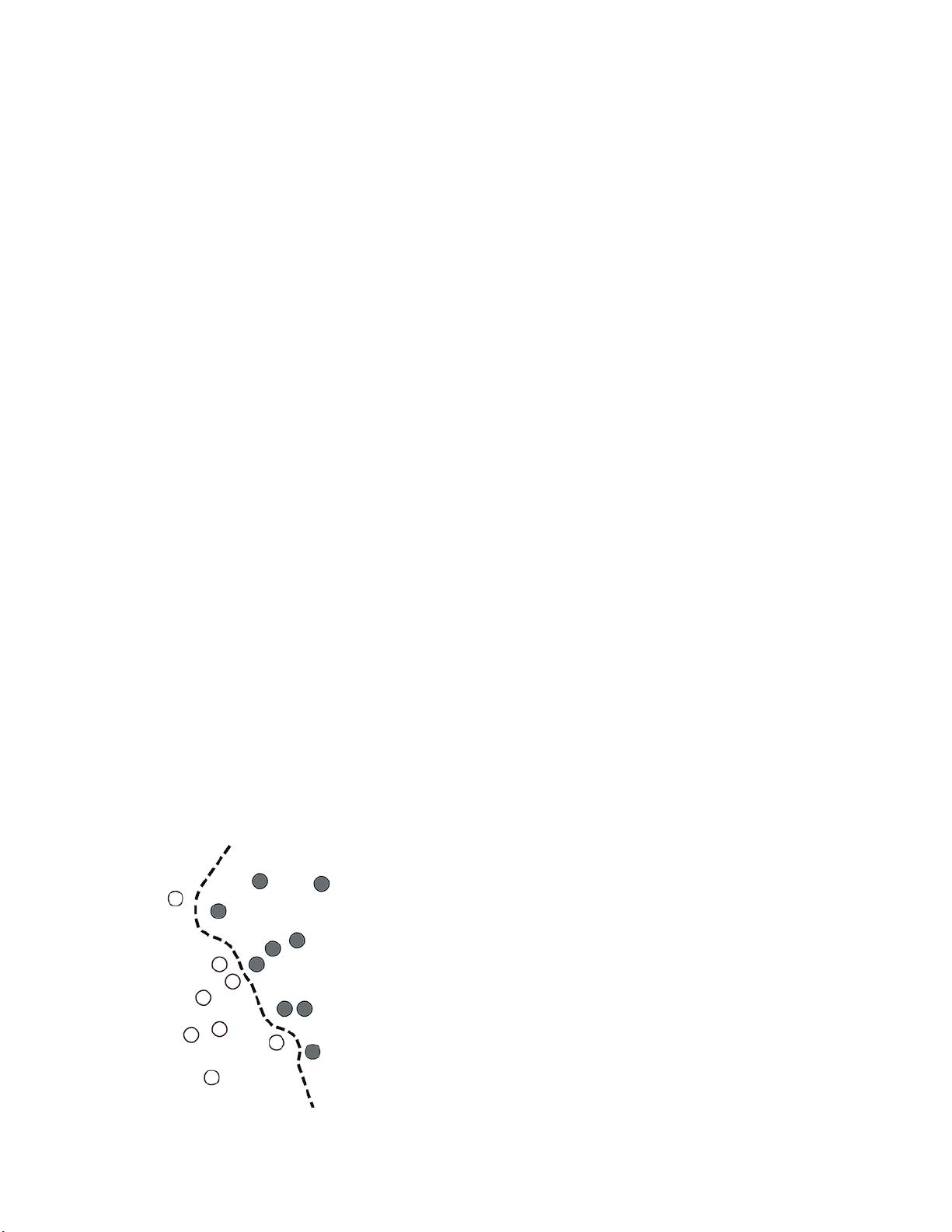

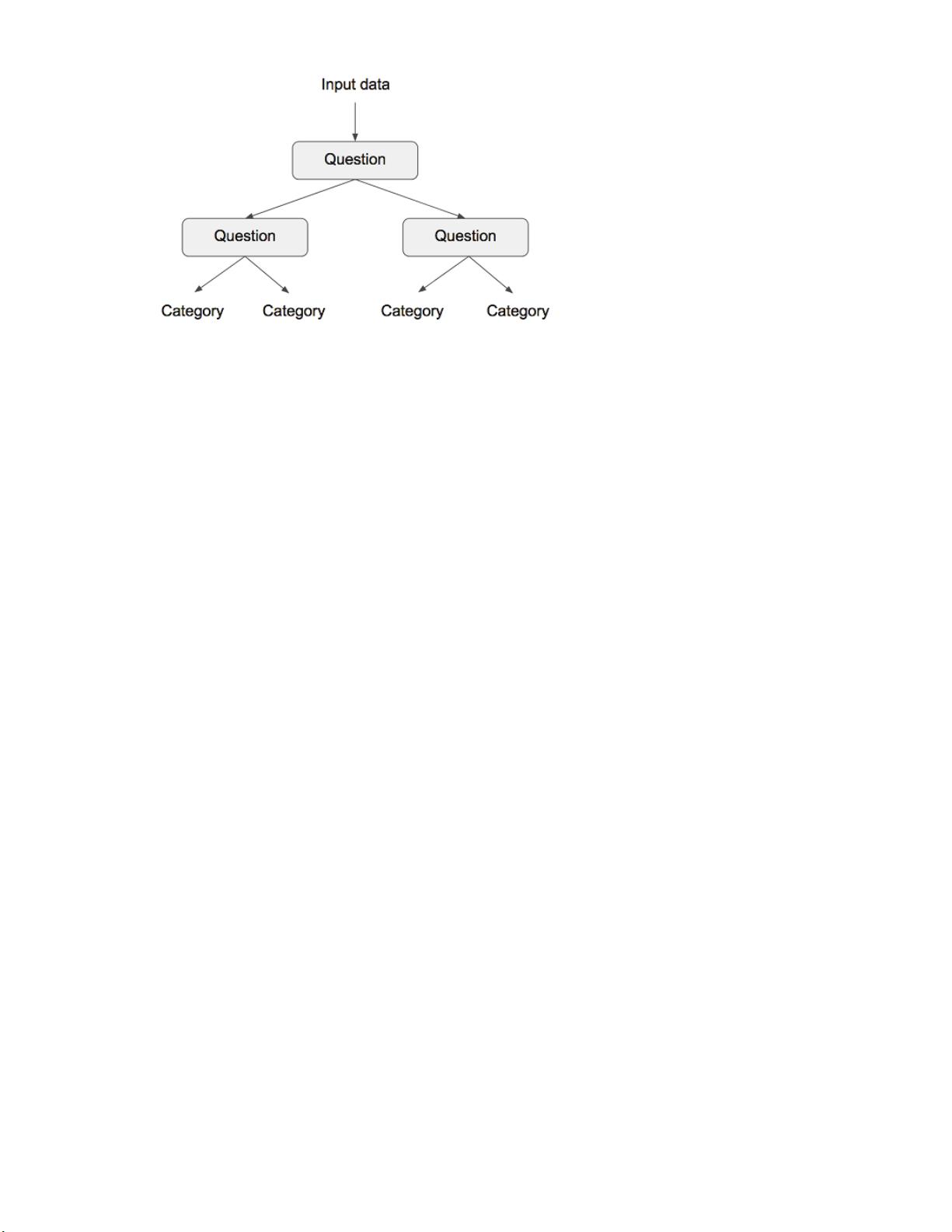

Previous machine learning techniques, known as "shallow" learning, only involved transforming the input data into one or two successive representation spaces, usually via very simple transformations such as high-dimensional non-linear projections (SVMs) or decision trees. But the refined representations required by complex problems generally cannot be attained by such techniques. As such, humans had to go to great lengths to make the initial input data more amenable to processing by these methods, i.e. they had to manually engineer good layers of representations for their data. This is what is called "feature engineering". Deep learning, on the other hand, completely automates this step: with deep learning, you learn all features in one pass rather than having to engineer them yourself. This has greatly simplified machine learning workflows, often replacing very sophisticated multi-stage pipelines with a single, simple, end-to-end deep learning model.
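As a toy illustration of feature engineering (not taken from the text), the sketch below labels random 2D points by whether they fall inside the unit circle. A depth-1 decision tree (a "stump", found here by brute-force search) struggles on the raw `x` or `y` coordinate, but separates the classes perfectly once it is given a hand-engineered feature, the squared radius `x*x + y*y`. The data, the function names `make_points` and `best_stump_accuracy`, and the choice of a stump as the shallow learner are all assumptions made for illustration.

```python
import random


def make_points(n, seed=0):
    """Sample n points in [-2, 2]^2; label 1 if inside the unit circle."""
    rng = random.Random(seed)
    points = [(rng.uniform(-2, 2), rng.uniform(-2, 2)) for _ in range(n)]
    labels = [1 if x * x + y * y < 1.0 else 0 for x, y in points]
    return points, labels


def best_stump_accuracy(values, labels):
    """Best training accuracy of a single-threshold rule on one feature."""
    best = 0.0
    n = len(labels)
    for t in values:
        # Rule A: predict class 1 when the feature is <= t.
        hits = sum((1 if v <= t else 0) == y for v, y in zip(values, labels))
        # Rule B is the opposite polarity, so its accuracy is 1 - hits / n.
        best = max(best, hits / n, 1 - hits / n)
    return best


points, labels = make_points(200)

# Raw features: the shallow learner sees each coordinate as given.
raw_x = [x for x, _ in points]
raw_y = [y for _, y in points]
raw_accuracy = max(best_stump_accuracy(raw_x, labels),
                   best_stump_accuracy(raw_y, labels))

# Engineered feature: a human supplies the squared radius.
radius_sq = [x * x + y * y for x, y in points]
engineered_accuracy = best_stump_accuracy(radius_sq, labels)

print(f"stump on raw coordinates:   {raw_accuracy:.2f}")
print(f"stump on engineered radius: {engineered_accuracy:.2f}")
```

The learner is identical in both runs; only the representation of the input changes. In this toy setting, the squared radius is the kind of feature a deep model would be expected to learn from the raw coordinates rather than have supplied by hand.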

You may ask: if the crux of the issue is to have multiple successive layers of representation, could shallow methods be applied repeatedly to emulate the effects of deep learning? In practice, there are fast-diminishing returns to successive application of shallow learning methods, because the optimal first representation layer in a 3-layer model is not the optimal first layer in a 1-layer or 2-layer model. What is transformative about deep learning is that it allows a model to learn all layers of representation jointly, at the same time, rather than in succession ("greedily", as it is called). With joint feature learning, whenever the model adjusts one of its internal features, all other features that depend on it will automatically adapt to the change, without requiring human

1.2.6 What makes deep learning different
