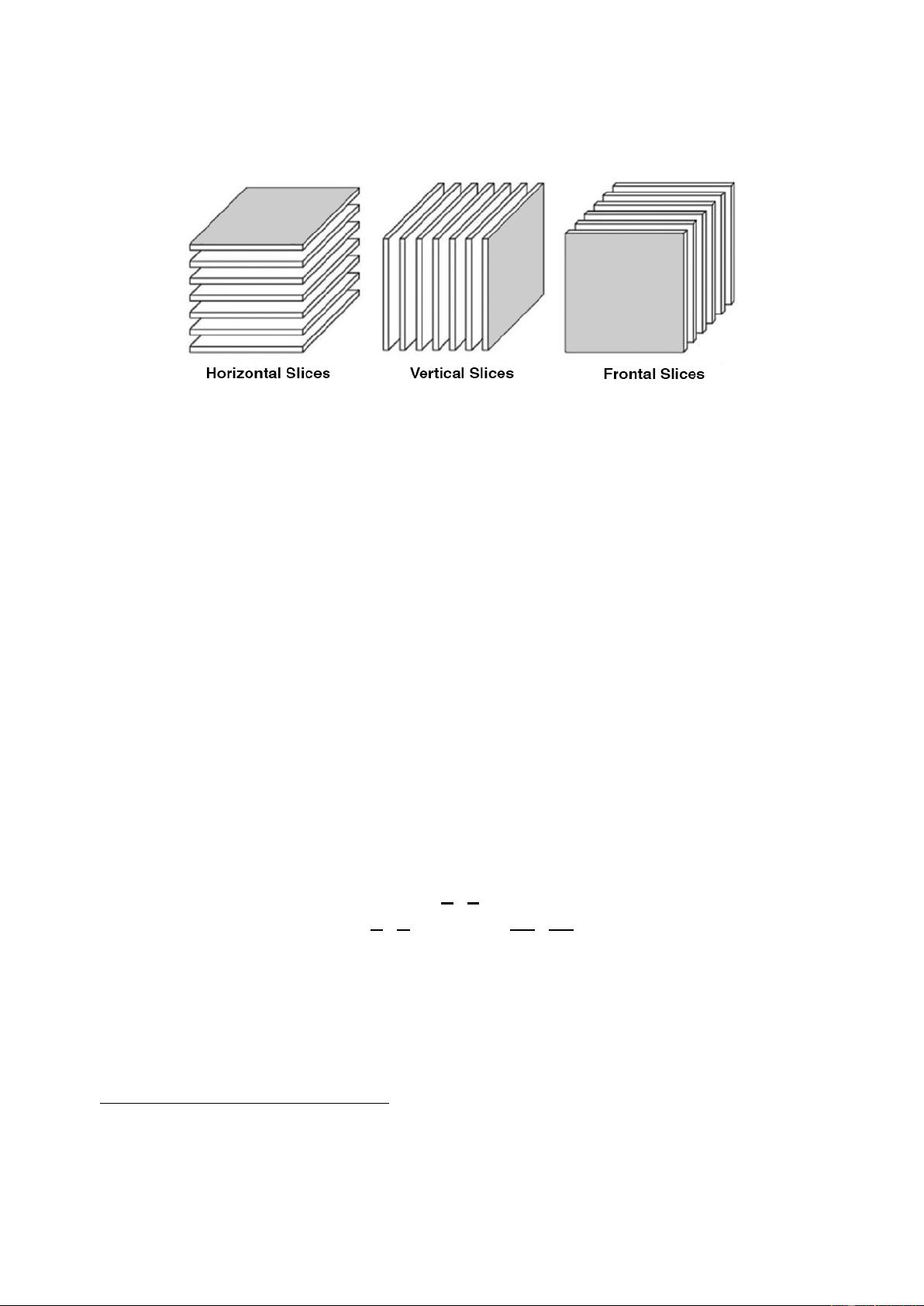

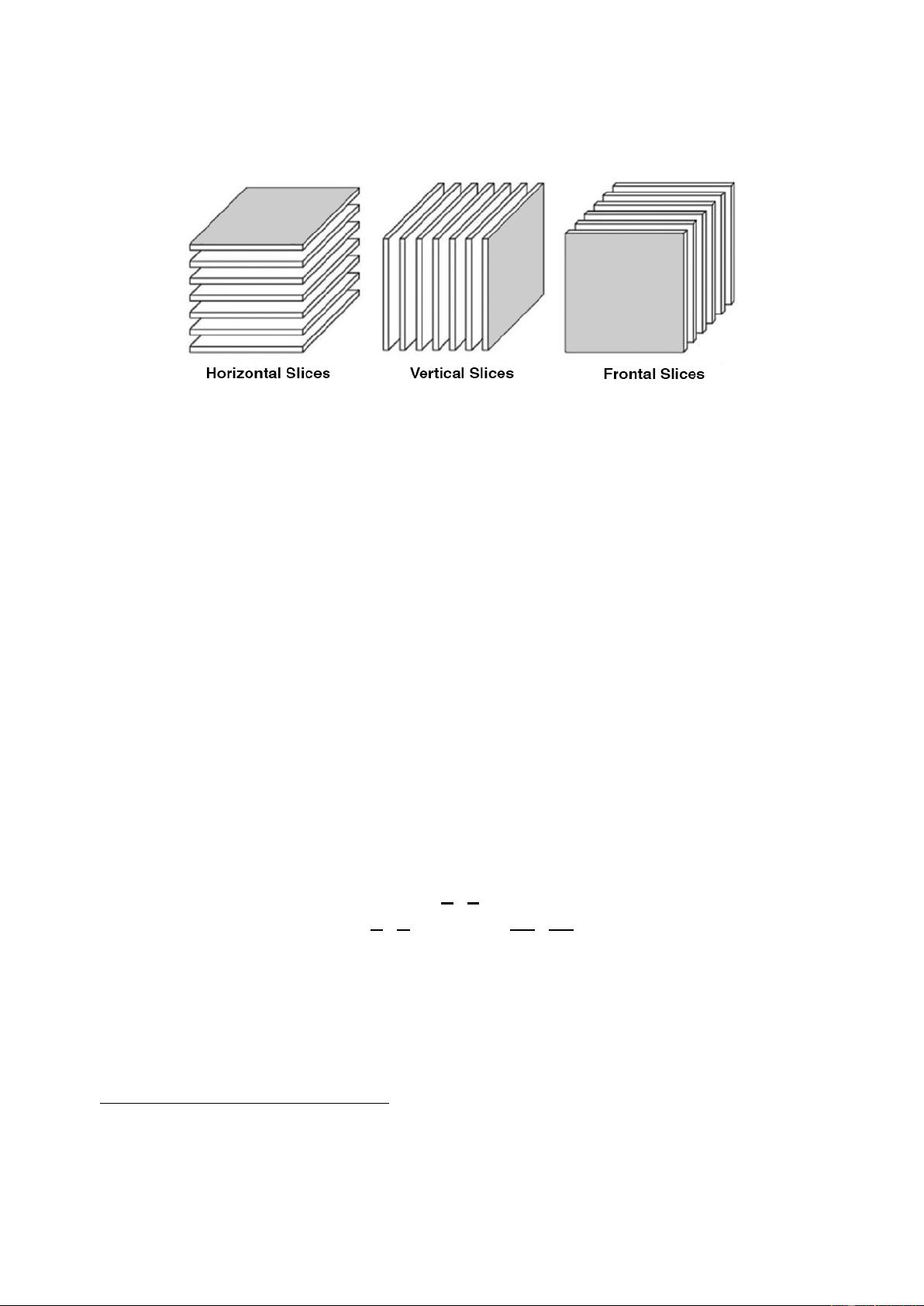

Figure 2: The similarity matrix C is used to compute the similarity of pairs of relations in the knowledge graph. Its i

th

frontal slice is the adjacency

matrix of the i

th

relation, i.e., a two-dimensional matrix with a row and column for each entity whose values are 1 if the relation holds for a pair

and 0 otherwise.

In our framework, we represent a multi-relational knowledge graph of N

r

binary relations among N

e

entities by

the order-3 tensor X of dimension N

e

× N

e

× N

r

. This binary tensor is often very large and sparse. Our goal is to

construct dense, informative p-dimensional embeddings, where p is much smaller than either the number of entities

or the number of relations. We represent the collection of p-dimensional entity embeddings by A, the collection of

relation embeddings by R, and the similarity matrix by C. The entity embeddings collection A contains matrices A

α

of size N

e

× p while the relation embeddings collection R contains matrices R

k

of size p × p. Recall that the frontal

slice X

k

of tensor X is the adjacency matrix of the k

th

binary relation, as shown in Figure 2. We use A ⊗B to denote

the Kronecker product of two matrices A and B, vec (B) to denote the vectorization of a matrix B, and a lower italic

letter like a to denote a scalar.

2

Mathematically, our objective is to reconstruct each of the k relation slices of X , X

k

, as the product

X

k

≈ A

α

R

k

A

|

β

. (1)

Recall that both A

α

and A

β

are matrices: each row is the embedding of an entity. By changing the exact form of

A—that is, the number of different entity matrices, or the different ways to index A—we can then arrive at different

models. These model variants encapsulate both mathematical and philosophical differences. In this paper, we specif-

ically study two cases. First, we examine the case of having only a single entity embedding matrix, represented as

A—that is, A

α

= A

β

= A. This results in a quadratic reconstruction problem, as we approximate X

k

≈ AR

k

A

|

.

Second, we examine the case of having two separate entity embedding matrices, represented as A

1

and A

2

. This

results in a reconstruction problem that is linear in the entity embeddings, as we approximate X

k

≈ A

1

R

k

A

|

2

.

We learn A

α

, A

β

, and R by minimizing the augmented reconstruction loss

min

A,R

f(A, R)

| {z }

reconstruction loss

+

numerical regularization of the embeddings

z }| {

g(A, R) + f

s

(A, R, C)

| {z }

knowledge-directed enrichment

. (2)

The first term of (2) reflects each of the k relational criteria given by (1). The second term employs standard numerical

regularization of the embeddings, such as Frobenius minimization, that enhances the algorithm’s numerical stability

and supports the interpretability of the resulting embeddings. The third term uses our similarity matrix C to enrich

the learning process with our extra knowledge.

We first discuss how we construct the similarity matrix C in Section 3.2 and then, starting in Section 3.3, describe

how the framework readily yields three novel embedding models, while also generalizing prior efforts. Throughout,

2

We use the standard tensor notations and definitions in Kolda and Bader [10]. Recall that the Kronecker product A ⊗ B of an (m

1

, n

1

)

matrix A and a (m

2

, n

2

) matrix B returns an (m

1

m

2

, n

1

n

2

) block matrix, where each element of A scales the entire matrix B.

6