
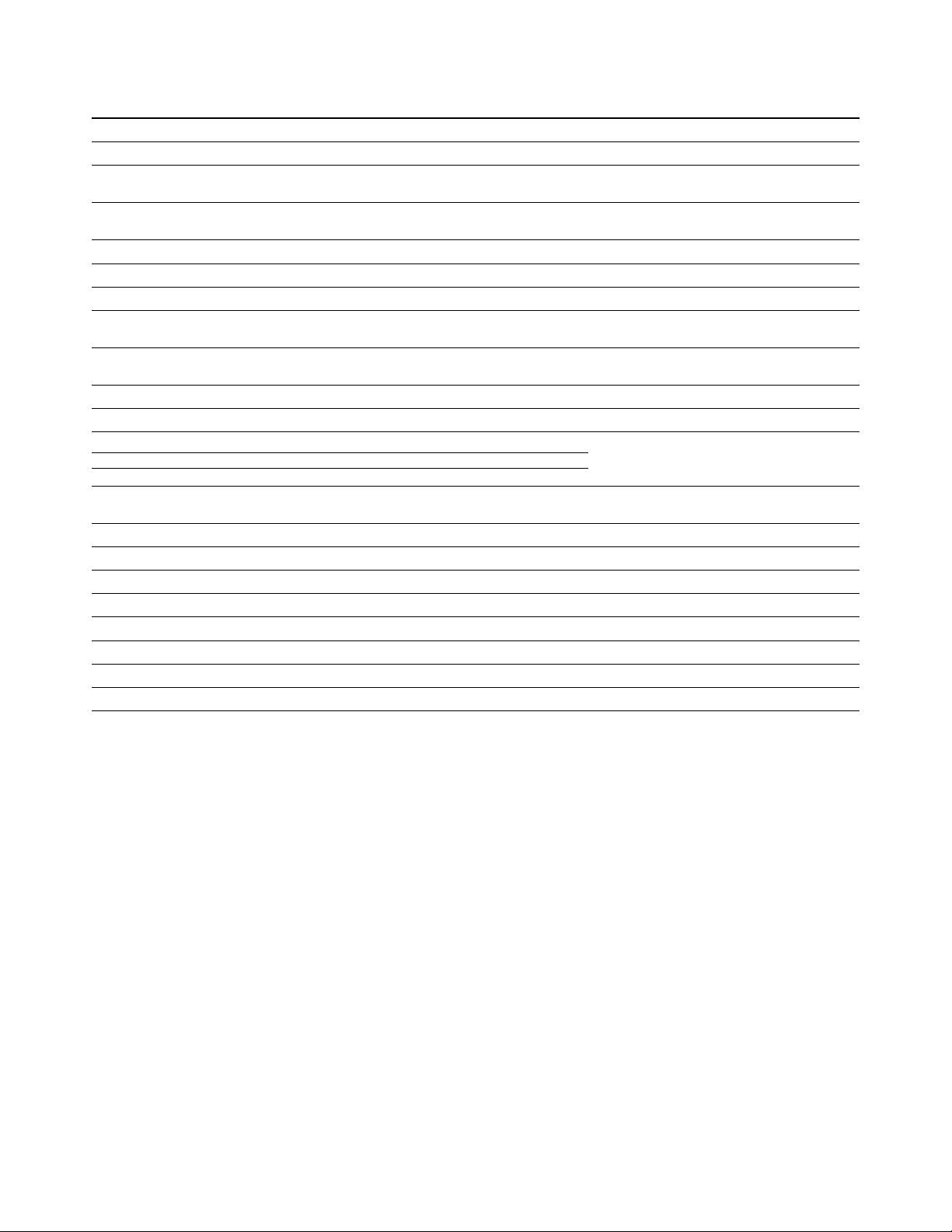

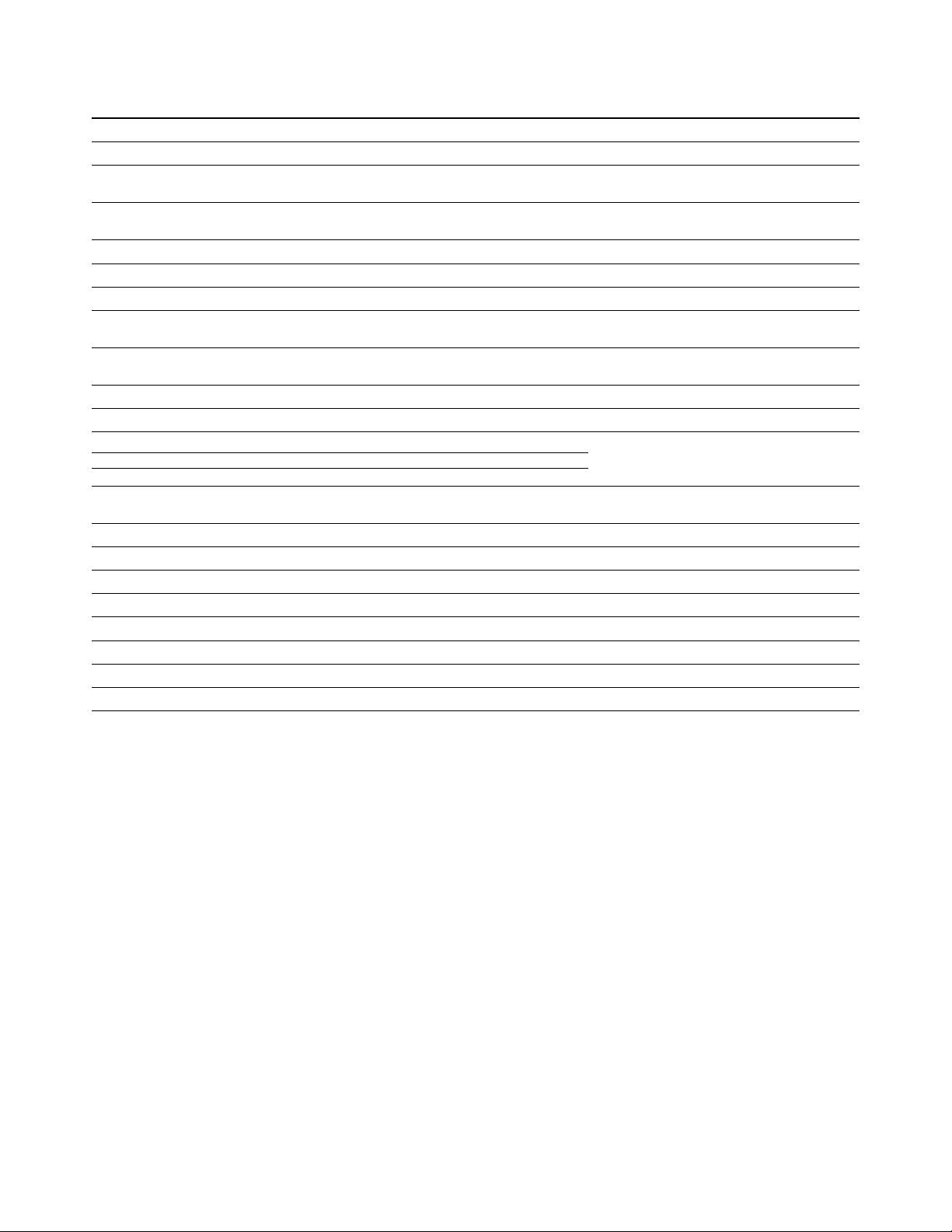

TABLE 2

Commonly used domain generalization datasets.

| Benchmark | # samples | # domains | Task | Description |
|---|---|---|---|---|
| Rotated MNIST [53] | 70,000 | 6 | Handwritten digit recognition | Rotation degree ∈ {0, 15, 30, 45, 60, 75} |
| Digits-DG [31] | 24,000 | 4 | Handwritten digit recognition | Combination of MNIST [54], MNIST-M [13], SVHN [55] and SYN [13] |
| VLCS [56] | 10,729 | 4 | Object recognition | Combination of Caltech101 [39], LabelMe [40], PASCAL [57], and SUN09 [58] |
| Office-31 [10] | 4,652 | 3 | Object recognition | Domain ∈ {amazon, webcam, dslr} |
| OfficeHome [59] | 15,588 | 4 | Object recognition | Domain ∈ {art, clipart, product, real} |
| PACS [33] | 9,991 | 4 | Object recognition | Domain ∈ {photo, art, cartoon, sketch} |
| DomainNet [60] | 586,575 | 6 | Object recognition | Domain ∈ {clipart, infograph, painting, quickdraw, real, sketch} |
| miniDomainNet [61] | 140,006 | 4 | Object recognition | A smaller and less noisy version of DomainNet; domain ∈ {clipart, painting, real, sketch} |
| ImageNet-Sketch [51] | 50,000 | 2 | Object recognition | Domain shift between real and sketch images |
| VisDA-17 [62] | 280,157 | 3 | Object recognition | Synthetic-to-real generalization |
| CIFAR-10-C [8] | 60,000 | - | Object recognition | The test data are damaged by 15 corruptions (each with 5 intensity levels) drawn from 4 categories (noise, blur, weather, and digital) |
| CIFAR-100-C [8] | 60,000 | - | Object recognition | The test data are damaged by 15 corruptions (each with 5 intensity levels) drawn from 4 categories (noise, blur, weather, and digital) |
| ImageNet-C [8] | ≈1.3M | - | Object recognition | The test data are damaged by 15 corruptions (each with 5 intensity levels) drawn from 4 categories (noise, blur, weather, and digital) |
| Visual Decathlon [63] | 1,659,142 | 10 | Object/action/handwritten digit recognition | Combination of 10 datasets |
| IXMAS [64] | 1,650 | 5 | Action recognition | 5 camera views; 10 subjects; 5 actions (see [27]) |
| UCF-HMDB [65], [66] | 3,809 | 2 | Action recognition | 12 overlapping actions (see [67]) |
| SYNTHIA [68] | 2,700 | 15 | Semantic segmentation | 4 locations; 5 weather conditions (see [43]) |
| GTA5-Cityscapes [69], [70] | 29,966 | 2 | Semantic segmentation | Synthetic-to-real generalization |
| TerraInc [71] | 24,788 | 4 | Animal classification | Captured at different geographical locations |
| Market-Duke [72], [73] | 69,079 | 2 | Person re-identification | Cross-dataset re-ID; heterogeneous DG |
| Face [36] | >5M | 9 | Face recognition | Combination of 9 face datasets |
| COMI [74], [75], [76], [77] | ≈8,500 | 4 | Face anti-spoofing | Combination of 4 face anti-spoofing datasets |

style is close to the target image style (both sharing the same visual cues), the performance would be higher (e.g., photo→painting, both relying on colors and textures); otherwise, if the source image style is drastically different from the target image style, the performance would be poor (e.g., photo→quickdraw, with the latter strongly relying on shape information while requiring no color information at all). This observation also applies to unsupervised domain adaptation. For instance, the performance on the quickdraw domain of DomainNet is usually the lowest among all target domains [61], [81], [82].

3) A couple of recent DG studies [83], [84] have investigated, from a transfer learning perspective, how to preserve the knowledge learned via large-scale pre-training when training on abundant labeled synthetic data for synthetic-to-real applications. The experiments were carried out on VisDA-17 [62]. This is an important yet under-studied topic in DG: when given only synthetic data, albeit in sufficient quantity, how can we avoid over-fitting to synthetic images by leveraging the initialization weights learned on real images? Such a setting is particularly useful for problems where manual labels are difficult or expensive to obtain.

4) Synthetic image corruptions like Gaussian noise and motion blur have also been used to simulate domain shift by Hendrycks and Dietterich [8]. In their proposed datasets, i.e., CIFAR-10-C, CIFAR-100-C and ImageNet-C, a model is learned using the original images but tested on the corrupted images. This research is largely motivated by adversarial attacks [85], and aims to evaluate model robustness under common image perturbations for safety applications.
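The evaluation protocol described above (train on clean images, test on corrupted copies at several intensity levels) can be illustrated with a minimal sketch. This is not the code used to build the benchmarks in [8]: the noise levels in `SIGMAS`, the helper names `gaussian_noise` and `accuracy_per_severity`, and the toy classifier are all hypothetical, and only one of the 15 corruption types (Gaussian noise) is shown. Images are assumed to be flat lists of pixel values in [0, 1].

```python
import random

# Hypothetical noise standard deviations for severity levels 1-5.
# These are illustrative and are NOT the values used in [8].
SIGMAS = (0.04, 0.08, 0.12, 0.16, 0.20)


def gaussian_noise(image, severity, rng):
    """Return a noisy copy of `image`, a flat list of pixel values in [0, 1]."""
    if not 1 <= severity <= len(SIGMAS):
        raise ValueError("severity must be between 1 and %d" % len(SIGMAS))
    sigma = SIGMAS[severity - 1]
    # Add zero-mean noise to each pixel, then clip back to the valid range.
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in image]


def accuracy_per_severity(predict, images, labels, seed=0):
    """Evaluate `predict` on corrupted copies of clean test images.

    `predict` maps one image to a label and is assumed to have been
    trained on clean images only.
    """
    rng = random.Random(seed)
    results = {}
    for severity in range(1, len(SIGMAS) + 1):
        correct = 0
        for image, label in zip(images, labels):
            correct += predict(gaussian_noise(image, severity, rng)) == label
        results[severity] = correct / len(images)
    return results


# Toy usage: a stand-in "classifier" that thresholds mean brightness.
def toy_predict(image):
    return int(sum(image) / len(image) > 0.5)


toy_images = [[0.45] * 64, [0.55] * 64, [0.1] * 64, [0.9] * 64]
toy_labels = [0, 1, 0, 1]
report = accuracy_per_severity(toy_predict, toy_images, toy_labels)
```

Reporting accuracy separately for each severity level, rather than as a single average, makes it easier to see how quickly a model degrades as the simulated shift grows.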

5) Lastly, a hybrid dataset initially proposed for multi-domain/task learning, i.e., Visual Decathlon [63], has also been employed for evaluating heterogeneous DG [34], [37]. However, due to both the changes in label space and the use of target data for training SVM classifiers, this setup overlaps with transfer learning [86].

Action Recognition. Learning generalizable models is critical for action recognition. This is because the test data typically contain actions performed by new subjects in new environments. IXMAS [64], which contains action videos collected from five different views, has been widely used as a cross-view action recognition benchmark [27], [37]. The common practice is to use four views for training and the remaining view for testing. In addition to view changes, different subjects and environments might also cause failure. Intuitively, different persons can perform the same action in