Text Generation from Knowledge Graphs with Graph Transformers

Rik Koncel-Kedziorski¹, Dhanush Bekal¹, Yi Luan¹, Mirella Lapata², and Hannaneh Hajishirzi¹,³

¹University of Washington
{kedzior,dhanush,luanyi,hannaneh}@uw.edu

²University of Edinburgh
mlap@inf.ed.ac.uk

³Allen Institute for Artificial Intelligence

Abstract

Generating texts which express complex ideas spanning multiple sentences requires a structured representation of their content (document plan), but these representations are prohibitively expensive to produce manually. In this work, we address the problem of generating coherent multi-sentence texts from the output of an information extraction system, and in particular a knowledge graph. Graphical knowledge representations are ubiquitous in computing, but pose a significant challenge for text generation techniques due to their non-hierarchical nature, collapsing of long-distance dependencies, and structural variety. We introduce a novel graph transforming encoder which can leverage the relational structure of such knowledge graphs without imposing linearization or hierarchical constraints. Incorporating this encoder into an encoder-decoder setup, we provide an end-to-end trainable system for graph-to-text generation that we apply to the domain of scientific text. Automatic and human evaluations show that our technique produces more informative texts which exhibit better document structure than competitive encoder-decoder methods.¹
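The abstract's central claim is that an encoder can use a knowledge graph's relational structure directly instead of linearizing it. One common way graph transformers do this is to let each node attend only to its graph neighbors. Below is a minimal, dependency-free sketch of a single neighborhood-restricted attention step. It assumes plain scaled dot-product attention with no learned projections, no multiple heads, and no use of relation labels; the function names are hypothetical, and this is not the paper's encoder.

```python
import math
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation label, tail entity)
Vec = List[float]


def neighborhoods(triples: List[Triple]) -> Dict[str, List[str]]:
    """Map each entity to itself plus its graph neighbors (edges treated as undirected)."""
    nbrs: Dict[str, List[str]] = {}
    for head, _relation, tail in triples:
        for a, b in ((head, tail), (tail, head)):
            nbrs.setdefault(a, [a])  # self-loop so a node keeps its own information
            if b not in nbrs[a]:
                nbrs[a].append(b)
    return nbrs


def graph_attention(vecs: Dict[str, Vec], nbrs: Dict[str, List[str]]) -> Dict[str, Vec]:
    """One scaled dot-product attention step in which each node attends only to its neighbors."""
    dim = len(next(iter(vecs.values())))
    out: Dict[str, Vec] = {}
    for node, ns in nbrs.items():
        scores = [sum(x * y for x, y in zip(vecs[node], vecs[n])) / math.sqrt(dim) for n in ns]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        # New representation: softmax-weighted average of the neighbors' vectors only.
        out[node] = [sum(w * vecs[n][i] for w, n in zip(weights, ns)) / total for i in range(dim)]
    return out


if __name__ == "__main__":
    nbrs = neighborhoods([("A", "r", "B"), ("B", "r", "C")])
    print(nbrs)  # {'A': ['A', 'B'], 'B': ['B', 'A', 'C'], 'C': ['C', 'B']}
    vecs = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0]}
    print(graph_attention(vecs, nbrs))
```

Because node A is not adjacent to C, C's vector has no effect on A after one step. Stacking several such steps lets information flow along multi-hop paths (A reaches C through B after two steps) without ever fixing an order over the graph's nodes.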

1 Introduction

Increases in computing power and model capac-

ity have made it possible to generate mostly-

grammatical sentence-length strings of natural

language text. However, generating several sen-

tences related to a topic and which display over-

all coherence and discourse-relatedness is an open

challenge. The difficulties are compounded in do-

mains of interest such as scientific writing. Here

the variety of possible topics is great (e.g. top-

ics as diverse as driving, writing poetry, and pick-

ing stocks are all referenced in one subfield of

1

Data and code available at https://github.com/

rikdz/GraphWriter

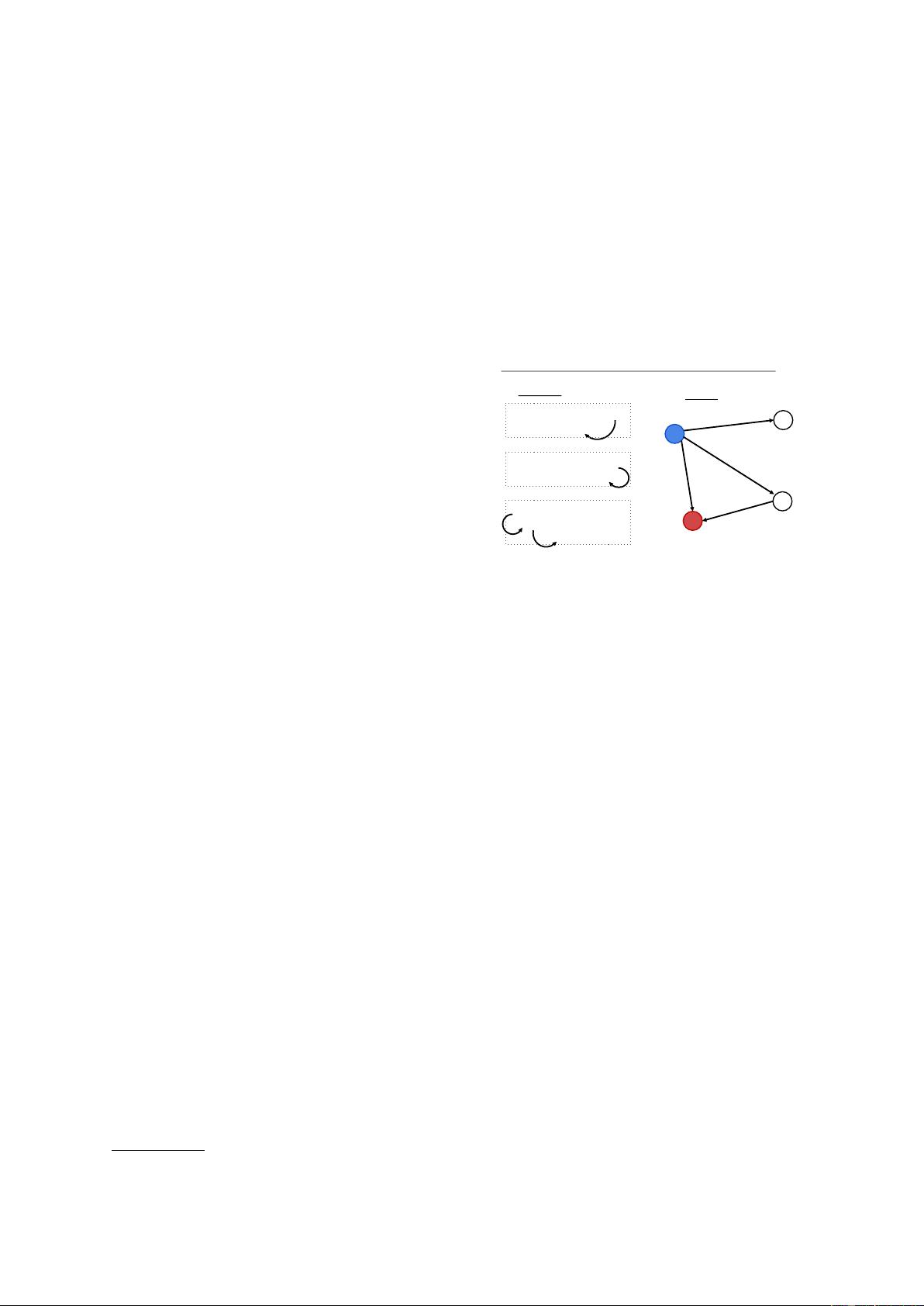

Our Model outperforms

HMM models by 15% on

this data.

used-for

comparison

We present a CRF Model

for Event Detection.

CRF Model

Event Detection

SemEval 2011

Task 11

used

-

for

We evaluate this model

on SemEval 2010 Task 11

evaluate-for

evaluate-for

evaluate

-

for

evaluate

-

for

HMM Models

comparison

Title: Event Detection with Conditional Random Fields

Abstract

Graph

Figure 1: A scientific text showing the annotations of

an information extraction system and the correspond-

ing graphical representation. Coreference annotations

shown in color. Our model learns to generate texts from

automatically extracted knowledge using a graph en-

coder decoder setup.

one scientific discipline). Additionally, there are

strong constraints on document structure, as sci-

entific communication requires carefully ordered

explanations of processes and phenomena.
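Figure 1 pairs the extracted annotations with a graph in which coreferent mentions share a single node; in the example text, "this model" and "Our Model" plausibly refer to the CRF Model. A minimal sketch of that collapsing step follows. It assumes relations arrive as (head mention, label, tail mention) triples and coreference as a hypothetical mention-to-entity mapping; the input format, the edge directions, and the name `build_graph` are illustrative assumptions, not the paper's preprocessing code.

```python
from typing import Dict, List, Set, Tuple

Relation = Tuple[str, str, str]  # (head mention, relation label, tail mention)


def build_graph(relations: List[Relation], coref: Dict[str, str]) -> Tuple[Set[str], Set[Relation]]:
    """Collapse coreferent mentions onto one entity node and keep the labeled edges."""
    def canon(mention: str) -> str:
        # Hypothetical coref map: mentions without a cluster stand for themselves.
        return coref.get(mention, mention)

    nodes: Set[str] = set()
    edges: Set[Relation] = set()
    for head, label, tail in relations:
        h, t = canon(head), canon(tail)
        nodes.update((h, t))
        edges.add((h, label, t))
    return nodes, edges


if __name__ == "__main__":
    # Illustrative subset of Figure 1; edge directions are assumed.
    relations = [
        ("CRF Model", "used-for", "Event Detection"),
        ("Our Model", "comparison", "HMM models"),
    ]
    coref = {"Our Model": "CRF Model", "this model": "CRF Model"}
    nodes, edges = build_graph(relations, coref)
    print(sorted(nodes))  # ['CRF Model', 'Event Detection', 'HMM models']
    print(sorted(edges))
```

Merging mentions this way is what lets facts stated in different sentences attach to the same node, which is also why the resulting graph, unlike the source text, has no inherent linear order.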

Many researchers have sought to address these issues by working with structured inputs. Data-to-text generation models (Konstas and Lapata, 2013; Lebret et al., 2016; Wiseman et al., 2017; Puduppully et al., 2019) condition text generation on table-structured inputs. Tabular input representations provide more guidance for producing longer texts, but are available only for limited domains because they are assembled at great expense through manual annotation.

The current work explores the possibility of using information extraction (IE) systems to automatically provide context for generating longer texts (Figure 1). Robust IE systems are available, support a large variety of textual domains, and often provide rich annotations of relationships that extend beyond the scope of

arXiv:1904.02342v2 [cs.CL] 18 May 2019