WANG et al.: A COMPARATIVE ANALYSIS OF IMAGE FUSION METHODS 1393

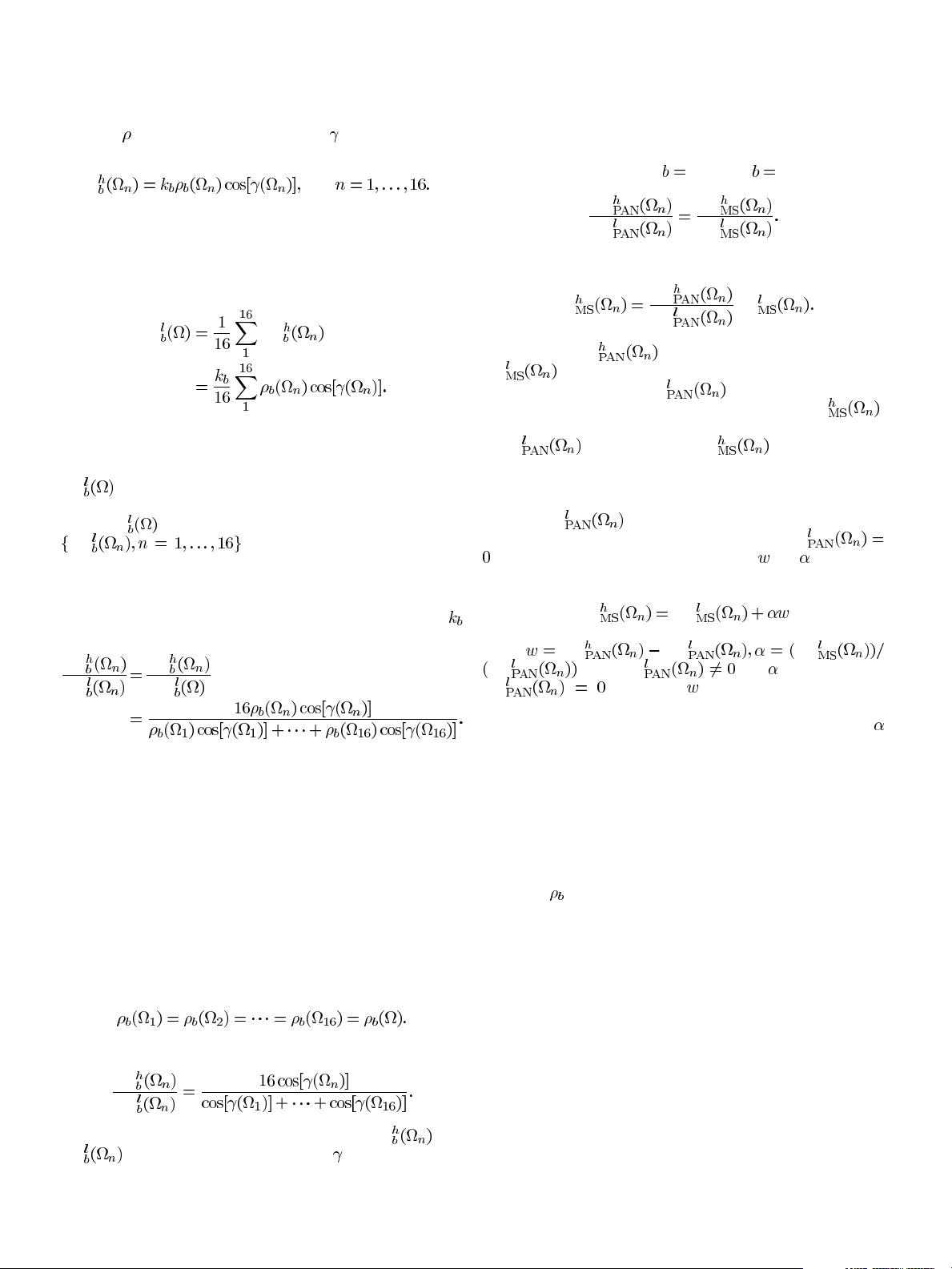

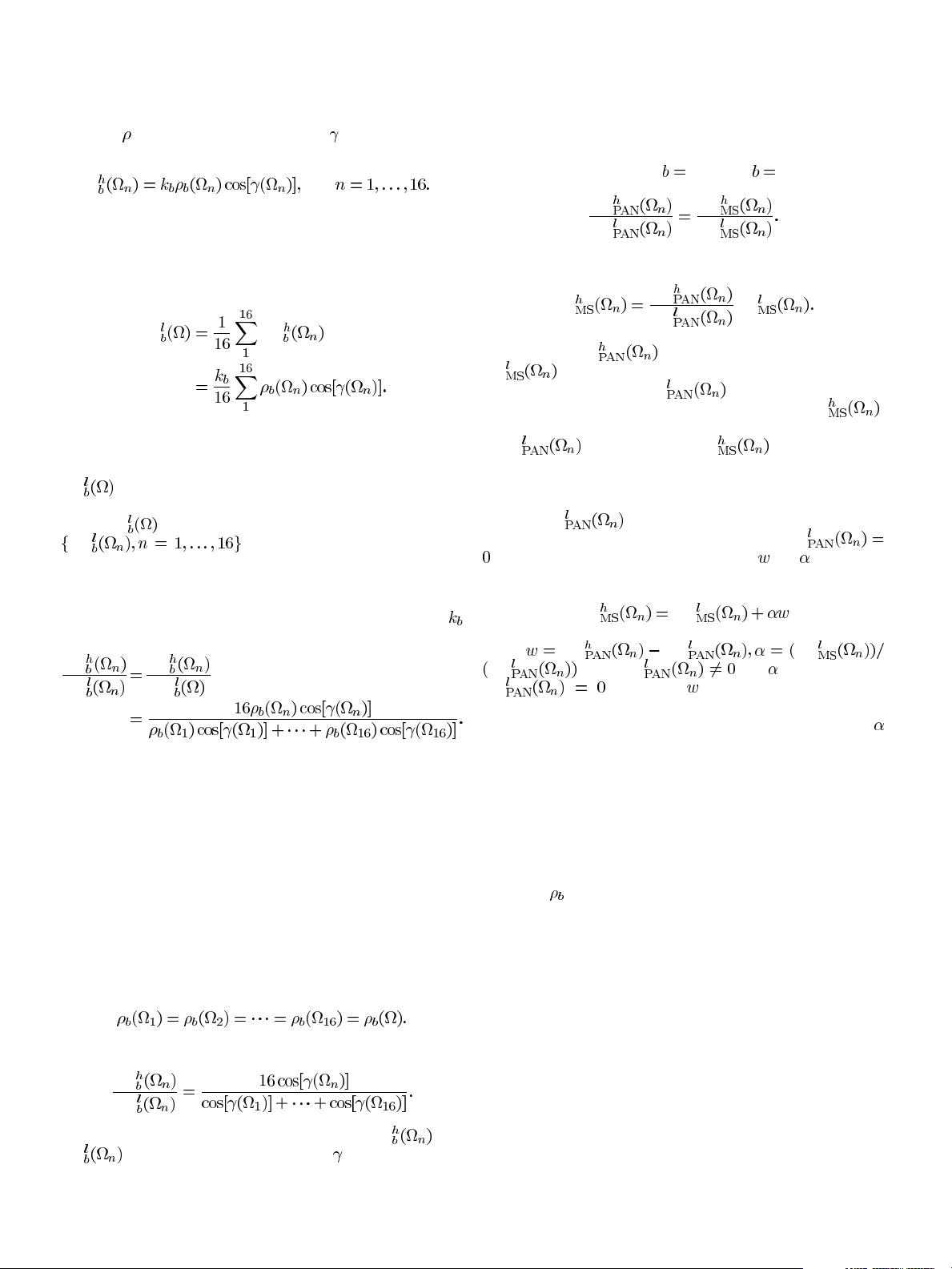

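a high-resolution pixel as a pure unit surface on which the reflectance $\rho$ and the solar incidence angle $\theta$ are uniform. From (1), we have

$$
\mathrm{DN}^{h}_{ij,k} = K_k\,\rho_{ij,k}\cos\theta_{ij} \tag{2}
$$

where $(i,j)$ indexes a high-resolution (1-m) pixel, $k$ denotes the band, and $K_k$ is a band-dependent constant that collects the remaining factors of (1).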

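Each low-resolution pixel value (or radiance) can be treated as a weighted average of the high-resolution pixel values (or radiances) over the corresponding area. Considering only the uniformly weighted case for simplicity, and noting that one 4-m IKONOS multispectral pixel covers 4 × 4 = 16 pixels at 1-m pixel size, we have

$$
\mathrm{DN}^{l}_{IJ,k} = \frac{1}{16}\sum_{(i,j)\in\Omega_{IJ}} \mathrm{DN}^{h}_{ij,k} \tag{3}
$$

where $\Omega_{IJ}$ denotes the set of 16 high-resolution pixels $(i,j)$ covered by the low-resolution pixel $(I,J)$.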
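The uniformly weighted case of (3) is simply a block mean. The Python sketch below is a minimal illustration, not the authors' code; it assumes images stored as lists of rows and the IKONOS 4:1 resolution ratio (a 4 × 4 block of 1-m pixels per 4-m pixel). The function name `block_mean` is hypothetical.

```python
def block_mean(high, factor=4):
    """Uniformly weighted degradation as in (3): each low-resolution pixel
    (I, J) is the mean of the factor x factor high-resolution pixels it covers.

    `high` is a list of rows; its height and width must be multiples of `factor`.
    """
    rows, cols = len(high), len(high[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of factor")
    low = []
    for I in range(rows // factor):
        low_row = []
        for J in range(cols // factor):
            # Gather the child pixels (i, j) that belong to mother pixel (I, J).
            block = [high[I * factor + a][J * factor + b]
                     for a in range(factor) for b in range(factor)]
            low_row.append(sum(block) / len(block))
        low.append(low_row)
    return low


# A 4 x 4 patch of made-up 1-m values 0..15 collapses to a single 4-m pixel.
patch = [[4 * r + c for c in range(4)] for r in range(4)]
print(block_mean(patch))  # [[7.5]]
```

A weighted average (for example, one following the sensor's point spread function) would replace the plain mean; the uniform case is kept here only because (3) uses it.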

However, pixel-level image fusion requires the low-resolution and the high-resolution images to be sampled at the same pixel size. The low-resolution mother pixel $\mathrm{DN}^{l}_{IJ,k}$ is therefore replaced with 16 child pixels at 1-m pixel size whose values, for simplicity, are taken to be equal to $\mathrm{DN}^{l}_{IJ,k}$ and are denoted $\mathrm{DN}^{l}_{ij,k}$. This establishes a one-to-one relationship between the pixels of the high-resolution image and the pixels of the low-resolution image at 1-m pixel size (see Fig. 1). Dividing the corresponding pixel values of the same-band high-resolution and low-resolution images cancels the band-dependent constant, and we obtain

$$
\frac{\mathrm{DN}^{h}_{ij,k}}{\mathrm{DN}^{l}_{ij,k}} = \frac{\rho_{ij,k}\cos\theta_{ij}}{\frac{1}{16}\sum_{(m,n)\in\Omega_{IJ}} \rho_{mn,k}\cos\theta_{mn}} \tag{4}
$$

where $(I,J)$ is the mother pixel that contains the child pixel $(i,j)$.
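To see that the band-dependent constant cancels in (4), the sketch below simulates one mother pixel under a forward model of the form of (2), with made-up reflectance and cosine values, replicates the mother pixel into its 16 child pixels, and compares the ratio maps obtained with two very different band constants. All function names and numbers are hypothetical and chosen only for illustration.

```python
import math


def replicate(low, factor=4):
    """Copy each mother pixel (I, J) into its factor x factor child pixels,
    so every child (i, j) carries the mother value DN^l_{IJ,k}."""
    return [[value for value in row for _ in range(factor)]
            for row in low for _ in range(factor)]


def simulate_band(K, rho, cos_theta):
    """Hypothetical forward model of the form (2): DN = K * rho * cos(theta)."""
    return [[K * r * c for r, c in zip(r_row, c_row)]
            for r_row, c_row in zip(rho, cos_theta)]


def pixel_ratio(high, low_up):
    """Left-hand side of (4): DN^h_{ij,k} / DN^l_{ij,k}, pixel by pixel."""
    return [[h / l for h, l in zip(h_row, l_row)]
            for h_row, l_row in zip(high, low_up)]


# One mother pixel (4 x 4 children) with made-up reflectance and illumination.
rho = [[0.20 + 0.01 * (4 * r + c) for c in range(4)] for r in range(4)]
cos_theta = [[math.cos(math.radians(20 + 2 * (r + c))) for c in range(4)]
             for r in range(4)]

ratio_maps = []
for K in (1.0, 250.0):  # two very different (hypothetical) band constants
    high = simulate_band(K, rho, cos_theta)
    mother = sum(map(sum, high)) / 16  # uniformly weighted mean, as in (3)
    ratio_maps.append(pixel_ratio(high, replicate([[mother]])))

# The two ratio maps agree to rounding error: K has cancelled.
max_diff = max(abs(a - b)
               for row_a, row_b in zip(*ratio_maps)
               for a, b in zip(row_a, row_b))
print(max_diff < 1e-12)  # True
```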

Within a low-resolution image, pixels can be classified as pure pixels or mixed pixels. Pure pixels correspond to interior pixels (homogeneous regions) and originate from high-resolution pixels of the same type. The spatial difference between the high-resolution pixels and the corresponding pure low-resolution pixel is caused only by variation of the cosine factor. Mixed pixels correspond to boundary pixels (heterogeneous regions) and originate from high-resolution pixels of different types. The spatial difference between the high-resolution pixels and the corresponding mixed low-resolution pixel is caused by variations in both reflectance and the cosine factor.

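For pure low-resolution pixels, we have

$$
\rho_{mn,k} = \rho_{ij,k}, \qquad \forall\,(m,n)\in\Omega_{IJ} \tag{5}
$$

Equation (4) thus becomes

$$
\frac{\mathrm{DN}^{h}_{ij,k}}{\mathrm{DN}^{l}_{ij,k}} = \frac{\cos\theta_{ij}}{\frac{1}{16}\sum_{(m,n)\in\Omega_{IJ}} \cos\theta_{mn}} \tag{6}
$$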

Equation (6) shows that the ratio between $\mathrm{DN}^{h}_{ij,k}$ and $\mathrm{DN}^{l}_{ij,k}$ is controlled by the cosine factor alone and is independent of the sensor band $k$. This band independence, however, holds only approximately: perfect independence is never achieved, because real image formation is far more complex than the simplified image formation model.
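Equation (6) can be checked numerically in the same spirit. In the sketch below (hypothetical names and made-up values), two bands with different constants and different but spatially uniform reflectances over a pure mother pixel produce identical ratio maps, both equal to the cosine term of (6). For contrast, a mixed mother pixel whose two cover types swap reflectance between the bands does not.

```python
import math


def ratio_map(K, rho, cos_theta):
    """DN^h / DN^l over one mother pixel under the model of (2)-(4).
    `rho` and `cos_theta` are flat lists over the 16 child pixels."""
    high = [K * r * c for r, c in zip(rho, cos_theta)]
    mother = sum(high) / len(high)  # uniformly weighted mean, as in (3)
    return [h / mother for h in high]


def close(a, b, tol=1e-12):
    """True if two equal-length lists agree element-wise within `tol`."""
    return all(abs(x - y) < tol for x, y in zip(a, b))


# Made-up, spatially varying illumination shared by all bands.
cos_theta = [math.cos(math.radians(15 + 3 * n)) for n in range(16)]
cosine_term = [c / (sum(cos_theta) / 16) for c in cos_theta]  # right side of (6)

# Pure pixel: reflectance is uniform over the mother pixel, as in (5).
pure_pan = ratio_map(K=180.0, rho=[0.30] * 16, cos_theta=cos_theta)
pure_ms = ratio_map(K=95.0, rho=[0.12] * 16, cos_theta=cos_theta)

# Mixed pixel: two cover types whose reflectances swap between the bands.
mixed_pan = ratio_map(K=180.0, rho=[0.10] * 8 + [0.40] * 8, cos_theta=cos_theta)
mixed_ms = ratio_map(K=95.0, rho=[0.40] * 8 + [0.10] * 8, cos_theta=cos_theta)

print(close(pure_pan, pure_ms), close(pure_pan, cosine_term))  # True True
print(close(mixed_pan, mixed_ms))                              # False
```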

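If PAN denotes a panchromatic band and MS a multispectral band, then, because (6) gives both bands the same band-independent cosine ratio, we have

$$
\frac{\mathrm{DN}^{h}_{ij,\mathrm{PAN}}}{\mathrm{DN}^{l}_{ij,\mathrm{PAN}}} = \frac{\mathrm{DN}^{h}_{ij,\mathrm{MS}}}{\mathrm{DN}^{l}_{ij,\mathrm{MS}}} \tag{7}
$$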

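Rearranging (7) yields the equivalent expression

$$
\mathrm{DN}^{h}_{ij,\mathrm{MS}} = \mathrm{DN}^{h}_{ij,\mathrm{PAN}}\,\frac{\mathrm{DN}^{l}_{ij,\mathrm{MS}}}{\mathrm{DN}^{l}_{ij,\mathrm{PAN}}} \tag{8}
$$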

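In practice, $\mathrm{DN}^{h}_{ij,\mathrm{PAN}}$, the pixel values of the HRPI, and $\mathrm{DN}^{l}_{ij,\mathrm{MS}}$, the corresponding pixel values of the upsampled LRMI, are known, whereas $\mathrm{DN}^{l}_{ij,\mathrm{PAN}}$, the pixel values of the low-resolution panchromatic image (LRPI), and $\mathrm{DN}^{h}_{ij,\mathrm{MS}}$, the pixel values of the HRMI, are unknown. However, as long as $\mathrm{DN}^{l}_{ij,\mathrm{PAN}}$ can be approximated, $\mathrm{DN}^{h}_{ij,\mathrm{MS}}$ can be solved for from (8).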
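A minimal sketch of solving (8) follows. Because the text leaves the choice of LRPI open, the hypothetical function `approximate_lrpi` assumes one simple option: averaging the HRPI over each 4 × 4 block, as in (3), and copying the mean back to the child pixels. This is an illustrative assumption, not the approximation used by the GIF method or by the cited papers. The hypothetical `ratio_fusion` then applies (8) pixel by pixel.

```python
def approximate_lrpi(hrpi, factor=4):
    """ASSUMED LRPI approximation (illustrative only): average the HRPI over
    each factor x factor block, as in (3), and copy the mean to every child
    pixel so that the LRPI shares the 1-m grid of the HRPI."""
    rows, cols = len(hrpi), len(hrpi[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of factor")
    lrpi = [[0.0] * cols for _ in range(rows)]
    for I in range(0, rows, factor):
        for J in range(0, cols, factor):
            children = [(i, j) for i in range(I, I + factor)
                        for j in range(J, J + factor)]
            mean = sum(hrpi[i][j] for i, j in children) / len(children)
            for i, j in children:
                lrpi[i][j] = mean
    return lrpi


def ratio_fusion(hrpi, lrpi, lrmi):
    """Equation (8), pixel by pixel: DN^h_MS = DN^h_PAN * DN^l_MS / DN^l_PAN.
    Raises ZeroDivisionError wherever the LRPI is zero."""
    return [[pan_h * ms / pan_l for pan_h, pan_l, ms in zip(h_row, l_row, m_row)]
            for h_row, l_row, m_row in zip(hrpi, lrpi, lrmi)]


# One 4-m mother pixel: made-up 1-m panchromatic detail and an upsampled MS band.
hrpi = [[120, 80, 100, 100] for _ in range(4)]
lrmi = [[50.0] * 4 for _ in range(4)]  # LRMI replicated onto the 1-m grid
fused = ratio_fusion(hrpi, approximate_lrpi(hrpi), lrmi)
print(fused[0])  # [60.0, 40.0, 50.0, 50.0]
```

With this particular LRPI and an LRMI upsampled by replication, the mean of the fused band over each mother pixel equals the original low-resolution value, because the HRPI-to-LRPI ratio averages to 1 within every block.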

The mathematical model itself is the same as that of the HPM method and has also appeared in many other papers, such as [19], [20], and [33]; however, those papers use different methods to compute $\mathrm{DN}^{l}_{ij,\mathrm{PAN}}$.

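Since (8) becomes ill-posed when $\mathrm{DN}^{l}_{ij,\mathrm{PAN}} = 0$, we introduce two intermediate parameters, $\delta$ and $\alpha$, into (8), which gives

$$
\mathrm{DN}^{h}_{ij,\mathrm{MS}} = \mathrm{DN}^{l}_{ij,\mathrm{MS}} + \alpha_{ij}\,\delta_{ij} \tag{9}
$$

where $\delta_{ij} = \mathrm{DN}^{h}_{ij,\mathrm{PAN}} - \mathrm{DN}^{l}_{ij,\mathrm{PAN}}$, and $\alpha_{ij} = \mathrm{DN}^{l}_{ij,\mathrm{MS}} / \mathrm{DN}^{l}_{ij,\mathrm{PAN}}$ when $\mathrm{DN}^{l}_{ij,\mathrm{PAN}} \neq 0$ and is set to 1 when $\mathrm{DN}^{l}_{ij,\mathrm{PAN}} = 0$. Substituting these definitions into (9) recovers (8) wherever $\mathrm{DN}^{l}_{ij,\mathrm{PAN}} \neq 0$. Parameter $\delta$ is the signal difference between the HRPI and the LRPI; it represents the detail information between the high- and low-resolution levels. Parameter $\alpha$ is the modulation coefficient for the detail information; it determines how the detail information of the HRPI is injected into the LRMIs.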
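The sketch below implements (9) directly, computing `delta` and `alpha` as defined above, including the rule that the modulation coefficient is 1 where the LRPI is zero. For nonzero LRPI values it returns the same result as (8); where the LRPI is zero it simply adds the detail to the LRMI. The function name `gif_fuse` is hypothetical, and the input values are made up.

```python
def gif_fuse(hrpi, lrpi, lrmi):
    """Equation (9), pixel by pixel: DN^h_MS = DN^l_MS + alpha * delta."""
    fused = []
    for h_row, l_row, m_row in zip(hrpi, lrpi, lrmi):
        out_row = []
        for pan_h, pan_l, ms in zip(h_row, l_row, m_row):
            delta = pan_h - pan_l  # detail information between the two levels
            # Modulation coefficient; set to 1 where the LRPI is zero.
            alpha = ms / pan_l if pan_l != 0 else 1.0
            out_row.append(ms + alpha * delta)
        fused.append(out_row)
    return fused


# The second pixel has a zero LRPI, where (8) would divide by zero.
print(gif_fuse([[120, 30]], [[100, 0]], [[50, 20]]))  # [[60.0, 50.0]]
```

For the first pixel, $\delta = 20$ and $\alpha = 0.5$, giving 60, the same value as $120 \times 50 / 100$ from (8).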

For mixed low-resolution pixels, (7) does not always hold. One solution in this case is to subdivide these pixels into several homogeneous sections according to the information from the high-resolution pixels; (7) then holds within each homogeneous section. However, it is hard to unmix the spectral properties of these pixels when the HRPI has only one spectral band. For simplicity, we assume that (7) holds for these pixels as well, so that spatial information caused by variations in reflectance is restored in the same way as that resulting from cosine variation.

Equation (9) is regarded as the mathematical model of the GIF method. It shows that the pixel values of the HRMIs are determined by the corresponding pixel values of the LRPI or, equivalently, by the corresponding detail information of the panchromatic band, together with the modulation coefficients. The performance of the fusion process is therefore determined by how the LRPI is computed and how the modulation coefficients are set.

It is also worth noting that, although the GIF method is derived for the IKONOS sensor, it is equally applicable to other sensors whose calibration offset in (1) is zero. For sensors with a nonzero offset, the DN must be calibrated to radiance or, at least, the offset must be removed from the DN for the GIF method to hold.
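Why a nonzero offset breaks the model can be illustrated with a short sketch. It assumes, hypothetically, that DN = (band constant × reflectance × cos θ) + offset for a panchromatic and a multispectral band over one pure mother pixel, with made-up constants and a made-up offset; the exact form of (1) for a given sensor should be taken from its calibration documentation. The ratios of (7) disagree between the bands until the offset is subtracted.

```python
def pixel_ratios(dn):
    """DN^h / DN^l for the 16 child pixels of one mother pixel (flat list)."""
    mother = sum(dn) / len(dn)  # uniformly weighted mean, as in (3)
    return [v / mother for v in dn]


def close(a, b, tol=1e-9):
    """True if two equal-length lists agree element-wise within `tol`."""
    return all(abs(x - y) < tol for x, y in zip(a, b))


# Hypothetical pure mother pixel: shared cosine factors, uniform reflectance.
cos_theta = [0.80, 0.90, 1.00, 0.70] * 4
OFFSET = 10.0  # hypothetical nonzero sensor offset, in DN
# Assumed model: band constant times reflectance folded into one made-up number.
pan = [200.0 * c + OFFSET for c in cos_theta]
ms = [50.0 * c + OFFSET for c in cos_theta]

print(close(pixel_ratios(pan), pixel_ratios(ms)))  # False: (7) is violated

pan_fixed = [v - OFFSET for v in pan]
ms_fixed = [v - OFFSET for v in ms]
print(close(pixel_ratios(pan_fixed), pixel_ratios(ms_fixed)))  # True
```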