[Figure 2 diagram labels: Shared Feature Extraction (RGB, NIR, TIR inputs; Linear Projection; ViT-B/16 Transformer Encoder); Spatial-Frequency Token Selection (Spatial-based Token Selection, Frequency-based Token Selection, Union; Background Consistency Constraint); Hierarchical Masked Aggregation (Masked Encoder; Concatenation, marked "C"; Object-Centric Feature Refinement); BN Neck, Triplet Loss, CE Loss. Legend: [cls] Token, Patch Token, Reserved Token, Dropped Token.]

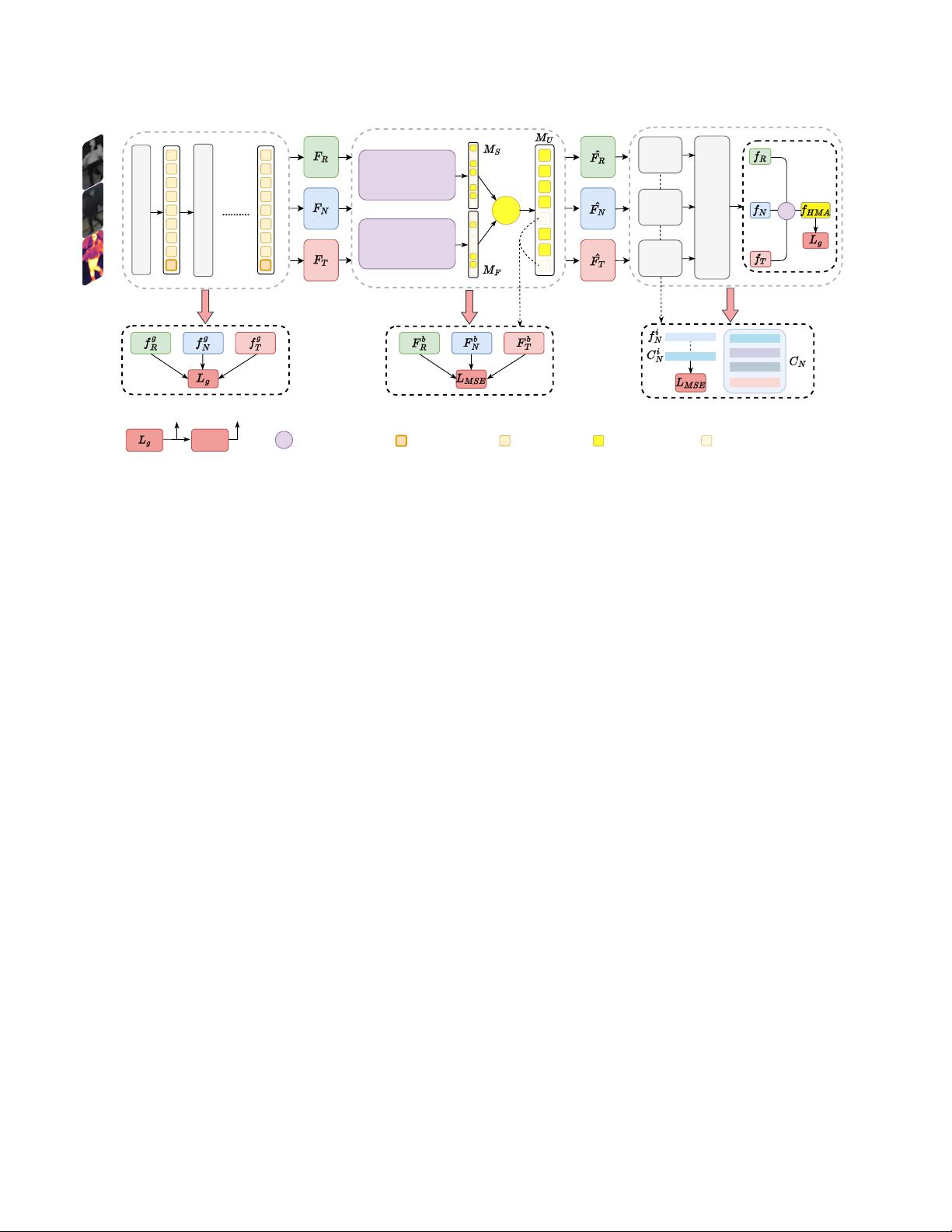

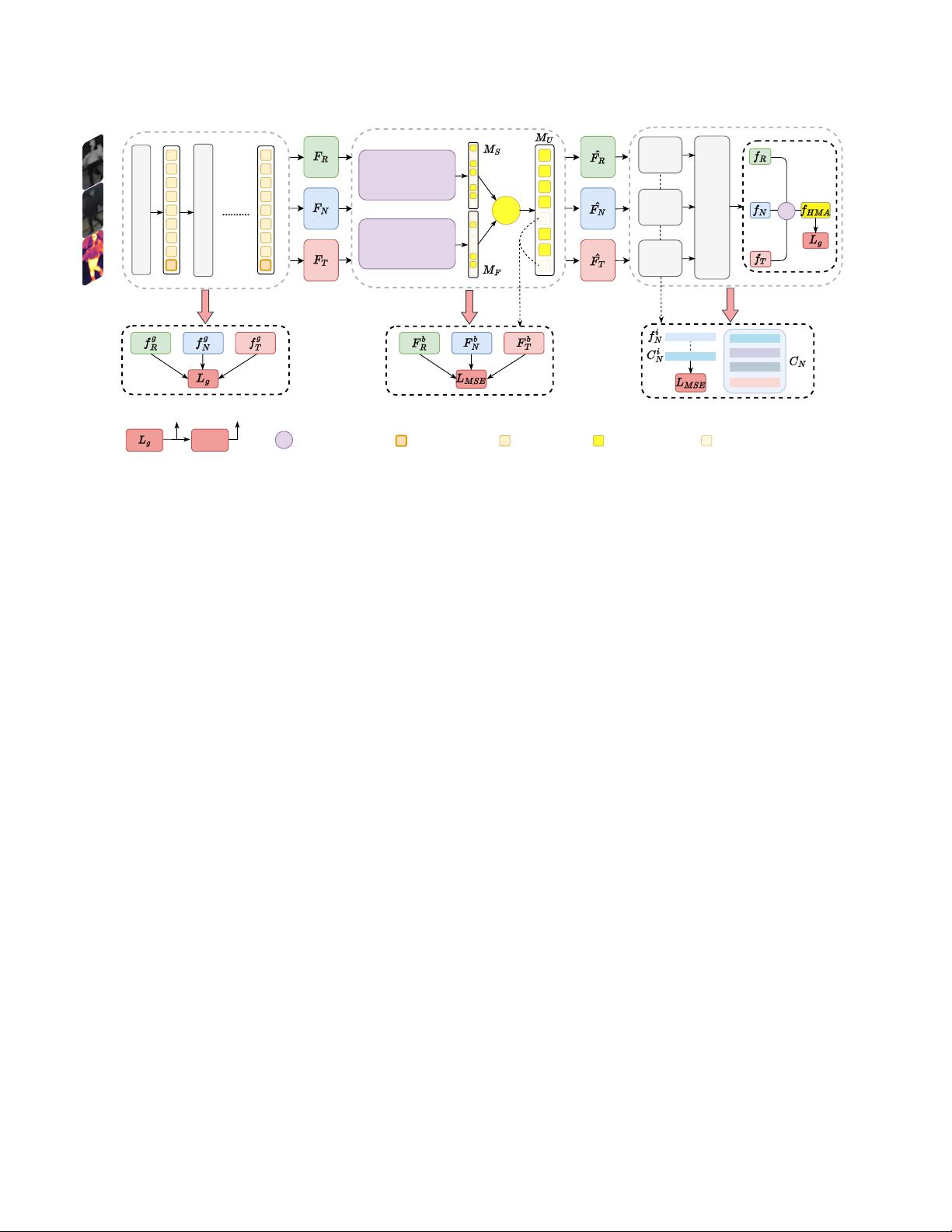

Figure 2. An illustration of our proposed EDITOR. First, features from different input modalities are extracted by using the shared ViT-B/16 backbone. Then, a Spatial-Frequency Token Selection (SFTS) is utilized to select diverse tokens with object-centric features. Meanwhile, the Background Consistency Constraint (BCC) loss is designed for stabilizing the selection process. After that, a Hierarchical Masked Aggregation (HMA) is grafted to aggregate the selected tokens. Finally, combined with the Object-Centric Feature Refinement (OCFR) loss, the whole framework can obtain more discriminative features for multi-modal object ReID.

ferent modal features with a heterogeneous score coherence loss. Then, Zheng et al. [54] reduce the discrepancies from sample and modality aspects. From the perspective of generating modalities, Guo et al. [9] propose GAFNet to fuse the multiple data sources. He et al. [12] propose GPFNet to adaptively fuse multi-modal features with graph learning. With Transformers, Pan et al. [29] introduce PHT, employing a feature hybrid mechanism to balance modal-specific and modal-shared information. Jennifer et al. [4] present UniCat by analyzing the issue of modality laziness. Very recently, Wang et al. [43] propose a novel token permutation mechanism for robust multi-modal object ReID. While these methods advance multi-modal object ReID, they commonly overlook the influence of irrelevant backgrounds on the aggregation of features across different modalities. In contrast, our proposed EDITOR explicitly addresses this influence: it identifies critical regions within each modality while fostering inter-modal collaboration. Furthermore, the incorporation of the BCC and OCFR losses, along with the SFTS and HMA modules, distinguishes our work as a promising avenue for improved performance in complex scenarios.

2.3. Token Selection in Transformer

With the increasing adoption of Transformers [16, 24, 31], token selection has gained significant attention [1, 8, 10, 11, 23, 28, 33, 46], due to its ability to focus on essential objects and reduce computational overhead. In vision tasks, such as ReID, where fine-grained features are crucial, the extraction of key regions becomes particularly important. For example, TransFG [11] utilizes the multi-head self-attention of ViT to select representative local patches, achieving outstanding performance in fine-grained classification tasks. DynamicViT [33] employs gating mechanisms to dynamically accelerate both training and inference. TVTR [46] extends token selection to cross-modal ReID, aligning features by selecting the top-K salient tokens. However, our method differs from them in the following ways: (1) Our selection is instance-level, where for different input images, the model dynamically selects different numbers of object-centric tokens. Unlike previous methods, which specify the fixed top-K local regions for feature aggregation, our approach allows the model to adapt more flexibly to various inputs. (2) Previous methods do not consider the impact of distracting backgrounds during the early selection process. With our proposed losses, we effectively stabilize the selection process, achieving dynamic distribution alignments. Thus, we provide a more flexible framework, ultimately enhancing ReID performance in complex scenarios.
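To make the contrast in point (1) concrete, the following minimal sketch compares fixed top-K selection with a simple instance-level rule. It is an illustration only, not the SFTS module: the function names, the example scores, and the per-image mean threshold are all assumptions introduced here.

```python
from typing import List


def select_top_k(scores: List[float], k: int) -> List[int]:
    """Fixed top-K selection: always keeps exactly k token indices."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


def select_instance_level(scores: List[float]) -> List[int]:
    """Instance-level selection: keep tokens scoring above the image's own mean.

    The mean threshold is an illustrative assumption, not the paper's rule.
    """
    threshold = sum(scores) / len(scores)
    return [i for i, s in enumerate(scores) if s > threshold]


# Hypothetical per-patch saliency scores for two images (8 patches each).
small_object = [0.9, 0.8, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]
large_object = [0.9, 0.8, 0.9, 0.7, 0.8, 0.1, 0.1, 0.1]

for name, scores in [("small", small_object), ("large", large_object)]:
    print(name, select_top_k(scores, k=4), select_instance_level(scores))
```

With these hypothetical scores, top-K with K = 4 keeps four tokens for both images, so it pulls in two low-scoring background patches for the small object and drops one salient patch for the large one. The thresholded rule keeps two tokens for the first image and five for the second, which is the kind of per-input flexibility the text describes.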

3. Proposed Method

As illustrated in Fig. 2, our proposed EDITOR comprises three key components: Shared Feature Extraction, Spatial-Frequency Token Selection (SFTS) and Hierarchical Masked Aggregation (HMA). In addition, we incorporate the Background Consistency Constraint (BCC) and