DeepFM: A Factorization-Machine based Neural Network for CTR Prediction

Huifeng Guo∗¹, Ruiming Tang², Yunming Ye†¹, Zhenguo Li², Xiuqiang He²

¹ Shenzhen Graduate School, Harbin Institute of Technology, China

² Noah’s Ark Research Lab, Huawei, China

¹ huifengguo@yeah.net, yeyunming@hit.edu.cn

² {tangruiming, li.zhenguo, hexiuqiang}@huawei.com

Abstract

Learning sophisticated feature interactions behind user behaviors is critical in maximizing CTR for recommender systems. Despite great progress, existing methods seem to have a strong bias towards low- or high-order interactions, or require expert feature engineering. In this paper, we show that it is possible to derive an end-to-end learning model that emphasizes both low- and high-order feature interactions. The proposed model, DeepFM, combines the power of factorization machines for recommendation and deep learning for feature learning in a new neural network architecture. Compared to the latest Wide & Deep model from Google, DeepFM has a shared input to its “wide” and “deep” parts, with no need for feature engineering beyond raw features. Comprehensive experiments are conducted to demonstrate the effectiveness and efficiency of DeepFM over the existing models for CTR prediction, on both benchmark data and commercial data.

1 Introduction

The prediction of click-through rate (CTR) is critical in recommender systems, where the task is to estimate the probability that a user will click on a recommended item. In many recommender systems the goal is to maximize the number of clicks, so the items returned to a user can be ranked by estimated CTR. In other application scenarios, such as online advertising, it is also important to improve revenue, so the ranking strategy can be adjusted to CTR×bid across all candidates, where “bid” is the benefit the system receives if the item is clicked by a user. In either case, the key is estimating CTR correctly.
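The two ranking strategies described above can be illustrated with a minimal sketch. The `Candidate` class, the item names, and the CTR and bid values are hypothetical, and the CTR estimates are assumed to come from some already-trained model; the sketch only shows how the ordering changes between ranking by CTR and ranking by CTR×bid.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical candidate item; all values below are made up for illustration."""
    item_id: str
    ctr: float  # estimated click probability, assumed to come from a CTR model
    bid: float  # benefit the system receives if the item is clicked


def rank_by_ctr(candidates):
    """Order candidates by estimated CTR (goal: maximize the number of clicks)."""
    return sorted(candidates, key=lambda c: c.ctr, reverse=True)


def rank_by_ctr_times_bid(candidates):
    """Order candidates by CTR x bid (goal: also account for revenue)."""
    return sorted(candidates, key=lambda c: c.ctr * c.bid, reverse=True)


candidates = [
    Candidate("app_a", ctr=0.10, bid=0.50),
    Candidate("app_b", ctr=0.04, bid=2.00),
    Candidate("app_c", ctr=0.07, bid=1.00),
]

ctr_order = [c.item_id for c in rank_by_ctr(candidates)]
revenue_order = [c.item_id for c in rank_by_ctr_times_bid(candidates)]

print(ctr_order)      # ['app_a', 'app_c', 'app_b']
print(revenue_order)  # ['app_b', 'app_c', 'app_a']
```

Both orderings depend on the same estimated CTR values, so errors in the estimate propagate to either ranking.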

It is important for CTR prediction to learn implicit feature

interactions behind user click behaviors. By our study in a

mainstream apps market, we found that people often down-

load apps for food delivery at meal-time, suggesting that the

(order-2) interaction between app category and time-stamp

∗

This work is done when Huifeng Guo worked as intern at

Noah’s Ark Research Lab, Huawei.

†

Corresponding Author.

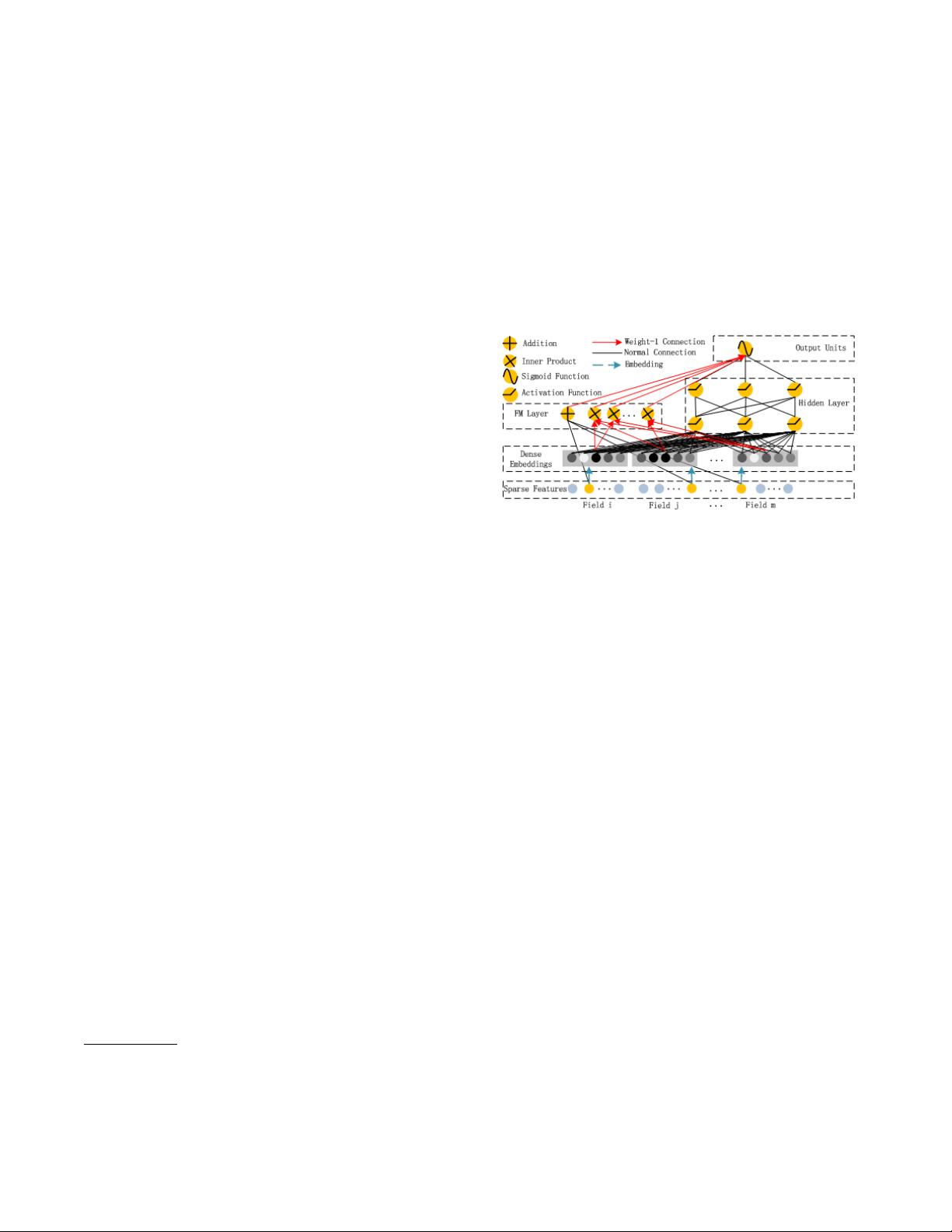

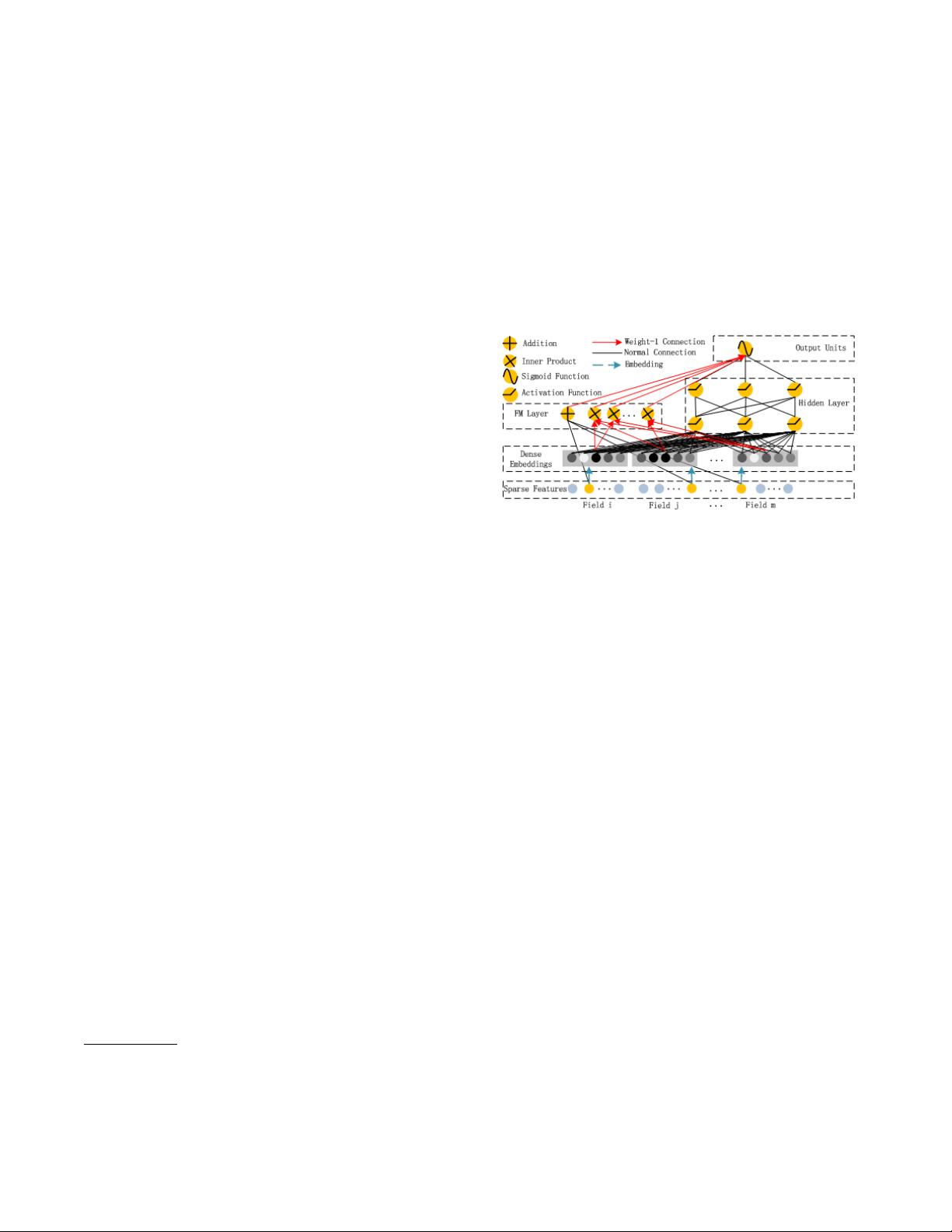

Figure 1: Wide & deep architecture of DeepFM. The wide and deep

component share the same input raw feature vector, which enables

DeepFM to learn low- and high-order feature interactions simulta-

neously from the input raw features.

can be used as a signal for CTR. As a second observation,

male teenagers like shooting games and RPG games, which

means that the (order-3) interaction of app category, user gen-

der and age is another signal for CTR. In general, such inter-

actions of features behind user click behaviors can be highly

sophisticated, where both low- and high-order feature interac-

tions should play important roles. According to the insights

of the Wide & Deep model

[

Cheng et al., 2016

]

from google,

considering low- and high-order feature interactions simulta-

neously brings additional improvement over the cases of con-

sidering either alone.

The key challenge is in effectively modeling feature interactions. Some feature interactions can be easily understood, and thus can be designed by experts (like the instances above). However, most other feature interactions are hidden in data and difficult to identify a priori (for instance, the classic association rule “diaper and beer” was mined from data rather than discovered by experts), and can only be captured automatically by machine learning. Even for easy-to-understand interactions, it seems unlikely that experts can model them exhaustively, especially when the number of features is large.
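To make the expert-designed interactions above concrete, the following sketch builds an order-2 and an order-3 cross feature by hand and counts how many field combinations an expert would have to consider. The field names, values, the `cross_feature` helper, and the choice of 40 fields are all hypothetical and chosen only for illustration; this is one common way of encoding manual crosses, not a method prescribed by the paper.

```python
from itertools import combinations
from math import comb


def cross_feature(sample, fields):
    """Join the values of the given fields into one categorical cross feature."""
    name = "&".join(fields)
    value = "&".join(str(sample[f]) for f in fields)
    return f"{name}={value}"


# Hypothetical sample; field names and values are illustrative only.
sample = {
    "app_category": "food_delivery",
    "hour_bucket": "meal_time",
    "gender": "male",
    "age_group": "teen",
}

# Order-2 interaction: app category x time.
order2 = cross_feature(sample, ("app_category", "hour_bucket"))
# Order-3 interaction: app category x gender x age.
order3 = cross_feature(sample, ("app_category", "gender", "age_group"))
print(order2)
print(order3)

# Enumerating every pairwise cross of the four fields already gives 6 features.
all_order2 = [cross_feature(sample, pair) for pair in combinations(sorted(sample), 2)]
print(len(all_order2))


def count_field_combinations(num_fields, max_order):
    """Number of field combinations of order 2 up to max_order."""
    return sum(comb(num_fields, k) for k in range(2, max_order + 1))


print(count_field_combinations(4, 3))   # 6 pairs + 4 triples = 10
print(count_field_combinations(40, 3))  # 780 pairs + 9880 triples = 10660
```

The count covers field combinations only; each combination expands into many distinct cross-feature values, which is why exhaustive manual design becomes impractical as the number of features grows.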

Despite their simplicity, generalized linear models, such as FTRL [McMahan et al., 2013], have shown decent performance in practice. However, a linear model lacks the ability to learn feature interactions, and a common practice is to manually include pairwise feature interactions in its feature vector. Such a method is hard to generalize to model high-order feature interactions or those that never or rarely appear in the training data [Rendle, 2010]. Factorization Machines

arXiv:1703.04247v1 [cs.IR] 13 Mar 2017