
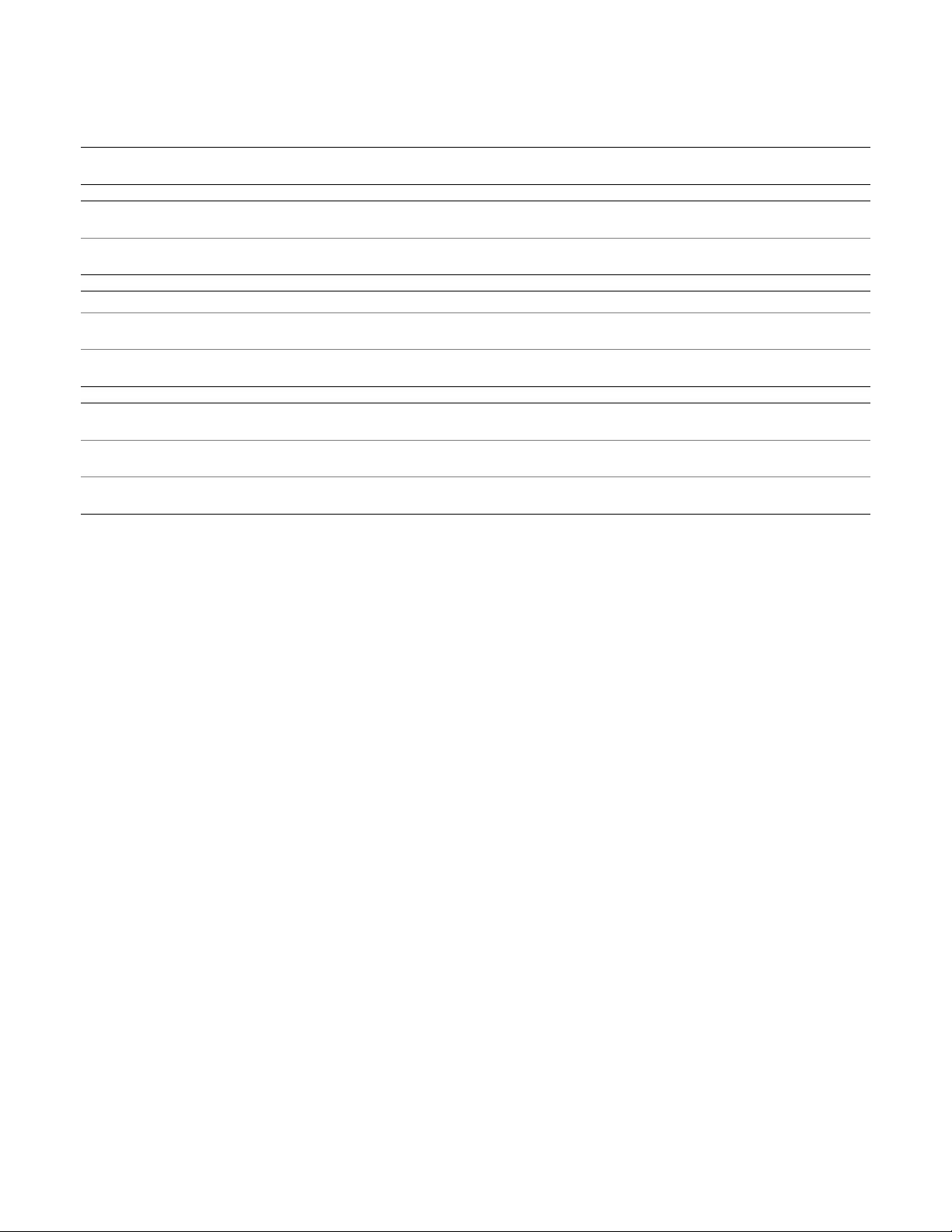

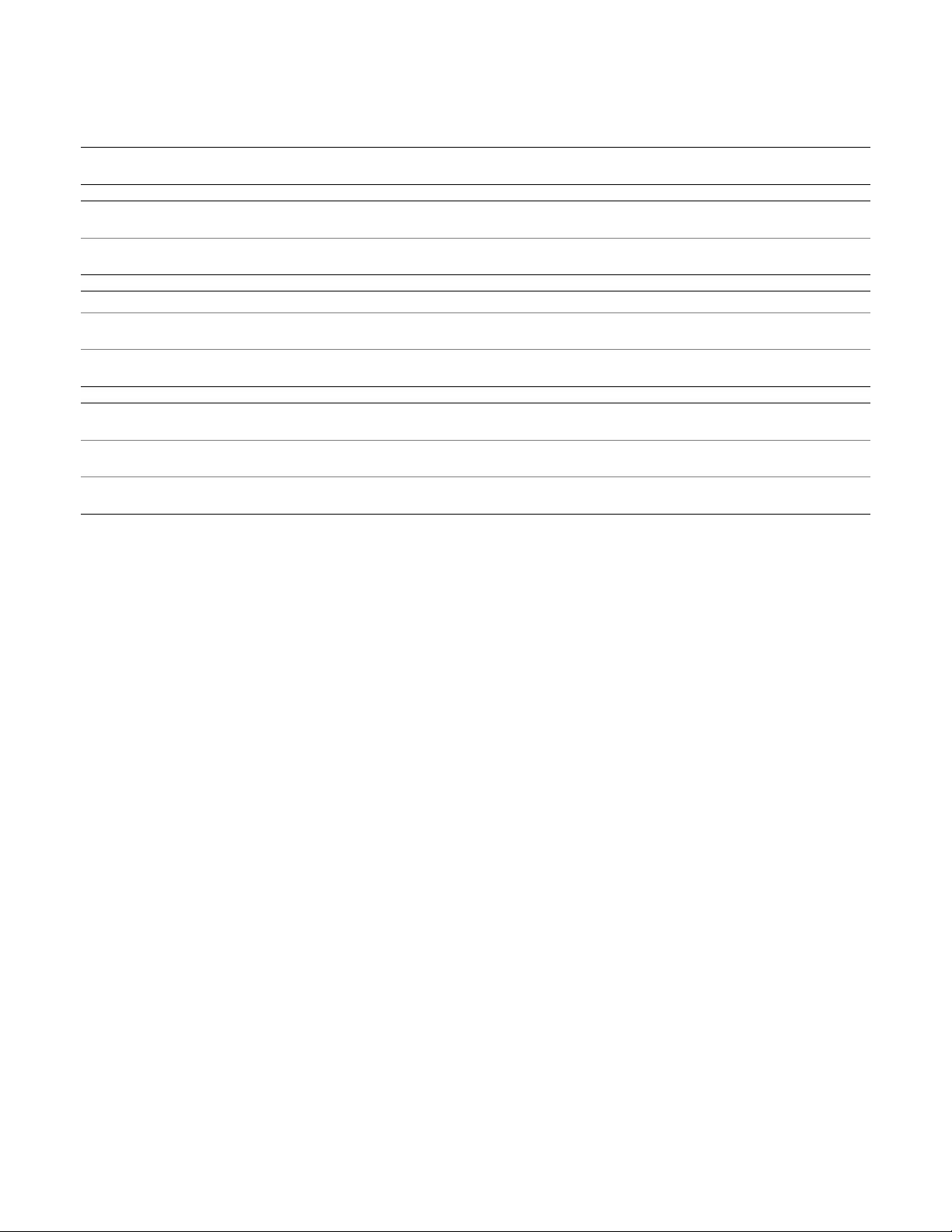

TABLE II: Classification-based methods (CNN: Convolutional Neural Network, CRF: Conditional Random Field, ICP: Iterative Closest Point, NMS: Non-Maximum Suppression, R: Real data, RF: Random Forest, S: Synthetic data, s-SVM: structured-Support Vector Machine; for symbols see Table I)

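| method | input | input pre-processing | training data | classification parameters | classifier training | trained classifier | refinement step | filtering | level |
|---|---|---|---|---|---|---|---|---|---|
| 2D-DRIVEN 3D | | | | | | | | | |
| GS3D [104] | RGB | ✗ | R | $\theta_y$ | $L_{ce}$ | CNN | CNN | ✗ | category |
| refinement step | RGB | ✗ | R | $\mathbf{x}, \mathbf{d}, \theta_y$ | $L_{ce}$ | CNN | ✗ | ✗ | |
| Papon et al. [88] | RGB-D | intensity & normal | R & S | $\theta_y, z$ | $L_{ce}$ | CNN | ✗ | NMS | category |
| Gupta et al. [81] | RGB-D | normal | S | $\theta_y$ | $L_{ce}$ | CNN | ICP | ✗ | category |
| 3D | | | | | | | | | |
| Sliding Shapes [89] | Depth | 3D grid | R & S | $\mathbf{x}, \mathbf{d}, \theta_y$ | $L_{hinge}$ | SVM | ✗ | NMS | category |
| Ren et al. [149] | RGB-D | 3D grid | R | $\mathbf{x}, \mathbf{d}, \theta_y$ | $IoU_{3D}$ | s-SVM | s-SVM | NMS | category |
| refinement step | RGB-D | 3D grid | R | $\mathbf{x}, \mathbf{d}, \theta_y$ | $IoU_{3D}$ | s-SVM | ✗ | ✗ | |
| Wang et al. [96] | LIDAR | 3D grid | R | $\mathbf{x}$ | $L_{hinge}$ | SVM | ✗ | NMS | category |
| Vote3Deep [77] | LIDAR | 3D grid | R | $\mathbf{x}, \theta_y$ | $L_{hinge}$ | CNN | ✗ | NMS | category |
| 6D | | | | | | | | | |
| Bonde et al. [27] | Depth | 3D grid | S | $\Theta$ | IG | RF | ✗ | ✗ | instance |
| Brachmann et al. [28] | RGB-D | ✗ | R & S | $\mathbf{x}$ | IG | RF | ICP | ✗ | instance |
| Krull et al. [29] | RGB-D | ✗ | R & S | $\mathbf{x}$ | IG | RF | CNN | ✗ | instance |
| refinement step | Depth | ✗ | R & S | $\mathbf{x}, \Theta$ | log-like | CNN | ✗ | ✗ | |
| Michel et al. [33] | RGB-D | ✗ | R & S | $\mathbf{x}$ | IG | RF | CRF & ICP | ✗ | instance |
| refinement step | RGB-D | ✗ | R & S | $\mathbf{x}, \Theta$ | ✗ | CRF | ICP | ✗ | |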

more accurate results [128], [129], [130], [131], [132]. In classification forests, information gain is often used as the quality function $Q_{cla}$, and in regression tasks, the training objective $Q_{reg}$ is to minimize the variance in translation offset vectors and rotation parameters. For pose regression problems, a Hough voting process [133] is usually employed.
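The two quality functions described above can be illustrated with a minimal sketch. It assumes a binary split of the samples reaching a node, class labels for the classification objective, and offset vectors treated as plain Euclidean tuples for the regression objective (angular wrap-around of rotation parameters is ignored). The function names are hypothetical and are not taken from any of the cited methods.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, left, right):
    """Classification quality: entropy reduction achieved by a binary split."""
    n = len(parent)
    return (entropy(parent)
            - (len(left) / n) * entropy(left)
            - (len(right) / n) * entropy(right))


def variance(vectors):
    """Mean squared distance of the vectors to their centroid."""
    if not vectors:
        return 0.0
    n, dim = len(vectors), len(vectors[0])
    mean = [sum(v[k] for v in vectors) / n for k in range(dim)]
    return sum(sum((v[k] - mean[k]) ** 2 for k in range(dim)) for v in vectors) / n


def variance_reduction(parent, left, right):
    """Regression quality: drop in offset variance achieved by a binary split."""
    n = len(parent)
    return (variance(parent)
            - (len(left) / n) * variance(left)
            - (len(right) / n) * variance(right))
```

During training, a node would evaluate many candidate splits and keep the one with the highest quality value; how candidates are sampled is left out of this sketch.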

A. Classification

The overall schematic of the classification-based methods is shown in Fig. 1. In the figure, blocks drawn with continuous lines are employed by all methods, whereas dashed-line blocks are additionally used by specific groups of methods, depending on the architecture design.

Training Phase. During an off-line stage, classifiers are trained on synthetic or real data. Synthetic data are generated using the 3D model M of an object of interest O: a set of RGB/D/RGB-D images is rendered from different camera viewpoints. The 3D model M can be either a CAD model or a reconstructed model, and the following factors are considered when deciding the size of the data:

• Reasonable viewpoint coverage. To capture reasonable viewpoint coverage of the target object, synthetic images are rendered by placing a virtual camera at each vertex of a subdivided icosahedron of a fixed radius. Either a hemisphere or the full sphere of the icosahedron can be used, depending on the scenario.

• Object distance. Synthetic images are rendered at different scales depending on the range in which the target object is located.
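The viewpoint sampling described in the two items above can be sketched as follows. This is a minimal illustration under stated assumptions: the object sits at the origin, the z axis points up (so the hemisphere option keeps vertices with non-negative z), and every name (`icosahedron`, `subdivide`, `camera_positions`) is hypothetical. Rendering itself is not shown.

```python
import itertools
import math


def normalize(v):
    """Project a 3D point onto the unit sphere."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def icosahedron():
    """Return (vertices, faces) of an icosahedron inscribed in the unit sphere."""
    t = (1.0 + math.sqrt(5.0)) / 2.0  # golden ratio
    raw = []
    for a in (-1.0, 1.0):
        for b in (-t, t):
            raw += [(0.0, a, b), (a, b, 0.0), (b, 0.0, a)]

    # In this construction every edge has length 2, and the faces are exactly
    # the triples of mutually adjacent vertices.
    def is_edge(i, j):
        return abs(math.dist(raw[i], raw[j]) - 2.0) < 1e-9

    faces = [f for f in itertools.combinations(range(12), 3)
             if all(is_edge(i, j) for i, j in itertools.combinations(f, 2))]
    return [normalize(v) for v in raw], faces


def subdivide(vertices, faces):
    """Split each triangle into four, pushing new vertices onto the sphere."""
    vertices = list(vertices)
    cache = {}  # edge (i, j) -> index of its midpoint vertex

    def midpoint(i, j):
        key = (min(i, j), max(i, j))
        if key not in cache:
            m = tuple((a + b) / 2.0 for a, b in zip(vertices[i], vertices[j]))
            vertices.append(normalize(m))
            cache[key] = len(vertices) - 1
        return cache[key]

    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return vertices, new_faces


def camera_positions(radii, levels=1, upper_hemisphere=False):
    """Camera centres on spheres of the given radii around the object."""
    verts, faces = icosahedron()
    for _ in range(levels):
        verts, faces = subdivide(verts, faces)
    if upper_hemisphere:
        verts = [v for v in verts if v[2] >= -1e-9]
    return [tuple(r * c for c in v) for r in radii for v in verts]
```

Each subdivision level adds one vertex per edge, so the full sphere yields 12, 42, and 162 viewpoints per radius at levels 0, 1, and 2. Passing several radii covers the object-distance factor.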

Computer graphics systems provide precise data annotation, and hence synthetic data generated by these systems are used by the classification-based methods [88], [81], [89], [27], [28], [29], [33]. Accurate object pose annotations are hard to obtain for real images; nevertheless, several classification-based methods use real training data [104], [88], [89], [149], [77], [96], [28], [29], [33]. Training data are annotated with pose parameters, i.e., 3D translation $\mathbf{x} = (x, y, z)$, 3D rotation $\Theta = (\theta_r, \theta_p, \theta_y)$, or both. Once the training data are generated, the classifiers are trained using the related loss functions.
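As one illustration of such a loss, the sketch below treats rotation estimation as classification: a continuous yaw angle is discretized into equally sized bins, and the cross-entropy between the predicted bin scores and the ground-truth bin is evaluated. The bin layout, the number of bins, and the function names are assumptions made for this example; the surveyed methods may discretize differently.

```python
import math


def angle_to_bin(theta, num_bins):
    """Map an angle in radians to a bin index in [0, num_bins)."""
    width = 2.0 * math.pi / num_bins
    # The final modulo guards against theta % 2*pi rounding up to 2*pi.
    return int((theta % (2.0 * math.pi)) // width) % num_bins


def bin_to_angle(index, num_bins):
    """Return the centre angle of a bin, used to decode a prediction."""
    width = 2.0 * math.pi / num_bins
    return (index + 0.5) * width


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_bin):
    """Cross-entropy loss for one sample with a single ground-truth bin."""
    return -math.log(softmax(logits)[target_bin])
```

With uniform scores over four bins the loss equals log 4, and it decreases as the score of the correct bin grows relative to the others.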

Testing Phase. During an on-line stage, the classifiers take a real test image as input. 2D-driven 3D methods [104], [88], [81] first extract a 2D BB around the object of interest (2D BB generation block), which is then lifted to 3D. Depending on the input, the methods in [88], [81], [89], [149], [96], [77], [27] apply a pre-processing step to the input image and then generate 3D hypotheses (input pre-processing block). 6D object pose estimators [27], [28], [29], [33] extract features from the input images (feature extraction block) and estimate the objects' 6D pose using the trained classifiers. Several methods further refine the output of the trained classifiers [104], [81], [149], [28], [29], [33] (refinement block) and finally hypothesize the object pose after filtering.
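The filtering step is commonly realized with non-maximum suppression (NMS). The sketch below shows a greedy NMS over scored 3D box hypotheses. As a simplifying assumption, boxes are axis-aligned and given as `(xmin, ymin, zmin, xmax, ymax, zmax)`; oriented boxes with a yaw angle would need a rotated-overlap computation that is not shown. The function names and the threshold value are hypothetical.

```python
def volume(box):
    """Volume of an axis-aligned box (xmin, ymin, zmin, xmax, ymax, zmax)."""
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def iou_3d(a, b):
    """Intersection over union of two axis-aligned 3D boxes."""
    inter = 1.0
    for k in range(3):
        overlap = min(a[k + 3], b[k + 3]) - max(a[k], b[k])
        if overlap <= 0.0:
            return 0.0  # boxes are disjoint along this axis
        inter *= overlap
    return inter / (volume(a) + volume(b) - inter)


def nms_3d(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap strongly with a kept one.
        if all(iou_3d(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

For two nearly identical hypotheses and one distant hypothesis, only the higher-scoring duplicate and the distant box survive.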

Table II details the classification-based methods. GS3D [104] concentrates on extracting the 3D information hidden in a 2D image to generate accurate 3D BB hypotheses. It modifies Faster R-CNN [117] to classify the rotation $\theta_y$ in RGB images in addition to the 2D BB parameters. Using another CNN architecture, it further refines the object's pose parameters by classifying $\mathbf{x}$, $\mathbf{d}$, and $\theta_y$. Papon et al. [88] estimate semantic poses of common furniture classes in complex cluttered scenes. The input is converted into intensity (RGB) and surface normal (D) images. 2D BB proposals are generated using the 2D GOP detector [122] and are then lifted to 3D space by further classifying $\theta_y$ and $z$ using the bin-based cross entropy loss $L_{ce}$. Gupta et al. [81] start with the segmented