The primary goal of MVD is the high-quality reconstruction of an arbitrary number of views for advanced stereoscopic processing, in support of auto-stereoscopic displays. The quality of a virtual view depends strongly on the quality of the depth map, so efficient depth map coding is crucial in a 3D video coding system. The main objective of depth map coding is to compress the depth map while guaranteeing that the decoded depth can still be used to synthesise high-quality virtual views. It is important to note that the depth map is used only in the view rendering process and is not displayed directly. Therefore, it is necessary to understand how distortion in the depth map affects the quality of the rendered view. In standard video compression, quantisation errors directly affect the rendered view quality by adding noise to the luminance or chrominance level of each pixel. In contrast, distortion in the depth map affects the rendered video quality indirectly: a depth map error leads to a geometric error in the interpolation, which in turn translates into errors in the luminance or chrominance of the rendered view.
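As a rough illustration of this indirect effect, consider the simplified case of two rectified, parallel cameras with focal length $f$ and baseline $B$. This configuration and the symbols $f$, $B$, $d$ and $\Delta Z$ are assumptions introduced here for illustration only. A point at depth $Z$ is shifted horizontally between the two views by the disparity $d = fB/Z$, so a depth error $\Delta Z$ displaces the warped pixel by

$$\Delta d = fB\left(\frac{1}{Z} - \frac{1}{Z + \Delta Z}\right) \approx \frac{fB\,\Delta Z}{Z^{2}}, \qquad |\Delta Z| \ll Z$$

Under these assumptions the position error grows with the baseline and is largest for objects close to the camera; the rendered view then takes its luminance and chrominance from the wrong position.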

This section briefly reviews the general idea of

depth-based view synthesis.

DIBR is the process of synthesising virtual views of a scene from a reference colour image and associated per-pixel depth information. First, a pixel $(x_r, y_r)$ in the reference view is warped to the world coordinates $(u, v, w)$, using the depth of the reference view,

$$[u, v, w]^T = R_r A_r^{-1} [x_r, y_r, 1]^T Z_r(x_r, y_r) + T_r \tag{1}$$

where $A_r$, $R_r$ and $T_r$ are the intrinsic matrix, rotation matrix and translation vector of the reference camera, respectively. $Z_r(x_r, y_r)$ is the depth value associated with $(x_r, y_r)$, and the subscript $r$ indicates the reference view. Then, the 3D point is mapped to the virtual view,

$$[x', y', z']^T = A_v R_v^{-1} \left\{ [u, v, w]^T - T_v \right\} \tag{2}$$

where the subscript v refers to the virtual view.

By combining equations (1) and (2),

$$[x', y', z']^T = A_v R_v^{-1} \left\{ R_r A_r^{-1} [x_r, y_r, 1]^T Z_r(x_r, y_r) + T_r - T_v \right\} \tag{3}$$

The corresponding pixel in the rendered image of the virtual view is $(x_v, y_v) = (x'/z', y'/z')$. Equation (2) describes a depth-dependent relation between the pixel coordinates of corresponding points in an image pair. According to equation (3), an arbitrary virtual view can be generated, provided that the depth value $Z_r(x_r, y_r)$ is known for every pixel in the reference image and the camera parameters are available. However, viewpoint navigation is constrained by the disocclusion problem: ‘holes’ appear in the synthesised image wherever areas occluded in the reference view become visible in the virtual view. Such artefacts become obvious when the virtual view is very far from its reference.
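The warping chain of equations (1) to (3) can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions, not the implementation used in this paper: the function name `warp_pixel` and all camera values below are hypothetical, and rounding to the pixel grid, depth-ordering and hole filling are left out.

```python
import numpy as np

def warp_pixel(x_r, y_r, z_r, A_r, R_r, T_r, A_v, R_v, T_v):
    """Warp one reference pixel into the virtual view (hypothetical helper)."""
    p_r = np.array([x_r, y_r, 1.0])
    # Equation (1): back-project the pixel to world coordinates (u, v, w).
    world = R_r @ np.linalg.inv(A_r) @ p_r * z_r + T_r
    # Equation (2): map the world point into the virtual camera.
    x_h, y_h, z_h = A_v @ np.linalg.inv(R_v) @ (world - T_v)
    # Divide by the third coordinate to obtain the pixel position (x_v, y_v).
    return x_h / z_h, y_h / z_h

# Assumed toy setup: identical intrinsics, no rotation, and a virtual
# camera shifted by 0.1 units along the x axis.
A = np.array([[1000.0, 0.0, 320.0],
              [0.0, 1000.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
T_ref = np.zeros(3)
T_virt = np.array([0.1, 0.0, 0.0])

# With the correct depth the pixel lands at approximately (350, 300).
x_v, y_v = warp_pixel(400, 300, 2.0, A, R, T_ref, A, R, T_virt)
# With a 10% depth error it lands roughly 4.5 pixels further right.
x_e, y_e = warp_pixel(400, 300, 2.2, A, R, T_ref, A, R, T_virt)
print(x_v, y_v, x_e - x_v)
```

The second call shows how an error in the depth value alone moves the warped position, which is the geometric error that later appears as a luminance or chrominance error in the rendered view.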

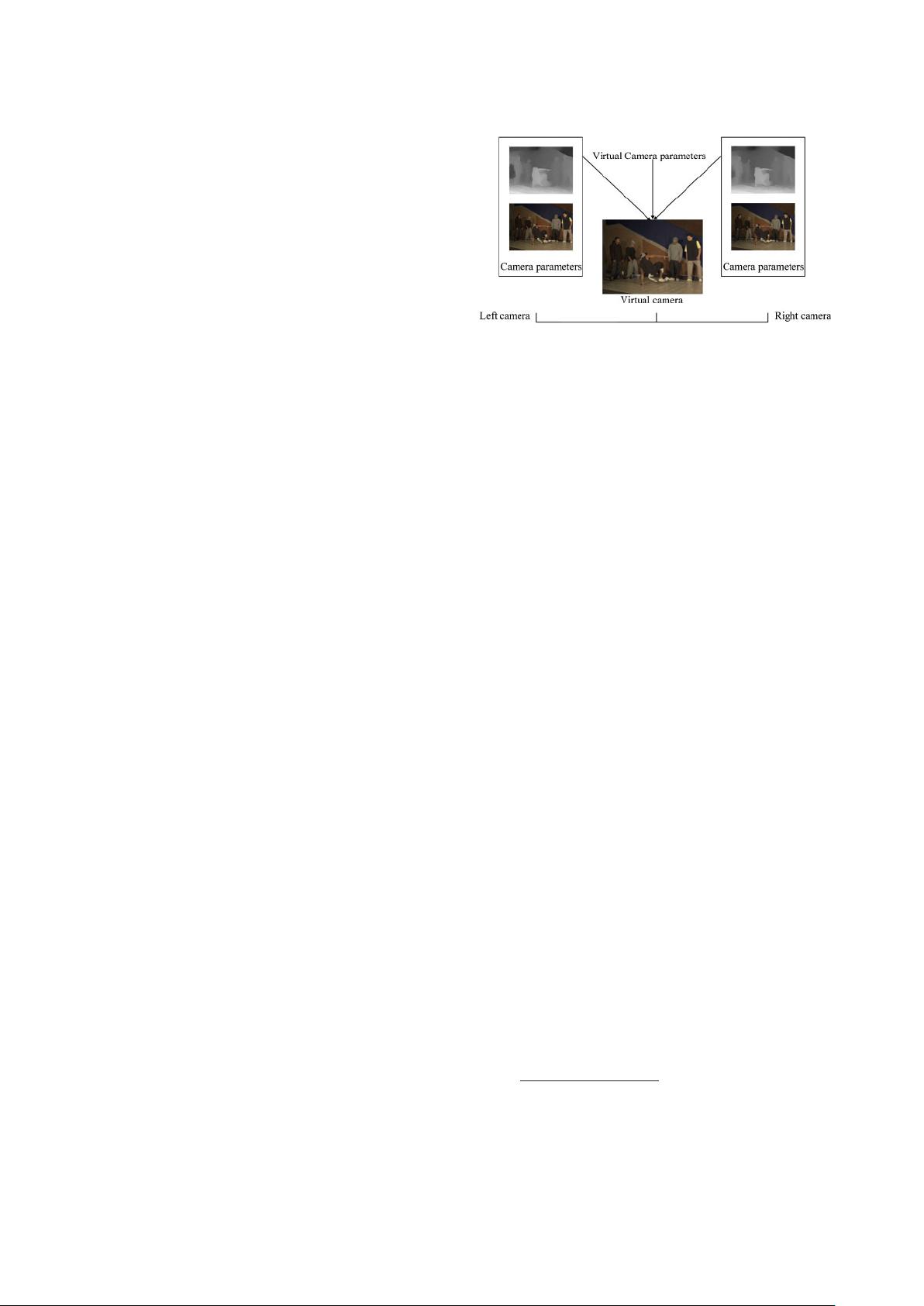

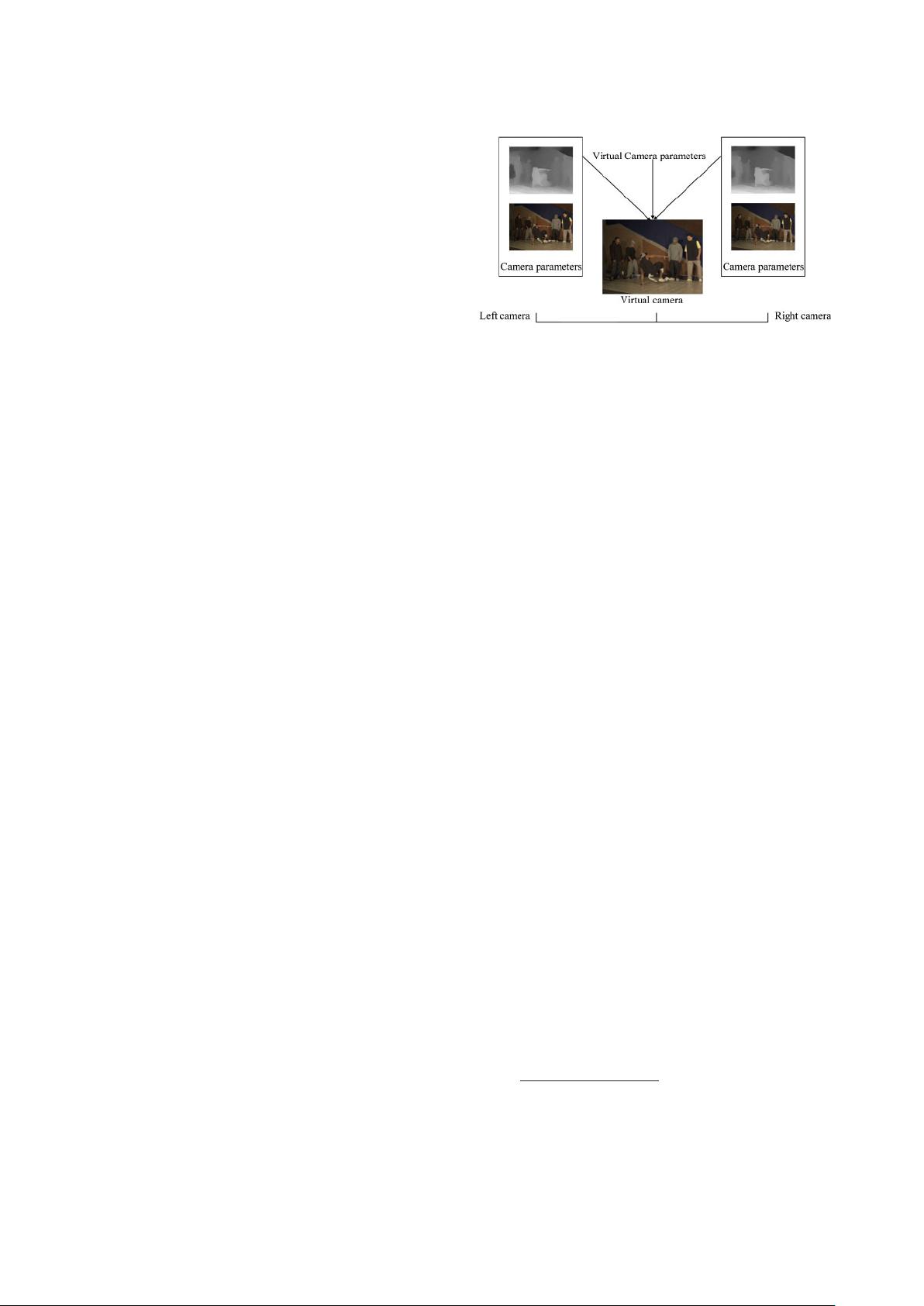

To reduce the impact of disocclusion, MPEG has recently proposed an ‘MVD’ data format, which enables a virtual view to be generated from more than one reference view. Figure 2 shows a classic illustration of view synthesis based on this data format. In this paper, the camera placed to the left of the virtual camera is called the left camera, and the other one is called the right camera. In the example, each pixel in the virtual view is composed of a weighted sum of its corresponding points in the two reference views (the left view and the right view).
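The weighted combination of the two reference views can be sketched as follows, using the visibility cases and the distance-based weight of equations (4) and (5) in this section. This is a hedged sketch only: the function names `blend_weight` and `blend_views` are hypothetical, the two images are assumed to be already warped to the virtual view, the boolean visibility masks are assumed to come from the warping stage, and the distance in equation (5) is taken to be the Euclidean norm.

```python
import numpy as np

def blend_weight(T_V, T_L, T_R):
    """Weight of the right view from camera positions, as in equation (5)."""
    d_L = np.linalg.norm(np.asarray(T_V, dtype=float) - np.asarray(T_L, dtype=float))
    d_R = np.linalg.norm(np.asarray(T_V, dtype=float) - np.asarray(T_R, dtype=float))
    return d_L / (d_L + d_R)

def blend_views(I_L, I_R, vis_L, vis_R, alpha):
    """Blend two warped views pixel by pixel, as in equation (4)."""
    I_L = np.asarray(I_L, dtype=float)
    I_R = np.asarray(I_R, dtype=float)
    vis_L = np.asarray(vis_L, dtype=bool)
    vis_R = np.asarray(vis_R, dtype=bool)

    out = np.zeros_like(I_L)          # pixels visible in neither view stay 0
    both = vis_L & vis_R
    only_L = vis_L & ~vis_R
    only_R = vis_R & ~vis_L

    out[both] = (1.0 - alpha) * I_L[both] + alpha * I_R[both]
    out[only_L] = I_L[only_L]
    out[only_R] = I_R[only_R]
    return out
```

A virtual camera closer to the left camera gives a small weight, so the left view dominates wherever both views see the pixel; pixels seen by neither camera remain holes that a later inpainting step would have to fill.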

Taking into account the occlusion effect, the virtual view is rendered by blending the two neighbouring images, as described in equation (4)

$$I_V(x_V, y_V) = \begin{cases} (1-\alpha)\, I_L(x_L, y_L) + \alpha\, I_R(x_R, y_R), & \text{if } (x_V, y_V) \text{ is visible in both cameras } L \text{ and } R \\ I_L(x_L, y_L), & \text{if } (x_V, y_V) \text{ is only visible in camera } L \\ I_R(x_R, y_R), & \text{if } (x_V, y_V) \text{ is only visible in camera } R \\ 0, & \text{otherwise} \end{cases} \tag{4}$$

$$\alpha = \frac{|T_V - T_L|}{|T_V - T_L| + |T_V - T_R|} \tag{5}$$

where $I_V(x_V, y_V)$ is the pixel value at the virtual image plane, $I_L(x_L, y_L)$ and $I_R(x_R, y_R)$ respectively

Figure 2 View synthesis based on multiview video plus depth

DEPTH MAP COMPRESSION IN 3D VIDEO 3


© RPS 2012 The Imaging Science Journal Vol 0