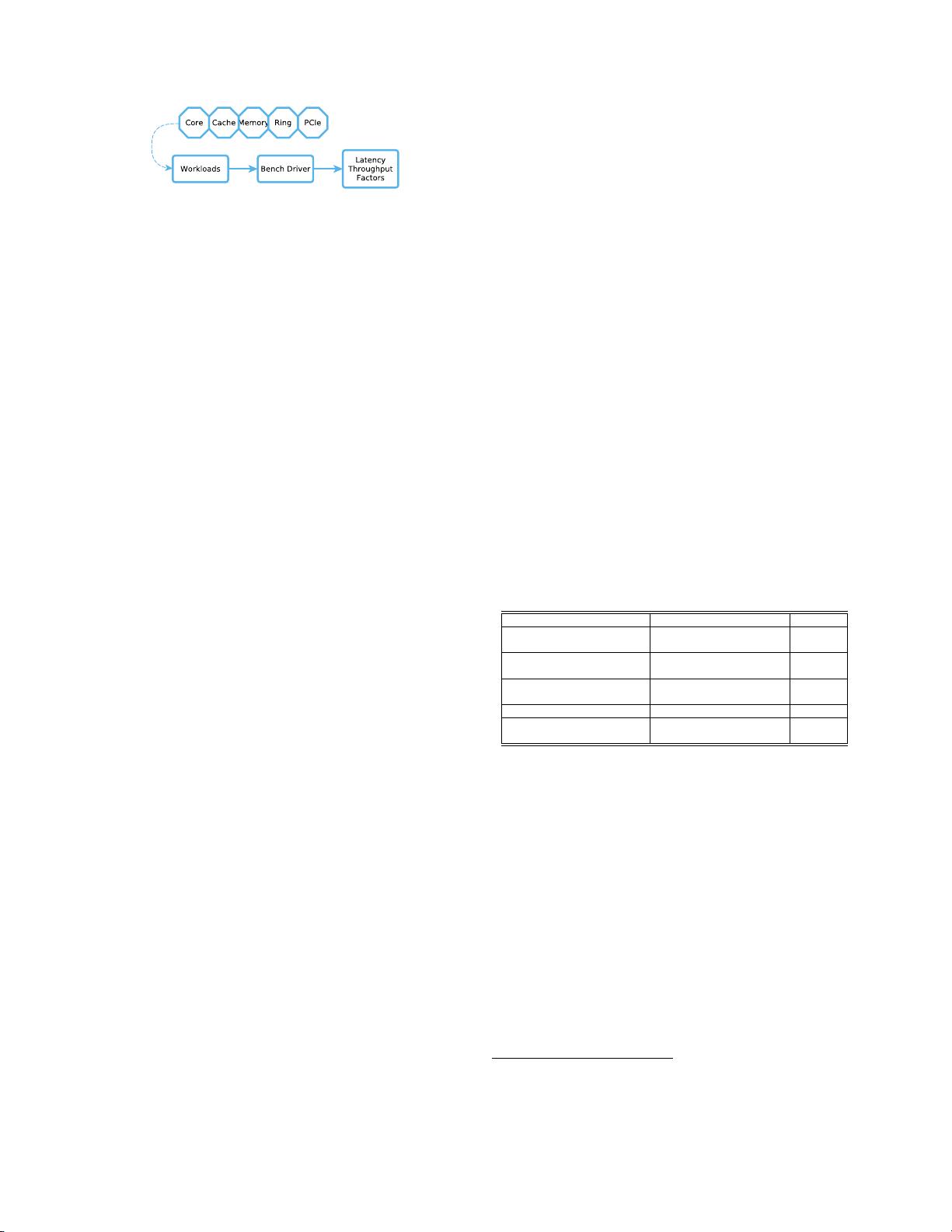

Figure 2: The MIC-Meter Overview.

a highly accurate, non-intrusive timing method. Alterna-

tively, we can measure a long enough sequence of operations

with an accurate timer, and estimate latency per operation

by dividing the measured time by the number of operations.

In this paper, latency measurements are done with a single

thread (for individual operations) or two threads (for trans-

fer operations) with Pthreads. All latency benchmarks are

written in C (with inline assembly).

Throughput is the number of (a type of) operations exe-

cuted in a given unit of time. As higher throughput means

better performance, microbenchmarking focuses on measur-

ing the maximum achievable throughput for different oper-

ations, under different loads; typically, the benchmarked

throughput values are slightly lower than the theoretical

ones. Thus, to measure maximum throughput, the main

challenge is to build the workload such that the resource that

is being evaluated is fully utilized. For example, when mea-

suring computational throughput, enough threads should be

used to fully utilize the cores, while when measuring memory

bandwidth, the workload needs to have sufficient threads to

generate enough memory requests. For all the throughput

measurements in this paper, our multi-threaded workloads

are written in C and OpenMP.

We note that the similarities between Phi and a regular

multi-core CPU allow us to adapt existing CPU benchmarks

to the requirements of Xeon Phi. In most cases, we use such

”refurbished” solutions, that prove to serve our purposes.

3. EMPIRICAL EVALUATION

In the following sections, we present in detail the MIC-

Meter and the results for each of the components: (1) the

vector processing cores, (2) the on-chip and off-chip memory,

(3) the ring interconnect, and (4) the PCIe connection.

3.1 Vector Processing Cores

We evaluate the vector processing cores in terms of both

instruction latency and throughput. For latency, we use

a method similar to those proposed by Agner Fog [7] and

Torbjorn Granlund [9]: we measure instruction latency by

running a (long enough) sequence of dependent instructions

(i.e., a list of instructions that, being dependent on each

other, are forced to be executed sequentially - an instruction

stream).

The same papers propose a similar approach to measure

throughput in terms of instructions per cycle (IPC). How-

ever, we argue that a measurement that uses all processing

cores together, and not in isolation, is more realistic for pro-

grammers. Thus, we develop a flops microbenchmark to

explore the factors for reaching the theoretical maximum

throughput on Xeon Phi (Section 3.1.2).

3.1.1 Vector Instruction Latency

Xeon Phi introduces 177 vector instructions [11]. We

roughly divide these instructions into five classes

3

: mask

instructions, arithmetic (logic) instructions, conversion in-

structions, permutation instructions, and extended mathe-

matical instructions.

The benchmark for measuring the latency of vector in-

structions is measuring the execution time of a sequence

of 100 vector operations using the same format: zmm1 =

op(zmm1, zmm2), where zmm1 and zmm2 represent two

vectors and op is the instruction being measured. By mak-

ing zmm1 be both a source operand and the destination

operand, we ensure the instruction dependency - i.e., the

current operation will depend on the result of the previous

one.

For special classes of instructions - such as the conversion

instructions vcvtps2pd and vcvtpd2ps - we have to measure

the latency of the conversion pair (zmm2 = op12(zmm1);

zmm1 = op21(zmm2)) in order to guarantee the depen-

dency between contiguous instructions (i.e., it is not possi-

ble to write the result of the conversion in the same source

operand, due to type incompatibility). Similarly, we mea-

sure the latency of extended mathematical instructions such

as vexp223ps and vlog2ps in pairs, to avoid overflow (e.g.,

when using 100 successive exp()’s).

The interesting results for vector instruction latency are

presented in Table 1. With these latency numbers, we know

how many threads or instruction streams we need to hide

the latency on one processing core.

Table 1: The vector instruction latency (in cycles).

Instruction Category Latency

kand, kor,

knot, kxor

mask instructions 2

vaddpd, vfmadd213pd,

vmulpd, vsubpd

arithmetic instructions 4

vcvtdq2pd, vcvtfxpntdq2ps,

vcvtfxpntps2dq, vcvtps2pd

convert instructions 5

vpermd, vpermf32x4 permutation instructions 6

vexp223ps, vlog2ps,

vrcp23ps, vrsqrt23ps

extended

mathematical instructions

6

3.1.2 Vector Instruction Throughput

The Xeon Phi 5100 has 60 cores working at 1.05 GHz,

and each core can process 8 double-precision data elements

at a time, with maximum 2 operations (multiply-add or

mad) per cycle in each lane (i.e., a vector element). There-

fore, the theoretical instruction throughput is 1008 GFlops

(approximately 1 TFlop). But is this 1 TFlop perfor-

mance actually achievable? To measure the instruction

throughput, we run 1, 2, 4 threads on a core (60, 120, and

240 threads in total). During measurement, each thread per-

forms one or two instruction streams for a fixed number of

iterations: b

i+1

= b

i

op a, where i represents the iteration,

a is a constant, and b serves as an operand and the destina-

tion. The loop was fully unrolled to avoid branch overheads.

The microbenchmark is vectorized using explicit intrinsics,

to ensure a 100% vector usage.

3

Note that we choose not to measure the latency of memory

access instructions because the latency results are highly

dependent on the data location(s).