Q. Hu et al. / Neural Networks 57 (2014) 1–11 3

we derive a general loss function and construct ν-support vector regression machines for a general noise model.

Finally, we design a technique to find the optimal solution to the corresponding regression tasks. Although many implementations of SVR algorithms have been proposed in recent years, we adopt the Augmented Lagrange Multiplier (ALM) method, presented in Section 4. If the task is non-differentiable or discontinuous, the sub-gradient descent method can be used (Ma, 2010), and if the number of samples is very large, SMO can also be used (Shevade, Keerthi, Bhattacharyya, & Murthy, 2000).

The main contributions of our work are as follows: (1) we derive the optimal loss functions for different error models using a Bayesian approach and optimization theory; (2) we develop a unified ν-support vector regression model with inequality constraints for general noise (N-SVR); (3) we apply the Augmented Lagrange Multiplier method to solve N-SVR, which guarantees the stability and validity of the N-SVR solution; (4) we apply N-SVR to short-term wind speed prediction and show the effectiveness of the proposed model in practical applications.

This paper is organized as follows: in Section 2 we derive the optimal loss function corresponding to a noise model using the Bayesian approach; in Section 3 we describe the proposed ν-support vector regression technique for a general noise model (N-SVR); in Section 4 we give the solution and algorithm design of N-SVR; in Sections 5 and 6 we conduct numerical experiments on artificial data sets, UCI data sets, and short-term wind speed prediction; finally, we conclude the work in Section 7.

2. Bayesian approach to the general loss function

Given a set of noisy training samples $D_l$, we wish to estimate an unknown function $f(x)$. Following Chu, Keerthi, and Ong (2004), Girosi (1991), Klaus-Robert and Sebastian (2001), and Pontil et al. (1998), the general approach is to minimize

$$H[f] = \sum_{i=1}^{l} c(\xi_i) + \lambda \cdot \Phi[f], \tag{7}$$

where $c(\xi_i) = c(y_i - f(x_i))$ is a loss function, $\lambda$ is a positive number and $\Phi[f]$ is a smoothness functional.
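As a minimal sketch of Eq. (7), the Python function below evaluates $H[f]$ for a linear model $f(x) = \omega^T x + b$ with a user-supplied loss $c$. The choice $\Phi[f] = \tfrac12\lVert\omega\rVert^2$ is an assumption made here for illustration (the text leaves $\Phi$ generic), and the name `regularized_functional` is hypothetical.

```python
from typing import Callable, Sequence


def regularized_functional(
    xs: Sequence[Sequence[float]],
    ys: Sequence[float],
    w: Sequence[float],
    b: float,
    loss: Callable[[float], float],
    lam: float,
) -> float:
    """H[f] = sum_i c(y_i - f(x_i)) + lam * Phi[f] for the linear model f(x) = w.x + b.

    Phi[f] = 0.5 * ||w||^2 is an assumed smoothness functional, chosen for illustration.
    """
    if lam <= 0:
        raise ValueError("lambda must be positive")
    data_term = 0.0
    for x, y in zip(xs, ys):
        f_x = sum(wj * xj for wj, xj in zip(w, x)) + b  # model prediction f(x_i)
        data_term += loss(y - f_x)                       # c(xi_i) with xi_i = y_i - f(x_i)
    phi = 0.5 * sum(wj * wj for wj in w)
    return data_term + lam * phi


# Usage: squared loss on points that lie exactly on y = 2x + 1.
xs = [[0.0], [1.0], [2.0]]
ys = [1.0, 3.0, 5.0]
print(regularized_functional(xs, ys, [2.0], 1.0, lambda r: r * r, lam=0.1))
```

Because the points lie exactly on the line, the data term vanishes and only the penalty remains: $H[f] = 0.1 \cdot \tfrac12 \cdot 2^2 = 0.2$.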

We assume the noise is additive:

$$y_i = f(x_i) + \xi_i, \quad i = 1, 2, \ldots, l, \tag{8}$$

where the $\xi_i$ are independent and identically distributed (i.i.d.) random variables with probability density $P(\xi_i)$, mean $\mu$ and variance $\sigma^2$. We want to estimate the function $f(x)$ from the data set $D_f \subseteq D_l$. We take a probabilistic approach and regard the function $f$ as the realization of a random field with a known prior probability distribution. We are interested in maximizing the posterior probability of $f$ given the data $D_f$, namely $P[f \mid D_f]$, which by Bayes' rule can be written as

$$P[f \mid D_f] \propto P[D_f \mid f] \cdot P[f], \tag{9}$$

where $P[D_f \mid f]$ is the conditional probability of the data $D_f$ given the function $f$, and $P[f]$ is the prior probability of the random field $f$, which is often written as $P[f] \propto \exp(-\lambda \cdot \Phi[f])$, where $\Phi[f]$ is a smoothness functional. The probability $P[D_f \mid f]$ is essentially a model of the noise: if the noise is additive, as in Eq. (8), and i.i.d. with probability distribution $P(\xi_i)$, it can be written as

$$P[D_f \mid f] = \prod_{i=1}^{l} P(\xi_i). \tag{10}$$

Substituting P[f ] and Eq. (10) in (9), we see that the function

that maximizes the posterior probability of f is the one which

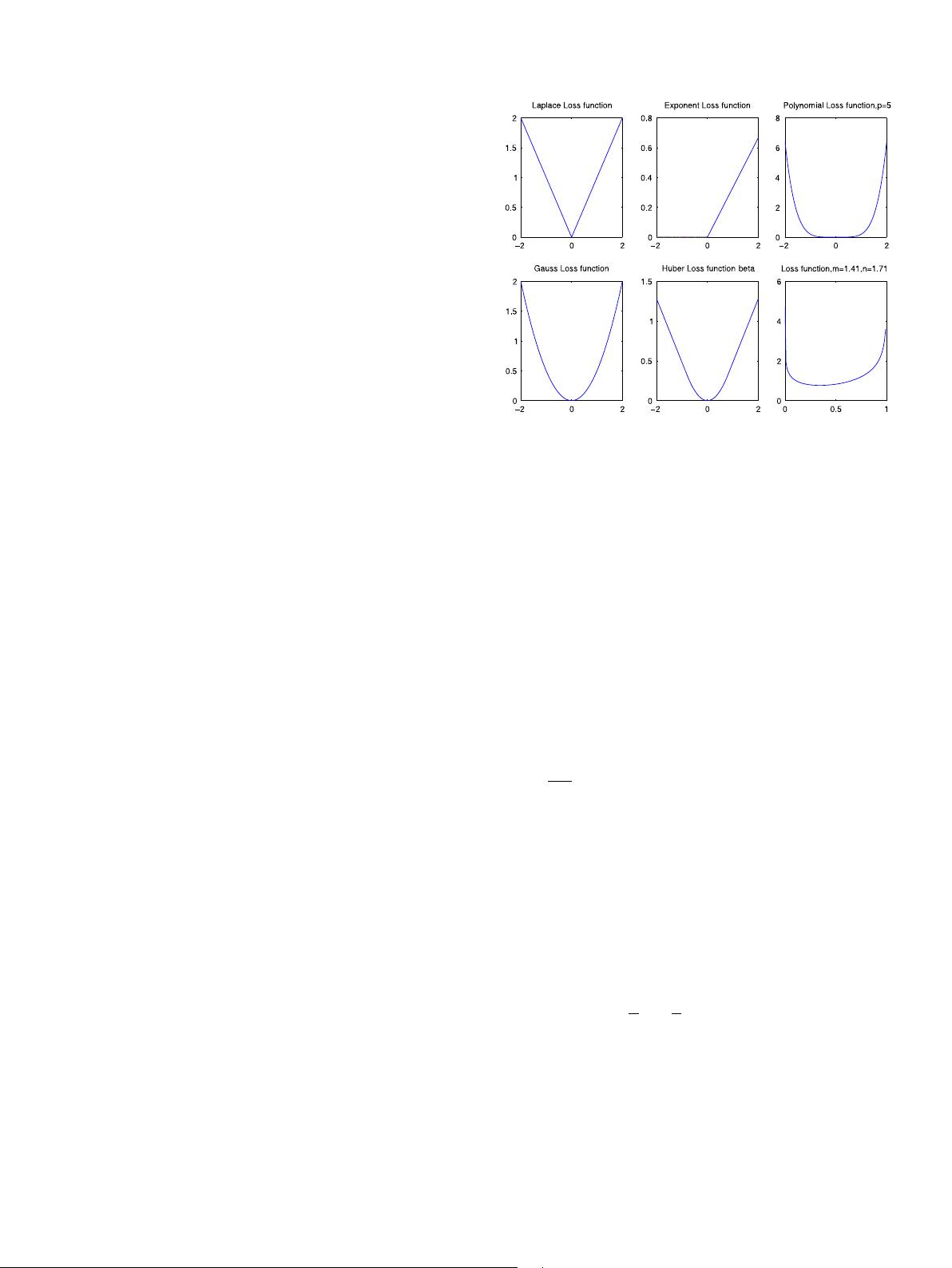

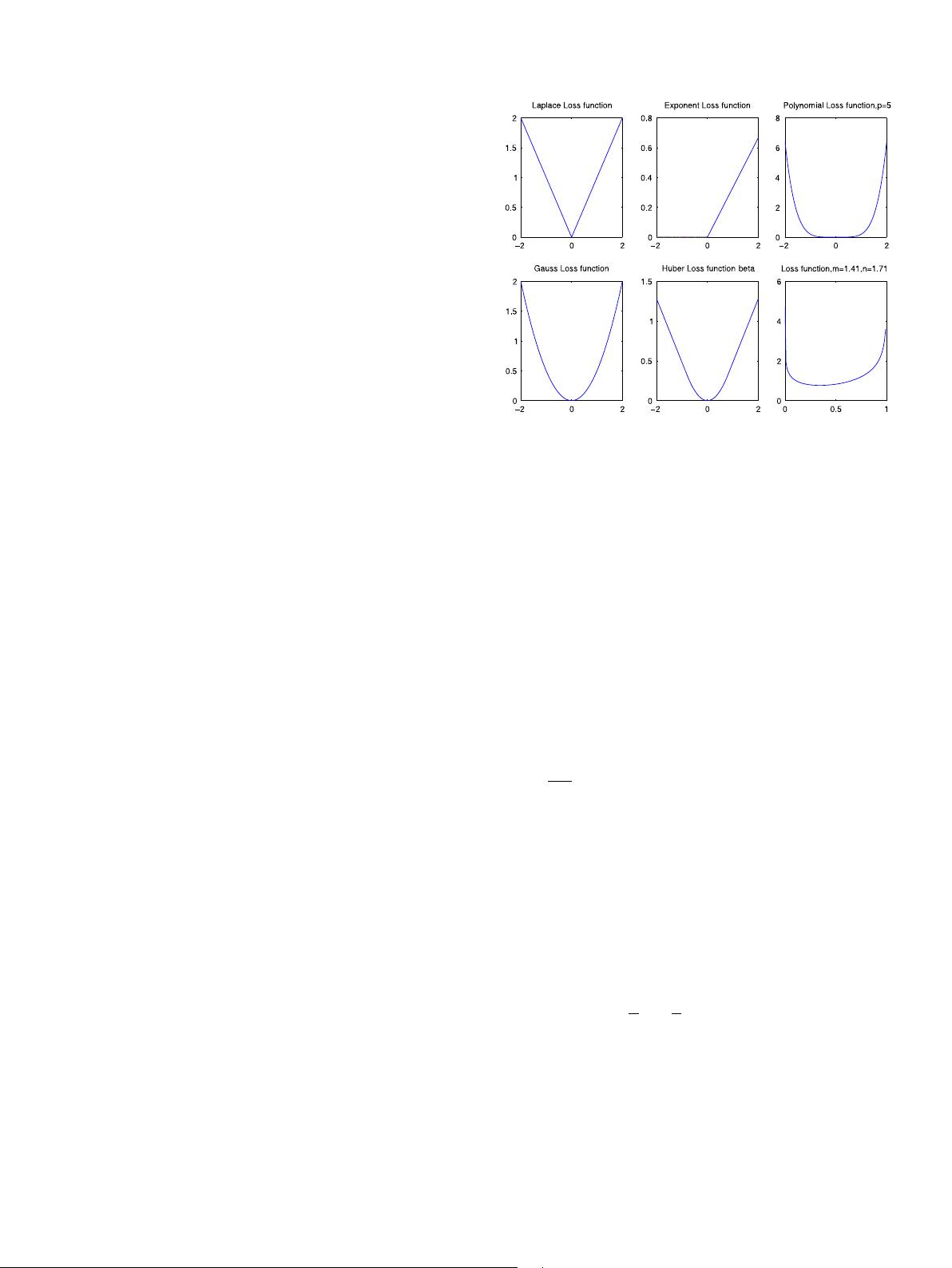

Fig. 2. Loss function of the corresponding noise model.

minimizes the following functional

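$$\begin{aligned} H[f] &= -\log\Big[\prod_{i=1}^{l} P\big(y_i - f(x_i)\big) \cdot e^{-\lambda \cdot \Phi[f]}\Big] \\ &= -\sum_{i=1}^{l} \log P\big(y_i - f(x_i)\big) + \lambda \cdot \Phi[f]. \end{aligned} \tag{11}$$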

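This functional has the same form as Eq. (7) (Girosi, 1991; Pontil et al., 1998). Comparing Eqs. (7) and (11), the optimal loss function in the maximum likelihood sense is

$$c(x, y, f(x)) = -\log P\big(y - f(x)\big), \tag{12}$$

i.e. the loss function $c(\xi)$ is the negative log-likelihood of the noise. Additive constants, such as the logarithm of the normalization factor of $P$, do not change the minimizer of Eq. (7) and can be dropped.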
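The equivalence between maximizing the posterior of Eqs. (9) and (10) and minimizing Eq. (11) can be checked numerically. The Python sketch below assumes a zero-mean Gaussian noise density, a one-parameter model $f(x) = wx$, and a hypothetical penalty $\Phi = \tfrac12 w^2$; the toy data and all function names are illustrative, not taken from the paper.

```python
import math
from typing import Callable, Sequence

Density = Callable[[float], float]


def gaussian_density(xi: float, sigma: float = 1.0) -> float:
    """Zero-mean Gaussian density with standard deviation sigma (assumed noise model)."""
    return math.exp(-xi * xi / (2 * sigma**2)) / (math.sqrt(2 * math.pi) * sigma)


def neg_log_posterior(residuals: Sequence[float], density: Density, lam: float, phi: float) -> float:
    """-log[ prod_i P(xi_i) * exp(-lam * Phi[f]) ], ignoring the prior's normalizer."""
    product = math.prod(density(r) for r in residuals)  # may underflow for large l
    return -math.log(product * math.exp(-lam * phi))


def h_functional(residuals: Sequence[float], density: Density, lam: float, phi: float) -> float:
    """sum_i c(xi_i) + lam * Phi[f] with the loss c = -log P from Eq. (12)."""
    return sum(-math.log(density(r)) for r in residuals) + lam * phi


# Hypothetical toy data and candidate slopes for f(x) = w * x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 0.9, 2.2, 2.9]
lam = 0.5
candidates = [0.5, 0.8, 1.0, 1.2]


def scores(w: float) -> tuple[float, float]:
    residuals = [y - w * x for x, y in zip(xs, ys)]
    phi = 0.5 * w * w  # assumed smoothness functional
    return (neg_log_posterior(residuals, gaussian_density, lam, phi),
            h_functional(residuals, gaussian_density, lam, phi))


best_map = min(candidates, key=lambda w: scores(w)[0])
best_h = min(candidates, key=lambda w: scores(w)[1])
print(best_map, best_h)
```

With these toy numbers both criteria select $w = 1.0$. The product form underflows once $l$ is large, which is one practical reason to work with the summed loss of Eq. (11) instead.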

We assume that the noise in Eq. (8) is Gaussian with zero mean and variance $\sigma^2$. By Eq. (12), after dropping the constant $\log(\sqrt{2\pi}\,\sigma)$, the loss function corresponding to Gaussian noise is

$$c(\xi_i) = \frac{1}{2\sigma^2}\big(y_i - f(x_i)\big)^2. \tag{13}$$

If the noise in Eq. (8) follows a beta distribution with mean $\mu \in (0, 1)$ and variance $\sigma^2$, its shape parameters are $m = (1-\mu)\mu^2/\sigma^2 - \mu$ and $n = ((1-\mu)/\mu) \cdot m$, and $h = \Gamma(m+n)/(\Gamma(m)\,\Gamma(n))$ is the normalization factor (Bofinger et al., 2002). By Eq. (12), after dropping the constant $-\log h$, the loss function corresponding to beta noise is

$$c(\xi_i) = (1 - m)\log(\xi_i) + (1 - n)\log(1 - \xi_i), \quad \xi_i \in (0, 1), \tag{14}$$

where the parameters satisfy $m > 1$, $n > 1$.
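The Python sketch below, with hypothetical function names, turns a given mean $\mu$ and standard deviation $\sigma$ into the shape parameters $m, n$ defined above and evaluates Eq. (14). The log normalizer $\log h$ is computed with `math.lgamma` only to expose the constant that Eq. (14) drops.

```python
import math


def beta_shape_params(mu: float, sigma: float) -> tuple[float, float]:
    """Shape parameters (m, n) of a beta law with mean mu and standard deviation sigma."""
    if not 0.0 < mu < 1.0:
        raise ValueError("mu must lie in (0, 1)")
    m = (1 - mu) * mu**2 / sigma**2 - mu
    n = (1 - mu) / mu * m
    if m <= 1 or n <= 1:
        raise ValueError("Eq. (14) assumes m > 1 and n > 1")
    return m, n


def beta_loss(xi: float, m: float, n: float) -> float:
    """Loss of Eq. (14); defined only for xi in (0, 1)."""
    if not 0.0 < xi < 1.0:
        raise ValueError("beta noise is supported on (0, 1)")
    return (1 - m) * math.log(xi) + (1 - n) * math.log(1 - xi)


def beta_log_h(m: float, n: float) -> float:
    """log h, with h = Gamma(m + n) / (Gamma(m) * Gamma(n))."""
    return math.lgamma(m + n) - math.lgamma(m) - math.lgamma(n)


def beta_nll(xi: float, m: float, n: float) -> float:
    """Full negative log-density: Eq. (14) plus the dropped constant -log h."""
    return beta_loss(xi, m, n) - beta_log_h(m, n)


m, n = beta_shape_params(0.3, 0.1)
mode = (m - 1) / (m + n - 2)  # minimizer of Eq. (14) when m, n > 1
print(m, n, mode)
```

With $\mu = 0.3$ and $\sigma = 0.1$ this gives $m = 6$ and $n = 14$ (up to floating-point rounding), which reproduce the prescribed mean $m/(m+n) = 0.3$ and variance $mn/((m+n)^2(m+n+1)) = 0.01$; the loss is smallest at the beta mode $(m-1)/(m+n-2) = 5/18$.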

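If the noise in Eq. (8) follows a Weibull distribution with scale parameter $\theta$ and shape parameter $k$, then by Eq. (12), after dropping the constant $-\log(k/\theta)$, the loss function is

$$c(\xi_i) = \begin{cases} (1 - k)\log\dfrac{\xi_i}{\theta} + \Big(\dfrac{\xi_i}{\theta}\Big)^{k}, & \text{if } \xi_i \ge 0, \\ 0, & \text{otherwise}. \end{cases} \tag{15}$$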
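A minimal Python sketch of Eq. (15). Here `weibull_loss` and `weibull_pdf` are hypothetical names, $\theta$ is read as the scale and $k$ as the shape parameter, and the value returned at exactly $\xi = 0$ (where the logarithm is undefined) is a limiting-value convention added for this sketch, not part of the text.

```python
import math


def weibull_loss(xi: float, theta: float, k: float) -> float:
    """Loss of Eq. (15) for Weibull noise with scale theta and shape k."""
    if theta <= 0 or k <= 0:
        raise ValueError("theta and k must be positive")
    if xi < 0:
        return 0.0  # second branch of Eq. (15)
    if xi == 0:
        # (1 - k) * log(0) diverges unless k == 1; return the limit as xi -> 0+.
        return 0.0 if k == 1 else (math.inf if k > 1 else -math.inf)
    z = xi / theta
    return (1 - k) * math.log(z) + z**k


def weibull_pdf(xi: float, theta: float, k: float) -> float:
    """Weibull density for xi > 0, used only to check the dropped constant."""
    z = xi / theta
    return (k / theta) * z ** (k - 1) * math.exp(-(z**k))


theta, k = 2.0, 2.0
mode = theta * ((k - 1) / k) ** (1 / k)  # Weibull mode: minimizer of Eq. (15) for k > 1
for xi in (0.5, 1.0, mode, 2.0, 3.0):
    print(f"xi={xi:.3f}  loss={weibull_loss(xi, theta, k):.4f}")
```

For $k > 1$ the loss on $\xi > 0$ is minimized at the Weibull mode $\theta((k-1)/k)^{1/k}$, and for $k = 1$ (exponential noise) it reduces to $\xi/\theta$.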

The loss functions and the corresponding probability density functions (PDFs) of the noise models used in regression problems are listed in Table 1 and shown in Fig. 2.

3. Noise model based ν-support vector regression

Given samples $D_l$, we construct a linear regression function $f(x) = \omega^T \cdot x + b$. In order to deal with nonlinear functions the