Section: (none)

Explanation

Explanation/Reference:

QUESTION 31

You analyzed TerramEarth's business requirement to reduce downtime and found that they can achieve a majority of the time savings by reducing customers' wait time for parts. You decided to focus on reducing the 3-week aggregate reporting time.

Which modifications to the company's processes should you recommend?

A.

Migrate from CSV to binary format, migrate from FTP to SFTP transport, and develop machine learning analysis of metrics

B.

Migrate from FTP to streaming transport, migrate from CSV to binary format, and develop machine learning analysis of metrics

C.

Increase fleet cellular connectivity to 80%, migrate from FTP to streaming transport, and develop machine learning analysis of metrics

D.

Migrate from FTP to SFTP transport, develop machine learning analysis of metrics, and increase dealer local inventory by a fixed factor

Correct Answer: C

Section: (none)

Explanation

Explanation/Reference:

Explanation:

The Avro binary format is the preferred format for loading compressed data into BigQuery. Avro data is faster to load because the data can be read in parallel, even when the data blocks are compressed.

Cloud Storage supports streaming transfers with the gsutil tool or boto library, based on HTTP chunked transfer encoding. Streaming lets you send data to and from your Cloud Storage account as soon as it becomes available, without first saving it to a separate file. Streaming transfers are useful if you have a process that generates data and you do not want to buffer it locally before uploading it, or if you want to send the result from a computational pipeline directly into Cloud Storage.
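To make "HTTP chunked transfer encoding" concrete, here is a minimal Python sketch of the framing itself, as defined for HTTP/1.1: each piece of data goes out with its size in hex as soon as it is produced, so the sender never needs the total length or a saved file up front. It illustrates the wire format only and is not how gsutil or boto are implemented; `chunked_encode`, `chunked_decode`, and `telemetry_stream` are hypothetical names, and chunk extensions and trailers are not handled.

```python
from typing import Iterable, Iterator


def chunked_encode(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Frame data as HTTP/1.1 chunked transfer coding: hex size, CRLF, data, CRLF."""
    for chunk in chunks:
        if not chunk:
            continue  # a zero-size chunk would end the body early, so skip empty pieces
        yield b"%X\r\n" % len(chunk) + chunk + b"\r\n"
    yield b"0\r\n\r\n"  # last-chunk plus the empty line that terminates the body


def chunked_decode(body: bytes) -> bytes:
    """Reassemble a chunked body (chunk extensions and trailers not supported)."""
    out = bytearray()
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end], 16)
        pos = line_end + 2
        if size == 0:
            return bytes(out)
        out += body[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF


def telemetry_stream() -> Iterator[bytes]:
    """Hypothetical producer: readings appear one at a time and are never saved to a file."""
    for i in range(3):
        yield f"vehicle-42,reading-{i}\n".encode()


body = b"".join(chunked_encode(telemetry_stream()))
assert chunked_decode(body) == b"".join(telemetry_stream())
```

Because the encoder is a generator, each reading can be written to the connection the moment it exists, which is the "no local buffering" property the explanation describes.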

Reference: https://cloud.google.com/storage/docs/streaming https://cloud.google.com/bigquery/docs/loading-data
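The claim that Avro data "can be read in parallel, even when the data blocks are compressed" rests on compressing each block independently. The Python sketch below shows only that idea with `zlib` and a thread pool; it is not the Avro container format, and `write_blocks`, `read_blocks_parallel`, and the sample records are hypothetical. By contrast, a single gzip stream (for example, a gzipped CSV) must be decompressed sequentially from its beginning.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import List


def write_blocks(records: List[bytes], records_per_block: int = 2) -> List[bytes]:
    """Group records into blocks and compress each block independently.

    Simplified, hypothetical layout: records must not contain newline bytes.
    """
    blocks = []
    for start in range(0, len(records), records_per_block):
        raw = b"\n".join(records[start:start + records_per_block])
        blocks.append(zlib.compress(raw))
    return blocks


def read_blocks_parallel(blocks: List[bytes], workers: int = 4) -> List[bytes]:
    """Decompress every block concurrently; no block depends on another's state."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        decoded = list(pool.map(zlib.decompress, blocks))  # map keeps input order
    return [rec for raw in decoded for rec in raw.split(b"\n")]


records = [f"vehicle-{i},temp={70 + i}".encode() for i in range(5)]
blocks = write_blocks(records)
assert read_blocks_parallel(blocks) == records
```

Any reader can start at any block boundary, so work can be split across threads or machines without first decompressing everything before it.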

QUESTION 32

Which of TerramEarth's legacy enterprise processes will experience significant change as a result of increased Google Cloud Platform adoption?

A. Opex/capex allocation, LAN changes, capacity planning

B. Capacity planning, TCO calculations, opex/capex allocation

C. Capacity planning, utilization measurement, data center expansion

D. Data center expansion, TCO calculations, utilization measurement

Correct Answer: B

Section: (none)

Explanation

Explanation/Reference:

QUESTION 33

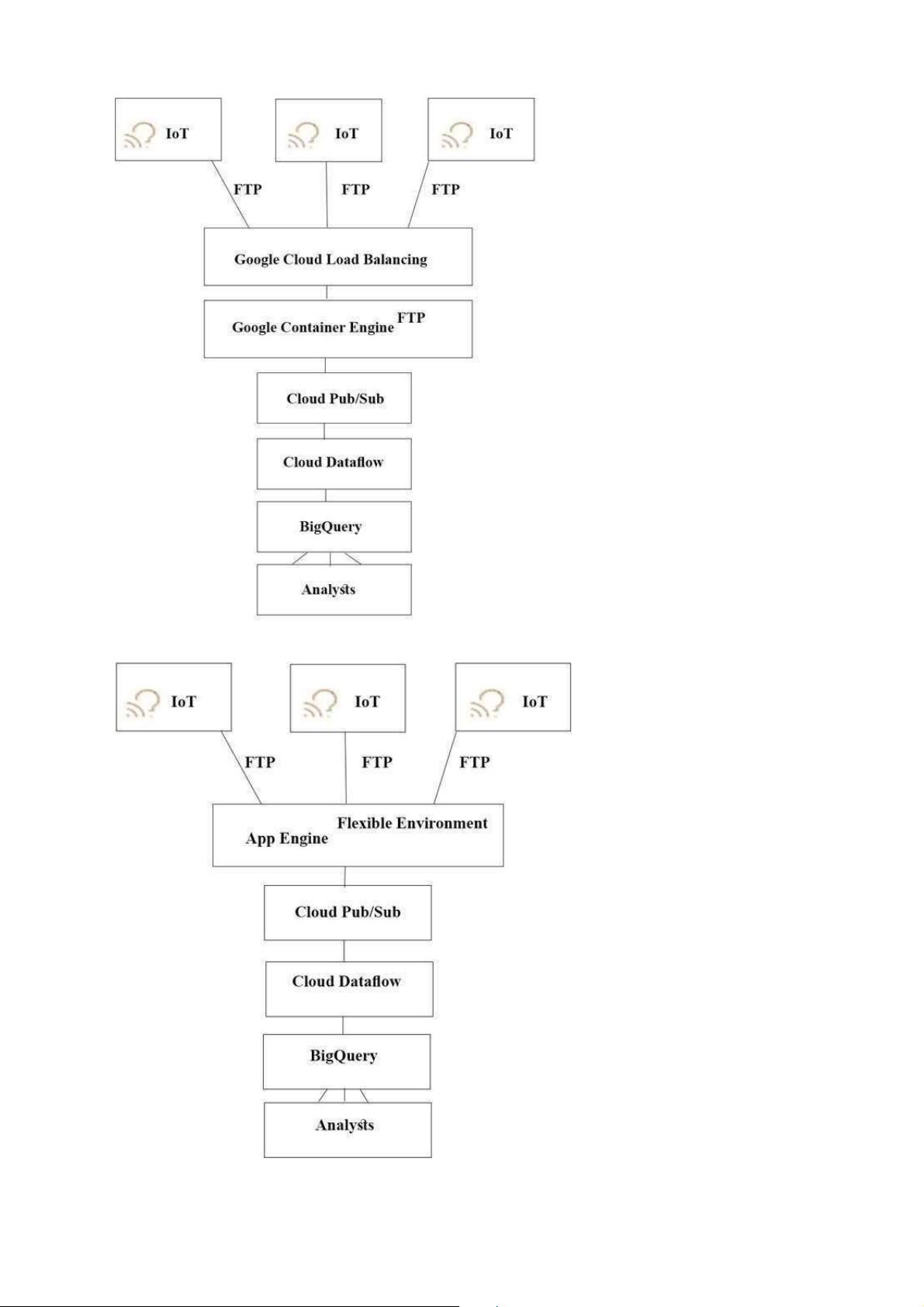

To speed up data retrieval, more vehicles will be upgraded to cellular connections and be able to transmit data to the ETL process. The current FTP process is error-prone and restarts the data transfer from the start of the file when connections fail, which happens often. You want to improve the reliability of the solution and minimize data transfer time on the cellular connections.

What should you do?

A.

Use one Google Container Engine cluster of FTP servers. Save the data to a Multi-Regional bucket. Run the ETL process using the data in the bucket.

B.

Use multiple Google Container Engine clusters running FTP servers located in different regions. Save the data to Multi-Regional buckets in the US, EU, and Asia. Run the ETL process using the data in the bucket.

C.

Directly transfer the files to different Google Cloud Multi-Regional Storage bucket locations in the US, EU, and Asia using Google APIs over HTTP(S). Run the ETL process using the data in the bucket.

D.

Directly transfer the files to different Google Cloud Regional Storage bucket locations in the US, EU, and Asia using Google APIs over HTTP(S). Run the ETL process to retrieve the data from each Regional bucket.

Correct Answer: D

Section: (none)

Explanation

Explanation/Reference:

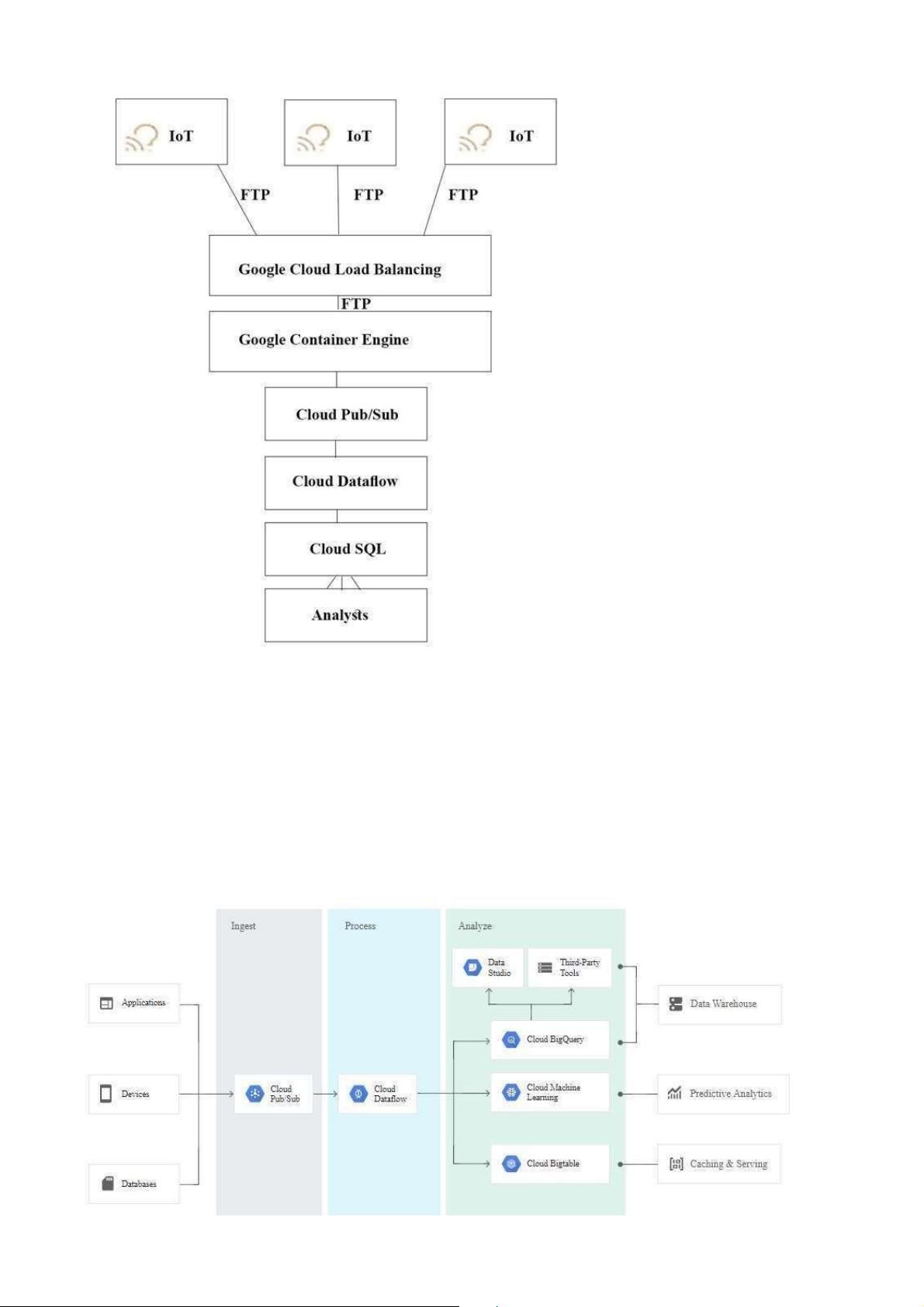
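The question turns on FTP restarting from byte zero after every dropped cellular connection, whereas Cloud Storage resumable uploads over HTTP(S) let a client continue from the last byte the server has persisted. The Python simulation below is a hypothetical sketch, not a Cloud Storage client: `bytes_sent` and the failure pattern are made up, and it simply counts bytes put on the wire under both retry policies.

```python
from typing import Sequence


def bytes_sent(size: int, failures: Sequence[int], resumable: bool) -> int:
    """Count bytes put on the wire to deliver `size` bytes over a flaky link.

    failures[k] is how many bytes attempt k gets through before the connection drops;
    once the listed failures are used up, the next attempt runs to completion.
    """
    sent = 0
    offset = 0  # bytes the receiver has kept so far
    for got_through in failures:
        remaining = size - offset
        delivered = min(got_through, remaining)
        sent += delivered
        if delivered == remaining:
            return sent  # this attempt finished before the drop
        # Resumable: continue from what the receiver kept. Otherwise restart from byte 0.
        offset = offset + delivered if resumable else 0
    return sent + (size - offset)


# Hypothetical 1,000-byte file whose link drops after 600 bytes on three attempts.
restart = bytes_sent(1000, [600, 600, 600], resumable=False)  # 2800
resume = bytes_sent(1000, [600, 600, 600], resumable=True)    # 1000
```

Under this made-up pattern, restarting moves 2,800 bytes against 1,000 for resuming, and the gap widens as files grow and drops become more frequent, which is the reliability and transfer-time concern the question describes.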

QUESTION 34

TerramEarth's 20 million vehicles are scattered around the world. Based on each vehicle's location, its telemetry data is stored in a Google Cloud Storage (GCS) regional bucket (US, Europe, or Asia). The CTO has asked you to run a report on the raw telemetry data to determine why vehicles are breaking down after 100K miles.

You want to run this job on all the data.

What is the most cost-effective way to run this job?

A.

Move all the data into 1 zone, then launch a Cloud Dataproc cluster to run the job

B.

Move all the data into 1 region, then launch a Google Cloud Dataproc cluster to run the job

C.

Launch a cluster in each region to preprocess and compress the raw data, then move the data into a multi-region bucket and use a Dataproc cluster to