2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM)

978-1-5090-1610-5/16/$31.00 ©2016 IEEE

Sparse Canonical Correlation Analysis via Truncated $\ell_1$-norm with Application to Brain Imaging Genetics

Lei Du∗, Tuo Zhang∗, Kefei Liu†, Xiaohui Yao†, Jingwen Yan†, Shannon L. Risacher†, Lei Guo∗, Andrew J. Saykin† and Li Shen†§, for the ADNI

∗School of Automation, Northwestern Polytechnical University, Xi’an, China 710072
Email: dulei@nwpu.edu.cn

†Indiana University School of Medicine, Indianapolis, USA 46202

§Corresponding author. Email: shenli@iu.edu

Abstract—Discovering bi-multivariate associations between genetic markers and neuroimaging quantitative traits is a major task in brain imaging genetics. Sparse Canonical Correlation Analysis (SCCA) is a popular technique in this area because it can identify bi-multivariate relationships while performing feature selection. Existing SCCA methods impose either the $\ell_1$-norm or its variants. The $\ell_0$-norm is more desirable but remains unexplored, since $\ell_0$-norm minimization is NP-hard. In this paper, we impose the truncated $\ell_1$-norm to improve the performance of $\ell_1$-norm based SCCA methods. We also propose two efficient optimization algorithms and prove their convergence. The experimental results, compared with two benchmark methods, show that our method identifies better and more meaningful canonical loading patterns in both simulated and real imaging genetic analyses.

Keywords-Sparse Canonical Correlation Analysis, Truncated $\ell_1$-norm, Brain Imaging Genetics

I. INTRODUCTION

Brain imaging genetics has gained increasing attention recently [1], [2]. A major task of imaging genetics is to identify bi-multivariate associations between single nucleotide polymorphisms (SNPs) and imaging quantitative traits (QTs). Sparse canonical correlation analysis (SCCA), which discovers bi-multivariate relationships while performing feature selection, has become a popular technique in imaging genetic studies [3], [4], [5], [6], [7].

Witten et al. [3] introduced the $\ell_1$-norm (Lasso) to ensure sparsity, so that only a small proportion of the features is selected. Since then, many SCCA methods using the $\ell_1$-norm or its variants have been proposed [8]. There are two major concerns regarding them. First, the $\ell_0$-norm, which penalizes only the nonzero features, is the ideal constraint, but it is neither convex nor continuous [9]. Second, the $\ell_1$-norm constraint is not a stable feature selector and thus could incur estimation bias [10].

To overcome these problems, the truncated $\ell_1$-norm penalty (TLP) [10], [11] has been proposed. The TLP is defined as $J_\tau(|x|) = \min(|x|/\tau, 1)$, with $\tau$ being a positive tuning parameter. It approximates the $\ell_0$-norm and permits desirable sparsity. In addition, the TLP can be equivalently transformed into a piecewise linear function, and thus is easy to handle.
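As a short worked illustration of the piecewise linear structure (a sketch under our own reading, not necessarily the exact reformulation used in [10], [11]), $J_\tau$ can be written as the difference of two convex piecewise linear functions, and it tends to the $\ell_0$ indicator as $\tau$ shrinks:

```latex
\begin{align*}
J_\tau(|x|) &= \min\!\left(\frac{|x|}{\tau},\, 1\right)
             = \frac{|x|}{\tau} - \max\!\left(\frac{|x|}{\tau} - 1,\; 0\right), \\
J_\tau(|x|) &=
  \begin{cases}
    |x|/\tau, & |x| \le \tau, \\
    1,        & |x| > \tau,
  \end{cases}
\qquad
\lim_{\tau \to 0^{+}} J_\tau(|x|) =
  \begin{cases}
    0, & x = 0, \\
    1, & x \neq 0.
  \end{cases}
\end{align*}
```

For $|x| \le \tau$ the second term vanishes and the penalty behaves like a scaled $\ell_1$ term; for $|x| > \tau$ the two terms differ by exactly 1, so large coefficients receive a constant penalty, as under the $\ell_0$-norm.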

In this paper, we propose the TLP based SCCA (TLP-SCCA), which incorporates the TLP into the CCA model. The TLP-SCCA has the following advantages [10]. First, the TLP acts as a tradeoff between the $\ell_0$ and $\ell_1$ functions. This means that it not only improves feature selection but can also be solved effectively. Second, it is an adaptive shrinkage method if $\tau$ is tuned appropriately. We propose two effective optimization algorithms, both using the alternating direction method of multipliers (ADMM) technique [12], and they are guaranteed to converge. The experimental results, compared with two popular $\ell_1$-norm based SCCA methods [3], [6], show that both TLP-SCCA algorithms exhibit cleaner canonical loading patterns than the $\ell_1$-SCCA methods.

II. THE TRUNCATED $\ell_1$-NORM PENALTY

In this paper, a boldface lowercase letter denotes a vector, and a boldface uppercase letter denotes a matrix. $\mathbf{X} \in \mathbb{R}^{n \times p}$ denotes the SNP data, and $\mathbf{Y} \in \mathbb{R}^{n \times q}$ denotes the QT data.

The truncated $\ell_1$-norm is defined as follows [13]:

$$P_{\mathrm{TLP}}(\mathbf{u}) = \sum_i J_\tau(|u_i|), \quad \text{where } J_\tau(|u_i|) = \min\left(\frac{|u_i|}{\tau},\, 1\right). \tag{1}$$
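To make the definition in (1) concrete, the following minimal Python sketch evaluates the TLP of a vector and compares it with the $\ell_0$- and $\ell_1$-norms. The function names (`tlp_penalty`, `l0_norm`, `l1_norm`) and the example vector are illustrative assumptions, not part of the original method or its released code.

```python
import numpy as np


def tlp_penalty(u, tau):
    """Truncated l1-norm penalty of Eq. (1): sum_i min(|u_i| / tau, 1).

    Hypothetical helper for illustration; tau must be a positive threshold.
    """
    if tau <= 0:
        raise ValueError("tau must be positive")
    u = np.asarray(u, dtype=float)
    # Entries with |u_i| <= tau contribute |u_i| / tau (l1-like);
    # entries with |u_i| > tau contribute exactly 1 (l0-like).
    return float(np.minimum(np.abs(u) / tau, 1.0).sum())


def l0_norm(u):
    """Number of nonzero entries."""
    return int(np.count_nonzero(u))


def l1_norm(u):
    """Sum of absolute values."""
    return float(np.abs(np.asarray(u, dtype=float)).sum())


if __name__ == "__main__":
    u = np.array([0.0, 0.05, -0.5, 2.0])  # illustrative loading vector
    for tau in (1e-3, 0.1, 2.0):
        print(f"tau={tau}: TLP={tlp_penalty(u, tau)}")
    print(f"l0={l0_norm(u)}, l1={l1_norm(u)}")
```

With a very small $\tau$ every nonzero entry is truncated to 1, so the TLP coincides with the $\ell_0$ count; once $\tau$ is at least as large as the largest $|u_i|$, no entry is truncated and the TLP equals the $\ell_1$-norm divided by $\tau$.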


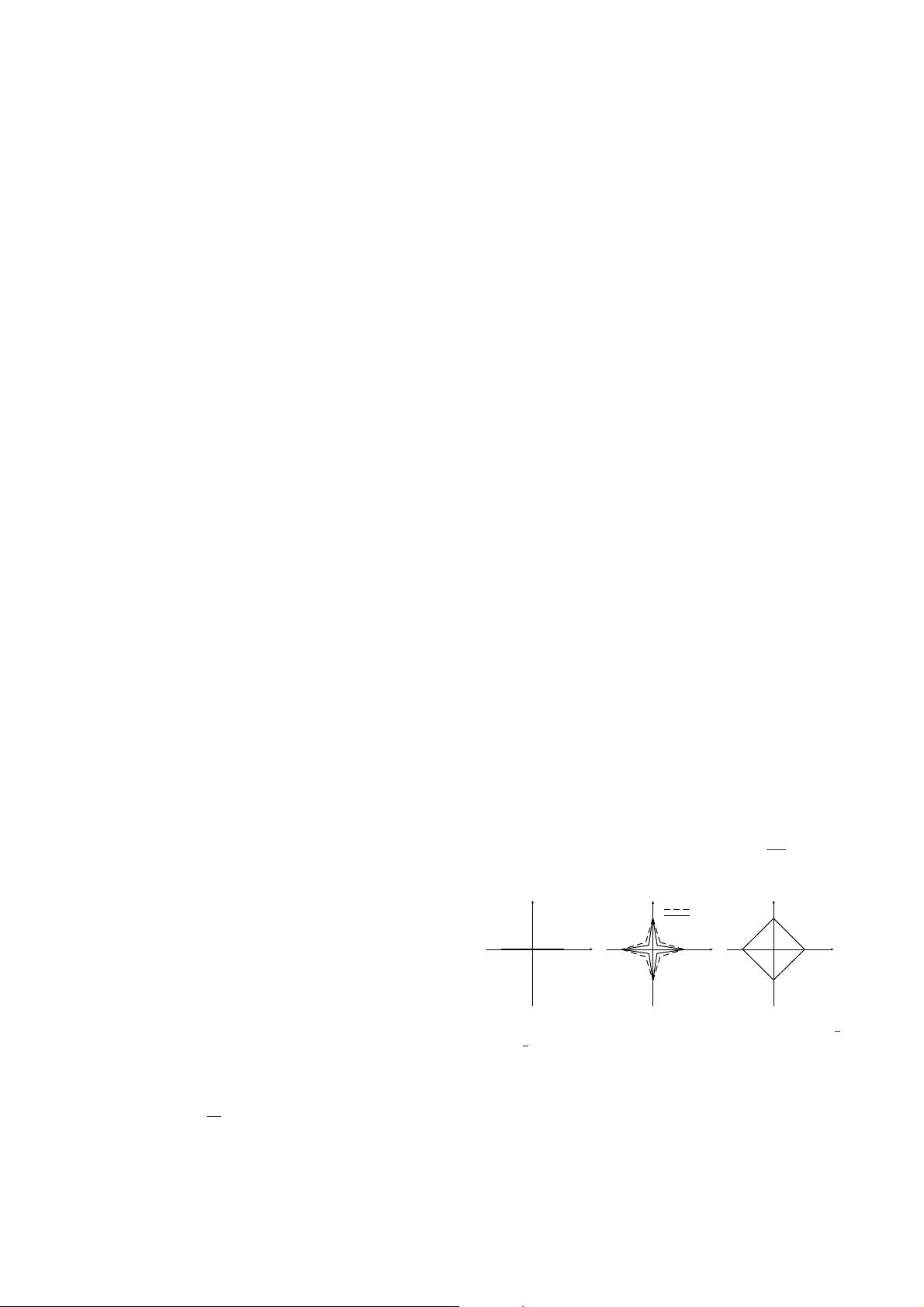

Figure 1. Visualization of the $\ell_0$-norm ball (left), TLP ball with $\tau = 1/4$ and $\tau = 1/6$ (middle), and $\ell_1$-norm ball (right).

The parameter $\tau$ is a threshold. Given an appropriate $\tau$, TLP balances between the $\ell_0$-norm and the $\ell_1$-norm according to the magnitude of the coefficients. Fig. 1 presents the norm balls of the $\ell_0$-norm, the $\ell_1$-norm, and the TLP with different values of $\tau$. The