Hyper-Heuristics with Low Level Parameter Adaptation

Adaptive. In this approach, the values of the parameters are maintained based

on the feedback information collected from the search procedure, which may

involve the quality of the solution, the diversity of the population, and so on.

Thus, this approach can also be considered similar to perturbative LLH selection-

based hyper-heuristics, as well as DMAB-based operator selection (DaCosta et al.,

2008), since all these approaches are based on the communication of the feedback

information. For example, the classical 1/5 rule in evolution strategy (ES) is an

adaptive parameter control approach (Rechenberg, 1973). Y. Li and W. Li (2007)

developed an adaptive ant-based algorithm, in which the parameters α and β are

adaptively adjusted based on entropy calculation.

Self-Adaptive. Instead of interacting with the search procedure according to

some feedback information, in self-adaptive approaches, the parameters are en-

coded into the solution, and get optimized along with the exploration of the so-

lution space. One example is the σ -self-adaptation in ES investigated by Hansen

(2006). Serpell and Smith (2010) discussed the self-adaptation of the mutation op-

erator for the permutation representations. See Meyer-Nieberg and Beyer (2007)

for a survey of self-adaptive parameter control.

This concludes the introduction of both hyper-heuristics and parameter setting

techniques. Interestingly, we observe that these two research directions have much in

common. From the perspective of motivations, both directions aim at preventing the

algorithms from being instance and/or problem dependent. From the perspective of

methodologies, these two directions can also be considered relevant, especially when

we compare perturbative LLH selection-based hyper-heuristics and adaptive param-

eter control approaches. Thus, in this paper, we intend to integrate perturbative LLH

selection-based hyper-heuristics and adaptive parameter control into a unified frame-

work, so as to improve the generality and the robustness of hyper-heuristics.

3 Hyper-Heuristics with Low Level Parameter Adaptation

As mentioned in Section 1, static LLP configurations may suffer from a series of prob-

lems, such as the training overhead, as well as the generality issue. As a solution, we

intend to propose a general framework that incorporates the LLP adaptation.

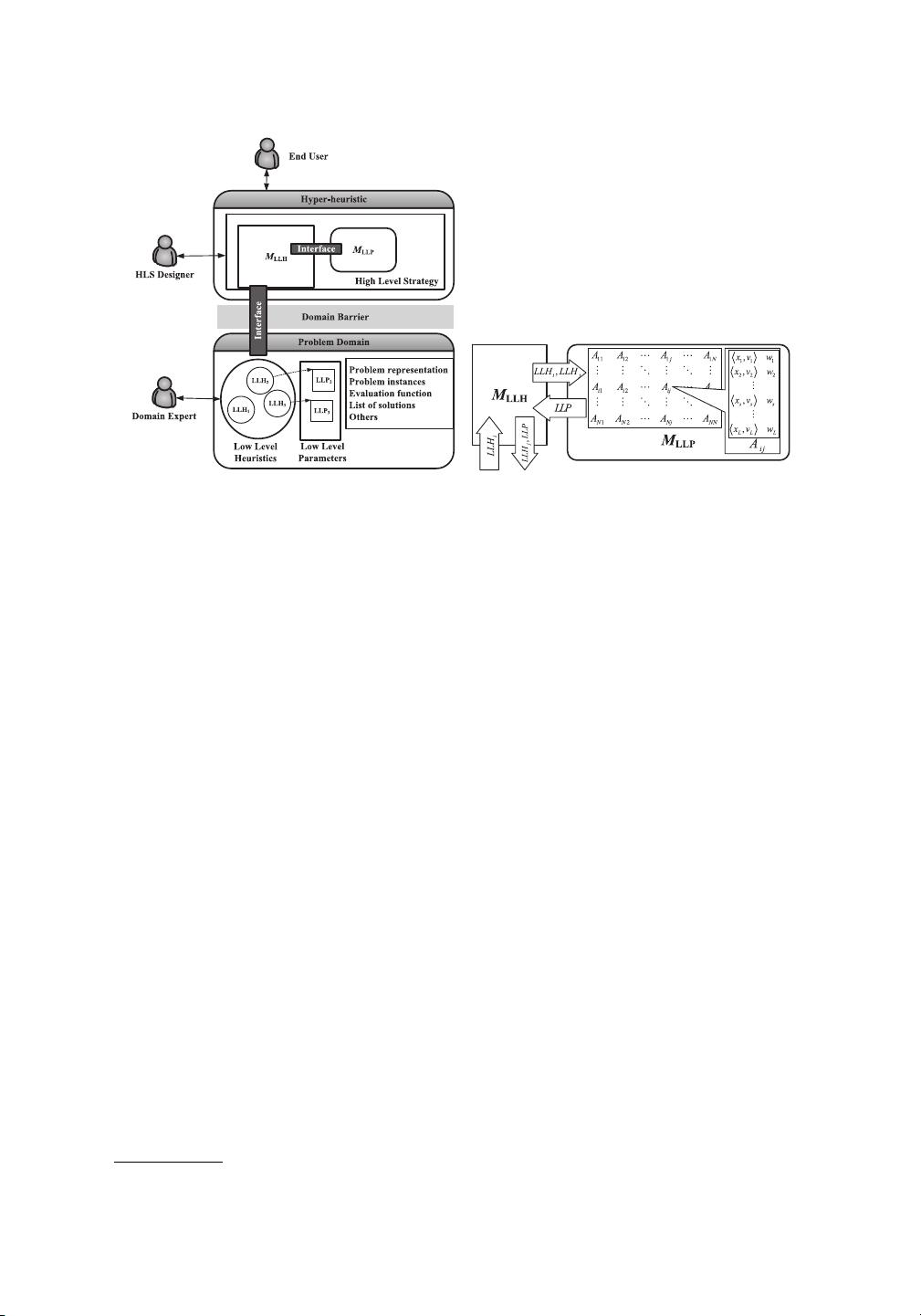

The main idea of AD-HH is simple. In the framework, the HLS is further decom-

posed into two modules, the LLH management module (denoted as M

LLH

), and the

LLP management module (denoted as M

LLP

). On one hand, M

LLH

selects, applies, and

evaluates the LLHs in a similar way as most of the existing hyper-heuristics. On the

other hand, M

LLP

is responsible for the selection and evaluation of LLPs. Figure 2(a)

describes the framework diagram, in which the two modules communicate with each

other so as to pass the values of LLPs, the feedback information about the quality of

the applied LLPs, and so on. Note that since the maintenance of the LLPs is achieved

through the information exchange between M

LLH

and M

LLP

, the LLP adaptation in this

study should be classified as an adaptive parameter control approach (see Section 2).

More specifically, our framework consists of the following components: H, Q,

S, M

LLH

,andM

LLP

. Among these components, H ={LLH

1

, LLH

2

,...,LLH

N

} in-

dicates the set of LLHs, where N is the number of the LLHs, Q ={q

1

,q

2

,...,q

num

} is

a population of num LLH sequences. In the population, each sequence is defined as

q

i

=q

1

i

,q

2

i

,...,q

len

i

, where q

j

i

∈ H is the jth LLH of the ith sequence in Q,andlen

Evolutionary Computation Volume 20, Number 2 195