990 IEEE TRANSACTIONS ON NEURAL NETWORKS, VOL. 10, NO. 5, SEPTEMBER 1999

Equation (9) shows that solutions found using ERM

converge to the best possible one. Equation (10) shows

that values of empirical risk converge to the value of

the smallest risk.

2) How fast does the sequence of smallest empirical risk

values converge to the smallest actual risk? In other

words what is the rate of generalization of a learning

machine that implements the empirical risk minimization

principle?

3) How can one control the rate of convergence (the rate of

generalization) of the learning machine?

4) How can one construct algorithms that can control the

rate of generalization?

The answers to these questions form the four parts of

learning theory:

1) the theory of consistency of learning processes;

2) the nonasymptotic theory of the rate of convergence of

learning processes;

3) the theory of controlling the generalization of learning

processes;

4) the theory of constructing learning algorithms.

II. T

HE THEORY OF CONSISTENCY OF LEARNING PROCESSES

The theory of consistency is an asymptotic theory. It de-

scribes the necessary and sufficient conditions for convergence

of the solutions obtained using the proposed method to the

best possible as the number of observations is increased. The

question arises:

Why do we need a theory of consistency if our goal is to

construct algorithms for a small (finite) sample size?

The answer is:

We need a theory of consistency because it provides not

only sufficient but necessary conditions for convergence of

the empirical risk minimization inductive principle. Therefore

any theory of the empirical risk minimization principle must

satisfy the necessary and sufficient conditions.

In this section, we introduce the main capacity concept (the

so-called Vapnik–Cervonenkis (VC) entropy which defines

the generalization ability of the ERM principle. In the next

sections we show that the nonasymptotic theory of learning is

based on different types of bounds that evaluate this concept

for a fixed amount of observations.

A. The Key Theorem of the Learning Theory

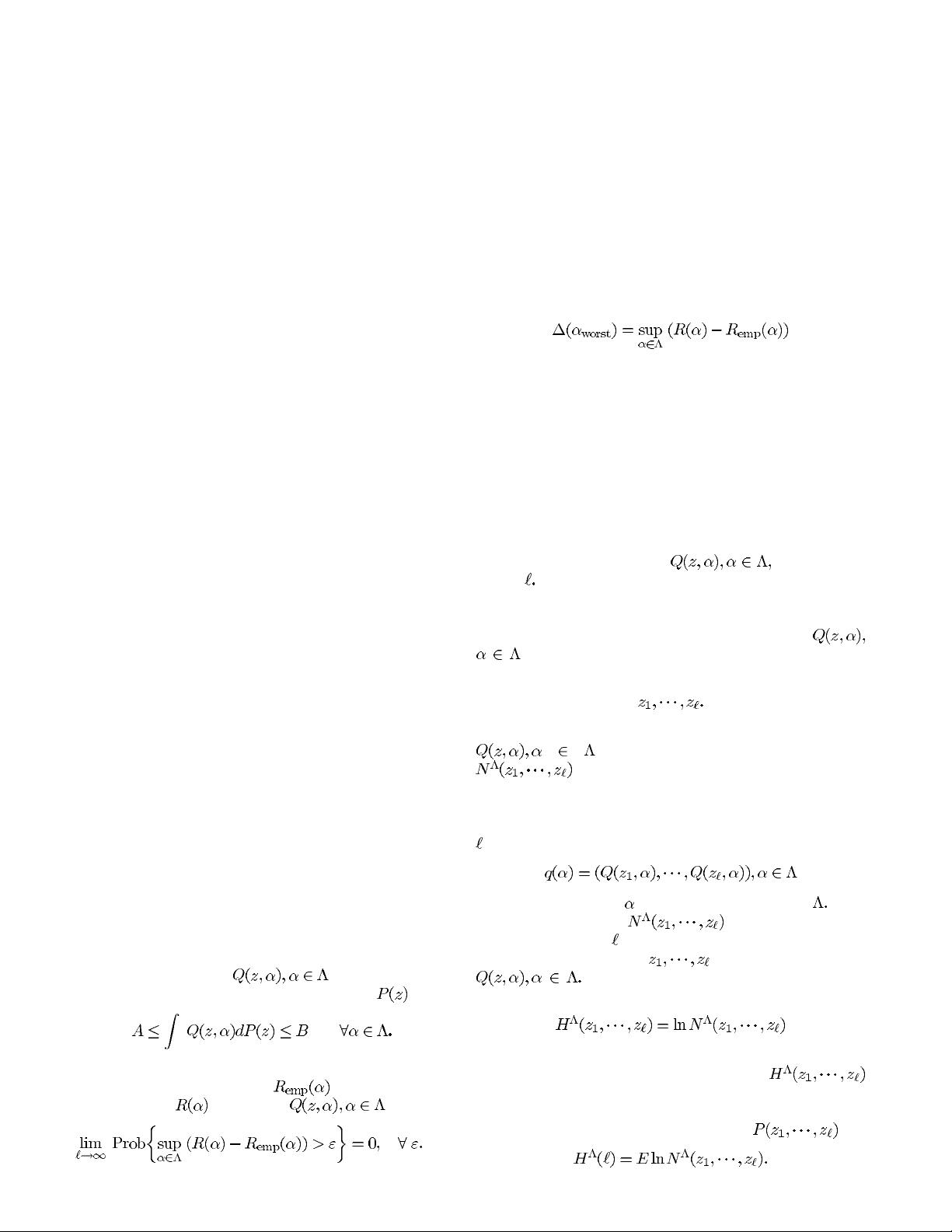

The key theorem of the theory concerning the ERM-based

learning processesis the following [27].

The Key Theorem: Let

be a set of functions

that has a bounded loss for probability measure

Then for the ERM principle to be consistent it is necessary and

sufficient that the empirical risk

converge uniformly

to the actual risk

over the set as follows:

(11)

This type of convergence is called uniform one-sided conver-

gence.

In other words, according to the Key theorem the conditions

for consistency of the ERM principle are equivalent to the

conditions for existence of uniform one-sided convergence

(11).

This theorem is called the Key theorem because it asserts

that any analysis of the convergence properties of the ERM

principle must be a worst case analysis. The necessary condi-

tion for consistency (not only the sufficient condition) depends

on whether or not the deviation for the worst function over

the given set of of functions

converges in probability to zero.

From this theorem it follows that the analysis of the ERM

principle requires an analysis of the properties of uniform

convergence of the expectations to their probabilities over the

given set of functions.

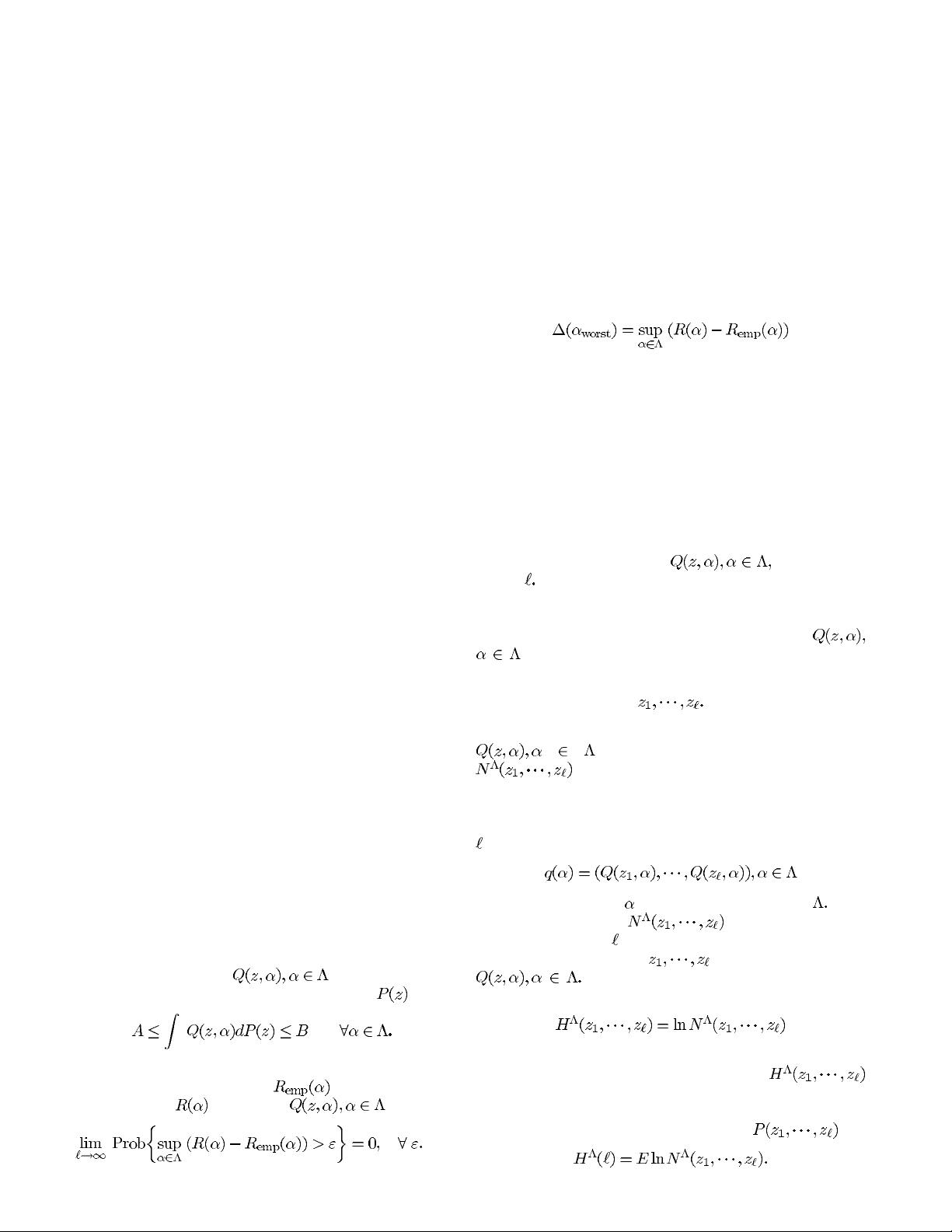

B. The Necessary and Sufficient Conditions

for Uniform Convergence

To describe the necessary and sufficient condition for uni-

form convergence (11), we introduce a concept called the

entropy of the set of functions

on the sample

of size

We introduce this concept in two steps: first for sets of

indicator functions and then for sets of real-valued functions.

Entropy of the Set of Indicator Functions: Let

be a set of indicator functions, that is the functions

which take on only the values zero or one. Consider a sample

(12)

Let us characterize the diversity of this set of functions

on the given sample by a quantity

that represents the number of different

separations of this sample that can be obtained using functions

from the given set of indicator functions.

Let us write this in another form. Consider the set of

-dimensional binary vectors

that one obtains when takes various values from Then

geometrically speaking

is the number of dif-

ferent vertices of the

-dimensional cube that can be obtained

on the basis of the sample

and the set of functions

Let us call the value

the random entropy. The random entropy describes the diver-

sity of the set of functions on the given data.

is a random variable since it was constructed using random

i.i.d. data. Now we consider the expectation of the random

entropy over the joint distribution function