[Figure 1 diagram. Components: Input Video Stream, Backbone & Encoder, Detection Decoder, Transformer Joint Decoder, Temporal Interaction Module. Arrow legend: copy for newborn targets; update for subsequent; normal input. Symbol legend: learnable detect query; track and detect embedding; long-term memory; output embedding.]
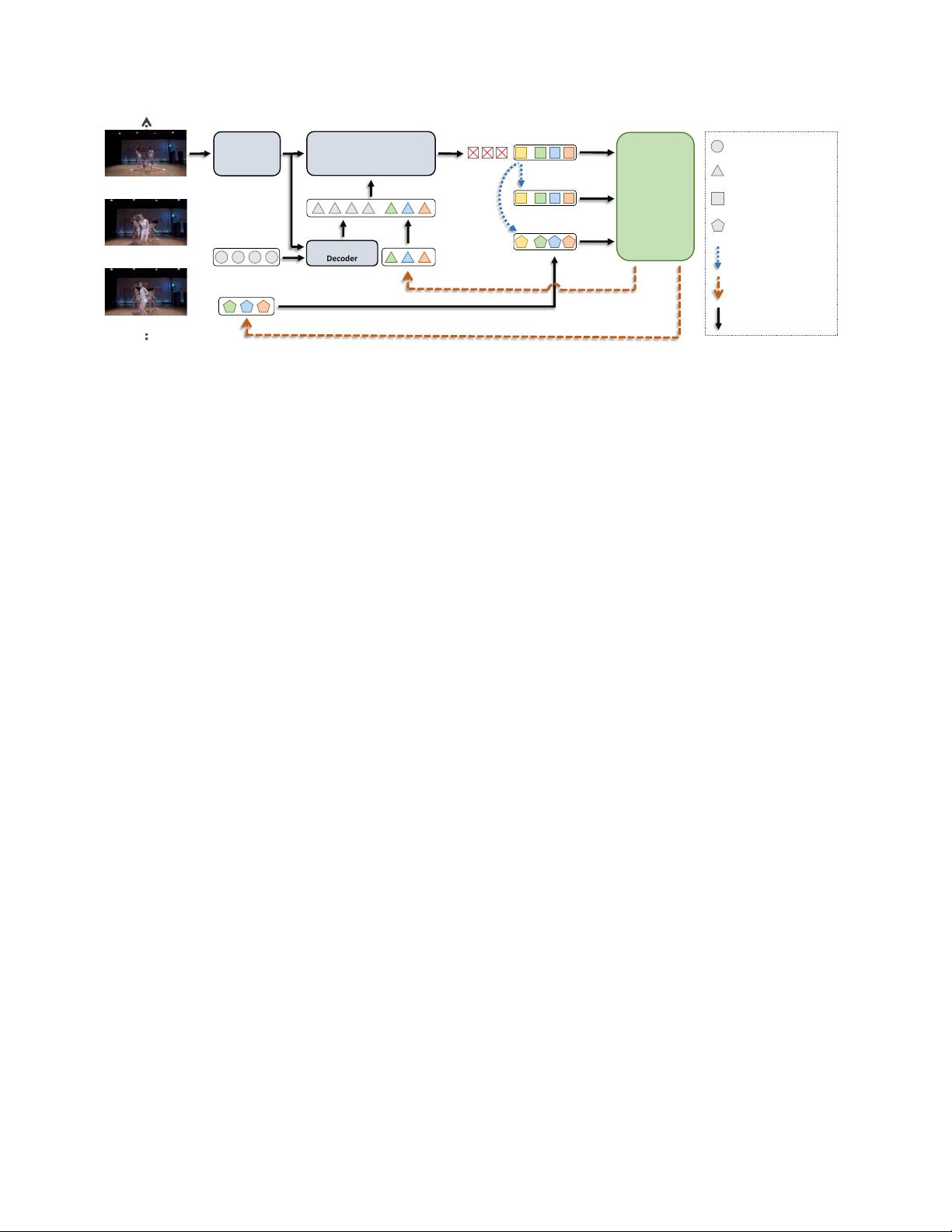

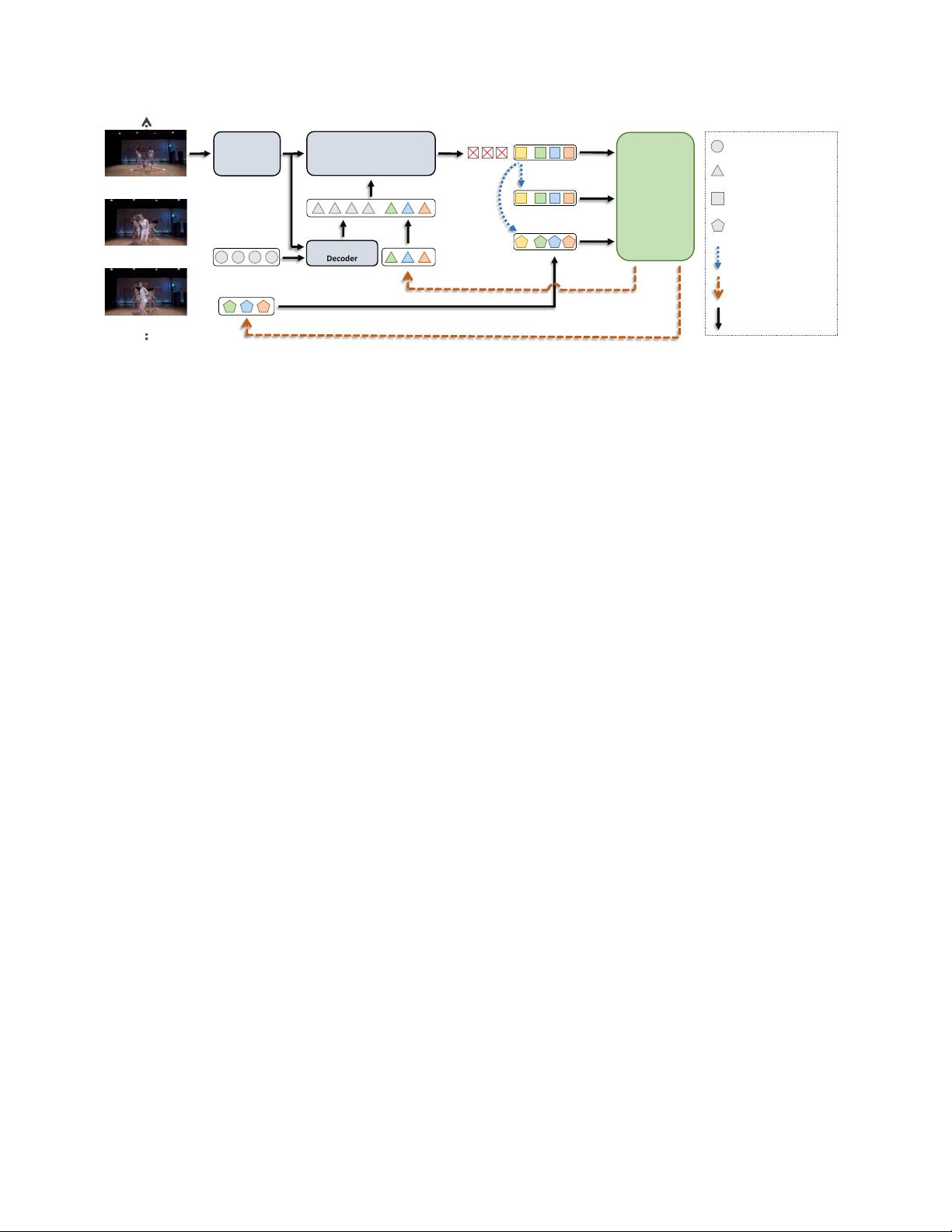

Figure 1. Overview of MeMOTR. Like most DETR-based [6] methods, we exploit a ResNet-50 [15] backbone and a Transformer [32] Encoder to learn a 2D representation of an input image. We use different colors to indicate different tracked targets, and the learnable detect query $Q_{det}$ is illustrated in gray. Then the Detection Decoder $D_{det}$ processes the detect query to generate the detect embedding $E^{t}_{det}$, which aligns with the track embedding $E^{t}_{tck}$ from previous frames. Long-term memory is denoted as $M^{t}_{tck}$. The initialization process in the blue dotted arrow will be applied to newborn objects. Our Long-Term Memory and Temporal Interaction Module are discussed in Sections 3.3 and 3.4. More details are illustrated in Figure 2.

ing the encoded image feature with $[E^{t}_{det}, E^{t}_{tck}]$, the Transformer Joint Decoder $D_{joint}$ produces the corresponding output $[\hat{O}^{t}_{det}, \hat{O}^{t}_{tck}]$. For simplicity, we merge the newborn objects in $\hat{O}^{t}_{det}$ (yellow box) with tracked objects’ output $\hat{O}^{t}_{tck}$, denoted by $O^{t}_{tck}$. Afterward, we predict the classification confidence $c^{t}_{i}$ and bounding box $b^{t}_{i}$ corresponding to the $i$-th target from the output embeddings. Finally, we feed the output from adjacent frames $[O^{t}_{tck}, O^{t-1}_{tck}]$ and the long-term memory $M^{t}_{tck}$ into the Temporal Interaction Module, updating the subsequent track embedding $E^{t+1}_{tck}$ and long-term memory $M^{t+1}_{tck}$. The details of our components will be elaborated in the following sections.

3.2. Detection Decoder

In previous Transformer-based methods [22, 43], the learnable detect query and the previous track query are jointly input to the Transformer Decoder from scratch. This simple idea extends the end-to-end detection Transformer [6] to multi-object tracking. Nonetheless, we argue that this design may cause misalignment between detect and track queries. As discussed in numerous works [6, 20], the learnable object query in the DETR family plays a role similar to a learnable anchor with little semantic information. On the other hand, track queries have specific semantic knowledge to resolve their category and bounding boxes since they are generated from the output of previous frames.

Therefore, as illustrated in Figure 1, we split the original Transformer Decoder into two parts. The first decoder layer is used for detection, and the remaining five layers are used for joint detection and tracking. These two decoders have the same structure but different inputs. The Detection Decoder $D_{det}$ takes the original learnable detect query $Q_{det}$ as input and generates the corresponding detect embedding $E^{t}_{det}$, carrying enough semantic information to locate and classify the target roughly. After that, we concatenate the detect and track embedding together and feed them into the Joint Decoder $D_{joint}$.
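The split described above can be sketched in a few lines of Python. This is a minimal structural illustration, not the authors' implementation: `make_layer` is a hypothetical stand-in for a real decoder layer (it only scales each query instead of applying attention over the encoded image feature), and the names `run_layers` and `split_decoder_forward` are assumptions introduced here. The point is only the data flow: the first layer sees the detect query alone, and the remaining layers see the concatenation of detect and track embeddings.

```python
from typing import Callable, List, Sequence, Tuple

Embedding = List[float]
Layer = Callable[[List[Embedding]], List[Embedding]]


def make_layer(scale: float) -> Layer:
    """Hypothetical stand-in for one Transformer decoder layer.

    A real layer would apply self-attention, cross-attention to the encoded
    image feature, and a feed-forward network. Here each query is only scaled,
    which is enough to trace which queries passed through which layers.
    """
    def layer(queries: List[Embedding]) -> List[Embedding]:
        return [[scale * x for x in q] for q in queries]
    return layer


def run_layers(layers: Sequence[Layer], queries: List[Embedding]) -> List[Embedding]:
    """Apply the given layers in order to a list of query embeddings."""
    for layer in layers:
        queries = layer(queries)
    return queries


def split_decoder_forward(
    layers: Sequence[Layer],
    detect_query: List[Embedding],
    track_embedding: List[Embedding],
) -> Tuple[List[Embedding], List[Embedding]]:
    """Run a decoder split as: first layer = detection, remaining layers = joint."""
    detection_decoder, joint_decoder = layers[:1], layers[1:]
    # Detection Decoder: only the learnable detect query is processed.
    detect_embedding = run_layers(detection_decoder, detect_query)
    # Joint Decoder: detect and track embeddings are concatenated along the
    # query dimension and processed together.
    joint_input = detect_embedding + track_embedding
    joint_output = run_layers(joint_decoder, joint_input)
    n_det = len(detect_embedding)
    # Split the joint output back into its detect and track parts.
    return joint_output[:n_det], joint_output[n_det:]
```

With six layers, `layers[:1]` plays the role of the Detection Decoder and `layers[1:]` the five-layer Joint Decoder, so track embeddings never pass through the first layer.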

3.3. Long-Term Memory

Unlike previous methods [17, 43] that only exploit adjacent frames’ information, we explicitly introduce a long-term memory $M^{t}_{tck}$ to maintain longer temporal information for tracked targets. When a newborn object is detected, we initialize its long-term memory with the current output.

It should be noted that in a video stream, objects only have minor deformation and movement in consecutive frames. Thus, we suppose the semantic feature of a tracked object changes only slightly in a short time. In the same way, our long-term memory should also update smoothly over time. Inspired by [29], we apply a simple but effective running average with exponentially decaying weights to update long-term memory $M^{t}_{tck}$:

$$\widetilde{M}^{t+1}_{tck} = (1 - \lambda) M^{t}_{tck} + \lambda \cdot O^{t}_{tck}, \tag{1}$$

where $\widetilde{M}^{t+1}_{tck}$ is the new long-term memory for the next frame. The memory update rate $\lambda$ is experimentally set to 0.01, following the assumption that the memory changes smoothly and consistently in consecutive frames. We also evaluate other values in Table 7.
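The update in Equation (1), together with the initialization rule for newborn objects, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: embeddings are plain Python lists, the memory is kept in a dictionary keyed by a hypothetical integer track ID, and a target with no output in the current frame simply keeps its old memory (the text above does not specify that case).

```python
from typing import Dict, List

LAMBDA = 0.01  # memory update rate reported in the text


def update_memory(memory: List[float], output: List[float],
                  lam: float = LAMBDA) -> List[float]:
    """Equation (1): new_memory = (1 - lam) * memory + lam * output, element-wise."""
    if len(memory) != len(output):
        raise ValueError("memory and output must have the same dimension")
    return [(1.0 - lam) * m + lam * o for m, o in zip(memory, output)]


def step_memory_bank(bank: Dict[int, List[float]],
                     outputs: Dict[int, List[float]],
                     lam: float = LAMBDA) -> Dict[int, List[float]]:
    """Advance the long-term memory of every target by one frame.

    `bank` and `outputs` map a hypothetical track ID to an embedding.
    """
    new_bank = dict(bank)  # assumption: targets without an output keep their memory
    for track_id, output in outputs.items():
        if track_id not in bank:
            # Newborn object: initialize its memory with the current output.
            new_bank[track_id] = list(output)
        else:
            new_bank[track_id] = update_memory(bank[track_id], output, lam)
    return new_bank
```

Unrolling the recursion shows that an output from $k$ frames ago contributes with weight $\lambda(1-\lambda)^{k}$. With $\lambda = 0.01$ that weight falls by a factor of roughly $1/e$ over about 100 frames, which is what makes the memory long-term.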

3.4. Temporal Interaction Module

Adaptive Aggregation for Temporal Enhancement. Issues such as blurring or occlusion are often seen in a video stream. An intuitive idea to solve this problem is using