to large parallel systems or networked clusters. It maps MPI nodes to individual processes. It is not easy to modify the current MPICH system to map each MPI node to a lightweight thread, because the low-level layers of MPICH are not thread-safe. (Even though the latest MPICH release supports the MPI-2 standard, its multithreading (MT) support level is actually MPI_THREAD_SINGLE.)
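The MT-level remark can be made concrete with a minimal Python sketch of the four thread-support levels defined by MPI-2. The standard only requires these constants to be monotonic (MPI_THREAD_SINGLE < MPI_THREAD_FUNNELED < MPI_THREAD_SERIALIZED < MPI_THREAD_MULTIPLE). The integer values below are illustrative and not those of any particular library. The helper `can_host_mpi_node_per_thread` is hypothetical and encodes one assumption: running each MPI node as a thread that calls into the library concurrently requires MPI_THREAD_MULTIPLE.

```python
from enum import IntEnum


class ThreadLevel(IntEnum):
    """MPI-2 thread-support levels; only their ordering is normative.

    The integer values are illustrative assumptions, not taken from MPICH.
    """
    MPI_THREAD_SINGLE = 0      # only one thread will execute
    MPI_THREAD_FUNNELED = 1    # only the main thread makes MPI calls
    MPI_THREAD_SERIALIZED = 2  # any thread may call MPI, but never concurrently
    MPI_THREAD_MULTIPLE = 3    # any thread may call MPI at any time


def can_host_mpi_node_per_thread(provided: ThreadLevel) -> bool:
    """Hypothetical check: can several MPI nodes live as threads of one
    process on top of a library that provides `provided`?

    Assumption: each thread acts as an independent MPI node and may call
    into the library concurrently, which only MPI_THREAD_MULTIPLE permits.
    """
    return provided >= ThreadLevel.MPI_THREAD_MULTIPLE


# The MPICH release discussed above reports MPI_THREAD_SINGLE.
print(can_host_mpi_node_per_thread(ThreadLevel.MPI_THREAD_SINGLE))    # False
print(can_host_mpi_node_per_thread(ThreadLevel.MPI_THREAD_MULTIPLE))  # True
```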

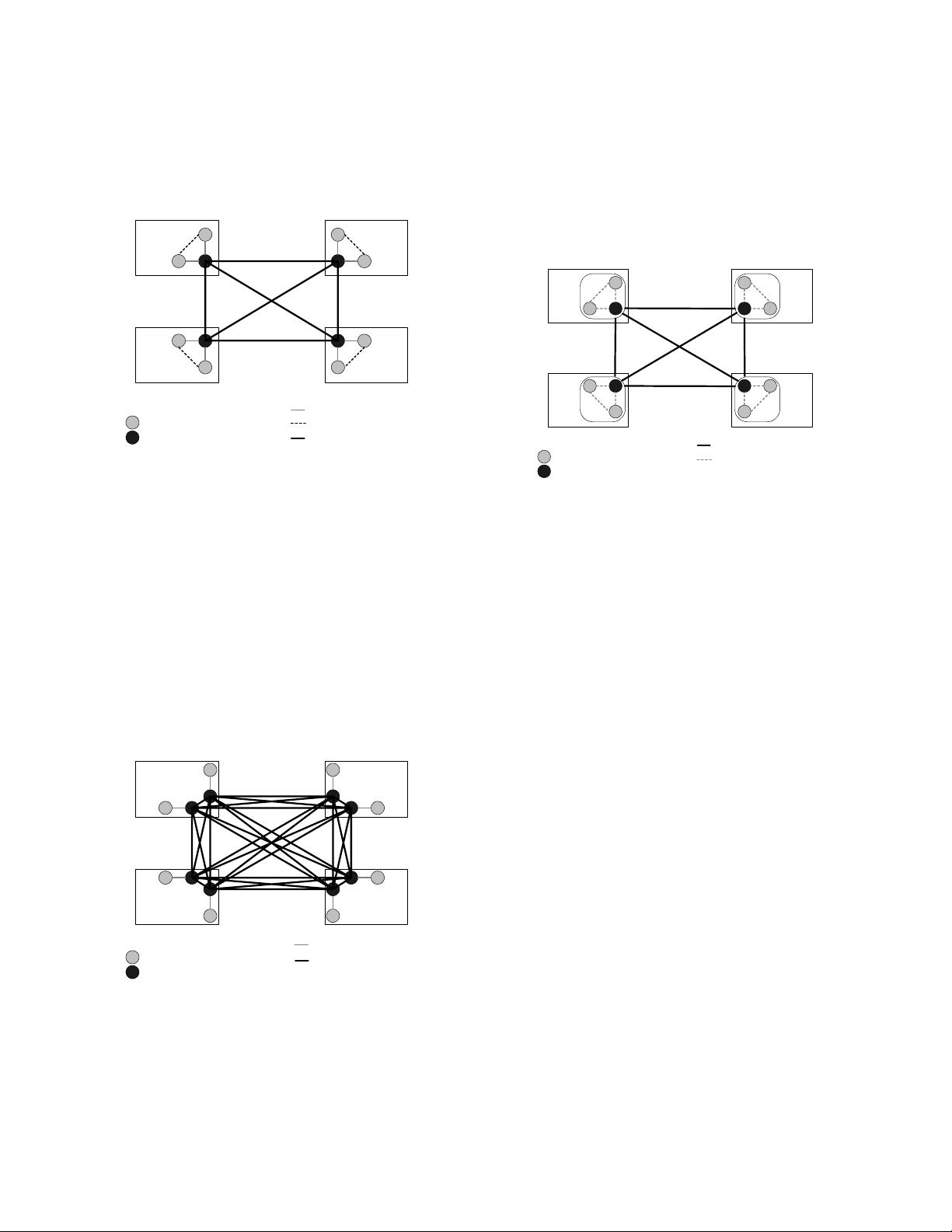

WS- A cluster node

- MPI node (process)

- MPICH daemon process

- Inter-process pipe

- Shared memory

- TCP connection

WS

WSWS

WS

Figure 2: MPICH Using a Combination of TCP and

Shared Memory.

As shown in Figure 1, the current support for SMP clusters in MPICH is basically a combination of two devices: a shared-memory device and a network device such as TCP/IP. Figure 2 shows a sample setup of such a configuration. In this example, 8 MPI nodes are evenly distributed across 4 cluster nodes. There are also 4 MPICH daemon processes, one on each cluster node, and they are fully connected with each other. The daemon processes are needed to drain messages from the TCP connections and to deliver messages across cluster-node boundaries. MPI nodes communicate with the daemon processes through standard inter-process communication mechanisms such as Unix domain sockets. MPI nodes on the same cluster node can also communicate through shared memory.
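The two routes in Figure 2 can be traced with a small Python model. This is not MPICH code. It assumes ranks are placed in consecutive pairs on the 4 cluster nodes. The names `cluster_node_of` and `route` and the hop labels are hypothetical. "IPC" stands for the domain-socket or pipe link between an MPI node and its local daemon.

```python
from itertools import combinations

NUM_CLUSTER_NODES = 4
NUM_MPI_NODES = 8


def cluster_node_of(rank: int) -> int:
    """Assumed block placement: ranks 0-1 on node 0, 2-3 on node 1, and so on."""
    per_node = NUM_MPI_NODES // NUM_CLUSTER_NODES
    return rank // per_node


def route(src: int, dst: int) -> list[str]:
    """Return the hops a message takes in the TCP + shared-memory setup."""
    src_node, dst_node = cluster_node_of(src), cluster_node_of(dst)
    if src_node == dst_node:
        # Same cluster node: the two processes use a shared-memory segment.
        return [f"rank{src}", "shared memory", f"rank{dst}"]
    # Different cluster nodes: hand off to the local daemon over IPC,
    # cross the network over TCP, then deliver via the remote daemon.
    return [
        f"rank{src}", "IPC", f"daemon{src_node}",
        "TCP", f"daemon{dst_node}", "IPC", f"rank{dst}",
    ]


# One daemon per cluster node, fully connected: n*(n-1)/2 TCP connections.
tcp_links = list(combinations(range(NUM_CLUSTER_NODES), 2))

print(route(0, 1))     # same cluster node
print(route(0, 5))     # crosses cluster nodes
print(len(tcp_links))  # 6
```

A remote message crosses two daemons and three channels, while a local message uses a single shared-memory hop.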

WS

WSWS

WS

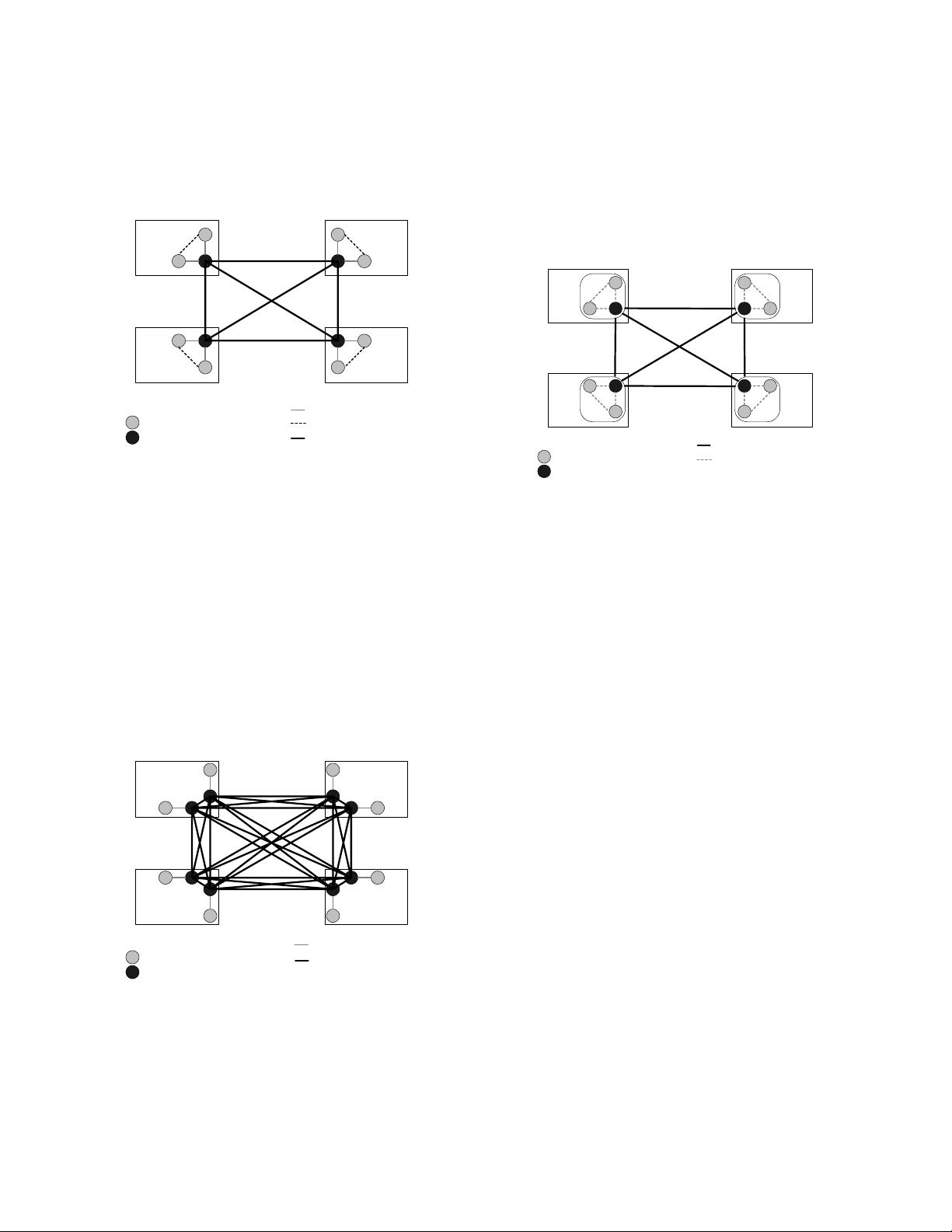

WS- A cluster node

- MPI node (process)

- MPICH daemon process

- Inter-process pipe

- TCP connection

Figure 3: MPICH Using TCP Only.

One can also configure MPICH at compile time to make it completely oblivious to the shared-memory architecture inside each cluster node and to use loopback devices to communicate among MPI nodes running on the same cluster node. In this case, the setup looks like Figure 3. In the example, we show the same number of MPI nodes with the same node distribution as in the previous configuration. The difference is that there are now 8 daemon processes, one for each MPI node. A message between MPI nodes on the same cluster node follows the same path as a message between MPI nodes on different cluster nodes, though possibly faster, since the loopback device does not traverse the physical network.
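The TCP-only configuration of Figure 3 can be modelled in a few lines of Python. This is a hypothetical sketch. `route_tcp_only` and the consecutive-pair placement of ranks are assumptions, not MPICH internals. Each rank has its own daemon, so local and remote destinations produce the same hop sequence. Only the TCP leg changes, between the loopback device and the network.

```python
NUM_CLUSTER_NODES = 4
NUM_MPI_NODES = 8


def cluster_node_of(rank: int) -> int:
    """Assumed placement: consecutive pairs of ranks share a cluster node."""
    return rank // (NUM_MPI_NODES // NUM_CLUSTER_NODES)


def route_tcp_only(src: int, dst: int) -> list[str]:
    """Hops in the TCP-only setup, where every MPI node has its own daemon."""
    same_node = cluster_node_of(src) == cluster_node_of(dst)
    # The hop sequence never changes; only the TCP leg differs, using the
    # loopback device when both ranks live on the same cluster node.
    link = "TCP (loopback)" if same_node else "TCP (network)"
    return [f"rank{src}", "IPC", f"daemon{src}", link,
            f"daemon{dst}", "IPC", f"rank{dst}"]


num_daemons = NUM_MPI_NODES  # one daemon per MPI node: 8 instead of 4

print(route_tcp_only(0, 1))  # same cluster node, via loopback
print(route_tcp_only(0, 5))  # different cluster nodes, via the network
print(num_daemons)           # 8
```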

2.3 Threaded MPI Execution on SMP Clusters

Figure 4: Communication Structure for Threaded MPI Execution. (Legend: WS = a cluster node; MPI node (thread); TMPI daemon thread; TCP connection; direct memory access and thread synchronization.)

In this section, we provide an overview of threaded MPI execution on SMP clusters and describe the potential advantages of TMPI. To aid understanding, we take the same sample program used in Figure 2 and illustrate the setup of communication channels for TMPI (or any thread-based MPI system) in Figure 4. As the figure shows, MPI nodes on the same cluster node are mapped to threads inside the same process, and one additional daemon thread is added to each process. Despite the apparent similarities to Figure 2, there are a number of differences between our design and MPICH:

1. In TMPI, the communication between MPI nodes on the same cluster node uses direct memory access instead of the shared-memory facility provided by the operating system.

2. In TMPI, the communication between the daemons and the MPI nodes also uses direct memory access instead of domain sockets.

3. Unlike a process-based design, in which all remote message sends and receives have to be delegated to the daemon processes, a thread-based MPI implementation lets every MPI node send to or receive from any remote MPI node directly.

As will be shown in later sections, these differences affect the software design and offer potential performance gains for TMPI and other thread-based MPI systems.
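Difference 1 above amounts to passing messages inside a single address space. The following Python sketch illustrates this under stated assumptions and is not TMPI's actual implementation. Two MPI nodes run as threads of one process, matching the 8 MPI nodes over 4 cluster nodes of Figure 4. The daemon thread and remote TCP traffic are omitted. The `Process` class and its `send`/`recv` methods are hypothetical. Each MPI node owns a mailbox, and `queue.Queue` supplies the locking and condition-variable signalling. The receiver copies straight from the sender's buffer, so no operating-system shared-memory segment or socket is involved.

```python
import queue
import threading

THREADS_PER_PROCESS = 2  # assumed: 8 MPI nodes spread evenly over 4 cluster nodes


class Process:
    """One cluster-node process whose MPI nodes run as threads.

    Hypothetical sketch: each MPI node (thread) owns a mailbox in the shared
    address space; queue.Queue supplies the lock and condition variable.
    The per-process daemon thread of Figure 4 is omitted.
    """

    def __init__(self, num_nodes: int) -> None:
        self.mailboxes = [queue.Queue() for _ in range(num_nodes)]

    def send(self, src: int, dst: int, buf: bytearray) -> None:
        # Hand the receiver a reference to our buffer: no OS shared-memory
        # segment, pipe, or socket is needed inside one address space.
        done = threading.Event()
        self.mailboxes[dst].put((src, buf, done))
        done.wait()  # blocking send: reuse buf only after it has been copied

    def recv(self, dst: int, out: bytearray) -> int:
        src, buf, done = self.mailboxes[dst].get()  # waits for a message
        out[: len(buf)] = buf  # one copy, straight from sender to receiver
        done.set()             # release the blocked sender
        return src


proc = Process(THREADS_PER_PROCESS)
received = bytearray(5)
result = {}


def mpi_node_0() -> None:
    proc.send(0, 1, bytearray(b"hello"))


def mpi_node_1() -> None:
    result["src"] = proc.recv(1, received)


threads = [threading.Thread(target=mpi_node_0), threading.Thread(target=mpi_node_1)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(result["src"], bytes(received))  # 0 b'hello'
```

The sender blocks on an event until the receiver has copied the data, mirroring the rule that a blocking send may return only once its buffer is safe to reuse. A real implementation would also need tag and communicator matching.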

Additionally, TMPI offers the following immediate advantages over process-based MPI systems. Compared with an MPICH system that uses a mix of TCP and shared memory (depicted in Figure 2):
