| Design Decision | Rationale/Benefits | Challenges |
| --- | --- | --- |
| B4 routers built from merchant switch silicon | B4 apps are willing to trade more average bandwidth for fault tolerance. Edge application control limits need for large buffers. Limited number of B4 sites means large forwarding tables are not required. Relatively low router cost allows us to scale network capacity. | Sacrifice hardware fault tolerance, deep buffering, and support for large routing tables. |
| Drive links to 100% utilization | Allows efficient use of expensive long haul transport. Many applications willing to trade higher average bandwidth for predictability. Largest bandwidth consumers adapt dynamically to available bandwidth. | Packet loss becomes inevitable with substantial capacity loss during link/switch failure. |
| Centralized traffic engineering | Use multipath forwarding to balance application demands across available capacity in response to failures and changing application demands. Leverage application classification and priority for scheduling in cooperation with edge rate limiting. Traffic engineering with traditional distributed routing protocols (e.g. link-state) is known to be sub-optimal [17, 16] except in special cases [39]. Faster, deterministic global convergence for failures. | No existing protocols for functionality. Requires knowledge about site to site demand and importance. |
| Separate hardware from software | Customize routing and monitoring protocols to B4 requirements. Rapid iteration on software protocols. Easier to protect against common case software failures through external replication. Agnostic to range of hardware deployments exporting the same programming interface. | Previously untested development model. Breaks fate sharing between hardware and software. |

Table 1: Summary of design decisions in B4.

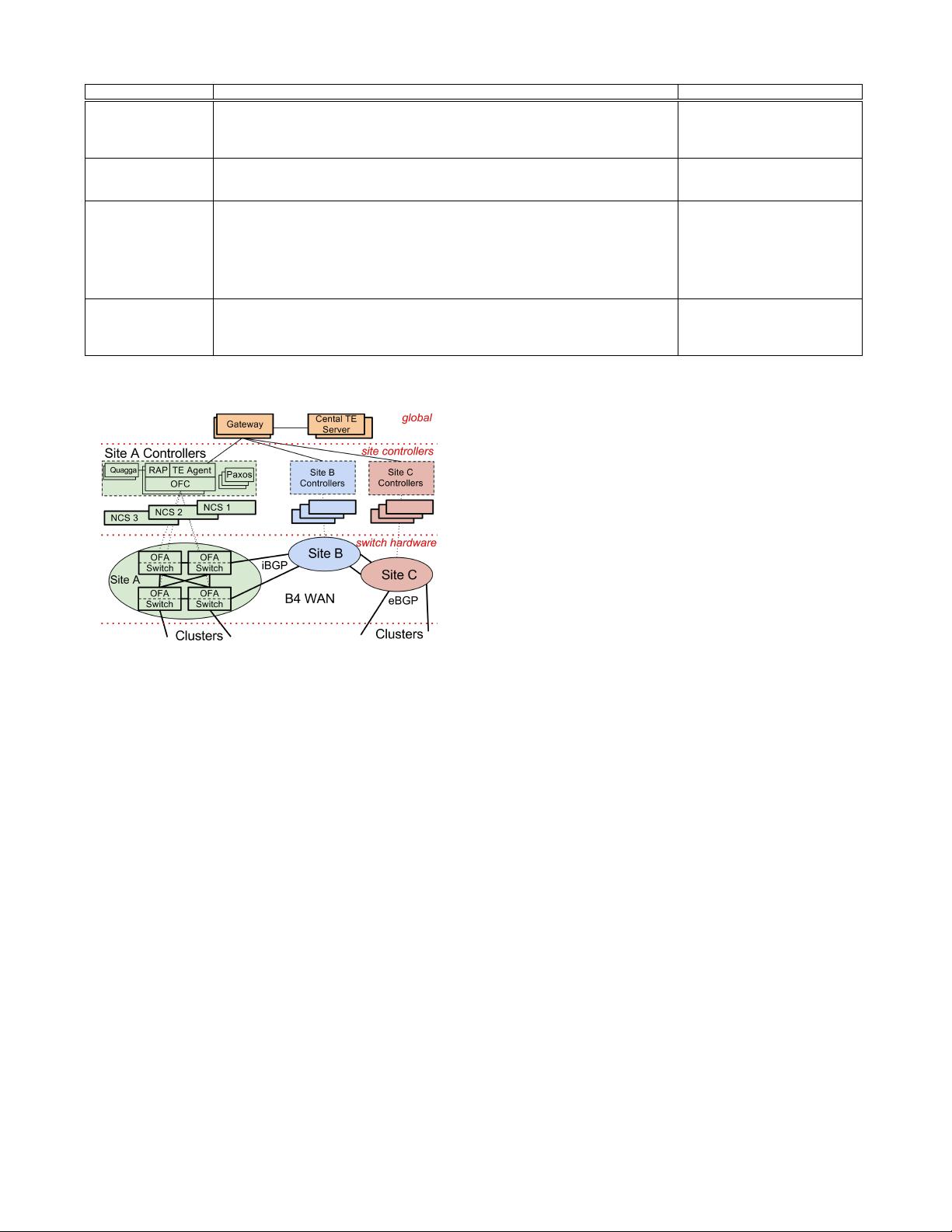

Figure 2: B4 architecture overview.

stance of Paxos [9] elects one of multiple available software replicas (placed on different physical servers) as the primary instance.

The global layer consists of logically centralized applications (e.g. an SDN Gateway and a central TE server) that enable the central control of the entire network via the site-level NCAs. The SDN Gateway abstracts details of OpenFlow and switch hardware from the central TE server. We replicate global layer applications across multiple WAN sites with separate leader election to set the primary.

Each server cluster in our network is a logical “Autonomous System” (AS) with a set of IP prefixes. Each cluster contains a set of BGP routers (not shown in Fig. 2) that peer with B4 switches at each WAN site. Even before introducing SDN, we ran B4 as a single AS providing transit among clusters running traditional BGP/ISIS network protocols. We chose BGP because of its isolation properties between domains and operator familiarity with the protocol. The SDN-based B4 then had to support existing distributed routing protocols, both for interoperability with our non-SDN WAN implementation, and to enable a gradual rollout.

We considered a number of options for integrating existing routing protocols with centralized traffic engineering. In an aggressive approach, we would have built one integrated, centralized service combining routing (e.g., ISIS functionality) and traffic engineering. We instead chose to deploy routing and traffic engineering as independent services, with the standard routing service deployed initially and central TE subsequently deployed as an overlay. This separation delivers a number of benefits. It allowed us to focus initial work on building SDN infrastructure, e.g., the OFC and agent, routing, etc. Moreover, since we initially deployed our network with no new externally visible functionality such as TE, it gave time to develop and debug the SDN architecture before trying to implement new features such as TE.

Perhaps most importantly, we layered traffic engineering on top of baseline routing protocols using prioritized switch forwarding table entries (§ 5). This isolation gave our network a “big red button”; faced with any critical issues in traffic engineering, we could disable the service and fall back to shortest path forwarding. This fault recovery mechanism has proven invaluable (§ 6).
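The layering described above can be illustrated with a minimal Python sketch. It is not the B4 implementation: it assumes a single table in which every entry carries a numeric priority, and the class names, priority values, and action labels are hypothetical. TE entries are installed at a higher priority than baseline routing entries, so removing them (the "big red button") leaves shortest path forwarding in effect.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Hypothetical priority values; the only assumption is TE > baseline.
BASELINE_PRIORITY = 10
TE_PRIORITY = 100


@dataclass(frozen=True)
class Entry:
    prefix: str    # destination prefix, e.g. "10.1.0.0/16"
    priority: int  # higher value wins
    action: str    # opaque forwarding action label (illustrative)
    source: str    # which service installed the entry: "routing" or "te"


class ForwardingTable:
    """Toy prioritized forwarding table shared by routing and TE."""

    def __init__(self):
        self.entries = []

    def install(self, entry):
        self.entries.append(entry)

    def remove_source(self, source):
        """Drop every entry installed by one service (e.g. disable TE)."""
        self.entries = [e for e in self.entries if e.source != source]

    def lookup(self, dst):
        """Return the action of the best matching entry, or None."""
        addr = ip_address(dst)
        matches = [e for e in self.entries if addr in ip_network(e.prefix)]
        if not matches:
            return None
        # Highest priority wins; ties are broken by longest prefix.
        best = max(
            matches,
            key=lambda e: (e.priority, ip_network(e.prefix).prefixlen),
        )
        return best.action
```

With a baseline entry and a TE entry installed for the same prefix, `lookup` returns the TE action; after `remove_source("te")` the same lookup returns the baseline action, without the routing service having to reinstall anything.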

Each B4 site consists of multiple switches with potentially hundreds of individual ports linking to remote sites. To scale, the TE abstracts each site into a single node with a single edge of given capacity to each remote site. To achieve this topology abstraction, all traffic crossing a site-to-site edge must be evenly distributed across all its constituent links. B4 routers employ a custom variant of ECMP hashing [37] to achieve the necessary load balancing.
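As a rough illustration of this abstraction, the Python sketch below collapses parallel physical links into one edge per site pair and spreads flows over the constituent links by hashing. It uses generic hash-based ECMP over a flow 5-tuple; the custom variant used in B4 is not specified here, and the function names, the tuple layout, and the choice of SHA-256 are assumptions made for the example.

```python
import hashlib
from collections import defaultdict


def abstract_topology(links):
    """Collapse physical links into one edge per (site, remote site) pair.

    links: iterable of (src_site, dst_site, capacity) tuples.
    Returns a dict mapping (src_site, dst_site) to the summed capacity.
    """
    edges = defaultdict(float)
    for src, dst, capacity in links:
        edges[(src, dst)] += capacity
    return dict(edges)


def pick_link(flow, links):
    """Map a flow onto one constituent link of a site-to-site edge.

    flow: a 5-tuple (src_ip, dst_ip, protocol, src_port, dst_port).
    links: non-empty list of link identifiers for that edge.
    Packets of the same flow always hash to the same link.
    """
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(links)
    return links[index]
```

Summing capacities into a single edge is only a faithful model if the hash spreads load evenly over links of comparable capacity, which is why the abstraction depends on the load balancing described above.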

In the rest of this section, we describe how we integrate existing routing protocols running on separate control servers with OpenFlow-enabled hardware switches. § 4 then describes how we layer TE on top of this baseline routing implementation.

3.2 Switch Design

Conventional wisdom dictates that wide area routing equipment must have deep buffers, very large forwarding tables, and hardware support for high availability. All of this functionality adds to hardware cost and complexity. We posited that with careful endpoint management, we could adjust transmission rates to avoid the need for deep buffers while avoiding expensive packet drops. Further, our switches run across a relatively small set of data centers, so we did not require large forwarding tables. Finally, we found that switch failures typically result from software rather than hardware issues. By moving most software functionality off the switch hardware, we can manage software fault tolerance through known techniques widely available for existing distributed systems.

Even so, the main reason we chose to build our own hardware was that no existing platform could support an SDN deployment, i.e., one that could export low-level control over switch forwarding behavior. Any extra costs from using custom switch hardware are more than repaid by the efficiency gains available from supporting novel services such as centralized TE. Given the bandwidth required