CNet: Context-Aware Network for Semantic Segmentation

Rongliang Cheng¹, Junge Zhang²,³, Peipei Yang³, Kangwei Liu⁴, Shujun Zhang¹

¹College of Information Science & Technology, Qingdao University of Science and Technology

²CRIPAC & ³NLPR, Institute of Automation, Chinese Academy of Sciences (CASIA)

⁴FF & LeFuture AI Institute

chengrongliang@hotmail.com, jgzhang@nlpr.ia.ac.cn, ppyang@nlpr.ia.ac.cn, liukangwei@le.com, zhangsj@qust.edu.cn

Abstract—Semantic segmentation is one of the great challenges in computer vision. Recently, deep convolutional neural networks (DCNNs) have achieved great success in most computer vision tasks. However, in semantic segmentation it is still difficult for DCNN-based methods to take full advantage of context information and to determine the fine boundaries of objects. In this paper, we propose a Context-aware Network (CNet), which utilizes robust context information to improve segmentation results. CNet has two significant components: 1) a feature collection module (FCM), which extracts low-level contextual features, including texture, layout, boundary, and local and global relationships, through different receptive fields to complement high-level feature learning; and 2) a novel layer named ResGate, which selects robust contextual features from the FCM. Combined, the two components thoroughly explore context information to improve boundary segmentation accuracy. We evaluate the proposed method on the popular PASCAL VOC2012 dataset and obtain promising performance compared with related methods, especially for similar objects or objects in complex scenes.

Keywords-Semantic Segmentation; CNN; Contextual Feature
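The abstract states that the FCM collects context "through different receptive fields". The FCM's actual layer configuration is not given here, so the sketch below only illustrates receptive-field arithmetic under assumed layers: the helper `receptive_field` and the three dilated 3x3 branches are hypothetical, not CNet's design. Each branch is measured in pixels of the feature map it reads; prepending backbone layers to a branch's list gives the field in image pixels.

```python
from typing import List, Tuple

# One layer = (kernel_size, stride, dilation). Hypothetical configuration.
Layer = Tuple[int, int, int]


def receptive_field(layers: List[Layer]) -> int:
    """Receptive field, in input pixels, of a sequential stack of conv/pool layers.

    Standard recurrence: each layer widens the field by (k - 1) * d * jump,
    where `jump` is the product of the strides of all earlier layers.
    """
    rf, jump = 1, 1
    for kernel, stride, dilation in layers:
        rf += (kernel - 1) * dilation * jump
        jump *= stride
    return rf


# Hypothetical parallel branches over the same feature map: one 3x3 conv each,
# differing only in dilation, so each branch covers a different context size.
branches = {
    "3x3, dilation 1": [(3, 1, 1)],
    "3x3, dilation 2": [(3, 1, 2)],
    "3x3, dilation 4": [(3, 1, 4)],
}

for name, layers in branches.items():
    print(name, "->", receptive_field(layers), "pixels")
```

The three branches cover 3, 5, and 9 feature-map pixels while keeping the same number of weights, which is one common way to gather context at several scales.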

I. INTRODUCTION

In this paper, we address the problem of semantic segmentation for natural images. Semantic segmentation is an instance of dense prediction: its goal is to compute a discrete or continuous semantic label for each pixel in the image. The task is fundamental and important for a wide range of computer vision applications, from traditional tasks such as tracking and motion analysis, medical imaging, structure-from-motion, and 3D reconstruction, to modern applications like autonomous driving, mobile computing, and image-to-text analysis.
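For the discrete case, dense prediction can be pictured as follows, assuming a network has already produced one score per class for every pixel (a C x H x W score map). The function name `label_map` and the toy scores are hypothetical; the sketch simply assigns each pixel its highest-scoring class.

```python
from typing import List


def label_map(scores: List[List[List[float]]]) -> List[List[int]]:
    """Convert a C x H x W score map into an H x W map of discrete labels.

    scores[c][y][x] is the (hypothetical) score of class c at pixel (y, x);
    each pixel receives the index of its highest-scoring class.
    """
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


# Toy 2 x 2 image with three classes: 0 = background, 1 = cow, 2 = horse.
toy_scores = [
    [[0.90, 0.10], [0.20, 0.10]],  # background
    [[0.05, 0.70], [0.50, 0.30]],  # cow
    [[0.05, 0.20], [0.30, 0.60]],  # horse
]
print(label_map(toy_scores))  # [[0, 1], [1, 2]]
```

The bottom-right pixel in this toy example shows the failure mode discussed below: a cow pixel whose "horse" score is highest ends up labeled as horse.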

Recently, deep learning has achieved tremendous success in image classification [1] [2] [3] [4] and object detection [5]. This success has attracted much attention and is largely due to the efficient feature extraction of deep convolutional neural networks (DCNNs) [2] [6] [7]. DCNNs are strong at capturing high-level visual features, which motivates exploring their use for pixel-level labeling problems.

Fully convolutional network (FCN) [8] achieves

significant accuracy by adapting convolutional neural

network(CNN)-based image classifiers to semantic seg-

mentation task, and has become the most popular approach

to dense prediction tasks. Many methods have been pro-

posed to further improve this framework. For example,

deeplab [9] refines feature extracted by CNNs with the

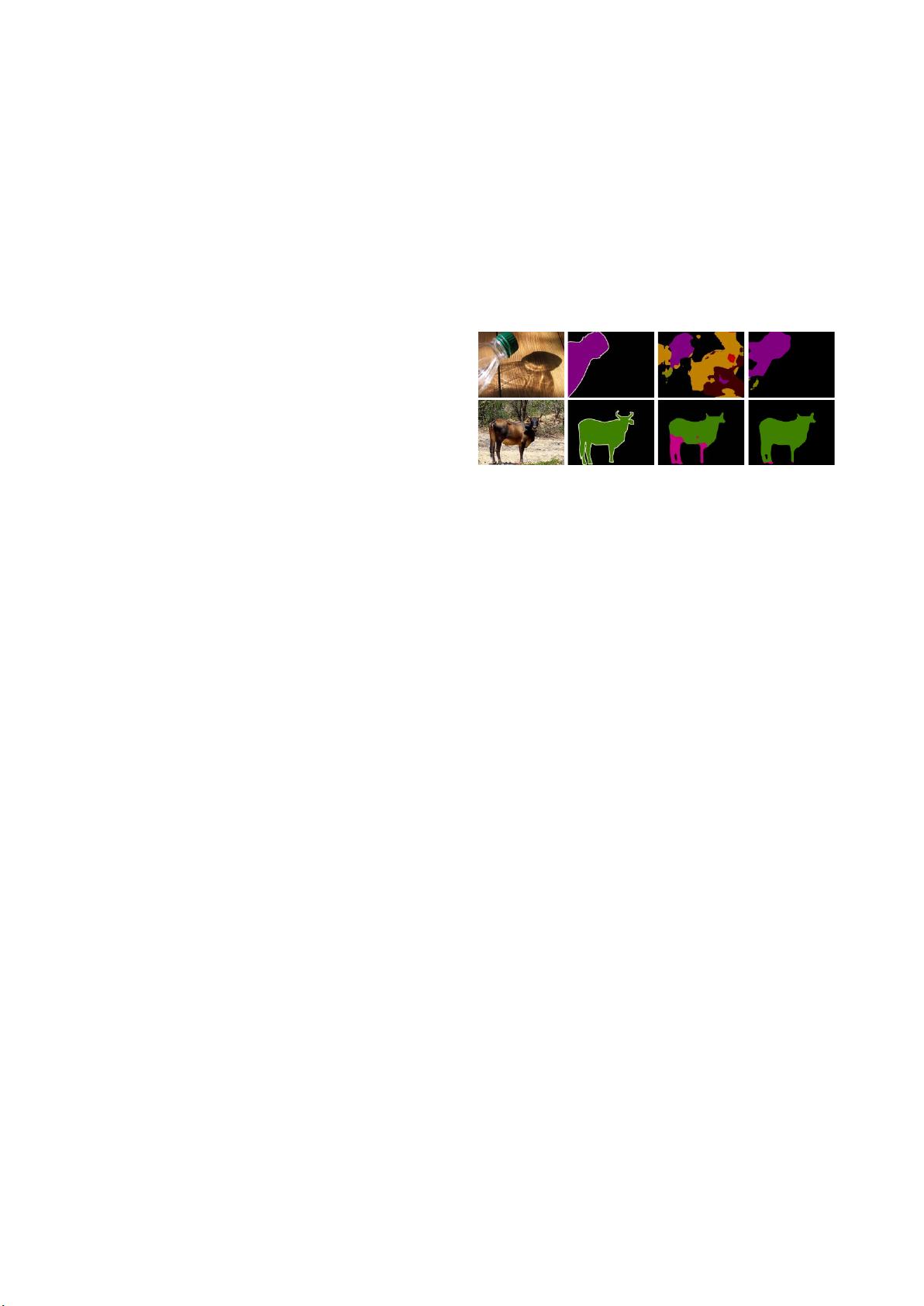

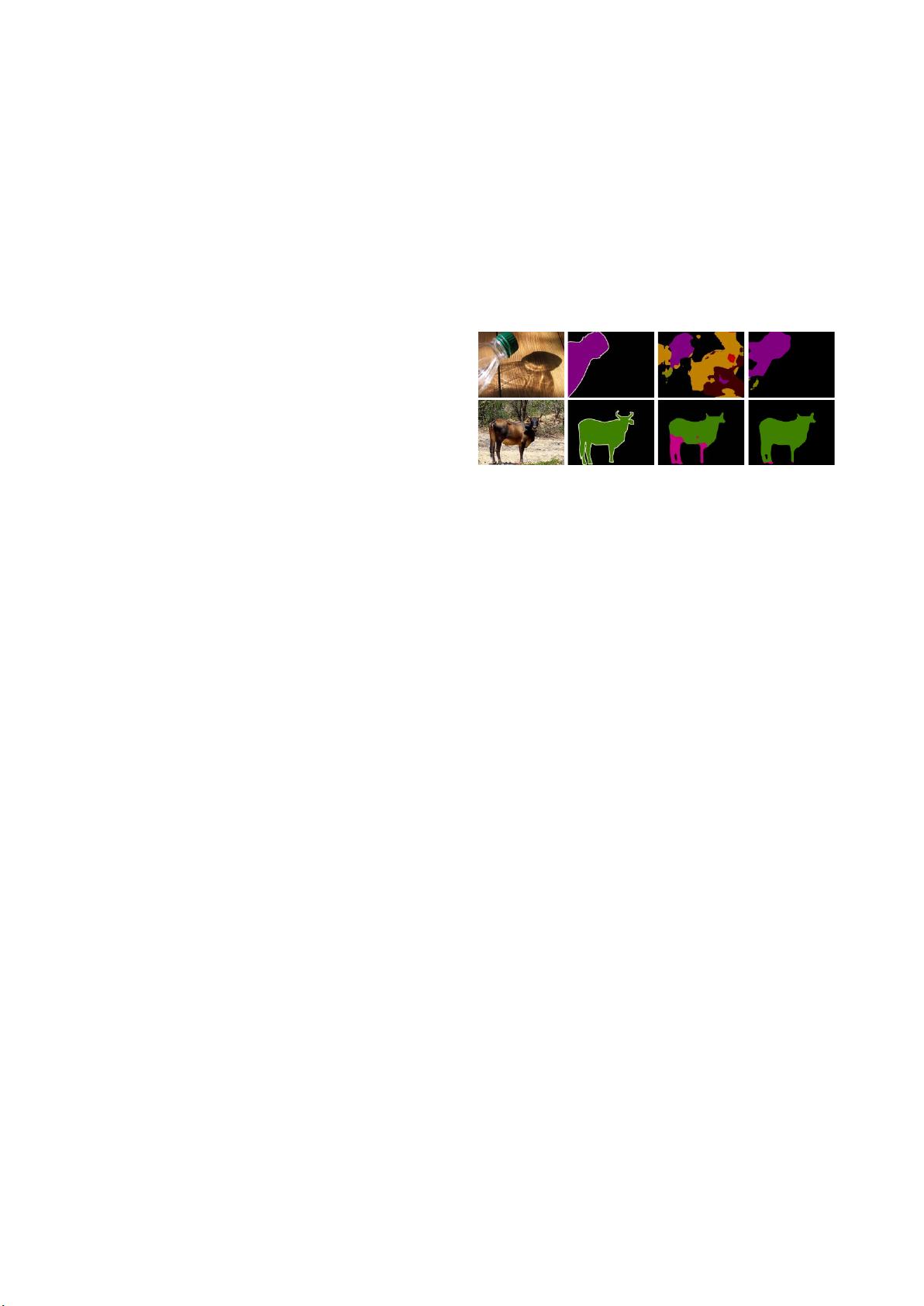

(a) Image (b) GT (c) Deeplab (d) CNet (ours)

Figure 1. Illustration of sample failure cases on the validation set

of the Pascal VOC 2012 dataset using the most popular deeplab

method (VGG-16). In the first row, most of the bottle is cropped,

so the segmentation result displays plenty of noises. In the second

row, a part of the cow is labeled as a horse. We also show the

results of our proposed CNet in the last column.

help of pairwise similarities between pixels based on loca-

tion and color features. This method uses the feature maps

of classification networks with an independent conditional

random field (CRF) based post-processing technique [10].
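DeepLab's post-processing scores how similar two pixels are from their locations and colors. Assuming [10] is the fully connected CRF of Krähenbühl and Koltun, which DeepLab uses, this similarity is a weighted sum of an appearance kernel (position and color) and a smoothness kernel (position only), and it scales the penalty for assigning the two pixels different labels. The function below is a minimal sketch of that kernel for a single pixel pair; the name `pairwise_kernel` and all weights and bandwidths are illustrative assumptions, not values from this paper.

```python
import math
from typing import Sequence


def pairwise_kernel(
    p_i: Sequence[float], p_j: Sequence[float],
    rgb_i: Sequence[float], rgb_j: Sequence[float],
    w_app: float = 1.0, w_smooth: float = 1.0,          # illustrative weights
    theta_alpha: float = 80.0, theta_beta: float = 13.0,  # illustrative bandwidths
    theta_gamma: float = 3.0,
) -> float:
    """Gaussian similarity between pixels i and j (fully connected CRF style).

    appearance: w_app * exp(-|p_i - p_j|^2 / (2 theta_alpha^2)
                            - |I_i - I_j|^2 / (2 theta_beta^2))
    smoothness: w_smooth * exp(-|p_i - p_j|^2 / (2 theta_gamma^2))
    """
    d_pos = sum((a - b) ** 2 for a, b in zip(p_i, p_j))    # squared pixel distance
    d_col = sum((a - b) ** 2 for a, b in zip(rgb_i, rgb_j))  # squared color distance
    appearance = w_app * math.exp(
        -d_pos / (2 * theta_alpha ** 2) - d_col / (2 * theta_beta ** 2)
    )
    smoothness = w_smooth * math.exp(-d_pos / (2 * theta_gamma ** 2))
    return appearance + smoothness


# Two horizontal neighbors: same color versus very different colors.
same = pairwise_kernel((0, 0), (0, 1), (200, 30, 30), (200, 30, 30))
diff = pairwise_kernel((0, 0), (0, 1), (200, 30, 30), (30, 30, 200))
print(f"same color: {same:.4f}, different color: {diff:.4f}")
```

Because the appearance term decays quickly with color distance, neighbors of the same color are strongly encouraged to share a label, while neighbors across a sharp color edge are only weakly tied by the smoothness term; this is what lets the CRF align labels with color boundaries.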

Zheng et al. [11] integrate CRF pairwise inference into the deep network as a sequence of operations trained end to end. This approach improves the accuracy of FCN and removes the need for an independent CRF post-processing step. Further studies [12] [13] learn deconvolutional networks that benefit greatly from recovering the input image resolution, and even deeper convolutional neural networks [14] [15] substantially improve results on segmentation benchmarks.

Despite the success of these methods, semantic segmentation still faces many difficult problems, especially in complex scenes with multiple objects. One example is shown in the first row of Figure 1: the bottle is hard to recognize because most of it is hidden or cropped. Another difficult example is shown in the second row of Figure 1: the legs of the cow are likely to be labeled as horse because the bodies of cows and horses look similar. In general, segmentation becomes more challenging when an object is situated in a complex scene or resembles objects of other categories, which leads to poor or erroneous results. In this paper, we argue that these problems can be addressed by incorporating local and global context information. Taking Figure 1 as an example, in the first row part of the bottle is cropped and its shadow is regarded as an object, and in the second row the legs of the cow are labeled as horse. However,

2017 4th IAPR Asian Conference on Pattern Recognition

2327-0985/17 $31.00 © 2017 IEEE

DOI 10.1109/ACPR.2017.31
