
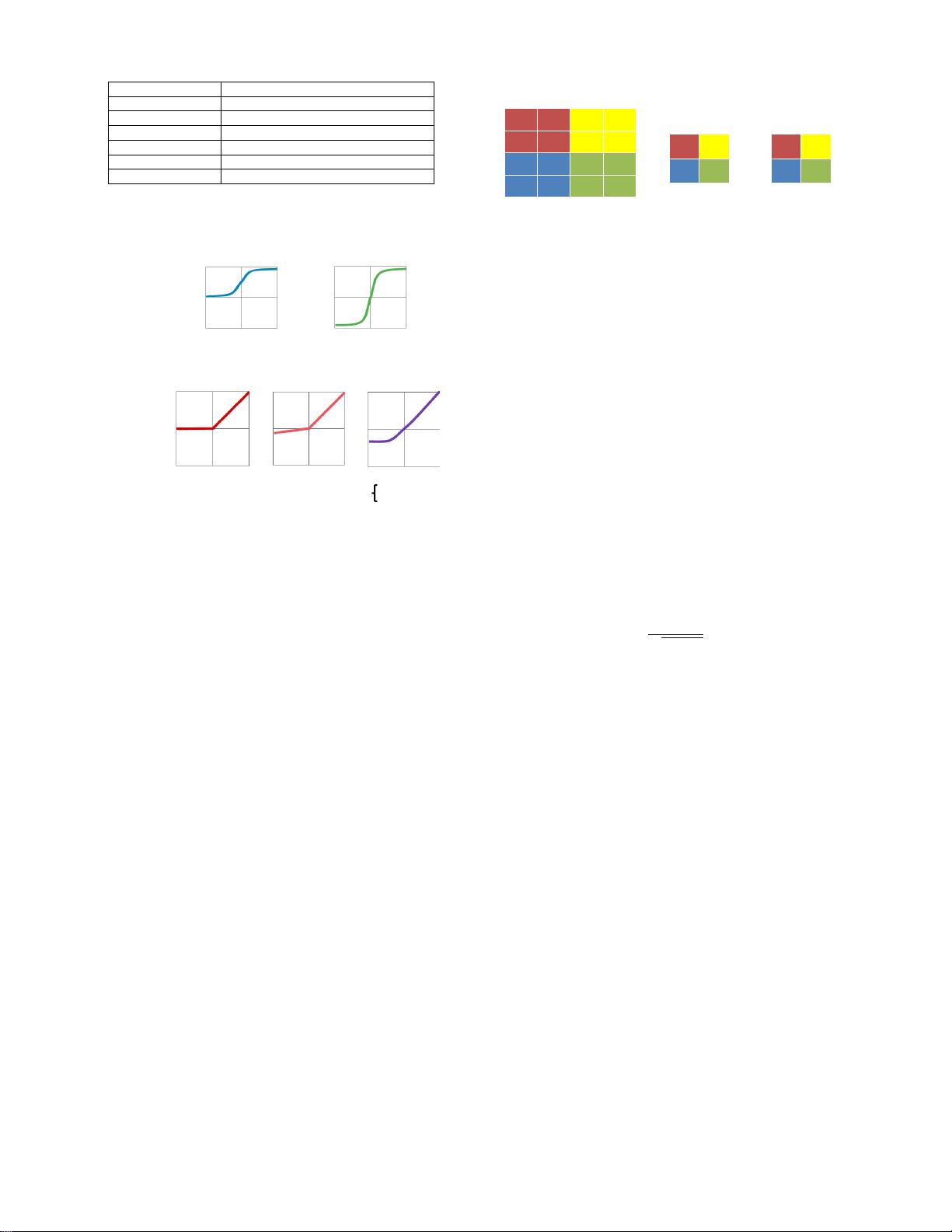

| Shape Parameter | Description |
|---|---|
| N | batch size of 3-D fmaps |
| M | # of 3-D filters / # of ofmap channels |
| C | # of ifmap/filter channels |
| H | ifmap plane width/height |
| R | filter plane width/height (= H in FC) |
| E | ofmap plane width/height (= 1 in FC) |

TABLE I. SHAPE PARAMETERS OF A CONV/FC LAYER.

[Figure 8 panels, each plotting y against x over the range −1 to 1. Traditional non-linear activation functions: sigmoid, y = 1/(1 + e^(−x)); hyperbolic tangent, y = (e^x − e^(−x))/(e^x + e^(−x)). Modern non-linear activation functions: rectified linear unit (ReLU), y = max(0, x); leaky ReLU, y = max(αx, x); exponential LU, y = x for x ≥ 0 and y = α(e^x − 1) for x < 0. α = small const. (e.g., 0.1).]

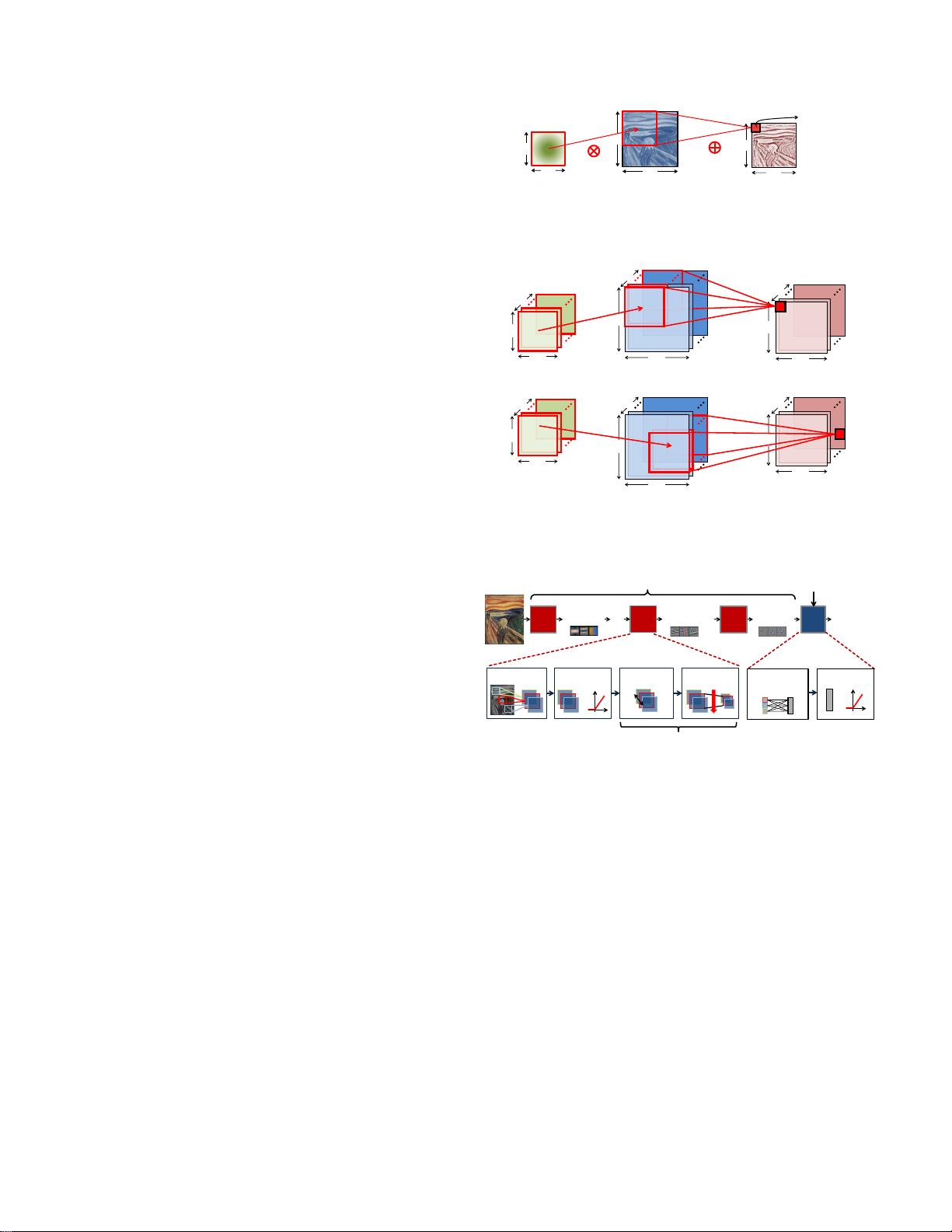

Fig. 8. Various forms of non-linear activation functions (Figure adopted from Caffe Tutorial [43]).

Recent CNN models commonly use from five [3] to more than a thousand [11] CONV layers. A small number, e.g., 1 to 3, of fully-connected (FC) layers are typically applied after the CONV layers for classification purposes. An FC layer also applies filters on the ifmaps as in the CONV layers, but the filters are of the same size as the ifmaps. Therefore, it does not have the weight sharing property of CONV layers. Eq. (1) still holds for the computation of FC layers with a few additional constraints on the shape parameters: H = R, E = 1, and U = 1.
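The constraints above can be illustrated with a minimal sketch. The function name `conv2d_single`, the example values, and the no-padding output-size relation E = (H − R + U)/U are assumptions made for illustration and are not taken from this section; the batch (N) and filter (M) dimensions and the bias are omitted. With R = H and U = 1 the loop collapses to a single dot product, giving a 1×1 ofmap.

```python
def conv2d_single(ifmap, filt, stride=1):
    """Naive convolution of one C-channel ifmap with one filter (no padding).

    ifmap: C x H x H nested lists; filt: C x R x R nested lists.
    Returns an E x E ofmap plane, assuming E = (H - R + stride) // stride.
    """
    C, H, R = len(ifmap), len(ifmap[0]), len(filt[0])
    E = (H - R + stride) // stride
    ofmap = [[0.0] * E for _ in range(E)]
    for y in range(E):
        for x in range(E):
            acc = 0.0
            # Accumulate over all channels and all filter positions.
            for c in range(C):
                for i in range(R):
                    for j in range(R):
                        acc += ifmap[c][stride * y + i][stride * x + j] * filt[c][i][j]
            ofmap[y][x] = acc
    return ofmap


# Hypothetical 2-channel, 3x3 ifmap (C = 2, H = 3).
ifmap = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],
         [[1, 0, 1], [0, 1, 0], [1, 0, 1]]]

# FC case: the filter has the same plane size as the ifmap (R = H = 3, U = 1).
fc_filter = [[[1, 0, 0], [0, 1, 0], [0, 0, 1]],
             [[2, 2, 2], [2, 2, 2], [2, 2, 2]]]
fc_out = conv2d_single(ifmap, fc_filter, stride=1)

# CONV case: a smaller 2x2 filter slides over the ifmap and is reused (E = 2).
conv_filter = [[[1, 0], [0, 1]],
               [[0, 0], [0, 0]]]
conv_out = conv2d_single(ifmap, conv_filter, stride=1)
```

In the FC case every weight is used exactly once, which is why the weight sharing of CONV layers disappears.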

In addition to CONV and FC layers, various optional layers can be found in a DNN such as the non-linearity (NON), pooling (POOL), and normalization (NORM). Each of these layers can be configured as discussed next.

2) Non-Linearity: A non-linear activation function is typically applied after each convolution or fully connected computation. Various non-linear functions are used to introduce non-linearity into the DNN as shown in Fig. 8. These include conventional non-linear functions such as sigmoid or hyperbolic tangent as well as rectified linear unit (ReLU) [37], which has become popular in recent years due to its simplicity and its ability to enable fast training. Variations of ReLU, such as leaky ReLU [38], parametric ReLU [39], and exponential LU [40] have also been explored for improved accuracy. Finally, a non-linearity called maxout, which takes the max value of two intersecting linear functions, has been shown to be effective in speech recognition tasks [41, 42].
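As a minimal sketch, the scalar forms of these functions can be written as below. The function names, the default α = 0.1, and the maxout parameters (`w1`, `b1`, `w2`, `b2`) are illustrative assumptions; the leaky ReLU form max(αx, x) assumes 0 < α < 1, and parametric ReLU has the same form with α learned during training rather than fixed.

```python
import math


def sigmoid(x):
    # Traditional: squashes the input into (0, 1).
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    # Traditional: squashes the input into (-1, 1).
    return math.tanh(x)


def relu(x):
    # Passes positive inputs unchanged and zeroes out negative inputs.
    return max(0.0, x)


def leaky_relu(x, alpha=0.1):
    # Keeps a small slope alpha for negative inputs (assumes 0 < alpha < 1).
    return max(alpha * x, x)


def elu(x, alpha=0.1):
    # Exponential LU: identity for x >= 0, smooth saturation toward -alpha below.
    return x if x >= 0 else alpha * (math.exp(x) - 1.0)


def maxout(x, w1, b1, w2, b2):
    # Maximum of two linear functions of the same input (hypothetical parameters).
    return max(w1 * x + b1, w2 * x + b2)
```

For example, `maxout(x, 1.0, 0.0, -1.0, 0.0)` reduces to the absolute value of `x`, which shows how two intersecting lines form a piecewise-linear non-linearity.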

3) Pooling: Pooling enables the network to be robust and

invariant to small shifts and distortions and is applied to each

channel separately. It can be configured based on the size of

9 3 5 3

10 32 2 2

1 3 21 9

2 6 11 7

2x2 pooling, stride 2

32 5

6 21

Max pooling

Average pooling

18 3

3 12

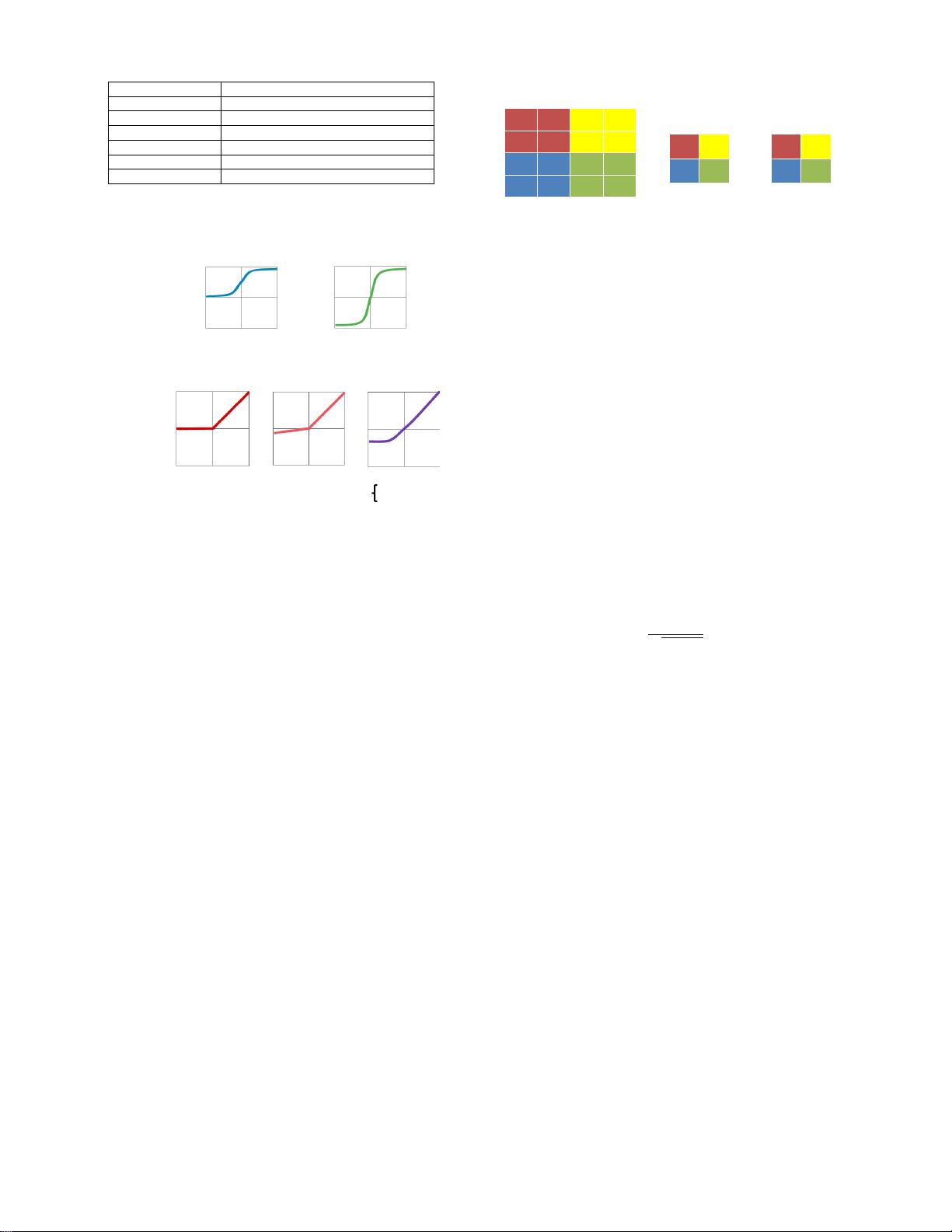

Fig. 9. Various forms of pooling (Figure adopted from Caffe Tutorial [

43

]).

its receptive field (e.g., 2

×

2) and the type of pooling (e.g.,

max or average), as shown in Fig. 9. Typically the pooling

occurs on non-overlapping blocks (i.e., the stride is equal to

the size of the pooling). Usually a stride of greater than one

is used such that there is a reduction in the dimension of the

representation (i.e., feature map).
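A minimal sketch of this configuration for a single channel follows. The function name `pool2d` and its parameters are assumptions made for illustration; the 4×4 plane is the input used in Fig. 9, pooled with a 2×2 receptive field and a stride of 2.

```python
def pool2d(plane, size=2, stride=2, mode="max"):
    """Pool one 2-D channel with a size x size window moved by `stride`."""
    height, width = len(plane), len(plane[0])
    out = []
    for y in range(0, height - size + 1, stride):
        row = []
        for x in range(0, width - size + 1, stride):
            # Gather the values covered by the current receptive field.
            window = [plane[y + i][x + j] for i in range(size) for j in range(size)]
            if mode == "max":
                row.append(max(window))
            elif mode == "avg":
                row.append(sum(window) / len(window))
            else:
                raise ValueError("mode must be 'max' or 'avg'")
        out.append(row)
    return out


# Input plane from Fig. 9 (one channel).
plane = [[9, 3, 5, 3],
         [10, 32, 2, 2],
         [1, 3, 21, 9],
         [2, 6, 11, 7]]

max_out = pool2d(plane, size=2, stride=2, mode="max")  # [[32, 5], [6, 21]]
avg_out = pool2d(plane, size=2, stride=2, mode="avg")
```

Because the stride equals the window size, the blocks do not overlap and each spatial dimension is halved, from 4 to 2.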

4) Normalization: Controlling the input distribution across layers can help to significantly speed up training and improve accuracy. Accordingly, the distribution of the layer input activations (σ, µ) is normalized such that it has zero mean and unit standard deviation. In batch normalization, the normalized value is further scaled and shifted, as shown in Eq. (2), where the parameters (γ, β) are learned from training [44] and ε is a small constant to avoid numerical problems. Prior to this, local response normalization [3] was used, which was inspired by lateral inhibition in neurobiology, where excited neurons (i.e., high-value activations) should subdue their neighbors (i.e., low-value activations); however, batch normalization is now considered standard practice in the design of CNNs.

$$y = \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}}\,\gamma + \beta \tag{2}$$
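Eq. (2) can be sketched for a one-dimensional list of activations as follows. The function name `batch_norm`, the default values of `gamma`, `beta`, and `eps`, and the choice to compute µ and σ² over the given list (standing in for one channel of a mini-batch) are assumptions made for illustration; in a trained network γ and β would be learned rather than fixed.

```python
import math


def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize xs to zero mean and unit variance, then scale and shift."""
    n = len(xs)
    mu = sum(xs) / n                           # mean of the activations
    var = sum((x - mu) ** 2 for x in xs) / n   # (biased) variance sigma^2
    # eps keeps the denominator away from zero when the variance is tiny.
    return [(x - mu) / math.sqrt(var + eps) * gamma + beta for x in xs]


# Hypothetical activations for one channel.
activations = [1.0, 2.0, 3.0, 4.0]

normalized = batch_norm(activations)                   # gamma = 1, beta = 0
scaled = batch_norm(activations, gamma=2.0, beta=0.5)  # scaled and shifted
```

With γ = 1 and β = 0 the output has approximately zero mean and unit variance; other values of γ and β move the output to a standard deviation of about γ and a mean of β.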

A. Popular DNN Models

Many DNN models have been developed over the past two decades. Each of these models has a different “network architecture” in terms of number of layers, filter shapes (i.e., filter size, number of channels and filters), layer types, and connections between layers. Understanding these variations and trends is important for incorporating the right flexibility in any efficient DNN engine.

Although the first popular DNN, LeNet [45], was published in the 1990s, it wasn’t until 2012 that AlexNet [3] was used in the ImageNet Challenge [10]. We will give an overview of various popular DNNs that competed in and/or won the ImageNet Challenge [10] as shown in Fig. 5; most of these models are publicly available for download with pre-trained weights. The DNN models are summarized in Table II, which reports two top-5 error results. In the first row, the accuracy is boosted by using multiple crops from the image and an ensemble of multiple trained models (i.e., the DNN needs to be run several times); these are the results used to compete in the ImageNet Challenge. The second row reports the accuracy if only a single crop was used (i.e., the DNN is run only once), which is more consistent with what would be deployed in real applications.

LeNet [9] was one of the first CNN approaches introduced in 1989. It was designed for the task of digit classification in grayscale images of size 28×28. The most well known