the sky is [mask] .

blue

[CLS]

the sky is

blue

blue

.the sky is

[CLS]

.

[SEP]

[SEP]

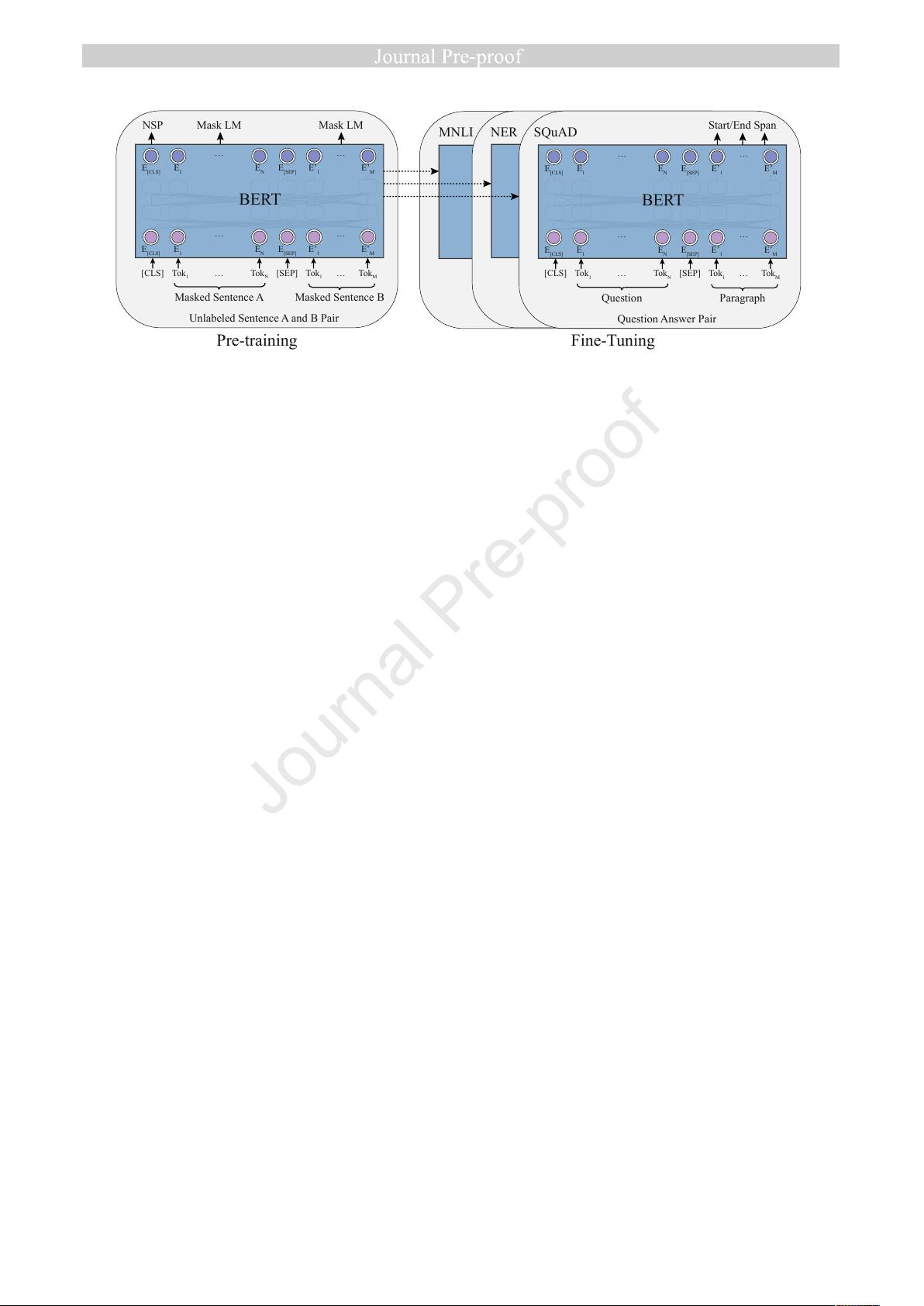

BERT GPT

Figure 6: The difference between GPT and BERT in their self-attention mechanisms and pre-training objectives.

after GPT and BERT to reveal the recent development of PTMs.

3.1 Transformer

Before Transformer, RNNs had long been the typical neural networks for processing sequential data, especially natural language. Because RNNs are sequential by nature, they read one word per time step in order, and they refer to the hidden states of the previous words to process the current one. Such a mechanism makes it difficult to take advantage of the parallel capabilities of high-performance computing devices such as GPUs and TPUs.
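To make this sequential bottleneck concrete, here is a minimal sketch of a toy RNN in plain Python. It assumes scalar word inputs and a hypothetical `rnn_forward` helper with scalar weights `w_x`, `w_h` and bias `b` (real RNNs use vectors and weight matrices); the only point it illustrates is that step t cannot start until step t-1 has produced its hidden state.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Toy scalar RNN (illustrative assumption): h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    Every step needs the hidden state from the previous step, so the loop
    over time steps cannot be parallelized across positions.
    """
    h = 0.0  # initial hidden state h_0
    states = []
    for x in inputs:  # strictly left to right, one word per time step
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Usage: three "words" encoded as toy scalars.
states = rnn_forward([1.0, 0.5, -0.3], w_x=0.8, w_h=0.5, b=0.0)
```

However the hardware is arranged, the third state can only be computed after the second, which is the limitation that motivates Transformer.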

Compared to RNNs, Transformer is an encoder-decoder structure that applies a self-attention mechanism, which can model the correlations between all words of the input sequence in parallel. Owing to this parallel computation, Transformer can fully take advantage of advanced computing devices to train large-scale models. In both the encoding and decoding phases of Transformer, the self-attention mechanism computes representations for all input words. Next, we dive into the self-attention mechanism more specifically.

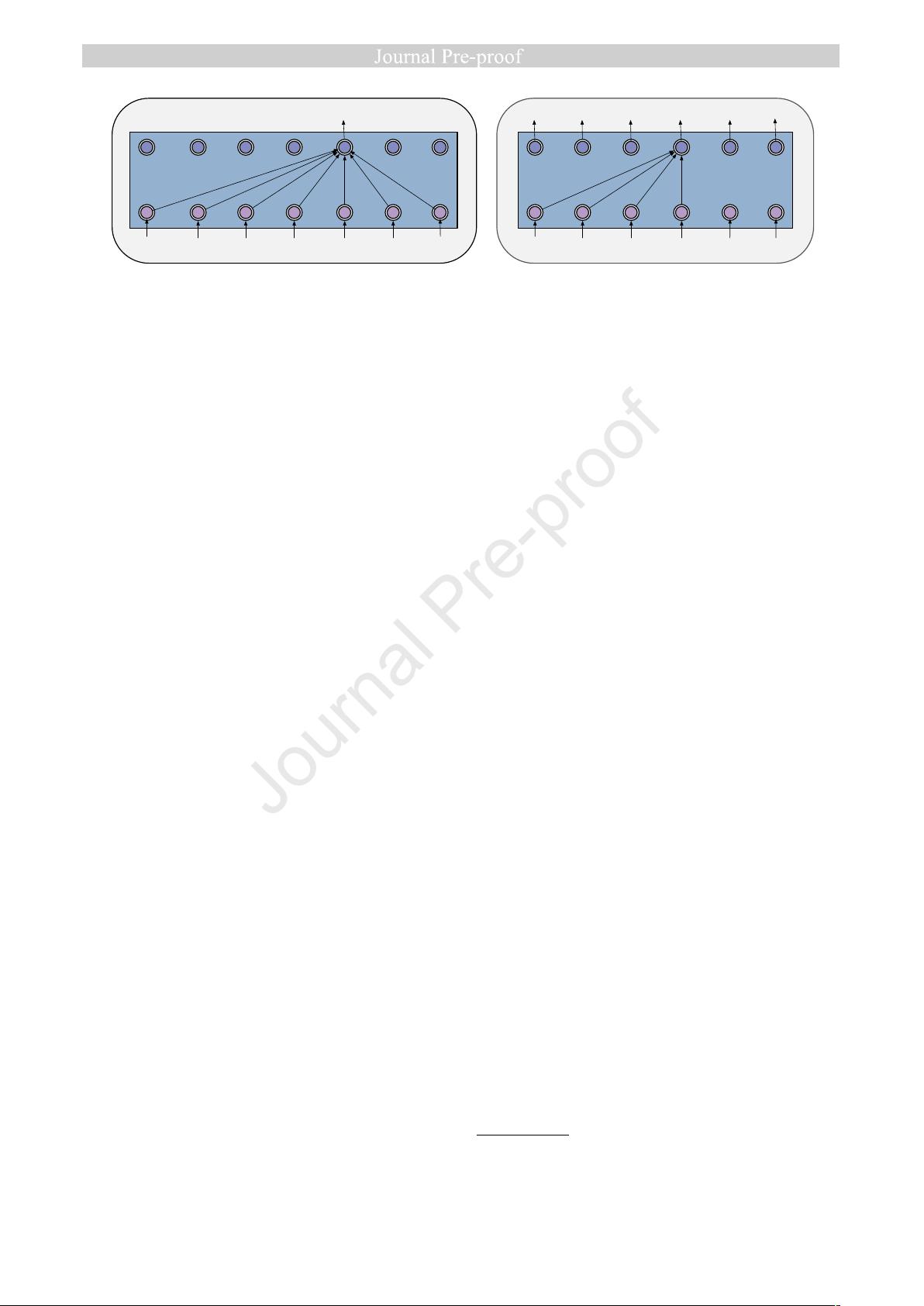

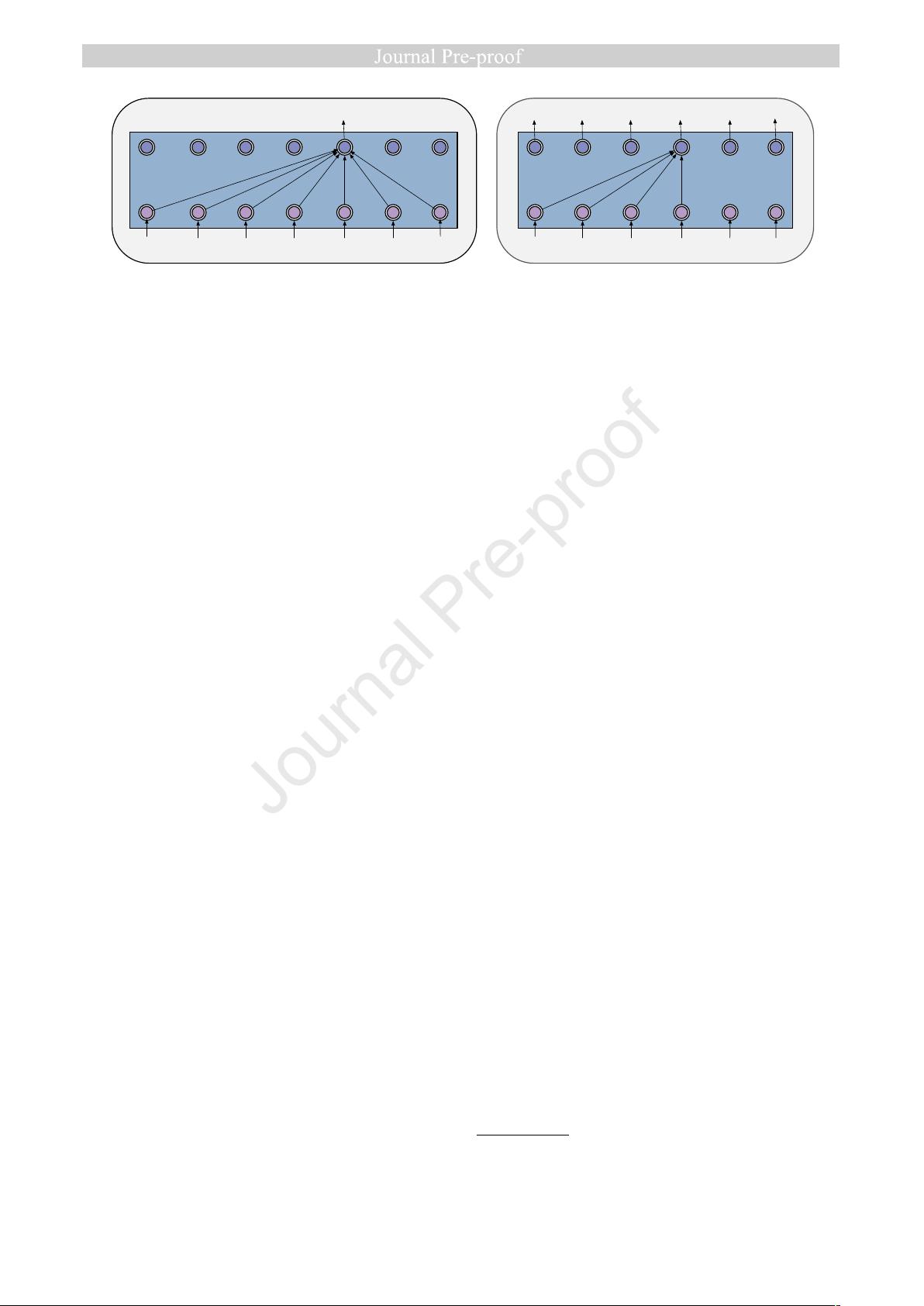

In the encoding phase, for a given word, Transformer computes an attention score by comparing it with every other word in the input sequence. These attention scores indicate how much each of the other words should contribute to the next representation of the given word. The attention scores are then used as weights to compute a weighted average of the representations of all the words. We give an example in Figure 5, where the self-attention mechanism accurately captures the referential relationship between “Jack” and “he”, generating the highest attention score. By feeding the weighted average of all word representations into a fully connected network, we obtain the representation of the given word. This procedure is essentially an aggregation of the information of the whole input sequence, and it is applied to all the words to generate their representations in parallel. In the decoding phase, the attention mechanism is similar to that of the encoding phase, except that it decodes only one representation at a time, from left to right, and each decoding step consults the previously decoded results. For more details of Transformer, please refer to its original paper (Vaswani et al., 2017) and the survey paper (Lin et al., 2021).
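The encoding and decoding procedures above can be sketched as single-head scaled dot-product attention, the scoring form used in the original Transformer paper. This is a simplified illustration, not a full Transformer layer: the hypothetical `self_attention` function uses the input vectors directly as queries, keys and values (no learned projections, no multiple heads) and omits the subsequent fully connected network. Setting `causal=True` restricts each word to itself and the words before it, mirroring the left-to-right decoding phase.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs, causal=False):
    """Simplified single-head scaled dot-product self-attention.

    Assumption: queries, keys and values are the input vectors themselves.
    Returns (outputs, weights), where weights[i] are the attention weights
    word i assigns to the words it can see.
    """
    d = len(xs[0])
    outputs, weights = [], []
    for i, q in enumerate(xs):
        # Decoding-style (causal) attention only sees words 0..i.
        visible = xs[: i + 1] if causal else xs
        # Attention score: compare word i with each visible word.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible]
        w = softmax(scores)
        # Weighted average of the visible word representations.
        out = [sum(w_j * v[c] for w_j, v in zip(w, visible)) for c in range(d)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outs, ws = self_attention(xs)                 # encoder-style: every word sees all words
outs_c, ws_c = self_attention(xs, causal=True)  # decoder-style: left-to-right only
```

The loop over positions is written out for readability. Each iteration depends only on the inputs, so all positions are independent and are computed at once as matrix products in practice, which is what lets Transformer exploit GPUs and TPUs.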

Owing to these strengths, Transformer has gradually become a standard neural structure for natural language understanding and generation. Moreover, it also serves as the backbone neural structure for the subsequently derived PTMs. Next, we introduce two landmarks that fully opened the door to the era of large-scale self-supervised PTMs: GPT and BERT. In general, GPT is good at natural language generation, while BERT focuses more on natural language understanding.

3.2 GPT

As introduced in Section 2, PTMs typically consist of two phases: the pre-training phase and the fine-tuning phase. Equipped with the Transformer decoder as the backbone³, GPT applies generative pre-training and discriminative fine-tuning.

Theoretically, compared to earlier PTMs, GPT is the first model that combines the modern Transformer architecture with the self-supervised pre-training objective. Empirically, GPT achieves significant success on almost all NLP tasks, including natural language inference, question answering, commonsense reasoning, semantic similarity and

³ Since GPT uses autoregressive language modeling, the encoder-decoder attention in the original Transformer decoder is removed.