An Asymmetric Stagewise Least Square Loss Function for Imbalanced Classification

Guibiao Xu, Bao-Gang Hu and Jose C. Principe

Abstract— In this paper, we present an asymmetric stagewise least square (ASLS) loss function for imbalanced classification. While keeping all the advantages of the stagewise least square (SLS) loss function, such as better robustness, computational efficiency and sparseness, the ASLS loss extends the SLS loss by adding two more parameters, namely, a ramp coefficient and a margin coefficient. Asymmetric ramps and margins can therefore be formed, which makes the ASLS loss more flexible and more appropriate for class imbalance problems. A reduced kernel classifier based on the ASLS loss is also developed, which uses only a small part of the dataset to generate an efficient nonlinear classifier. Experimental results confirm the effectiveness of the ASLS loss in imbalanced classification.

I. INTRODUCTION

I

N this paper, we consider the problem of binary classifi-

cation. In classification, the quality of a classifier 𝑓 (𝒙) is

measured by a problem dependent loss function 𝑙

(

𝑓(𝒙),𝑡

)

,

where 𝑡 ∈{±1} is the true label of pattern 𝒙. 𝑙

(

𝑓(𝒙),𝑡

)

can also be written as 𝑙(𝑧), where 𝑧 = 𝑡𝑓(𝒙) is the margin

variable and can be used to measure the confidence of

classification. Given a training set {(𝒙

𝑖

,𝑡

𝑖

)}

𝑁

𝑖=1

, where each

training pattern 𝒙

𝑖

∈ ℝ

𝑑

, the classifier 𝑓(𝒙) can be found

by empirical risk minimization of 𝑙(𝑧). Misclassification

error rate (0-1 loss) 𝑙

0−1

= ∣∣(−𝑧)

+

∣∣

0

, where (⋅)

+

denotes

the positive part and ∣∣⋅∣∣

0

denotes the 𝐿

0

norm, is the

most appealing loss function for classification because it

relates to the misclassification probability directly. However,

the noncontinuity and nonconvexity of the 0-1 loss make

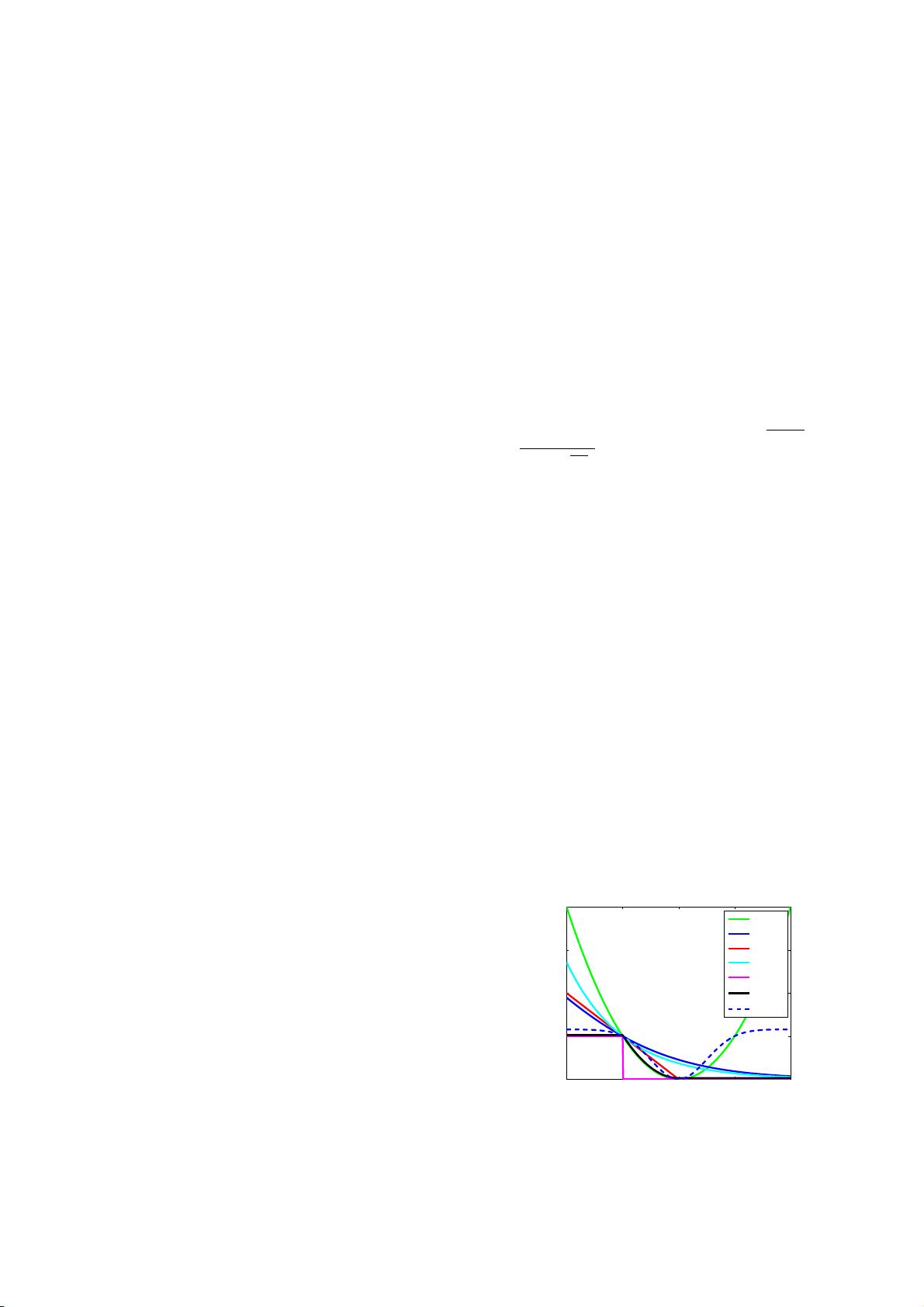

its optimization NP-hard [1]. Therefore, researchers apply

various convex upper bounds of the 0-1 loss to alleviate this

computational problem [2], such as the hinge loss function

𝑙

ℎ𝑖𝑛𝑔 𝑒

(𝑧)=[(1−𝑧)

+

]

𝑞

(𝑞 =1or2), the logistic loss function

𝑙

𝑙𝑜𝑔

(𝑧) = log[1 + exp(−𝑧)], the least square (LS)loss

function 𝑙

𝑙𝑠

(𝑧)=(1−𝑧)

2

, and the exponential loss function

𝑙

𝑒𝑥𝑝

(𝑧) = exp(−𝑧) (see Fig.1). These convex surrogate loss

functions are popular because of their virtues of convex op-

timization like unique optima, abundant convex optimization

tools and theoretic generalization error bound analysis [3].

However, these convex loss functions are poor approximation

to the 0-1 loss and less robust. Despite of the disadvantages

of nonconvex loss functions, various algorithms of noncon-

vex loss functions are studied in [4], [5] which prove that

Guibiao Xu and Bao-Gang Hu are with the NLPR, Institute of Automa-

tion, Chinese Academy of Sciences, Beijing, China (email: {guibiao.xu,

hubg}@nlpr.ia.ac.cn).

Jose C. Principe is with the CNEL, Department of Electrical & Computer

Engineering, University of Florida, Gainesville, FL 32611, USA (email:

principe@cnel.ufl.edu).

This work was supported by NSFC grants #61075051, #61273196 and

China Scholarship Council.

nonconvex loss functions have higher generalization ability,

better scalability and better robustness. The successful appli-

cations of deep neural networks further shows the promising

future of nonconvex loss functions [6]. In [7], Yang and Hu

innovatively proposed a stagewise least square (SLS)loss

function that gradually approximates a nonconvex squared

ramp loss function by adaptively updating the targets (the

details are in Section II-B). SLS loss inherits the advantages

from both convex and nonconvex loss functions. Correntropy

loss function (C-loss) 𝑙

𝐶

(𝑧)=𝛽[1 − exp(−

(1−𝑧)

2

2𝜎

2

)], where

𝛽 =

1

1−exp(−

1

2𝜎

2

)

and 𝜎 is the correntropy window width,

is another nonconvex loss function that was proposed in [8].

One of the appealing advantages of C-loss is that it is more

robust to overfitting compared with other loss functions. Both

the SLS loss and the C-loss are also shown in Fig.1.
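To make the loss definitions in this section concrete, the following minimal Python sketch evaluates each of them as a function of the margin z. The function names and the default sigma = 0.5 (the value used for the C-loss in Fig. 1) are illustrative choices, not part of the original work. The SLS loss is omitted because its definition is deferred to Section II-B.

```python
import math


def zero_one_loss(z: float) -> float:
    """0-1 loss ||(-z)_+||_0: 1 when the margin z is negative, else 0."""
    return 1.0 if max(-z, 0.0) != 0.0 else 0.0


def hinge_loss(z: float, q: int = 1) -> float:
    """Hinge loss [(1 - z)_+]^q with q = 1 or 2."""
    return max(1.0 - z, 0.0) ** q


def logistic_loss(z: float) -> float:
    """Logistic loss log[1 + exp(-z)] (natural logarithm assumed)."""
    return math.log(1.0 + math.exp(-z))


def ls_loss(z: float) -> float:
    """Least square (LS) loss (1 - z)^2."""
    return (1.0 - z) ** 2


def exp_loss(z: float) -> float:
    """Exponential loss exp(-z)."""
    return math.exp(-z)


def c_loss(z: float, sigma: float = 0.5) -> float:
    """Correntropy loss beta * [1 - exp(-(1 - z)^2 / (2 sigma^2))]."""
    # beta normalizes the loss so that c_loss(0) equals 1.
    beta = 1.0 / (1.0 - math.exp(-1.0 / (2.0 * sigma ** 2)))
    return beta * (1.0 - math.exp(-((1.0 - z) ** 2) / (2.0 * sigma ** 2)))


if __name__ == "__main__":
    # Compare the losses at a few margins, including a badly
    # misclassified pattern (z = -10).
    for z in (-10.0, -1.0, 0.0, 1.0, 3.0):
        print(z, zero_one_loss(z), hinge_loss(z), logistic_loss(z),
              ls_loss(z), exp_loss(z), c_loss(z))
```

Under these assumptions, the C-loss never exceeds beta for any margin, whereas the LS and exponential losses grow without bound as z becomes very negative. This boundedness is one way to read the robustness attributed to nonconvex losses above.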

Imbalanced classification is another key problem in classification. Because all the above loss functions assume that the class distributions and misclassification costs are balanced, classifiers based on these assumptions tend to classify all patterns as negatives¹ when they encounter imbalanced datasets. The objective of imbalanced classification is to separate positives from negatives as well as possible, and misclassifying a positive as a negative usually costs more than the reverse. Hence, a variety of class imbalance learning methods [9], [10] have been developed, which can be broadly divided into external methods and internal methods [20], [21]. External methods pre-process the data so as to balance the classes, while internal methods modify the algorithms in order to reduce their sensitivity to class imbalance. MetaCost [11], one-side selection [12]

¹ In this paper, we use +1 (positive) to represent the minority class and -1 (negative) to represent the majority class.

Fig. 1. Loss functions in classification (σ = 0.5 for C-loss). Horizontal axis: margin z (from -1 to 3); vertical axis: loss (from 0 to 4); curves: LS, log, hinge, exp, 0-1, SLS and C-loss.

2014 International Joint Conference on Neural Networks (IJCNN)

July 6-11, 2014, Beijing, China

978-1-4799-1484-5/14/$31.00 ©2014 IEEE