CHERKASSKY et al.: MODEL COMPLEXITY CONTROL FOR REGRESSION 1077

(guaranteed) bounds on the prediction risk based on VC-theory

have been proposed in [1].

A. Classical Model Selection Criteria

There are two general approaches for estimating prediction

risk for regression problems with finite data. One is based

on data resampling. The other approach is to use analytic

estimates of the prediction risk as a function of the empirical

risk (training error) penalized (adjusted) by some measure of

model complexity. Once an accurate estimate of the prediction

risk is found it can be used for model selection by choosing the

model complexity which minimizes the estimated prediction

risk. In the statistical literature, various prediction risk esti-

mates have been proposed for model selection (in the linear

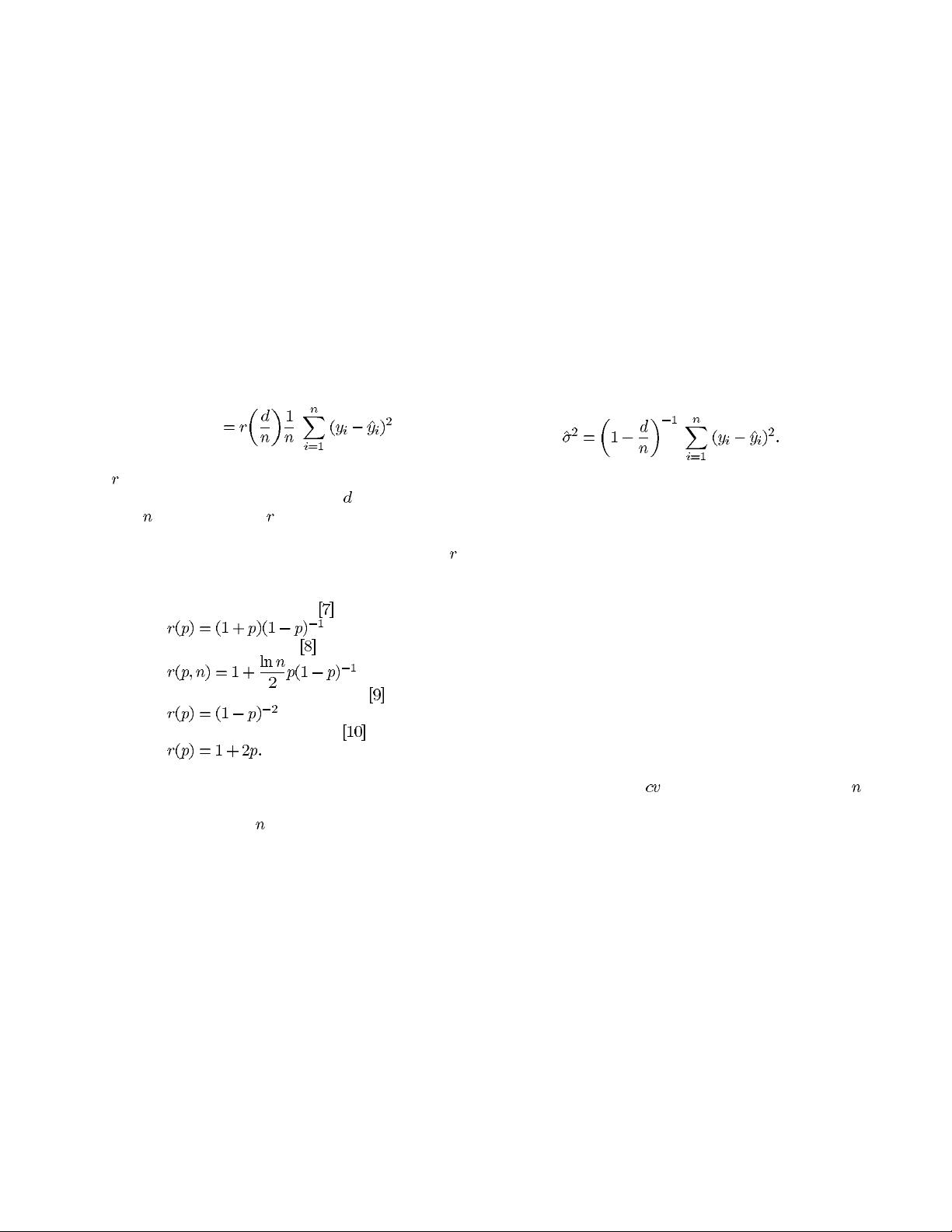

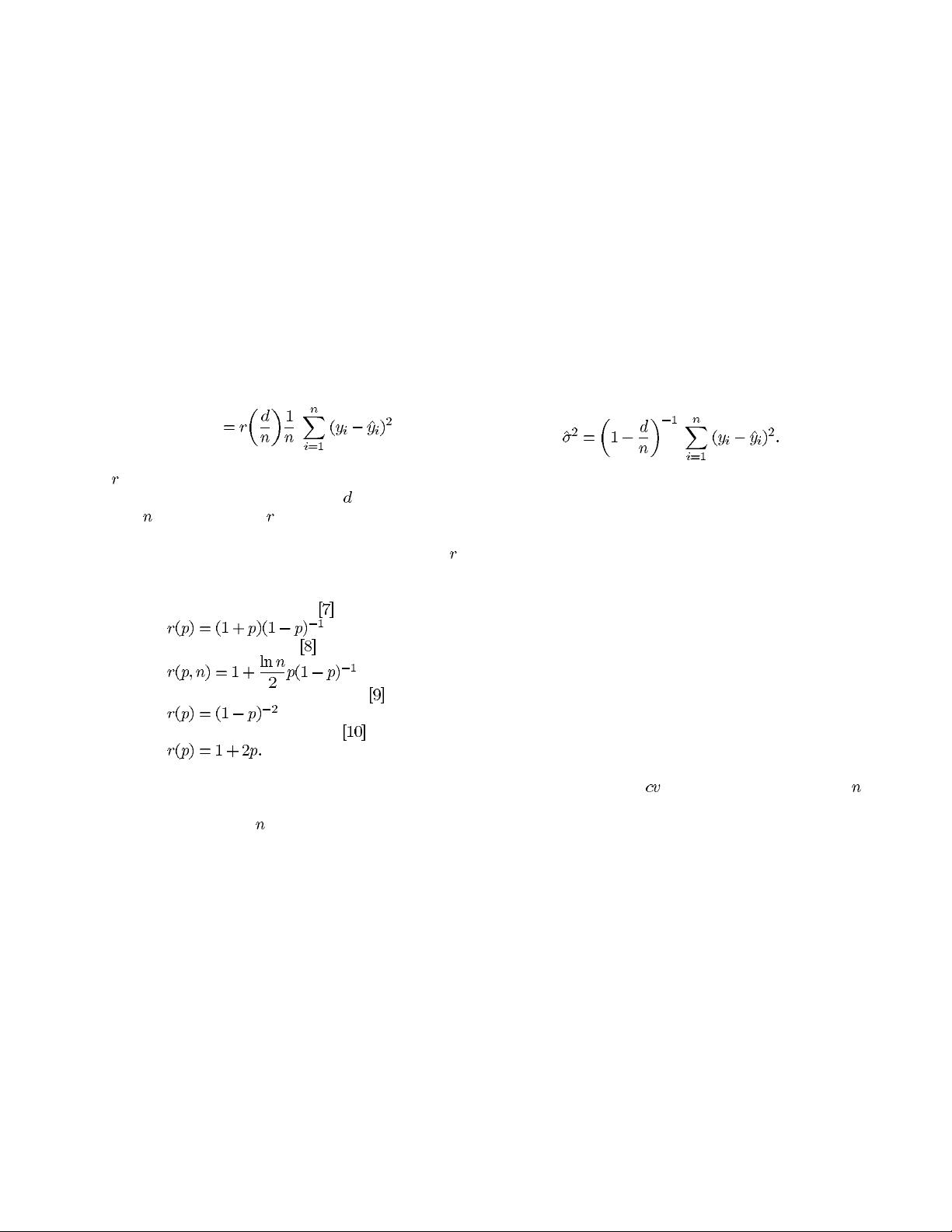

case). In general, these estimates all take the form of

estimated risk

(7)

where

is a monotonically increasing function of the ratio

of model complexity (degrees of freedom)

and the training

sample size

[6]. The function is often called a penalization

factor because it inflates the average residual sum of squares

for increasingly complex models. The following forms of

have been proposed in the statistical literature:

final prediction error (FPE)

Schwartz’ criterion (SC)

generalized cross-validation (GCV)

Shibata’s model selector (SMS)

All these classical approaches are motivated by asymptotic

arguments for linear models and therefore apply well for large

training sets. In fact, for large

, prediction estimates provided

by FPE, GCV, and SMS are asymptotically equivalent. More-

over, the model selection criteria above are all based on a

parametric philosophy. That is, the goal of model selection is

to select the terms of the approximating function in order to

match the target function (under the assumption that the target

function is contained in a set of linear approximating func-

tions). The sucess of the model selection criteria is measured

according to this philosophy [7], [10], [11], [12]. This classical

approach can be contrasted to the VC-theory approach, where

the goal of model selection is to choose the approximating

function with the lowest prediction risk (irrespective of the

number of terms chosen).

Classical model selection criteria were designed with spe-

cific applications in mind. For example, FPE was originally de-

signed for model identification for autoregressive time series,

and GCV was developed as an estimate for cross-validation

(itself an estimate of prediction risk) in spline smoothing. It

is only SC and SMS which were developed for the generic

problem of regression. Note that application of the minimum

description length (MDL) arguments [13] yields a penalization

factor identical to the Schwartz criterion, though the latter was

derived using Bayesian formulation. A recent model selection

criteria described in [14] has the goal of minimizing prediction

risk for regression.

Typically, the classical criteria are constructed by first defin-

ing the prediction risk in terms of the linear approximating

function. Then, asymptotic arguments are used to develop limit

distributions for various components of the prediction risk,

leading to an asymptotic form of the prediction risk. Finally,

the data, along with estimates of the noise variance are used

to estimate the expected value of the asymptotic prediction

risk. For example the FPE criterion depends on assuming a

gaussian distribution to develop the asymptotic prediction risk.

In addition, FPE depends on an estimate of the noise variance

given by

There are several common assumptions underlying all these

model selection criteria:

1) The target function is linear.

2) The set of linear functions of the learning machine con-

tains the target function. That is, the learning machine

provides an unbiased estimate.

3) The noise is independent and identically distributed.

4) That the empirical risk is minimized.

Additional assumptions reflecting the noise distribution and

limit distributions are also applied in the development of each

selection criterion.

Another popular alternative (to analytic methods) is to

choose model complexity using resampling. In this paper

we consider leave-one-out cross-validation (CV). Under this

approach, the prediction risk is estimated via cross-validation,

and the model providing lowest estimated risk is chosen.

It can be shown [4] that

is asymptotically (for large )

equivalent to analytic model selection criteria (such as FPE,

GCV, and SMS). Unfortunately, the computational cost of

CV grows linearly with the number of samples, and often

becomes prohibitively large for practical applications. Addi-

tional complications arise in the context of using resampling

with nonlinear estimators (such as neural networks), due to

existence of multiple local minima and the dependence of the

final solution (obtained by an optimization algorithm) on the

initial conditions (weight initialization)—see [4]. Nevertheless,

resampling remains the prefered approach for model selection

in many learning methods. In this paper, we use CV as a

benchmark method for comparing various analytic methods.

It can be shown [12] that the above estimates of the pre-

diction risk (excluding SC) are not consistent in the following

sense: The probability of selecting the model with the same

number of terms as the target function does not converge to

one as the number of observations is increased (with fixed

number of maximum basis functions). In addition, resampling

methods for model selection may suffer from the same lack

of consistency. For example, leave-one-out CV which has