Generalized orderless pooling performs implicit salient matching

Marcel Simon¹, Yang Gao², Trevor Darrell², Joachim Denzler¹, Erik Rodner³

¹Computer Vision Group, University of Jena, Germany

²EECS, UC Berkeley, USA

³Corporate Research and Technology, Carl Zeiss AG

{marcel.simon, joachim.denzler}@uni-jena.de {yg, trevor}@eecs.berkeley.edu

Abstract

Most recent CNN architectures use average pooling as a final feature encoding step. In the field of fine-grained recognition, however, recent global representations like bilinear pooling offer improved performance. In this paper, we generalize average and bilinear pooling to “α-pooling”, allowing for learning the pooling strategy during training. In addition, we present a novel way to visualize decisions made by these approaches. We identify parts of training images having the highest influence on the prediction of a given test image. This allows for justifying decisions to users and also for analyzing the influence of semantic parts. For example, we can show that the higher-capacity VGG16 model focuses much more on the bird’s head than, e.g., the lower-capacity VGG-M model when recognizing fine-grained bird categories. Both contributions allow us to analyze the difference when moving between average and bilinear pooling. In addition, experiments show that our generalized approach can outperform both across a variety of standard datasets.

1. Introduction

Deep architectures are characterized by interleaved convolution layers to compute intermediate features and pooling layers to aggregate information. Inspired by recent results in fine-grained recognition [19, 10] showing that certain pooling strategies offer performance equivalent to classic models involving explicit correspondence, we investigate here a new pooling layer generalization for deep neural networks suitable both for fine-grained and more generic recognition tasks.
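To make the idea of a pooling layer that moves between average and bilinear pooling more concrete, the following NumPy sketch may help. Average pooling and bilinear pooling are written in their standard forms. The `alpha_pooling` function uses one possible parameterization that is assumed here purely for illustration and is not given in the text above: a signed power of the features with exponent α − 1, multiplied as an outer product with the features. All function names are hypothetical, and the sketch assumes strictly positive local features so that the α = 1 case reduces cleanly to average pooling.

```python
import numpy as np


def average_pooling(features):
    """Mean over n local descriptors; features has shape (n, d), result (d,)."""
    return features.mean(axis=0)


def bilinear_pooling(features):
    """Mean of outer products y_i y_i^T, flattened to shape (d * d,)."""
    n = features.shape[0]
    return (features.T @ features / n).ravel()


def alpha_pooling(features, alpha):
    """Assumed interpolation between the two poolings (illustrative only).

    Computes the mean of outer products (sign(y_i) * |y_i|^(alpha - 1)) y_i^T.
    With alpha = 2 the left factor is y_i itself, giving bilinear pooling.
    With alpha = 1 and strictly positive features the left factor is a vector
    of ones, so every row of the (d, d) result is the average-pooled vector.
    """
    n = features.shape[0]
    left = np.sign(features) * np.abs(features) ** (alpha - 1.0)
    return (left.T @ features / n).ravel()


# Small demonstration on random, strictly positive local features.
rng = np.random.default_rng(0)
local_features = rng.random((5, 3)) + 0.1  # n = 5 locations, d = 3 channels

pooled_avg = average_pooling(local_features)
pooled_bilinear = bilinear_pooling(local_features)
pooled_between = alpha_pooling(local_features, alpha=1.5)
```

In a trainable layer, `alpha` would be a learnable scalar rather than a fixed argument, which is what lets the pooling strategy adapt during training.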

Fine-grained recognition developed from a niche re-

search field into a popular topic with numerous applications,

ranging from automated monitoring of animal species [9]

to fine-grained recognition of cloth types [8]. The defin-

ing property of fine-grained recognition is that all possi-

ble object categories share a similar object structure and

hence similar object parts. Since the objects do not sig-

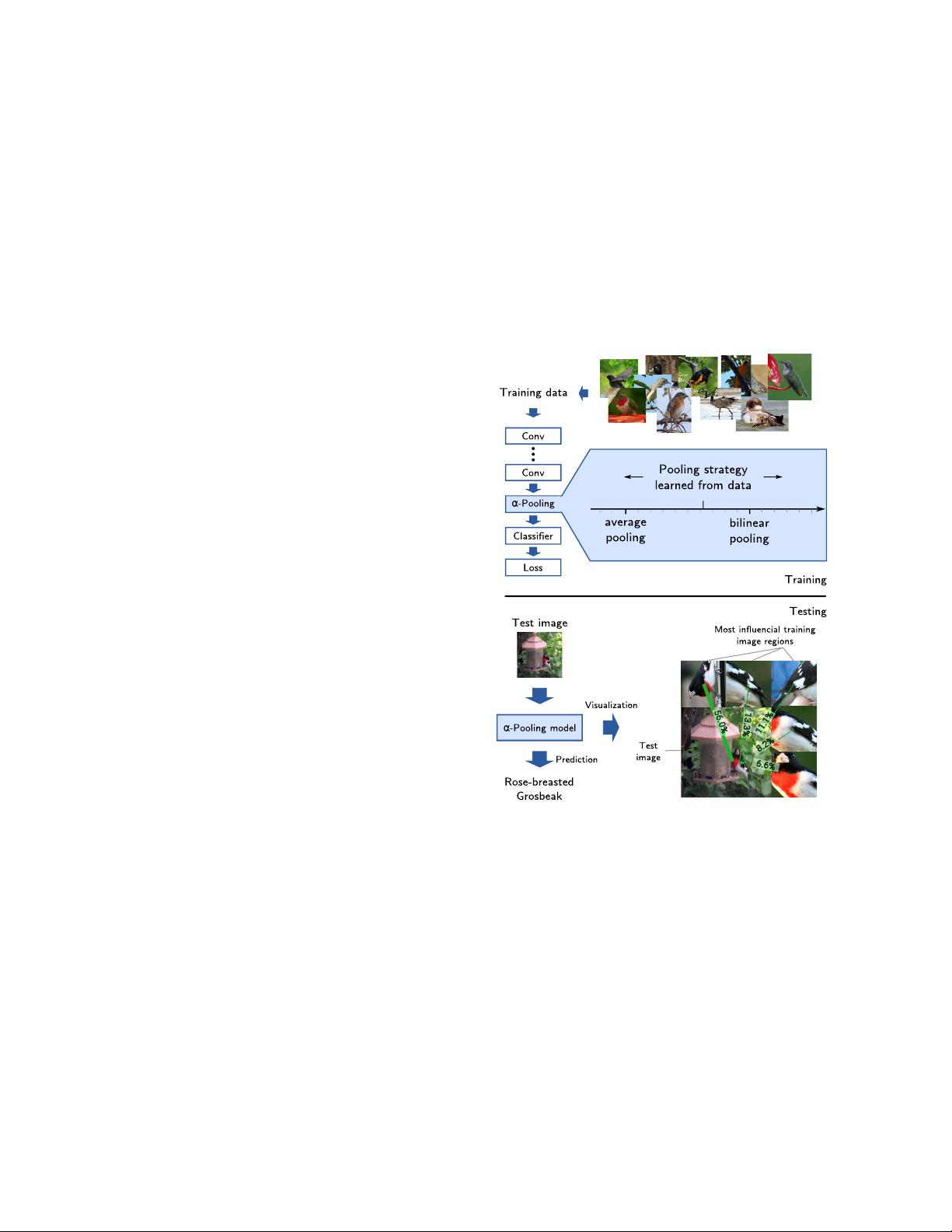

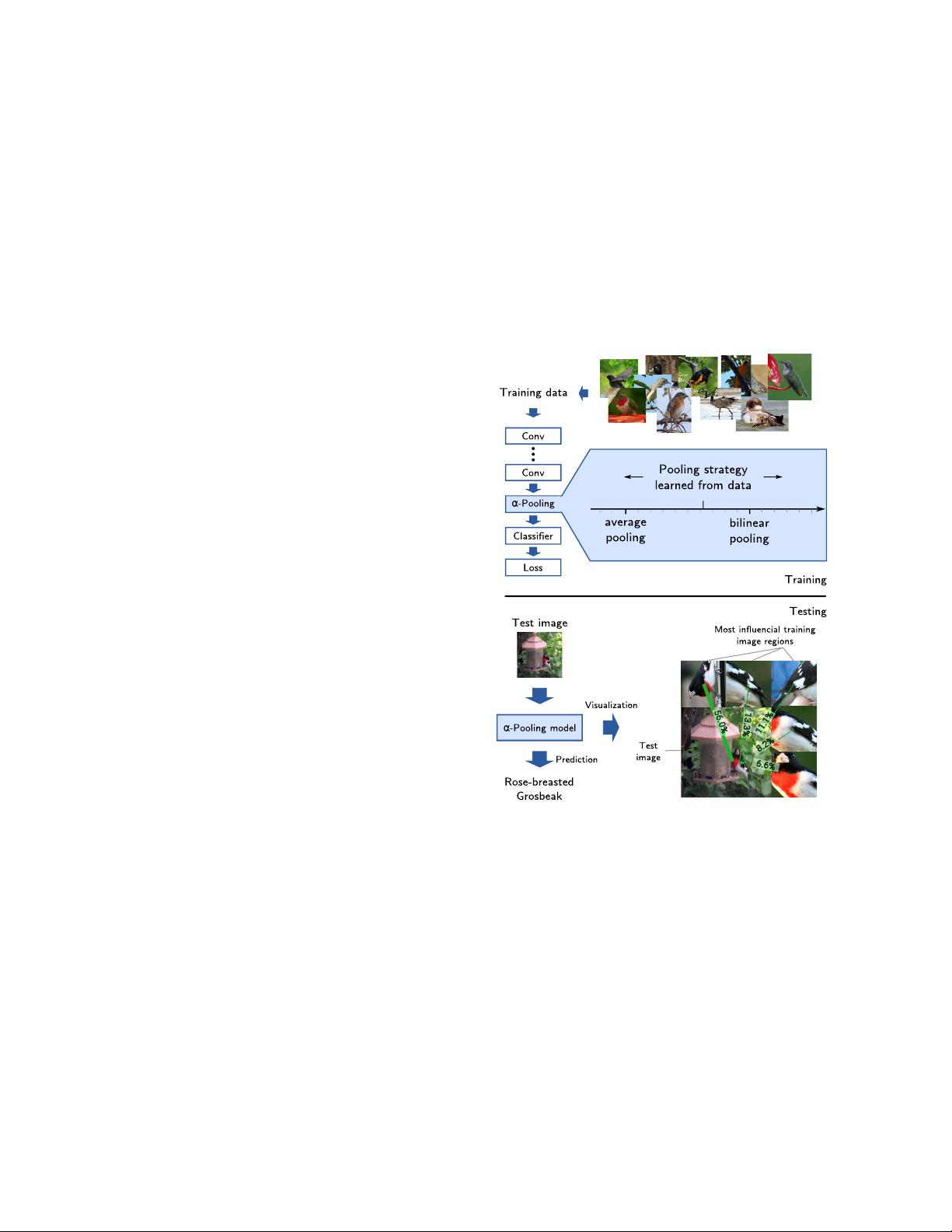

Figure 1. We present the novel pooling strategy α-pooling, which

replaces the final average pooling or bilinear pooling layer in

CNNs. It allows for a smooth combination of average and bilinear

pooling techniques. The optimal pooling strategy can be learned

during training to optimally adapt to the properties of the task. In

addition, we present a novel way to visualize predictions of α-

pooling-based classification decisions. It allows in particular for

analyzing incorrect classification decisions, which is an important

addition to all widely used orderless pooling strategies.

nificantly differ in the overall shape, subtle differences in

the appearance of an object part can likely make the differ-

ence between two classes. For example, one of the most

popular fine-grained tasks is bird species recognition. All

birds have the basic body structure with beak, head, throat,

belly, wings as well as tail parts, and two species might dif-


arXiv:1705.00487v3 [cs.CV] 20 Jul 2017