CCNet: Criss-Cross Attention for Semantic Segmentation

Zilong Huang¹∗, Xinggang Wang¹†, Lichao Huang², Chang Huang², Yunchao Wei³,⁴, Wenyu Liu¹

¹ School of EIC, Huazhong University of Science and Technology

² Horizon Robotics

³ ReLER, UTS

⁴ Beckman Institute, University of Illinois at Urbana-Champaign

Abstract

Full-image dependencies provide useful contextual information to benefit visual understanding problems. In this work, we propose a Criss-Cross Network (CCNet) for obtaining such contextual information in a more effective and efficient way. Concretely, for each pixel, a novel criss-cross attention module in CCNet harvests the contextual information of all the pixels on its criss-cross path. By taking a further recurrent operation, each pixel can finally capture the full-image dependencies from all pixels. Overall, CCNet has the following merits: 1) GPU memory friendly. Compared with the non-local block, the proposed recurrent criss-cross attention module requires 11× less GPU memory. 2) High computational efficiency. The recurrent criss-cross attention reduces FLOPs by about 85% compared with the non-local block when computing full-image dependencies. 3) State-of-the-art performance. We conduct extensive experiments on popular semantic segmentation benchmarks, including Cityscapes and ADE20K, and on the instance segmentation benchmark COCO. In particular, our CCNet achieves mIoU scores of 81.4 and 45.22 on the Cityscapes test set and the ADE20K validation set, respectively, which are new state-of-the-art results. The source code is available at https://github.com/speedinghzl/CCNet.

1. Introduction

Semantic segmentation, which is a fundamental problem

in the computer vision community, aims at assigning se-

mantic class labels to each pixel in the given image. It has

been extensively and actively studied in many recent works

and is also critical for various challenging and meaningful

applications such as autonomous driving [

14], augmented

reality [

1], and image editing [13]. Specifically, current

state-of-the-art semantic segmentation approaches based on

∗

The work was mainly done during an internship at Horizon Robotics

†

Corresponding author.

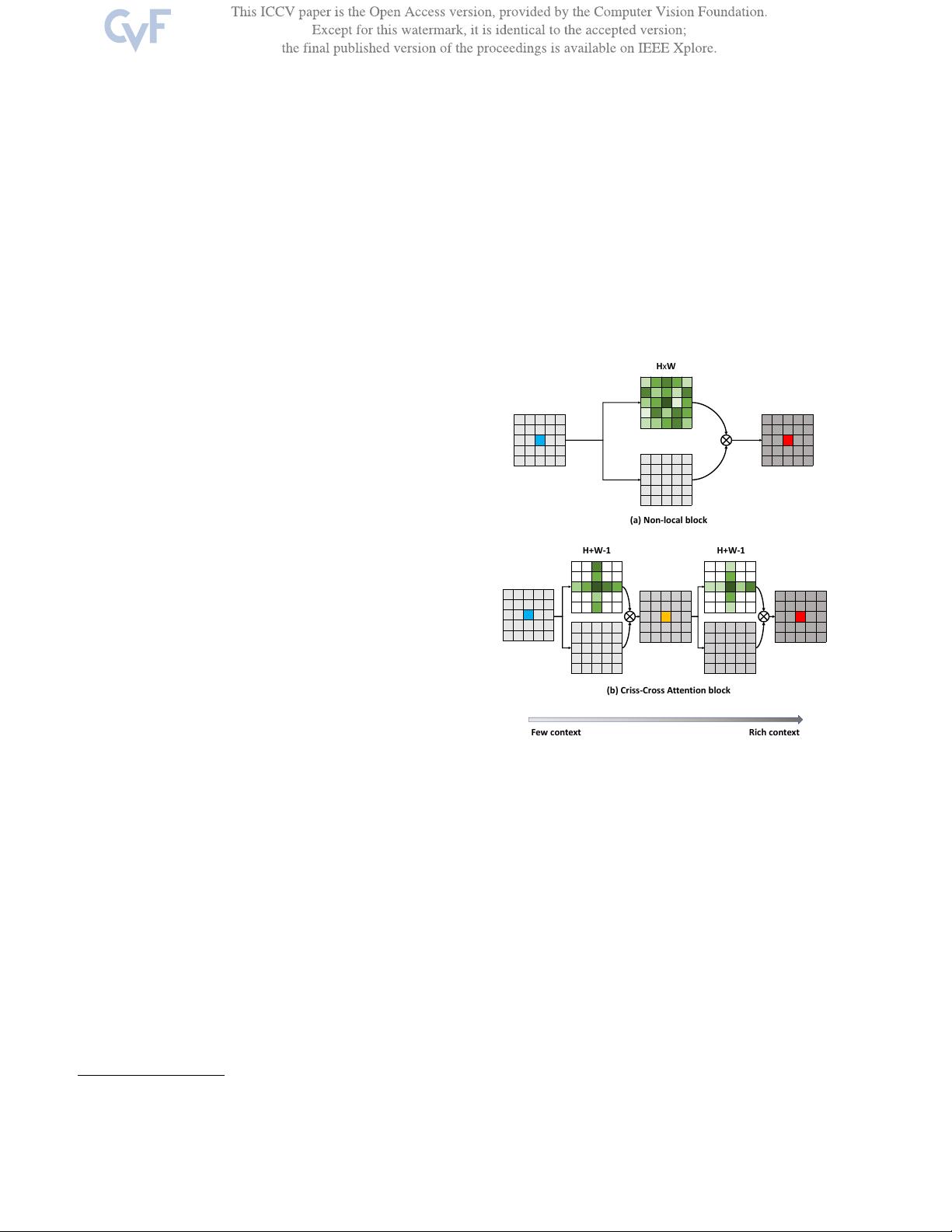

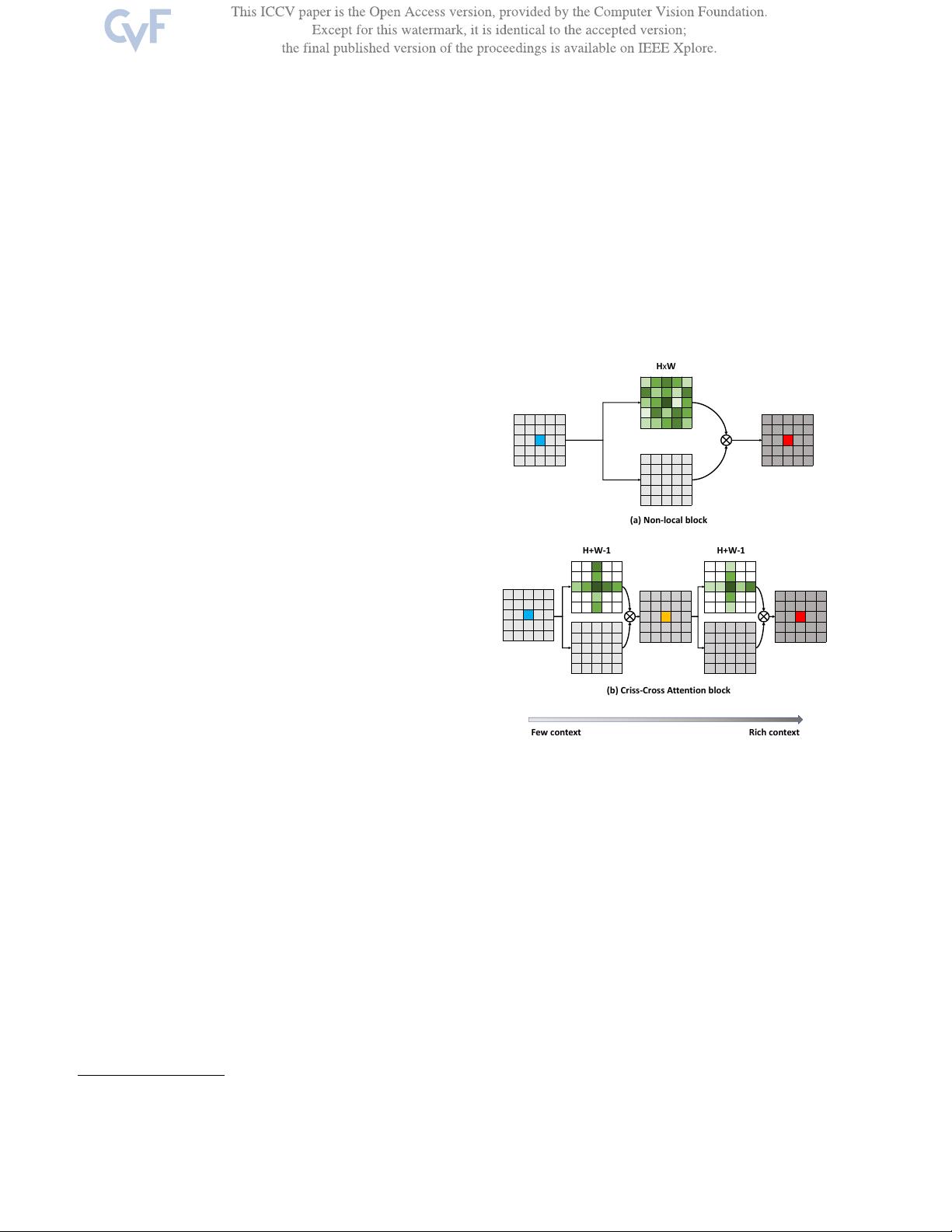

(a) Non-local block

(b) Criss-Cross Attention block

H+W-1

Rich context

Few context

H+W-1

HxW

Figure 1. Diagrams of two attention-based context aggregation

methods. (a) For each position (e.g. blue), the Non-local module

[

31] generates a dense attention map which has H × W weights

(in green). (b) For each position (e.g. blue), the criss-cross at-

tention module generates a sparse attention map which only has

H + W − 1 weights. After the recurrent operation, each position

(e.g. red) in the final output feature maps can collect information

from all pixels. For clear display, residual connections are ignored.

the fully convolutional network (FCN) [

26] have made re-

markable progress. However, due to the fixed geomet-

ric structures, they are inherently limited to local receptive

fields and short-range contextual information. These limita-

tions impose a great adverse effect on FCN-based methods

due to insufficient contextual information.
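The weight counts given in the Figure 1 caption can be checked with a small connectivity sketch. The pure-Python snippet below is an illustration, not the authors' implementation: it models only which positions can exchange information, not the attention weights themselves. The helper names `criss_cross_neighbors` and `reachable_after` and the 5 × 7 grid are assumptions made for this example.

```python
def criss_cross_neighbors(i, j, height, width):
    """Positions in the same row or column as (i, j), including (i, j) itself."""
    row = {(i, c) for c in range(width)}
    col = {(r, j) for r in range(height)}
    return row | col


def reachable_after(i, j, height, width, loops):
    """Positions whose information can reach (i, j) after `loops` criss-cross passes."""
    reached = {(i, j)}
    for _ in range(loops):
        # Each pass lets every already-reached position pull in its own row and column.
        reached = set().union(
            *(criss_cross_neighbors(r, c, height, width) for r, c in reached)
        )
    return reached


# Hypothetical feature-map size chosen only for illustration.
H, W = 5, 7
one_pass = reachable_after(2, 3, H, W, loops=1)
two_pass = reachable_after(2, 3, H, W, loops=2)

print(len(one_pass), H + W - 1)  # size of the sparse criss-cross path
print(len(two_pass), H * W)      # coverage after the second (recurrent) pass
```

On this grid, one pass reaches H + W − 1 positions, and a second pass reaches all H × W positions, because every pixel shares a column with some pixel in the query's row.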

To address this deficiency of FCNs, several works have introduced useful contextual information to benefit semantic segmentation. Specifically, Chen et al. [5] proposed atrous spatial pyramid pool-
