2168-6750 (c) 2016 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TETC.2016.2633228, IEEE

Transactions on Emerging Topics in Computing


Ambusaidi et al. [33] proposed a mutual-information-based IDS that selects the optimal features for classification using a feature selection algorithm. Their approach was evaluated on three benchmark datasets (KDD Cup 99, NSL-KDD and Kyoto 2006+).

Intrusion detection systems have also been used for managing security risks in industrial control systems [14]. For example, Pan et al. [34] proposed a systematic and automated approach to building a hybrid IDS that learns temporal state-based specifications for electric power systems in order to accurately differentiate between disturbances, normal control operations, and cyber-attacks. Zhou et al. [35] presented an industrial anomaly-based, multi-model-driven IDS that uses a Hidden Markov Model to filter attacks from actual faults.

Security issues can be a barrier to the widespread adoption of IoT devices [36]. Whitmore et al. [37] showed that a wide range of techniques could mitigate cyber threats targeting IoT systems. Ning et al. [38] proposed a hierarchical authentication architecture to provide anonymous data transmission in IoT networks. Cao et al. [39] highlighted the impact and importance of ghost attacks on ZigBee-based IoT devices. Chen et al. [40] proposed an autonomic model-driven cyber security management approach for IoT systems, which can be used to estimate, detect, and respond to cyberattacks with little or no human intervention. Teixeira et al. [41] proposed a scheme for thwarting insider attacks in IoT networks by crosschecking the data transformation of every IoT node.

III. PROPOSED TDTC MODEL

The proposed model comprises a dimension reduction module and a classification module, discussed in Sections III.A and III.B, respectively.

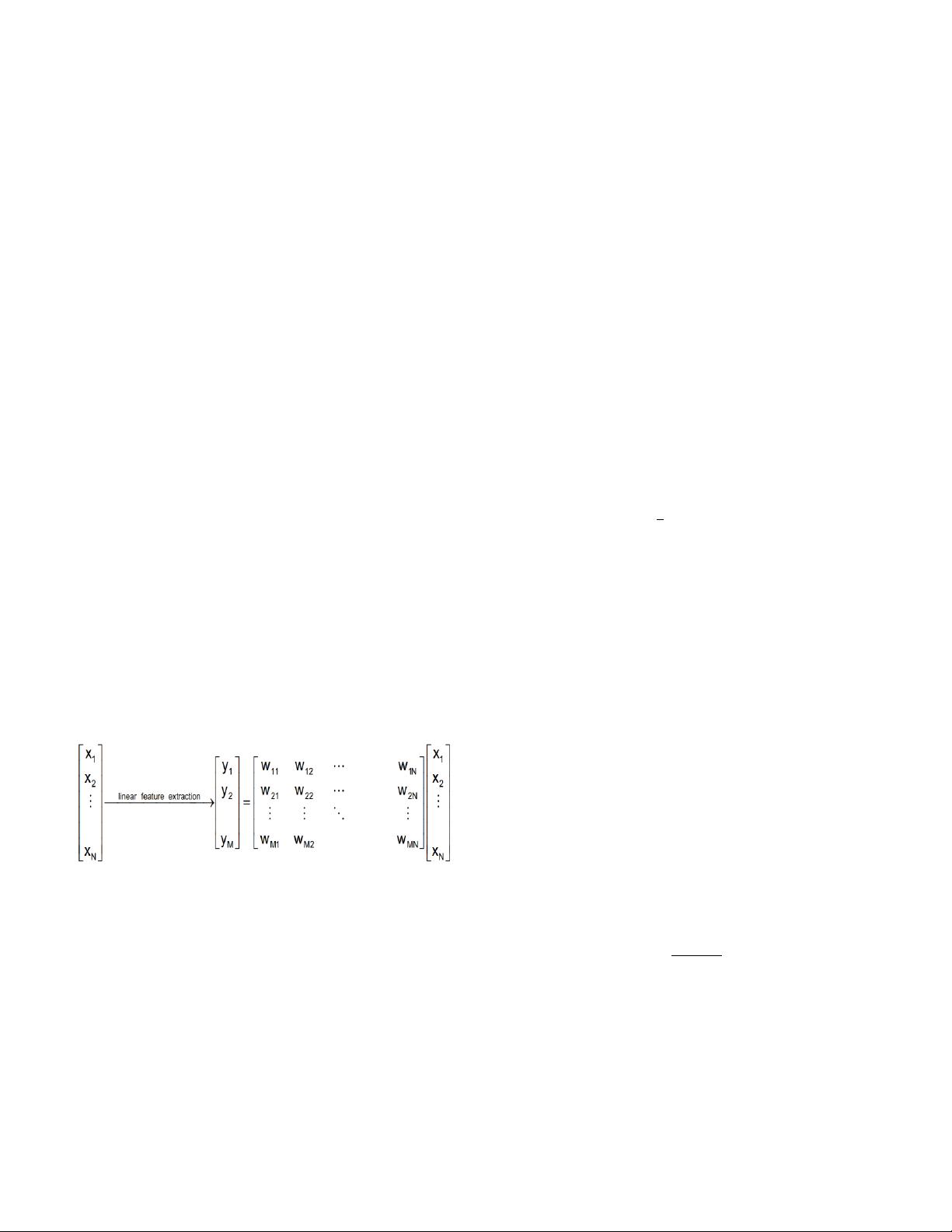

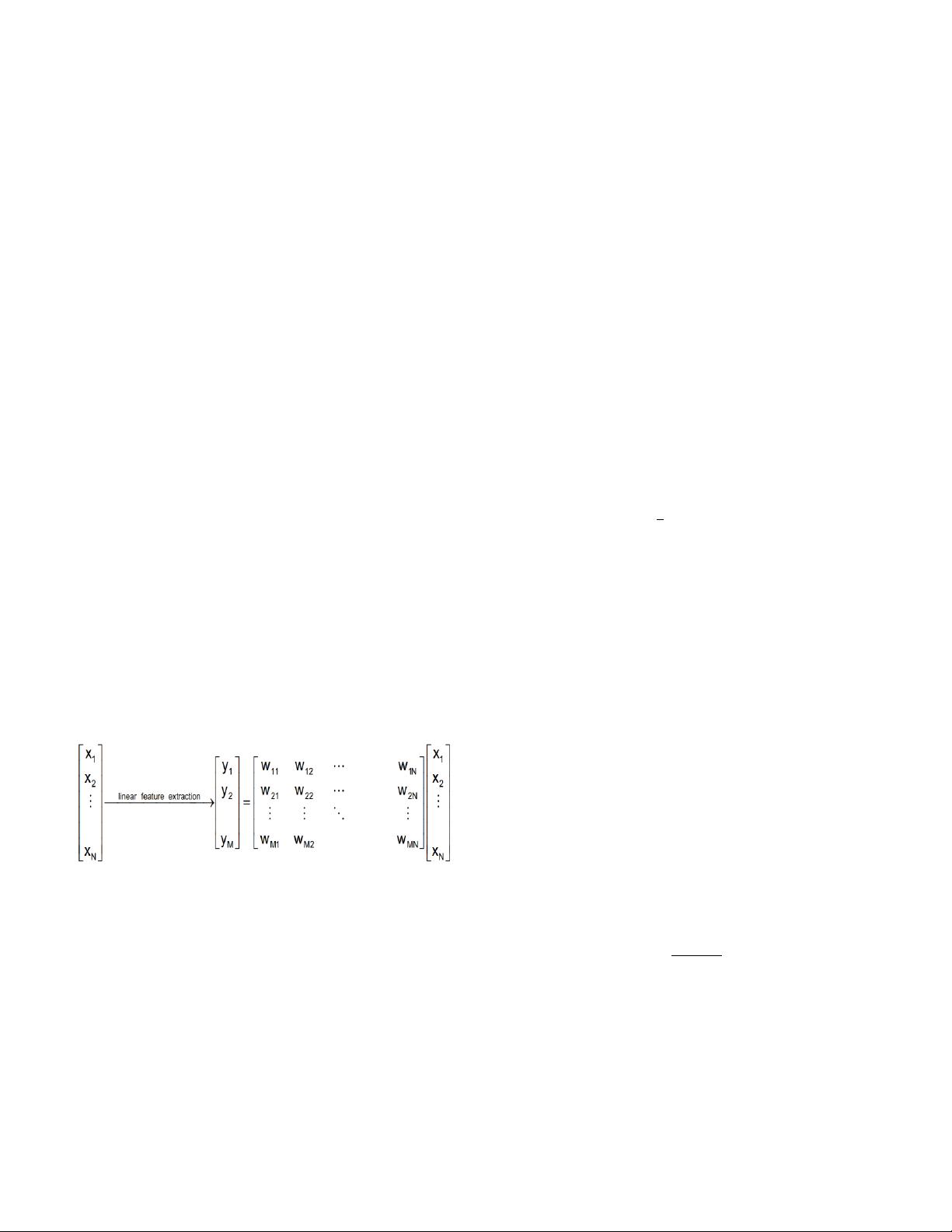

Fig. 2. In PCA, a linear transformation is used to reduce a high-dimensional dataset to a low-dimensional dataset.

A. Dimension Reduction Module

The dimension reduction module addresses the limitations caused by high dimensionality, which may lead to wrong decisions while increasing the computational complexity of the classifier. We deployed both Linear Discriminant Analysis (LDA), a supervised dimension reduction technique, and Principal Component Analysis (PCA), an unsupervised dimension reduction technique, to address the high dimensionality issue. PCA can be used to perform feature selection and feature extraction [42]:

a) Feature selection: choose a subset of the existing features based on how effectively they improve classification (i.e. choose the more informative features).

b) Feature extraction: create a new, smaller set of features by combining existing features.
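The difference between the two options can be illustrated with a minimal sketch. The function names, the toy rows, and the weights below are hypothetical and chosen only for illustration; they are not taken from the TDTC implementation.

```python
def select_features(rows, keep):
    """Feature selection: keep a subset of the original columns unchanged."""
    return [[row[i] for i in keep] for row in rows]


def extract_features(rows, weights):
    """Feature extraction: each new feature is a linear combination
    of the original features (one weight vector per new feature)."""
    return [[sum(w * x for w, x in zip(ws, row)) for ws in weights]
            for row in rows]


# Toy data: two samples with three original features (illustrative values).
rows = [[1.0, 2.0, 3.0],
        [4.0, 5.0, 6.0]]

# Selection keeps original features 0 and 2 as they are.
selected = select_features(rows, keep=[0, 2])

# Extraction builds two new features: the average of features 0 and 1,
# and a copy of feature 2.
extracted = extract_features(rows, weights=[[0.5, 0.5, 0.0],
                                            [0.0, 0.0, 1.0]])
```

Both calls reduce three features to two, but only extraction produces values that do not appear in the original columns, which is the sense in which PCA acts as a feature extraction mechanism.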

In TDTC, we used PCA as a feature extraction mechanism to map the NSL-KDD dataset, which consists of 41 features, to a lower-dimensional feature space by removing less significant features. Feature extraction techniques are commonly limited to linear transforms, as shown in Figure 2.

Let X be an N-dimensional random vector in the original dataset, and let the new feature space have M dimensions (M is the number of features in the transformed dataset), where M < N. The transformation requires computing Eq. 1 to Eq. 3:

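Covariance matrix:

$$C_X = E\left[(X - m)(X - m)^{T}\right] \quad \text{(Eq. 1)}$$

where m (the mean vector) is:

$$m = E[X] \quad \text{(Eq. 2)}$$

Eigenvector-eigenvalue decomposition:

$$C_X v = \lambda v \quad \text{(Eq. 3)}$$

where $v$ is an eigenvector and $\lambda$ is the corresponding eigenvalue.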

PCA then sorts the eigenvectors in descending order of their eigenvalues. Eigenvectors with lower eigenvalues carry the least information about the distribution of the data, and these are the eigenvectors we wish to drop. A common approach is to rank the eigenvectors from the highest to the lowest eigenvalue and keep the top M eigenvectors. Similarly, in TDTC, one may decide which eigenvalues are more useful; the feature mapping matrix is then formed from the retained eigenvectors and used for the linear transformation of the training and test datasets.
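The procedure above (covariance matrix, eigen-decomposition, ranking, projection) can be sketched as follows. This is a minimal illustration under stated assumptions, not the TDTC implementation: the expectations are replaced by sample averages, a dependency-free power iteration with deflation is swapped in for a library eigen-solver, and the toy 3-feature data stands in for the 41-feature NSL-KDD records. All function names are hypothetical.

```python
import math
import random


def mean_vector(rows):
    """Sample estimate of the mean vector m."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def covariance_matrix(rows, mean):
    """Sample estimate of the covariance matrix of the centered data."""
    n, d = len(rows), len(mean)
    centered = [[x - mu for x, mu in zip(row, mean)] for row in rows]
    return [[sum(r[i] * r[j] for r in centered) / n for j in range(d)]
            for i in range(d)]


def mat_vec(a, v):
    """Matrix-vector product for list-of-lists matrices."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in a]


def top_eigenpairs(cov, k, iters=500, seed=0):
    """Return k (eigenvalue, eigenvector) pairs, largest eigenvalue first,
    using power iteration with deflation (assumed stand-in solver)."""
    rng = random.Random(seed)
    a = [row[:] for row in cov]
    d = len(a)
    pairs = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            w = mat_vec(a, v)
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-15:  # nothing left to extract
                break
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue estimate for v.
        lam = sum(v_i * w_i for v_i, w_i in zip(v, mat_vec(a, v)))
        pairs.append((lam, v))
        # Deflation: remove the component that was just found.
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(d)]
             for i in range(d)]
    return pairs


def project(rows, mean, components):
    """Linear transformation: center each row, then project it onto
    the retained eigenvectors."""
    return [[sum((x - mu) * c for x, mu, c in zip(row, mean, comp))
             for comp in components] for row in rows]


# Toy data with N = 3 original features, reduced to M = 2 (made-up values).
train = [[2.0, 1.9, 0.1], [4.0, 4.1, -0.1], [6.0, 6.2, 0.0],
         [8.0, 7.8, 0.1], [10.0, 10.0, -0.1]]
test = [[5.0, 5.0, 0.0]]

m = mean_vector(train)
cov = covariance_matrix(train, m)
pairs = top_eigenpairs(cov, k=2)
components = [vec for _, vec in pairs]       # feature mapping matrix (rows)
train_reduced = project(train, m, components)
test_reduced = project(test, m, components)  # reuses the training mean
```

Note that the test data is transformed with the mean and eigenvectors estimated from the training data only, so that the same mapping matrix is applied to both sets.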

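At this layer of dimension reduction, the Imbedded Error Function (IEF) factor analysis measure [43] is used to select the principal components [44], as shown in Eq. 4, where l denotes the number of Principal Components (PCs) used to represent the data and m denotes the number of dimensions. N and $\lambda_j$ denote the number of samples and the eigenvalues, respectively. Note that m and N here differ from the mean vector m and the dimension N used in Eq. 1 to Eq. 3.

$$IEF(l) = \left[\frac{l \sum_{j=l+1}^{m} \lambda_j}{N\,m\,(m - l)}\right]^{1/2} \quad \text{(Eq. 4)}$$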
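As a rough illustration of how such a criterion can be evaluated, the sketch below assumes that Eq. 4 takes the standard imbedded-error form, IEF(l) = sqrt(l * (sum of the eigenvalues after the l-th) / (N * m * (m - l))), and that the number of PCs is chosen where this value is smallest. Both points are assumptions, as are the function names and the made-up eigenvalues.

```python
import math


def imbedded_error(eigenvalues, n_samples, l):
    """Assumed form of Eq. 4 for l retained PCs.

    eigenvalues must be sorted in descending order; m is their count.
    """
    m = len(eigenvalues)
    if not 1 <= l < m:
        raise ValueError("l must satisfy 1 <= l < m")
    residual = sum(eigenvalues[l:])  # eigenvalues l+1 .. m
    return math.sqrt(l * residual / (n_samples * m * (m - l)))


def select_num_components(eigenvalues, n_samples):
    """Return the l with the smallest IEF value, plus all scores."""
    lam = sorted(eigenvalues, reverse=True)
    scores = {l: imbedded_error(lam, n_samples, l)
              for l in range(1, len(lam))}
    best_l = min(scores, key=scores.get)
    return best_l, scores


# Made-up spectrum: two dominant eigenvalues followed by a flat noise floor.
eigenvalues = [5.0, 3.0, 0.1, 0.1, 0.1]
best_l, scores = select_num_components(eigenvalues, n_samples=100)
```

With a spectrum of this shape the score falls while dominant components are still being added and rises once only the noise floor remains, so the minimum marks the number of PCs to keep under these assumptions.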

Cross Validation (CV) is used to find the optimum number of principal components with minimum error, as shown in Figure 3. Applying the selection criteria removes some features and helps the next layer of the dimension reduction module to compute a lower-dimensional matrix and spreadable objects.