
Bin 1: 13, 15, 16 Bin 2: 16, 19, 20 Bin 3: 20, 21, 22

Bin 4: 22, 25, 25 Bin 5: 25, 25, 30 Bin 6: 33, 33, 35

Bin 7: 35, 35, 35 Bin 8: 36, 40, 45 Bin 9: 46, 52, 70

• Step 3: Calculate the arithmetic mean of each bin.

• Step 4: Replace each of the values in each bin by the arithmetic mean calculated for the bin.

Bin 1: 14 2/3, 14 2/3, 14 2/3 Bin 2: 18 1/3, 18 1/3, 18 1/3 Bin 3: 21, 21, 21

Bin 4: 24, 24, 24 Bin 5: 26 2/3, 26 2/3, 26 2/3 Bin 6: 33 2/3, 33 2/3, 33 2/3

Bin 7: 35, 35, 35 Bin 8: 40 1/3, 40 1/3, 40 1/3 Bin 9: 56, 56, 56
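Steps 3 and 4 can be checked with a short script. The following is a minimal sketch, assuming the sorted age values listed in the bins above and a bin depth of 3; the function name `smooth_by_bin_means` is illustrative and not part of the exercise. Exact fractions are used so that means such as 44/3 (that is, 14 2/3) are not rounded.

```python
from fractions import Fraction

# Sorted age values, as listed in the bins above.
ages = [13, 15, 16, 16, 19, 20, 20, 21, 22, 22, 25, 25, 25, 25, 30,
        33, 33, 35, 35, 35, 35, 36, 40, 45, 46, 52, 70]


def smooth_by_bin_means(sorted_values, depth):
    """Split sorted values into equal-depth bins and replace each value by its bin mean."""
    smoothed = []
    for start in range(0, len(sorted_values), depth):
        bin_values = sorted_values[start:start + depth]
        bin_mean = Fraction(sum(bin_values), len(bin_values))  # exact arithmetic mean
        smoothed.extend([bin_mean] * len(bin_values))
    return smoothed


smoothed_ages = smooth_by_bin_means(ages, depth=3)

# Print one smoothed value per bin as a decimal for a quick visual check.
for i in range(0, len(smoothed_ages), 3):
    print(f"Bin {i // 3 + 1}: {float(smoothed_ages[i]):.2f}")
```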

(b) How might you determine outliers in the data?

Outliers in the data may be detected by clustering, where similar values are organized into groups, or
“clusters”. Values that fall outside of the set of clusters may be considered outliers. Alternatively, a
combination of computer and human inspection can be used: the computer compares the values against a
predetermined data distribution and flags those that deviate from it as possible outliers. These possible
outliers can then be verified by human inspection with much less effort than would be required to verify
the entire initial data set.
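The computer-assisted screening step could be sketched as follows. This is only an illustration under stated assumptions: the answer does not name a distribution or a threshold, so the sketch assumes a roughly normal distribution and a cutoff of three standard deviations from the mean. The function name `flag_possible_outliers` is hypothetical. The values are the ages listed in the bins above.

```python
from statistics import mean, pstdev

# Age values, as listed in the bins above.
ages = [13, 15, 16, 16, 19, 20, 20, 21, 22, 22, 25, 25, 25, 25, 30,
        33, 33, 35, 35, 35, 35, 36, 40, 45, 46, 52, 70]


def flag_possible_outliers(values, z_cutoff=3.0):
    """Return values lying more than z_cutoff standard deviations from the mean.

    Assumption: the data are roughly normal; the cutoff of 3 is a convention,
    not something the exercise prescribes.
    """
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values identical, nothing can be flagged
    return [v for v in values if abs(v - mu) / sigma > z_cutoff]


# Candidates only: each flagged value would still be verified by a person.
candidates = flag_possible_outliers(ages)
print(candidates)
```

Only the flagged candidates need manual review, which is the saving in effort that the answer describes.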

(c) What other methods are there for data smoothing?

Other methods that can be used for data smoothing include alternative forms of binning, such as
smoothing by bin medians or smoothing by bin boundaries. Equal-width bins, where the interval range of
values in each bin is constant, can also be used with any of these forms of binning.
Methods other than binning include regression techniques, which smooth the data by fitting it to a
function, as in linear or multiple regression. Classification techniques can be used to implement
concept hierarchies that smooth the data by rolling up lower-level concepts to higher-level
concepts.
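The two alternative binning methods can be illustrated on the same age data. The sketch below assumes equal-depth bins of depth 3, as in part (a). The helper names are illustrative, and the tie-breaking rule for a value equidistant from both boundaries (it goes to the lower boundary) is an assumption, since the answer does not specify one.

```python
from statistics import median

# Sorted age values, as listed in the bins of part (a).
ages = [13, 15, 16, 16, 19, 20, 20, 21, 22, 22, 25, 25, 25, 25, 30,
        33, 33, 35, 35, 35, 35, 36, 40, 45, 46, 52, 70]


def equal_depth_bins(sorted_values, depth):
    """Partition sorted values into consecutive bins of the given depth."""
    return [sorted_values[i:i + depth] for i in range(0, len(sorted_values), depth)]


def smooth_by_bin_medians(bins):
    """Replace every value in a bin by the bin median."""
    return [[median(b)] * len(b) for b in bins]


def smooth_by_bin_boundaries(bins):
    """Replace every value in a bin by the closer of the bin's min and max."""
    smoothed = []
    for b in bins:
        low, high = b[0], b[-1]  # bins are sorted, so the ends are the boundaries
        # Assumption: a value equidistant from both boundaries goes to the lower one.
        smoothed.append([low if v - low <= high - v else high for v in b])
    return smoothed


bins = equal_depth_bins(ages, depth=3)
by_medians = smooth_by_bin_medians(bins)
by_boundaries = smooth_by_bin_boundaries(bins)

print(by_medians[0], by_boundaries[0])
```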

2.8. Discuss issues to consider during data integration.

Answer:

Data integration involves combining data from multiple sources into a coherent data store. Issues that must

be considered during such integration include:

• Schema integration: The metadata from the different data sources must be integrated in order to

match up equivalent real-world entities. This is referred to as the entity identification problem.

• Handling redundant data: Derived attributes may be redundant, and inconsistent attribute naming

may also lead to redundancies in the resulting data set. Duplications at the tuple level may occur and

thus need to be detected and resolved.

• Detection and resolution of data value conflicts: Differences in representation, scaling, or encoding
may cause attribute values for the same real-world entity to differ across the data sources being integrated.
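The three issues above can be made concrete with a toy example. Everything in the sketch below is hypothetical: the two sources, their attribute names (`cust_id`, `customer_number`, and so on), the records, and the choice to keep the first occurrence of a duplicate. It maps both schemas onto one, converts pounds to kilograms to resolve a scaling conflict, and removes tuple-level duplicates by entity key.

```python
# Hypothetical records from two sources; every name and value here is illustrative.
source_a = [
    {"cust_id": 1, "name": "Ann Lee", "weight_kg": 60.0},
    {"cust_id": 2, "name": "Bob Roy", "weight_kg": 82.5},
]
source_b = [
    {"customer_number": 2, "full_name": "Bob Roy", "weight_lb": 181.9},
    {"customer_number": 3, "full_name": "Cy Diaz", "weight_lb": 150.0},
]

LB_PER_KG = 2.20462  # pounds per kilogram


def from_source_a(rec):
    """Schema integration: map source A's attribute names onto the unified schema."""
    return {"customer_id": rec["cust_id"], "name": rec["name"],
            "weight_kg": rec["weight_kg"]}


def from_source_b(rec):
    """Schema integration plus value-conflict resolution (pounds to kilograms)."""
    return {"customer_id": rec["customer_number"], "name": rec["full_name"],
            "weight_kg": round(rec["weight_lb"] / LB_PER_KG, 1)}


def integrate(records_a, records_b):
    """Merge both sources, keeping the first record seen for each customer_id."""
    merged = {}
    unified = [from_source_a(r) for r in records_a] + [from_source_b(r) for r in records_b]
    for rec in unified:
        merged.setdefault(rec["customer_id"], rec)  # drops tuple-level duplicates
    return [merged[key] for key in sorted(merged)]


integrated = integrate(source_a, source_b)
for row in integrated:
    print(row)
```

Real integration tasks rarely have a shared key this clean; matching entities usually relies on metadata about each attribute, as the schema integration item notes.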

2.9. Suppose a hospital tested the age and body fat data for 18 randomly selected adults with the following
results:

age    23    23    27    27    39    41    47    49    50

%fat   9.5   26.5  7.8   17.8  31.4  25.9  27.4  27.2  31.2

age    52    54    54    56    57    58    58    60    61

%fat   34.6  42.5  28.8  33.4  30.2  34.1  32.9  41.2  35.7

(a) Calculate the mean, median and standard deviation of age and %fat.

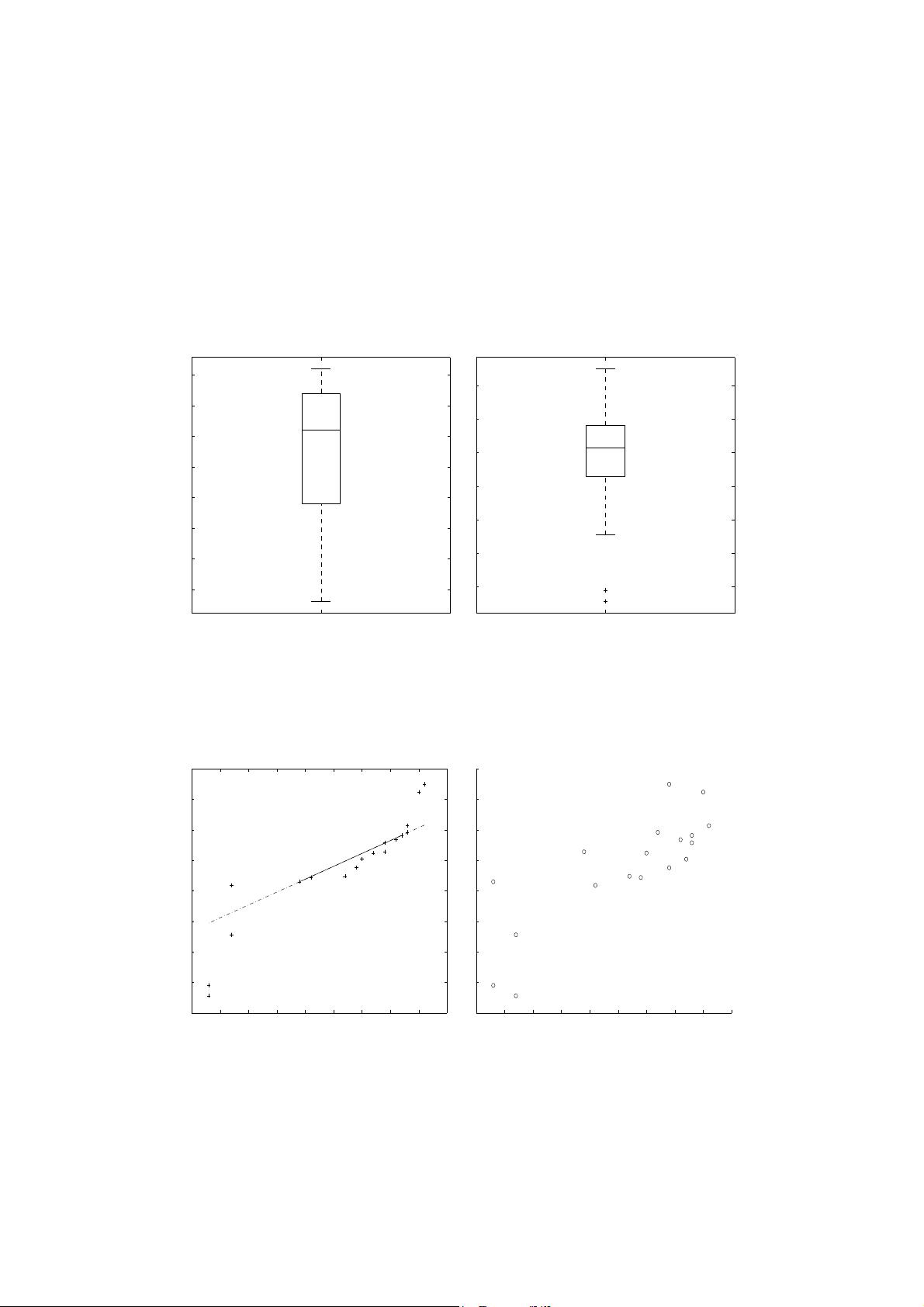
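These statistics can be reproduced with Python's standard library. The sketch below uses the 18 (age, %fat) pairs from the table in the exercise. It assumes the population form of the standard deviation (dividing by n); the sample form (dividing by n - 1) would give slightly larger values. The helper name `summarize` is illustrative.

```python
from statistics import mean, median, pstdev

# The 18 observations from the exercise, in the order given.
age = [23, 23, 27, 27, 39, 41, 47, 49, 50,
       52, 54, 54, 56, 57, 58, 58, 60, 61]
fat = [9.5, 26.5, 7.8, 17.8, 31.4, 25.9, 27.4, 27.2, 31.2,
       34.6, 42.5, 28.8, 33.4, 30.2, 34.1, 32.9, 41.2, 35.7]


def summarize(values):
    """Return the mean, median, and population standard deviation of values."""
    return {"mean": mean(values), "median": median(values), "std": pstdev(values)}


summary = {"age": summarize(age), "%fat": summarize(fat)}

for name, stats in summary.items():
    print(name, {key: round(value, 2) for key, value in stats.items()})
```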

(b) Draw the boxplots for age and %fat.

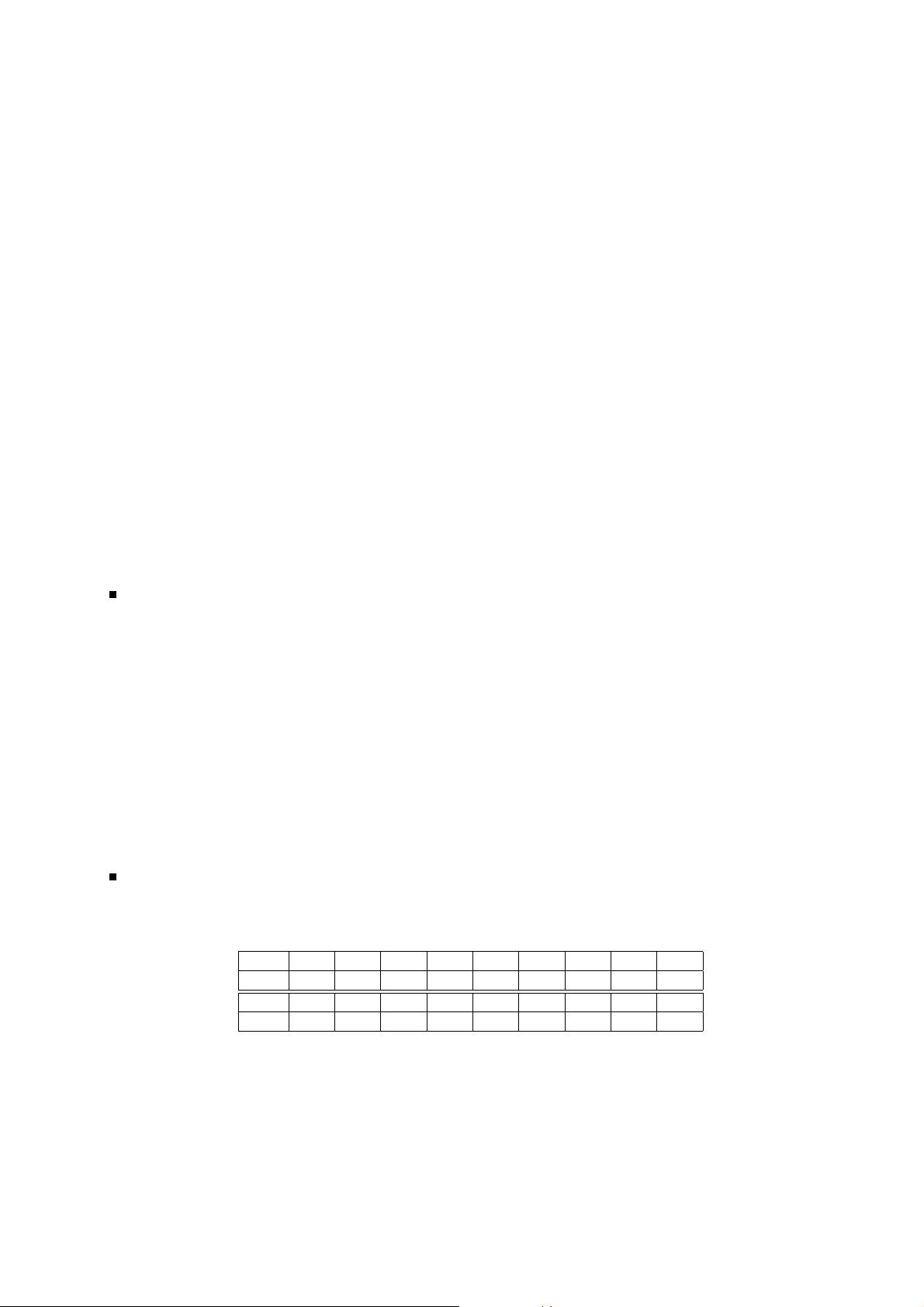
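A boxplot is built from the five-number summary (minimum, first quartile, median, third quartile, maximum), which can be computed as in the sketch below. Quartile conventions differ between textbooks and tools; the `"inclusive"` method of `statistics.quantiles` is an assumption made here, so the quartiles may differ slightly from those in the figure. The function name `five_number_summary` is illustrative.

```python
from statistics import quantiles

# The 18 observations from the exercise, in the order given.
age = [23, 23, 27, 27, 39, 41, 47, 49, 50,
       52, 54, 54, 56, 57, 58, 58, 60, 61]
fat = [9.5, 26.5, 7.8, 17.8, 31.4, 25.9, 27.4, 27.2, 31.2,
       34.6, 42.5, 28.8, 33.4, 30.2, 34.1, 32.9, 41.2, 35.7]


def five_number_summary(values):
    """Return (min, Q1, median, Q3, max) for the given values.

    Assumption: quartiles use the 'inclusive' interpolation method; other
    conventions give slightly different Q1 and Q3.
    """
    q1, q2, q3 = quantiles(values, n=4, method="inclusive")
    return min(values), q1, q2, q3, max(values)


age_summary = five_number_summary(age)
fat_summary = five_number_summary(fat)

print("age :", age_summary)
print("%fat:", fat_summary)
```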

(c) Draw a scatter plot and a q-q plot based on these two variables.